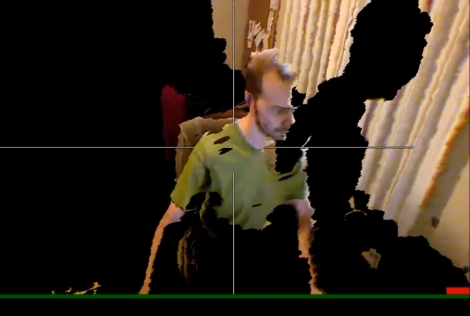

[Oliver Kreylos] is using an Xbox Kinect to render 3D environments from real-time video. In other words, he takes the video feed from the Kinect and runs it through some C++ software he wrote to map the pixels into a 3D space that can be manipulated as it plays back. The image above is the result of the Kinect recording video while looking at [Oliver] from his right side. He’s moved the viewer’s playback perspective to be above and in front of him. Part of his body is missing and there is a black shadow because the camera cannot see these areas from its position. This is very similar to the real-time 3D scanning we’ve seen in the past, but the hardware and software combination makes this a snap to reproduce. Get the source code from his page linked at the top and don’t miss his demo video after the break.
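For a rough idea of what placing depth pixels in a 3D space involves, here is a minimal Python sketch. It is not [Oliver]'s C++ code. It assumes a simple pinhole camera model; the intrinsics `fx`, `fy`, `cx`, `cy` and both function names are hypothetical, and a real Kinect would need calibrated values plus a conversion from raw depth readings to metres.

```python
import math

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of metres, 0 = no reading) to 3D points.

    fx, fy, cx, cy are assumed pinhole intrinsics, not real Kinect values.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no reading here: this is where the "shadow" comes from
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

def rotate_y(points, angle):
    """Rotate points about the vertical axis to view them from another side."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]

# Tiny 2x2 "depth image": two pixels have readings, two do not.
cloud = depth_to_points([[0.0, 2.0], [1.0, 0.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
side_view = rotate_y(cloud, math.pi / 2)
```

Pixels without a depth reading produce no point at all, which is why the rotated view has holes wherever the camera could not see.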

[youtube=http://www.youtube.com/watch?v=7QrnwoO1-8A]

[Thanks Peter]

@EquinoXe

They could see each other. In fact, nothing blocks the beams from confusing both of them. Instead, I suggest using the driver; try aligning both of them so their fields of view do not have intersecting IR planes.

Wait a second, take the Kinect’s depth sensor and fit it on cars, voilà: instant crash-proof car from the future.

@garak

http://www.wired.com/images_blogs/gadgetlab/2010/11/Canesta-howitworks1.jpg

Read it. The Kinect doesn’t use dots, and I think my previous statement is incorrect, as it uses a plane of infrared, so this intersection might not be a big problem.

@Mr Hacker

That image turned out to be a bit of a lie/simplification. It actually does use infrared dots, and it does not use ‘time of flight’, as that image suggests. To get an idea what the dots look like, have a look at http://www.dailyvsvidz.com/2010/11/kinect-infrared-projection-dots-vs.html

@All

– Bandpass filters on the diode and detector would theoretically work, but would SEVERELY cut down on the signal-to-noise ratio. You have to select the filters very carefully (the set with the widest non-overlapping bandwidth possible, above visible, but less than the cutoff of the (presumably) silicon detector), then crunch the math on the power loss and possibly find a substantially more powerful diode.

– Gating the signal with a shutter might work, provided the SNR was high enough and the software didn’t tweak out with the dots disappearing at the duty cycle of the shutter. (e.g. some automatic camera exposure algorithm, or the location processing algorithm, etc.)

– Polarization would NOT work. The majority of reflected light would not maintain polarization.

@yeah

– There are no coherence issues here that I can think of (temporal or spatial). AFAIK this system works with incoherent light. Furthermore, gating the signal does not change the temporal coherence properties of the source.

IMHO, the bandpass filters are your best bet for multiplexing multiple units. However, it requires careful selection of the filters, and possibly a more powerful source.

Woah… Looks like I’m gonna get one… as soon as the rush clears and prices become reasonable…

the next step i would take is just get it to remember the sides of objects it can’t see anymore.

Could one simple, supercheap option for more complete 3D scanning be using a mirror? You would probably need some matrix algebra magic to do the necessary transformations for the 3D scene seen through the mirror, but it could be possible if you can take the hit from decreased accuracy. Also, the reflected dots from the mirror may interfere with the front-side scanning.
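The "matrix algebra magic" for the mirror idea boils down to reflecting points across the mirror plane. Here is a minimal Python sketch, assuming you already know a point on the mirror and its normal (finding those by calibration is the hard part and is not shown). The function name and parameters are hypothetical.

```python
import math

def reflect_across_plane(point, plane_point, normal):
    """Reflect a 3D point across the plane through plane_point with the given normal.

    Points the sensor sees "inside" the mirror are virtual; reflecting them
    across the mirror plane gives their real-world positions.
    """
    length = math.sqrt(sum(c * c for c in normal))
    n = tuple(c / length for c in normal)  # unit normal
    # Signed distance from the point to the plane.
    d = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    return tuple(p - 2.0 * d * c for p, c in zip(point, n))

# Mirror standing in the plane x = 1; a virtual point seen at x = 3 maps to x = -1.
real_point = reflect_across_plane((3.0, 4.0, 5.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0))
```

Reflecting twice returns the original point, which makes a handy sanity check for a calibrated mirror plane.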

So about that Kinect IR, that’s a laser, right? You could not possibly get that dot pattern over a large area from an LED, could you? I’m not sure, but it just makes no sense to me for it to be an LED, even though there are some pretty powerful IR LEDs, given that pattern, the surface it covers, and the seeming uniformity of intensity (in the YouTube videos I saw).

Oh and the dots remain the same size as I recall? A non-laser source would have them be larger farther back would it not? And out of focus.

If more Kinects are used, an actor’s body could be projected into a 3D environment (into a video game, for example). If that’s combined with a 3D helmet…

@fdsfdsf This is about hacking it, this is hackaday, this isn’t about microsoft’s plans, although if they made a multi-kinect setup people could hack that too I guess.

If you want to talk about the kinect as used on the xbox, here’s a fun link:

http://www.engadget.com/2010/11/15/microsoft-exec-caught-in-privacy-snafu-says-kinect-might-tailor/

I imagine some geometry could be extrapolated from earlier frames. Especially for things that don’t move, like the background, it should be doable. That way you wouldn’t have to solve the problem of using multiple Kinects, and you would at least reduce the shadowing a bit, depending on the footage. (3D scanning a room by moving the Kinect?)
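The simplest version of remembering earlier frames can be sketched in a few lines of Python. This assumes a fixed camera and a static background, which is a big simplification: a moving Kinect would also need its pose tracked. The function name and the convention that 0 means "no reading" are assumptions for illustration.

```python
def fill_from_memory(memory, frame):
    """Fill holes in a depth frame with the last valid reading at each pixel.

    memory and frame are lists of rows; 0 means "no reading".
    memory is updated in place with every valid reading in frame.
    """
    filled = []
    for r, row in enumerate(frame):
        out_row = []
        for c, z in enumerate(row):
            if z > 0:
                memory[r][c] = z  # remember what we can see now
            out_row.append(memory[r][c])  # fall back to what we saw before
        filled.append(out_row)
    return filled

# One row, two pixels: the second frame has lost sight of the first pixel.
memory = [[0.0, 0.0]]
first = fill_from_memory(memory, [[1.5, 0.0]])
second = fill_from_memory(memory, [[0.0, 2.0]])
```

Anything that moves would leave a stale "ghost" behind with this approach, so it only really suits the background.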

Also different colors for the dots (multiple kinect setup) and a color filter for the sensors would be nice. I don’t know how well that works in non visible light (are there even filters for 3 different colors in non visible light? Are these in the used spectrum?)

The possibilities of this technology seem to be endless.

impressive!!!!

The most obvious and immediate application of this technology would be to fix webcams.

Because the camera usually sits on top of the screen, and you are presumably looking at the middle of the screen where the picture is.

This has the unintended consequence of making people look down all the time when they’re actually trying to look into your eyes. It’s like they’re peeking at your boobs all the time.

If you’d automatically shift the camera to the point where the user appears to be looking, video conferencing would feel much more natural.

Well, the more kinects the better. You’d probably want at least four (in a pyramid shape) but 6 would be easier to set up (like walls of a cube) and would give better picture output.

I want a kinect now :)

I’ve read somewhere that the IR cameras only work at 30 fps. A bit low, IMHO. But perhaps it is easier to time-divide if you can hack it a bit and synchronize. And only if the chip doesn’t freak out.
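To put a number on the time-division idea: if the units take turns frame by frame, each one only gets a share of the 30 fps quoted above. A minimal Python sketch, assuming the projectors can actually be switched and synchronized per frame (nothing here confirms the hardware allows that); the function name is hypothetical.

```python
def tdm_schedule(num_units, sensor_fps=30.0, num_slots=6):
    """Round-robin frame slots between units and report each unit's frame rate."""
    slots = [i % num_units for i in range(num_slots)]  # which unit owns each frame
    return slots, sensor_fps / num_units

# Two Kinects taking alternate frames.
slots, fps_per_unit = tdm_schedule(2)
```

With two units that leaves 15 fps each, and with four only 7.5 fps, so the low base frame rate hurts quickly.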

I would love to see how accurate this is. A 3d scanner with code to interface into solidworks and make solid models would be AMAZING. Maybe one camera waved around at different angles and compared to previous versions of the same item.

Faro arms are expensive :P

I don’t think the resolution of the IR camera is good enough for a detailed real-time 3D scanner that can compare to what the DAVID laser scanner can do as far as fine detail goes. I think you are going to need to mod a high-res camera to see the Kinect’s IR light pattern to get better detail in the scans.

What if you simply placed two Kinects on either side of the room facing directly at each other? The pattern from one would be in the shadow of the pattern from the other. A dot from one might land directly on the other’s sensor, but if the pattern stays consistent you’d only need to adjust the positions slightly. You’d have a black vertical band around everything, but it would still be twice as good as with one.

You know this could be really good for video games.

(I know it is, but they have been doing so many cool things it makes you forget)

This might be a great brainwave or it could be flawed, so please criticise and share your view of the concept I suggest… Anyway, the device projects a “grid” of IR dots over its field of view and uses them for the measurement. Therefore we are thinking that having more than one will cause the individual intersecting grids to confuse the sensors, right? Could we sync both grids into one uniform grid? From this uniform grid, each camera could take its individual view of the grid, and the views could then be pieced together to render the 3D environment.

Solving the IR interference problem is easy. Just set up 4 of these and modify 3 of them to put off UV, x-ray and gamma radiation instead of IR.

Oh wait… you want to scan people? Hmm.

Red, Blue, Yellow dots + IR?

This + 3d printer + work = printable action figures of yourself

Has anyone made an object that can be imported into 3d software such as 3ds max?

Thanks!

CJ

Has anyone noted that Mr Kreylos has a web page of interesting projects?

Anyway, I noted that he got two Kinect units working together. Scroll to the bottom of the linked page to see more: http://idav.ucdavis.edu/~okreylos/ResDev/Kinect/index.html

– Robot