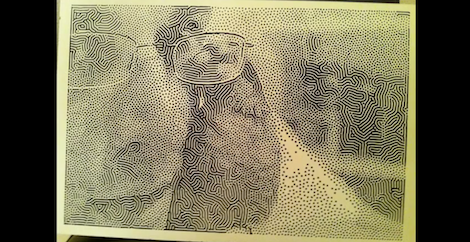

[Jason] was messing around with CNC machines and came up with his own halftone CNC picture that might be an improvement over previous attempts we’ve seen.

[Jason] was inspired by this Hack a Day post that converted an image to a halftone, much like the default Photoshop plugin or the Rasterbator. The results were very nice, but once a user on the JoesCNC forum asked how he could make these ‘Mirage’ CNC picture panels, [Jason] knew what he had to do.

He immediately recognized the algorithm that generated the Mirage panels as based on the Gray-Scott reaction-diffusion model. With this algorithm, dark areas look a little like fingerprints, meaning the toolhead of the CNC router can cut along the X and Y axes instead of plunging the simple hole pattern of a traditional halftone. After a little bit of coding, [Jason] had an app that converts an image to a reaction-diffusion halftone, which can then be converted to vectors and sent to a router.
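
For readers curious what a Gray-Scott simulation looks like in code, here is a minimal pure-Python sketch. It is not [Jason]'s implementation (his source isn't public). The function names, the diffusion rates, the kill rate, and especially the idea of steering the feed rate by pixel brightness in `feed_map` are all assumptions made for illustration.

```python
def laplacian(grid, x, y):
    """Five-point Laplacian with wrap-around edges."""
    h, w = len(grid), len(grid[0])
    return (grid[y][(x - 1) % w] + grid[y][(x + 1) % w]
            + grid[(y - 1) % h][x] + grid[(y + 1) % h][x]
            - 4.0 * grid[y][x])


def feed_map(image, f_dark=0.030, f_light=0.050):
    """Map brightness (0.0 = dark, 1.0 = light) to a per-pixel feed rate.

    Assumption: both the range and the linear mapping are illustrative
    guesses, not values taken from the Reactor program.
    """
    return [[f_dark + (f_light - f_dark) * p for p in row] for row in image]


def step(u, v, feed, kill=0.062, du=0.16, dv=0.08, dt=1.0):
    """Advance the Gray-Scott system by one explicit Euler step."""
    h, w = len(u), len(u[0])
    new_u = [[0.0] * w for _ in range(h)]
    new_v = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            uvv = u[y][x] * v[y][x] ** 2  # reaction term: U + 2V -> 3V
            f = feed[y][x]
            new_u[y][x] = u[y][x] + dt * (
                du * laplacian(u, x, y) - uvv + f * (1.0 - u[y][x]))
            new_v[y][x] = v[y][x] + dt * (
                dv * laplacian(v, x, y) + uvv - (f + kill) * v[y][x])
    return new_u, new_v


def simulate(image, steps=200):
    """Run the simulation over a grayscale image given as rows of 0.0..1.0."""
    h, w = len(image), len(image[0])
    u = [[1.0] * w for _ in range(h)]
    v = [[0.0] * w for _ in range(h)]
    # Seed a small square of V in the middle so the reaction has a start.
    for y in range(h // 2 - 1, h // 2 + 2):
        for x in range(w // 2 - 1, w // 2 + 2):
            u[y][x], v[y][x] = 0.5, 0.25
    feed = feed_map(image)
    for _ in range(steps):
        u, v = step(u, v, feed)
    return u, v
```

The `u` grid returned by `simulate` could be rendered as a grayscale picture. Getting a pleasing pattern generally takes thousands of steps and some experimentation with the feed and kill rates, so treat the defaults here as a starting point only.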

It’s a very neat build and we imagine that [Jason]’s pictures would cost a bit less than the commercial panels. Check out the video after the break to see the fabrication process.

[youtube=http://www.youtube.com/watch?v=xoJDTPRqI6o&w=470]

Very cool stuff. Any idea if the author has a website or code that he might share?

Check out JasonDorie.com (or just click the first link in the text to go directly to the CNC page). The source isn’t public, but the executable is. The vectorization and conversion to CNC paths was done by commercial software, but I’m hoping to eventually do that part too.

Thanks for the update Jason (that was me who posted on your youtube link as well). Excellent work.

http://jasondorie.com/page_cnc.html

cooler than the cnc itself is the reactor software, its like a virtual virus that makes images!

http://imgur.com/mBrgp

proof that the software is AWESOME

If you scale up the source image (just use Paint) to around 800 or 900 pixels, Reactor will produce an output to match – The patterning will be more dense, and give you better shading. :)

My cnc machine is almost done…This might be the first test

Man, I’m struggling with inkscape to vectorize this.

Really nice.

One of the issues I have adapting traditional halftone designs to my medium is that I basically have a fixed-size brush (like a CNC without fine Z-axis control). The algorithm you show looks like it would make reasonable-quality reproductions possible while accommodating my tool restriction. Does it use constant-width lines, or could it be modified to use constant-width lines and maintain the effect?

Matt – It sounds like you might be looking for either stippling (which I’m going to add to the Halftone program at some point) or error-diffused sampling, which is the more general term. The Reaction program doesn’t use fixed-width dots. They’re close, but there’s a decent amount of subtlety in the output shading that translates into varying dot sizes, and it’s kind of important.

Check out this link for an example of stippling: http://cs.nyu.edu/~ajsecord/stipples.html

Feel free to email me directly if you have questions – My email is listed at the top of the CNC page on my site.

That said, I use a commercial program to compute the tracing paths, and it can be told to use a fixed-width tool. As long as the tool is small enough to fit the smallest dot it can be made to draw the biggest ones by making multiple passes. Would that work for you?
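
As a rough illustration of the multiple-pass idea, the sketch below lists the tool-centre radii a fixed-width tool could follow to clear one round dot. This is one simple concentric strategy, not how the commercial program plans its paths. The function name `pass_radii` and the `overlap` parameter are hypothetical.

```python
def pass_radii(dot_diameter, tool_diameter, overlap=0.5):
    """Return tool-centre radii, outermost first, for clearing a round dot.

    Assumption: concentric circular passes, each overlapping the previous
    one by the given fraction of the tool width, then a plunge at the centre.
    """
    if tool_diameter > dot_diameter:
        raise ValueError("tool is wider than the dot")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step = tool_diameter * (1.0 - overlap)
    radii = []
    r = (dot_diameter - tool_diameter) / 2.0  # tool edge touches dot edge
    while r > 0.0:
        radii.append(r)
        r -= step
    radii.append(0.0)  # final plunge at the centre of the dot
    return radii
```

A dot the same width as the tool yields a single plunge, while larger dots pick up extra circular passes.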

Very nice program, too bad it’s not open source. I would love to see how you made various things :) ( Mainly the pixel data to sample point intensity calculation and “dark boost”-feature )

I have looked at your DXF Halftone 2.1 program and wanted to know if it can be altered to render a JPEG image in 3 different drill sizes at a spacing of precisely 12.5 mm.

If you look in his Eyes you see a demonstration of the uncanny valley effect.

but great stuff nonetheless

Hi,

Can you explain the procedure from image to G-code a bit? :) How do you vectorize the image to a decent quality? I’m particularly curious about that.

I take the image, scale it up 4x original size using a bicubic filter. The bicubic filter maintains the round shape of the blobs, and upscaling it gives the tracer more data to work with. V1.2 of the program has a “Save Huge” button that does this step for you. From there I use a grayscale tracer with the threshold set to 50%.
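
The upscale-then-threshold step can be sketched in pure Python as below. This is only an approximation of the workflow described: it uses a Catmull-Rom cubic, which is one common bicubic kernel, and an image editor's "bicubic" filter may use a different one. The function names are hypothetical, and the image is assumed to be rows of brightness values from 0.0 to 1.0.

```python
import math


def cubic(t, p0, p1, p2, p3):
    """Catmull-Rom interpolation between p1 and p2 for t in [0, 1]."""
    return p1 + 0.5 * t * (
        (p2 - p0)
        + t * ((2 * p0 - 5 * p1 + 4 * p2 - p3)
               + t * (3 * (p1 - p2) + p3 - p0)))


def sample(values, pos):
    """Cubic sample of a 1-D sequence at a fractional position, clamping edges."""
    i = math.floor(pos)
    n = len(values)
    p = [values[min(max(i + k, 0), n - 1)] for k in (-1, 0, 1, 2)]
    return cubic(pos - i, *p)


def upscale(image, factor=4):
    """Separable bicubic upscale: resample every row, then every column."""
    h, w = len(image), len(image[0])
    wide = [[sample(row, (x + 0.5) / factor - 0.5) for x in range(w * factor)]
            for row in image]
    tall = [[sample(col, (y + 0.5) / factor - 0.5) for y in range(h * factor)]
            for col in zip(*wide)]
    return [list(row) for row in zip(*tall)]  # transpose back to rows


def threshold(image, level=0.5):
    """Return 1 where brightness is at or above the level, else 0."""
    return [[1 if p >= level else 0 for p in row] for row in image]
```

Calling `threshold(upscale(image))` gives a black-and-white bitmap at four times the original size, which is the kind of input a tracer can turn into outlines.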

props for the update, how about a load button in 1.3?

also, although it probably says on your site, do you use Inkscape or something else to vectorize the rendered images?

InkScape will easily do it (I’ve tried it), but I use Vectric’s VCarve, as that’s the tool that computes the cutting paths for me.

Lol, Inkscape seems to crash when I attempt the Trace Bitmap with “Grays”. Probably too much data.

Does anyone have a tip for free or semi-free software, with settings? Or for Inkscape?

@Jason: I’ve googled it (http://www.vectric.com/WebSite/Vectric/products/download_products.htm) but I’m wondering which of these you use:)

You could skip the last two stages of painting then peeling off the sticky-back plastic and it would still look good.

What seems to be the right size for this? I’ve noticed you have to play around a bit with the settings to get it to a decent resolution

That depends – Are you working with the Halftone program or the Reactor program? The Halftone one will let you specify the dot size and work area size independently. I generally make prints about 18″ to 24″ across, and use a max dot size of 0.1″. A print of 9″ to 12″ with a max dot size of 0.05″ would produce the same dot pattern, but be physically smaller. The size & resolution you use depends greatly on your intended viewing distance. If you want to look at them from 6 feet away you’ll need a finer dot spacing than if you’re intending to view them from 20 feet.
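
The arithmetic behind those numbers can be checked with a short sketch. It assumes the dot spacing equals the maximum dot size, which the Halftone program may not enforce, and both function names are hypothetical.

```python
import math


def dots_across(print_width, dot_pitch):
    """Number of dot positions across the print (same unit for both)."""
    return round(print_width / dot_pitch)


def dot_arcminutes(dot_size, viewing_distance):
    """Apparent size of one dot in arcminutes (same unit for both)."""
    angle = 2.0 * math.atan(dot_size / (2.0 * viewing_distance))
    return math.degrees(angle) * 60.0


# Halving both the print width and the dot size keeps the dot count the same.
print(dots_across(18, 0.1), dots_across(9, 0.05))

# The same 0.1" dot looks much smaller from 20 feet than from 6 feet.
print(dot_arcminutes(0.1, 6 * 12), dot_arcminutes(0.1, 20 * 12))
```

The second comparison is why a print meant for close viewing needs finer dots: the closer the viewer, the larger each dot appears.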

For the Reactor program the resolution is entirely based on the resolution of the input image. I typically use 800 to 1200 pixels across.

I was working with reactor.

Thanks for your response, I was searching by trial and (mostly) error to get my picture to the right resolution.

The only problem I’ve got atm is that I can convert the image with illustrator (or inkscape) to a vector file and save it as a DXF file, but then I need to convert it to code for my cnc router.

I’ve heard artcam can export to gcode.

But my cnc is from the Holzher brand, and I can’t seem to find a program that converts the .Gcode file to .hops.

I’m trying to write my own software to convert G-code to the necessary code, but it is proving to be quite a challenge.

btw nice work on the halftoner 1.1.

I myself got started with metalfusion’s software first, but your adjustable settings which auto preview are just what I was looking for!

I’ve got a question though:

– Where do you set the thickness of the plate you will be using?

– What does point retract do?

Thanks a lot btw, I’m very impressed by halftoner v1.1!

The Halftone program doesn’t have a setting for thickness – The code assumes Z-zero is at the top of the work piece and -Z is into the piece. The code will generate plunges as deep as required to produce the correct dot size with the angle of bit specified.
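
The relationship between dot size, bit angle, and plunge depth follows from simple geometry. The sketch below assumes an ideal V-bit with a sharp point and treats the angle as the full included angle. It is not the Halftone program's own code, and the function name is hypothetical.

```python
import math


def plunge_z(dot_diameter, bit_angle_deg):
    """Z coordinate (negative, into the work) that cuts a dot of this width.

    Assumptions: Z-zero is the top of the work piece, the bit comes to a
    perfect point, and bit_angle_deg is the included angle of the V.
    """
    if not 0.0 < bit_angle_deg < 180.0:
        raise ValueError("bit angle must be between 0 and 180 degrees")
    half_angle = math.radians(bit_angle_deg) / 2.0
    depth = (dot_diameter / 2.0) / math.tan(half_angle)
    return -depth
```

With a 90 degree bit the depth equals the dot radius, and a narrower bit has to plunge deeper to make the same dot, so the material needs to be at least as thick as the deepest plunge.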

Point retract specifies how far to lift the tool between neighboring dots. Assuming that your hold downs will only be on the edges, you can set the normal retract height to clear them. The normal retract height will be used to move to the first point, then point retract will be used between points, leading to shorter cut times.
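
To make the two retract heights concrete, here is a small sketch that emits generic G0/G1 moves for a list of dots. The output format, the final lift back to the normal retract height, and the names `dots_to_gcode`, `retract_z`, and `point_retract_z` are assumptions for illustration, not the Halftone program's actual output.

```python
def dots_to_gcode(dots, retract_z, point_retract_z, feed=30.0):
    """Build G-code lines for dots given as (x, y, z) with z at or below 0.

    The normal retract height is used before travelling to the first dot
    and (as an assumption) after the last one; the shorter point retract
    is used between neighbouring dots.
    """
    lines = []
    for i, (x, y, z) in enumerate(dots):
        if i == 0:
            lines.append(f"G0 Z{retract_z:.4f}")      # clear the hold-downs
        lines.append(f"G0 X{x:.4f} Y{y:.4f}")          # rapid to the dot
        lines.append(f"G1 Z{z:.4f} F{feed:.1f}")       # plunge at feed rate
        is_last = i == len(dots) - 1
        lift = retract_z if is_last else point_retract_z
        lines.append(f"G0 Z{lift:.4f}")                # lift out of the dot
    return lines


for line in dots_to_gcode([(0, 0, -0.05), (0.1, 0, -0.02)], 0.5, 0.05):
    print(line)
```

Because the tool only lifts a short distance between dots, a panel with thousands of dots spends far less time on vertical travel.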

Thanks for your help

Halftoner 1.2 is up – Settings are saved, there’s full support for mm measurements, and there are small help tips for the controls. I also added a “Load Image” button.

I know this is a month out but for vectorizing things potrace is hard to beat. I think it’s what Inkscape uses on the back end, or used to.

I’ll give it a try :)

hi jason,

i’m trying to make a similar image conversion (reactor – like) with gray-scott PDE simulation. yet, somehow my parameters don’t give the nice result your program gives. can you please elaborate on the image preprocessing and the F and k parameters you use?

thanks