We all love the Arduino, but does the Arduino love us back? There used to be a time when the Arduino couldn’t express its deepest emotions, but now that [Nick] hooked up a speech synthesis chip from a Speak & Spell, it can finally whisper sweet robotic nothings to us.

The original 1980s Speak & Spell contained a fabulously high-tech speech synthesizer from Texas Instruments. This innovative chip predated [Stephen Hawking]’s voice and went on to be featured in numerous speech add-ons for 80s microcomputers like the Apple II and BBC Micro, as well as a number of Atari arcade games.

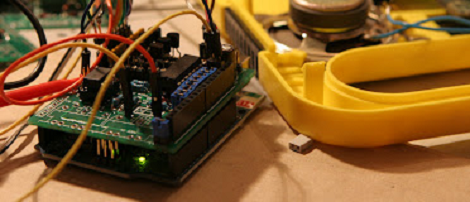

[Nick] has been working on his Speak & Spell project for several months now, and he’s getting around to testing the PCBs he made. By his own admission, connecting an Arduino to a Speak & Spell is a little difficult, but he’s got a few tricks up his sleeve to get around the limitations of the hardware. The final goal of [Nick]’s project is a MIDI-controllable Speak & Spell speech synth for the Arduino. This has been done before, but never from a reverse-engineered Speak & Spell.

You can check out [Nick]’s progress in interfacing the Speak & Spell speech chip after the break. There’s work left to do, but it’s already very impressive.

[soundcloud url="http://soundcloud.com/noizeinabox/test-the-arduino-midi-speak-1"]

I think your Speak And Spell had a stroke

Instructions for interfacing the TI Speak & Spell are available in a 1980s magazine article from Radio Electronics, I believe.

I think Mr. Highly Liquid would be interested to know this has never been done before since he’s been selling kits for years to do just this.

http://highlyliquid.com/kits/midispeak/

Arduino->Phons I believe is what they meant.

Picked up a Speak & Read today for $3.53 at a thrift store…might look into Highly Liquid MIDI

I can highly recommend it. (No pun intended.) I did a Speak and Read and a Speak and Math and both turned out very well.

Goddamn, you guys always get these bargains in the US! Whenever I look in thrift shops in the UK (aka Charity shops) there’s very little in terms of hackable electronics.

On the contrary, Brian, the TMS5100 (LPC synthesizer) and TMS1000 (4-bit driver microcontroller) were actually remarkably underpowered and low-tech for the time. Even in 1978, when the TI LPC chips were released, there were far more aurally pleasing voice synthesizers in various stages of development and completion – see the Votrax PSS and MITalk for two examples, both of which were rapidly superseded by the Prose, Calltext, and DECTalk lines of hardware solutions (the latter two of which, at least, are used by Dr. Hawking). By contrast, the DECTalk that came out in 1984 used a Motorola 68000 for lexical analysis and a TI DSP to run the actual Klatt algorithm – considerably more “high-tech” than the LPC offerings from TI scarcely a few years prior!

The TI line of speech synthesis chips, and the 4-bit microcontrollers developed to drive them in the late 70’s and early 80’s, should be recognized for what they were actually good at: being cheap, and bringing speech synthesis to the masses. The Speak & Spell, Speak & Math and other TI LPC-based devices were not revolutionary because they were particularly good at pronouncing anything; in fact, you can find plenty of people who will vouch for how terrible they were. On the contrary, they were revolutionary because they brought *passable* speech synthesis technology into the commodity price range.

Hey thanks for the heads up on the Votrax :) and the rest is well-said as far as bringing it to the masses. There is something charming about weak lil robots doing their thing and I do still enjoy its garbled squeakiness :) I still save TIs whenever I see them at a thrift. Are there any particular gems (outside of the usual suspects) that I should keep an eye out for?

Thanks :)

Honestly, the best you can do is to grab whatever little guys appear to be microcontroller-driven – even in an epoxy blob – and try to keep them in working order rather than hacking them apart for circuit-bending purposes, then contact the MAME or MESS teams for info on where to send them for dumping. Chips packaged on-board with an epoxy blob instead of a real package, or microcontrollers that have had their security fuses set, are a bit more finicky, but anything that uses standard DIP or SMT ROMs is pretty fair game. It’s only tangentially related, but MESS is actually providing one of the first real slot-based emulations of PC hardware. Whereas VirtualPC, DOSBox and the like try to simulate what the BIOS would do and emulate enough of the hardware to trick the software, MESS actually emulates the physical hardware – including any controller chips. Thus, given enough documentation of any microcontrollers or CPUs used on an ISA card or PCI card, plus ROM images of any on-card data, it’s possible to plug in arbitrary combinations of clones of real physical cards into various ISA slots.

More to the point, as someone who has worked on the MAME and MESS emulator projects in his spare time for about a decade now, it’s a knife through my heart whenever I see a CPU-driven or MCU-driven bit of kit hacked to non-functional pieces for some “artist”‘s noisecore gig setup. The grim reality is that there is a finite number of these oddball bits of hardware that are long since out of production, and each piece of kit destroyed on a circuit-bending musician’s workbench is one less that can possibly be used for research and emulation.

It’s an unfortunate paradox that it’s the sheer alacrity with which musicians seek out this hardware that drives forward the clock of technological doom for the hardware. The MAME / MESS projects currently have a deal with a professional reverse-engineering company to decapsulate and optically or electronically read out the contents of microcontrollers that would otherwise be impossible to read out and therefore emulate and preserve, roughly a few hundred bucks per chip, but often the real problem is not so much funding as finding enough samples of the darn things to burn through in order to learn enough to get a clean ROM read. With hardware this old it’s only a matter of a correct ROM read-out and enough time to digest the inner workings of the hardware and emulate the given architecture in MESS, thus providing sufficient code-based documentation for any enterprising musically-inclined engineer to create a VSTi plugin for his audio software of choice, and have an infinitely-reliable and forever-compatible – and free, aside from the ROM dump – copy of whatever bit of kit for which he would have otherwise had to source – and destroy – a physical specimen to put it into his gear rack. Heck, I seem to recall one of the MAME developers poking at (but not yet succeeding at) emulating the Fairlight CMI synthesizer, and all of its 10 or so Motorola 6809 CPUs, one or two per I/O board – good luck finding ten grand for one of the few remaining working specimens! It’s only a matter of time before it’s massaged enough to start working fully, and I look forward to that day.

If anyone has any oddball computers or workstations that aren’t emulated – or ISA or PCI or other expansion cards – that you can identify as containing a ROM or known microcontroller whose contents can be read out via test mode or a simple ROM-read circuit (digital I/O pins on an Arduino and a couple latches work *great* for this and it’s probably what most of you have), I would highly encourage you, prior to selling or junking it, to get decent board scans and reads of any on-board ROMs, and then get ahold of the MESS team so that it can be added to our emulation framework. One oft-overlooked aspect of preserving these oddball bits of hardware via “proper” emulation is a parallel case – Line of Business applications. VirtualPC and its ilk are great if you’re just bringing forward a standard Windows application, but what about when it relies on some arcane combination of authentic ISA cards, PCI cards, or heaven forbid a dongle connected to some port? With hardware-level emulation, there’s the potential that this functionality, too, can be carried over – but I believe I’m digressing well from the topic of preserving audio hardware!
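For anyone wondering what the "digital I/O pins and a couple of latches" ROM-read loop boils down to, here is a minimal sketch of the logic, simulated in Python rather than written for real hardware. The `FakeRom` class, the two-latch 16-bit address layout, and every function name are assumptions made for illustration; a real board's pinout, address width, and timing will differ.

```python
def split_address(addr):
    """Split a 16-bit address into (high, low) bytes, one per 8-bit latch."""
    if not 0 <= addr <= 0xFFFF:
        raise ValueError("address out of range for two 8-bit latches")
    return (addr >> 8) & 0xFF, addr & 0xFF


class FakeRom:
    """Hypothetical stand-in for a parallel ROM sitting behind two address latches."""

    def __init__(self, data):
        self.data = bytes(data)
        self.high = 0
        self.low = 0

    def latch_high(self, value):
        self.high = value

    def latch_low(self, value):
        self.low = value

    def read_data_bus(self):
        # On real hardware this would be eight digital reads after a short settle time.
        return self.data[(self.high << 8) | self.low]


def dump_rom(rom, size):
    """Walk every address in order and collect the byte seen on the data bus."""
    image = bytearray()
    for addr in range(size):
        high, low = split_address(addr)
        rom.latch_high(high)
        rom.latch_low(low)
        image.append(rom.read_data_bus())
    return bytes(image)


# Made-up ROM contents, only so the loop has something to read.
contents = bytes((i * 7) % 256 for i in range(600))
image = dump_rom(FakeRom(contents), len(contents))
```

Dumping the same chip twice and comparing the two images is a cheap sanity check before sending a read off to anyone.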

Oh, additionally, regarding things to keep an eye out for – if you’re interested in speech synthesis, I can tell you that MESS currently emulates the DECTalk DTC-01 – so yes, you can more or less emulate Stephen Hawking’s voice now.

However, I seem to recall that one of the other boards Dr. Hawking uses is a Calltext 5000-series board, for which no current ROM dumps exist. Good luck finding a hardware specimen to grab the ROMs off of, too, since it’s classified as a medical device and can still cost upwards of a few thou if you’re not paying with insurance. However, we (the MESS team) do have ROM dumps of the Prose 2xx0-series speech synthesizer, which was another popular hardware-based voice synthesizer solution in the early to late 80’s. The current holdup as far as emulation is concerned is that MESS emulates the NEC uPD7725 DSP, as it was the microcontroller used as an accelerator chip on some SNES games such as Pilotwings and Super Mario Kart (remember that article from a few weeks ago about the overclocked SuperFX?), but the Prose 2xx0 uses the NEC uPD7720, which is opcode-compatible as far as assembly syntax goes, but not instruction-decode-compatible, so I still need to get around to wiring up an appropriate instruction decoder to the CPU emulation core and also plumb the additional GPIO lines that the microcontroller had, but after that there should be a second Klatt-based synth in MESS.

Happy hunting!

Wow – a Votrax. I still have an original Votrax, 30 years old and still works! Those were the days!

Uh okay. If I see Stephen Hawking, take his wheelchair. Got it.

Something important that [Nick] should note is that the voice data stored in all of the Speak-n-Spell line is, effectively, a low-bitrate recording of a real person’s voice. If you want to get your old Speak-n-Spell saying arbitrary things in that voice, you’ll have to find every syllable in the English language, spoken by that voice, in that ROM, and extract them from existing words.

This should be a simple matter of getting the speech synth to begin reading the ROM from set locations, which is what the original 4-bit microcontroller in the thing does. And kits from the 80s could do that, but only with whole words that already existed in the Speak-n-Spell’s ROM. I suspect it’s not possible to get only portions of existing words, and here’s why:

You’ll notice from listening to that sample that there is a lot of garbage and glitched sounds. If I understand LPC coding correctly, there is feedback involved in the encoding; thus, you can’t start in the middle of a coded waveform, you must start from the beginning. Without the setup provided by the earlier frames, the later frames provide completely unusable decoding.

This is also probably why you can glitch the sound of a Speak-n-Spell so easily; just touching microcontroller or ROM pins with your finger is enough to disrupt coefficients from a frame or two, and with those frames incorrect, the rest of the waveform falls apart.

Mods, please ignore the Report that I submitted on this comment, the Report button looks too much like the Reply button, still.

tyco, you have LPC more or less correct, but the interesting thing is not so much that it’s a degraded waveform, it’s a *filtered* waveform. Conceptually, imagine that your vocal tract is a pure tone generator – sine wave, for example – that has a set of very complex analog filters attached to it which modify the tone in a parametric way – your vocal tract, your tongue, your lips, your jaw, the shape of your mouth, and so on. In the case of the TI LPC chips used in this case, the TMS5xx0 series, they have a Lattice Filter that can perform various deformations on a base formant waveform, which is recorded in very simple format in ROM. It’s not so much recordings of a person that are stored, as highly-processed recordings of individual phonemes and vocal tract “states”. The TI LPC chips can also take as input the output of an LFSR (Linear Feedback Shift Register) which provides a noise source for plosives (lip-stop-based phonemes such as P, B, etc.) and sibilants (hiss-based phonemes such as S, SH, etc.). It can be mixed back in with the synthesized formants (base vocal tract waveforms) as well, in the case of partially-voiced, partially-hissed phonemes like ‘zh’.
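As a rough illustration of the source-plus-filter idea described above, the following Python sketch feeds either a pulse train (voiced) or LFSR noise (unvoiced) through an all-pole lattice filter. Everything here is an assumption made for illustration: the 16-bit LFSR taps, the pulse-train excitation, the function names, and the coefficient values are not taken from the TMS5xx0 and do not reproduce its exact behavior.

```python
def lfsr_noise(count, state=0xACE1):
    """Return `count` samples of +/-1.0 noise from a generic 16-bit Fibonacci LFSR.

    Assumption: taps 16, 14, 13, 11 (a textbook maximal-length choice),
    not the polynomial used inside the TI chips.
    """
    samples = []
    for _ in range(count):
        bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
        state = (state >> 1) | (bit << 15)
        samples.append(1.0 if state & 1 else -1.0)
    return samples


def pulse_train(count, period):
    """Return a simple voiced excitation: one unit pulse every `period` samples."""
    return [1.0 if n % period == 0 else 0.0 for n in range(count)]


def lattice_synthesize(excitation, k):
    """Run an excitation through an all-pole lattice filter.

    `k` holds reflection coefficients (each with magnitude below 1 for stability).
    """
    stages = len(k)
    backward = [0.0] * (stages + 1)  # backward[m] is stage m's delayed signal
    output = []
    for sample in excitation:
        forward = sample
        for m in range(stages, 0, -1):
            forward -= k[m - 1] * backward[m - 1]
            backward[m] = k[m - 1] * forward + backward[m - 1]
        backward[0] = forward
        output.append(forward)
    return output


# Hypothetical coefficients, chosen only to give a stable filter.
coefficients = [0.5, -0.3, 0.2]
voiced = lattice_synthesize(pulse_train(200, 50), coefficients)
unvoiced = lattice_synthesize(lfsr_noise(200), coefficients)
# Partially voiced sounds can be approximated by blending the two.
mixed = [0.5 * v + 0.5 * u for v, u in zip(voiced, unvoiced)]
```

Changing the coefficient list from frame to frame is what moves the "vocal tract" from one sound to the next; the excitation only decides whether the result buzzes or hisses.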

You might investigate Klatt et al, he was one of the pioneers in vocal synthesis algorithms.

Should I point out he’s actually working with a Speak & READ, not a Speak & SPELL?

I grant that it’s the same hardware, but… as I had all four Speak & devices as a kid (including the displayless Speak & Spell Compact), that yellow case being labelled as a Speak & Spell deeply offends me.

Hi,

I know that it is not a Speak and Spell. I’ve got all the Speak and x models, but I first started with a bent Speak and Spell and ran several failing tests.

To be sure my problems were not coming from the Speak and Spell itself, I decided to continue my development on an untouched (unbent) Speak and Read.

In the end the problems were not coming from the Speak and Spell but from the interface and code, and I continued my development with the Speak and Read.

Poke around the web and you can find copies of the Windows 3.1 software TI used for encoding LPC data strings from digital audio recordings.

To get a more natural sounding voice, a digital recording is analyzed to create control data for each frame, with parameters such as energy, pitch, voicing, and filter coefficients. The result is a bunch of data that only a TI speech chip can understand and use to synthesize an analog waveform.

With the TI-99/4 and TI-99/4A computers, the speech synthesizer had several words pre-programmed and the Terminal Emulator II cartridge added more, plus phonemes and other data to say just about anything one could type in English. For some words it required rather creative misspelling. Games like Parsec used LPC data created from analyzing digital recordings of human voice actors.

The IBM PCjr had an optional speech sidecar that used the same TI chip as TI used for their own Home Computer, but IBM’s implementation wasn’t as good, or so game programmers claimed as a reason for hardly ever using it. If IBM had included the speech chip in many of their PS/2 systems and made an 8 bit ISA speech board, there likely would have been large amounts of software with speech.

Video game consoles like Intellivision and the Magnavox Odyssey^2 had voice modules that were simple DACs reading low bitrate digitized audio stored in the game cartridge’s ROM, which is why so few games with voice were made – the larger ROM chips cost a lot more to manufacture. Intellivision voice games pioneered an early form of variable bitrate recording by varying the data rate for different parts of some words. It was all carefully tweaked by hand to get the best sound and as much speech as possible into the game.

The Odyssey^2 voice module had a vocabulary of words and phonemes built in. AFAIK the games’ code simply directed the voice module to string things together. It wasn’t possible for different games to have different sounding speech like it was for systems with digitized audio or LPC data in the cartridge.

Hi,

I think there is a little misunderstanding here,

I’m not trying to get my own words spoken in this project, which I think would be impossible with an original Speak and Spell, since the words are already encoded in the ROM.

As Galane says, there is Windows software (I don’t remember the name) which could be used to create your own ROM (or simulate one). That way it should be possible to make it speak what you want, but this is another story.

What I’m trying to achieve is to create a kit similar to Highly Liquid’s (to do some circuit-bent sounds) but with an Arduino.

I tried a friend’s Speak and Spell with the Highly Liquid kit. It’s great, but it doesn’t allow adding any functions, as the code and schematics are not provided: for example, adding a MIDI-controlled pitch LFO or some MIDI-controlled bends.

I also plan to modify the shield for a Teensy to add a direct USB MIDI port.
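To make the "MIDI-controlled pitch LFO" idea a bit more concrete, here is a small Python sketch of the logic only: decoding a MIDI Control Change message and using its value to scale a triangle LFO. It is not [Nick]’s code. The function names, the choice of a triangle wave, and the `max_depth` scaling are assumptions, and how the resulting offset would actually bend the Speak & Read’s pitch is left open.

```python
def parse_control_change(msg):
    """Return (channel, controller, value) for a 3-byte MIDI Control Change, else None."""
    if len(msg) != 3:
        return None
    status, controller, value = msg
    # Control Change status bytes are 0xB0-0xBF; data bytes are 7-bit.
    if status & 0xF0 != 0xB0 or controller > 0x7F or value > 0x7F:
        return None
    return status & 0x0F, controller, value


def triangle_lfo(phase):
    """Triangle wave in [-1, 1] for a phase measured in cycles."""
    phase = phase % 1.0
    return 4.0 * phase - 1.0 if phase < 0.5 else 3.0 - 4.0 * phase


def pitch_offset(cc_value, phase, max_depth=1.0):
    """Scale the LFO by a 0-127 controller value (hypothetical depth mapping)."""
    depth = max_depth * cc_value / 127.0
    return depth * triangle_lfo(phase)


# Example: modulation wheel (controller 1) on the third MIDI channel, half depth.
message = bytes([0xB2, 1, 64])
channel, controller, value = parse_control_change(message)
offset = pitch_offset(value, phase=0.5)
```

Keeping the parsing separate from the LFO math means the same depth control could later drive other bends without touching the MIDI code.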

sounds like my circuit bend speak and spell ;-)