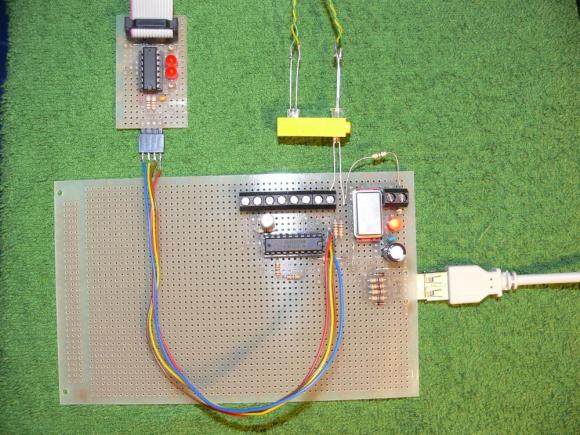

Here’s a rather exciting development for those who work with MSP430 microcontrollers. [M-atthias] worked out a way to implement USB 1.1 on a MSP430G2452. He’s bit banging the communications as this hardware normally doesn’t support the Universal Serial Bus. This is much like using the V-USB stack for AVR micros.

The test hardware seen above uses an 18Mhz crystal to get the timings just right. As this squeezes the most out of the chip it should come as no surprise that the firmware is written in assembly. This is still quite early on in development but the core features are mostly there, having been implemented and debugged over several versions already. Currently the base functionality can be loaded using under 2k of flash memory. You can download the Mecrisp package from [M-atthias’] sourceforge page. If you want to lend a hand testing or developing it would be greatly appreciated.

[via 43oh blog]

Bit-banged “USB 1.1?” What does that mean? USB 1.1 is a specification. Do you mean bit-banged LOW SPEED USB?

1.1 included Low Speed and Full Speed.

The device, not the host, chooses the bus speed, so I’m not sure what’s wrong with saying that he implemented a USB 1.1 device.

Why not say he implemented a 1.0 device. That contained a specification for low speed, too. Why not 2.0. That also contains a definition for low speed. 1.0, 1.1, 2.0, 3.0 all define low speed operation (among other speeds, progressively greater)

he just made a device never made to run as a USB device run a very complicated protocall all in ASM

just because 12mb/s is not fast enough for you no need to shit on his design

if you want USB 2.0 or 3.0 on an 8 bit 22 pin micro controller running at 18MHZ go make it your self

Biozz: You don’t seem to be following this very well. I suggest you read it all again.

I never stated it wasn’t fast enough for me. I never said anything negative at all about the design. I was trying to understand why an older specification was stated as what he created on a device.

He actually made “USB 2.0” on that device. USB 2.0 doesn’t mean only 480 mb/s. It specifies low, full, and high speed.

Things like “vusb” run at 1.5 mb/s. Which “USB” is that? All of them. Like I said once (and you don’t quite follow) USB specifications version 1.0, 1.1, 2.0, 3.0 all define low speed operation.

Indeed. Look at the FT245RL, it’s the FIFO version of the popular FT232RL. It boasts “USB2.0 Full Speed compatible”, and…. “Data rates up to 1Mbyte/second”. So there you go, it can communicate with a USB2.0 host, but not at ‘High Speed’ (480Mbit/s) but only at Full Speed (12Mbit/s), which equals to maximum of 1Mbyte/s.

In short, USB version number is not related to transfer speed.

the usb stack versions grow in complexity, possibly more than the 430 device can reasonably handle. since most modern hosts are all compatible to 1.1 I don’t see the big deal here. moreover, why would anything in > 1.1 be needed? Specifically, what would he need from say, 2.0 that you are crying about?

Or let me guess, you really don’t know what the fk you’re talking about and just trying to wikipedia your way though shitting on someone’s work.

i’ve implemented a 2.0 endpoint and the device required a 25mhz clock. Maybe the msp430 can’t run that fast? Since this is being “bit banged” yes that matters.

USB version number also refers to bus latency, not only speed.

Among other things too. I really don’t know what that dude is bitching about. Probably one of those semantics criers who happens to be confused or flat out wrong.

No way to do fullspeed in software on an 18MHz crystal – low speed it is

how people can bitbang USB makes my jaw drop in awww … very intresting hack here!

not just bit bang it….but bit bang it in assembly

well im an ASM programmer but still amazing work XP

+1

Is that an optoisolator made with LEGO or am I just seeing things?

He says as much. Lego opto, Also, punny. Opto, seeing thiings, i get it.

USB 1.0 : low-speed 1.5 Mbps, full-speed 12 Mbps (low-speed * 8)

USB 1.1 : + hub support

USB 2.0 : + high-speed 480 Mbps

USB 3.0 : + super-speed 5 Gbps (with appropriate cable)

The speed is signaled by the device with pull-up resistors on D-/D+ lines.

Low-speed supports only “interrupt” transfers in very small packets, bulk transfer endpoints are not allowed (in order not to saturate the bus with slow bulk transactions).

This project is obviously a low-speed USB device.

There is nothing magic about bit-banging a serial protocol.

For transmission you can always send data at CPU clock speed or at least half CPU clock speed, easy.

For reception, there is a clock recovery problem. Without clock-recovery hardware (like PLL), the only way to receive data is to oversample it, 8 samples per bit is a minimum with quartz clocks. So for low-speed USB you need at least a 12 MHz CPU clock/sampling.

Now USB is very painful to bitbang because it requires bit stuffing (at least one forced line transition every 7 bit times) and CRC checksums. If the uC isn’t fast enough to do it on the fly, it can always pre-calculate the bit stuffing and the checksums.

All in all, any 12 MHz uC can do low-speed USB in a more or less efficient way.

How come that a minimum of 8x oversampling is necessary? In bitbanged serial comms you normally just wait 0.5 bit-times from the first edge, then sample at 1 bit-times intervals…

The higher bitrate of usb should not affect how to sample, nor the required precision of the crystal. It must be the larger number of bits to get in each “block” that affects the precision requirement. Right?

I think you are talking about RS-232 specifically. In RS-232 you only receive 8 bits at a time, so you can get away with 1x sampling, but it’s not the proper way to do it. Very often systems don’t have have a clock that is an exact multiple of the baudrate, so they use the closest match which has like 2-3% error. This error is cumulative on each bit, on the last bit you can end up sampling with 1/2 bit time offset, even worse if the other end has clock error too. That’s why proper RS-232 hardware use 16x oversampling : AVR UART uses 16x oversampling for clock recovery (8x in fast mode), the classic 16550 RS-232 tranciever uses a 1.8432 MHz quartz which is 115200 (max baud rate) x16.

Now USB is far worse, the minimum useful packet length is like 400 bits, and the only way to stay in sync is to use a clock-recovery technique. The spec suggests PLL, that’s why each packet is prefixed with a 101010.. sequence. Bit stuffing assures that there will be a transition at least every 7 bits. But oversampling works too.

Almost the same goes for 10 Mbps ethernet, either PLL or oversampling is needed.

That’s why 20 MHz can send ethernet frames, but not receive them directly.

I’m just thinking out loud here…. I’m probably oversimplifying this a bit too much, so please bear with me.

If we ignore the cases where the crystal frequency doesn’t match up with the required sample rate and focus on having a crystal made for this purpose.

A decent crystal would have a tolerance of, let’s say, 50ppm and a tempco of another 50ppm in the usable temperature range. Combine this with an equally bad crystal at the other end and you can in worst case end up with a 200ppm difference.

So 200 ppm times 400 bits = 80 000 ppm = 8%. This means that you would still have plenty of margin after the 400 bits – in theory there’s still 42% left before risking to sample outside the current bit-slot.

Am I completely wrong in my thinking?

From a hacking point of view you’re right, it would work under some conditions. For example, if you just want to implement a HID mouse, you will do almost only transmission. First the host will request your device descriptor, then it will assign you an address, then it will ask for the HID descriptor and finally it will poll you for HID events. So the only things you actually need to receive are token packets (a few bytes) and HID requests (few bytes too). That’s why there exist bit banged usb devices with uC running from internal oscillators, they rely on proper clock recovery on the host side.

Still, I insist that there is a difference between “it can work to some extent” and “you can design a product with it”. Moreover you have very cheap uC with full-speed USB hardware. Or if you want to implement a proper host on the cheap, you can make your own tranciever (USB line 8 bit synchronous bus) with a 2$ CPLD.

“… it should come as no surprise that the firmware is written in assembly”.

Well, it come as a surprise to me. Compiled C code is typically faster than assembly, unless the assembly code has been painstakingly optimized. If you want speed, start with good compiled code, find the bottlenecks, look for more efficient algorithm and data structures, repeat until you head hurts, then see if you can eke out a few cycles by rewriting inner loops in assembler.

To those who don’t believe me, check out CRC Implementation With MSP430, TI Application Report SLAA221[http://www.ti.com/lit/an/slaa221/slaa221.pdf]. Their C code is both faster and more compact than equivalent assembler. Here’s a quote:

“For obtaining the following test results, the IAR Embedded Workbench V3.20A was used. Results may differ for other versions of this product. Performance is measured in cycles for the input ASCII sequence 123456789 . The C code was measured using the Profiler in the IAR Simulator. The C-callable assembly was measured using the cycle counter in the Simulator by setting the counter to 0 at the functional call then stepping over the function to the next instruction. The C compiler was configured for speed at the highest optimization level. Note that there are two versions of the crc16MakeBitwise and the crc32MakeBitwise functions. The one without the numeric appendix is an implementation equivalent to the assembly version. The one with the 2 is a more C-efficient implementation. This can be seen in Table 3 and Table 4 which give the code size and CPU time for both.

It’s not a question of speed, but instruction accurate timming. I wrote a small USB stack on AVR once, and the sampling had to be done at very precise moments. It is something that you cannot obtain with a C compiler, at least low-level RX and TX code has to be hand written in assembler. Look at vUSB source, at every line you have comments like “[02]” which is the number of clock cycles left before sampling or transmission.

Even if it is a question of speed, you’re out of your mind to think C always compiles to faster code than assembly. Under the hood, the microcontroller can’t tell the difference.

The real difference is the skill of the programmer(s) involved. No amount of optimizations will make a C routine better or faster than the equivalent ASM if the C programmer doesn’t know what (s)he is doing.

With the ease of entry into the microcontroller field, there are some stunningly bad blocks of code (both C and ASM) floating around out there from “experienced” writers.

Computers are better at chess than humans, why is it surprising that they are usually better at code generation?

There are good reasons to use assembly, including clock cycle timing as mentioned. My gripe is the summary’s implication that asm is typically needed. I don’t buy it. In most cases you can get the job done faster and better by embedding a handful of assembler instructions in the C source, if they are even needed at all.

To quote Stephen Fry: “utter arse water!”

Chess is a strategy game with only two players and a huge matrix of possible solutions. Programming is NOT that.

What IS needed is a _good_ knowledge of BOTH (all) things you are comparing else all you do is expose (and emphasise) your ignorance.

Cyril – is it really that hard to understand that that the compiled C code in the CRC example is both smaller and faster than the assembly version. Honestly, do you think the engineers at TI wrote awful assembly code? I don’t. I think they wrote human readable assembly, which will almost necessarily miss significant optimizations.

In fact, it is striking hard to write highly optimized assembly code. I’ve done it. I know people who used to do it for a living. I also used to write optimizing compiler back ends for a living. If you want your program to run very, very fast, let a compiler do a very good job on the bulk of the code (it will be better than quickly written assembly code), and hand optimize the inner loops.

Lastly, I am puzzled by your comment about C code being compiled for some sort of least common denominator target. When I compile code for a MSP430, I specify the exact target chip. Many optimizing compilers generate different code for different variants of a family of processors.

Reading FAIL. Your example (and reality) says the opposite of what you assert :/

At the _same_level_of_optimisation_, the BEST a C implementation can hope for is the SAME code size and execution speed as the ASM.

Generally, C is is complied to account for ALL possible implementations/usages whereas ASM is “compiled” for THIS specific implementation.

FYI, your comment says a LOT about you and very little about the subject ;)

if the assembly is slower than C, than the code is bad, that’s all.

Also CRC is a very specific and common task that the compiler might be able to recognize.

There is no “human readable assembly” and c compiles to assembly.

Anyone can tell you that “professional programmers from a big company” can produce worse code than a hobbyist,

Well done, Man Gr8 project. But that is a big blow to my creativity I was planning to do something similar for PIc as well as MSP430 within next 100 years :( Someone already did PIC and you did MSP430 now……

The download you have link at the bottom is not the USB stack you’re talking about in the article. “Mecrisp” is a fourth programming language interpreter project they wrote. Pretty sure you meant to link to “Mecrimus-b”: http://mecrisp.sourceforge.net/mecrimus-b.htm or the SF page where both projects reside: http://sourceforge.net/projects/mecrisp/files/