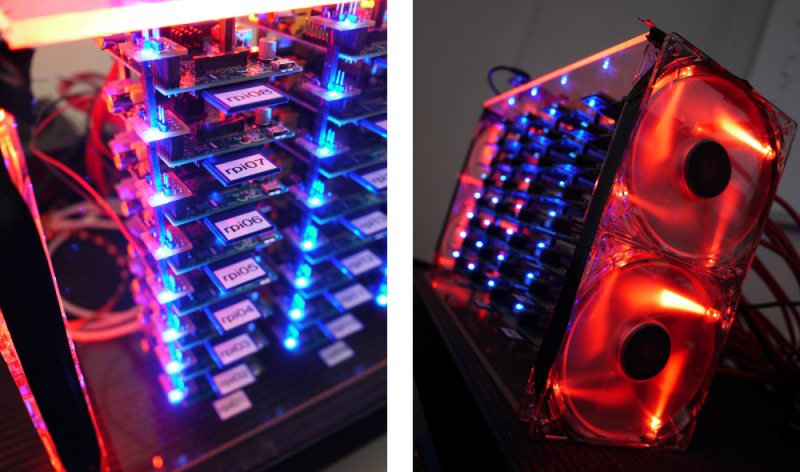

Not only did [Josh Kiepert] build a 33 Node Beowulf Cluster, but he made sure it looks impressive even if you don’t know what it is. That’s thanks to the power distribution PCBs he designed and etched. In addition to injecting power through each of the RPi GPIO headers, they host an RGB LED, which is illuminated in blue in the images above.

Quite some time ago we saw a 64-node RPi cluster. That one used LEGO pieces as a rack system to hold all of the boards. But [Josh] used stand-offs to create the columns of hardware which are suspended between top and bottom plates made out of acrylic. The only thing that’s unique about each board is the SD card and that’s why each has a label on it that identifies the node. These have been flashed with almost identical images; the host name and IP address are the only things that change from one to the next. They’ve been put in order physically so that you can quickly find your way through the rack. But functionally this doesn’t matter… put the card in any RPi and it will automatically identify itself on the network no matter where it’s located in the rack.

Don’t miss the demo video where [Josh] explains the entire setup.

You don’t need custom images for each Pi: just configure a DHCP server to assign hostnames and IPs based on MAC address.

True…but then you need a DHCP server that can do that… The RPiCluster is using a crappy Linksys I had laying around…. ;)

If it is compatible, install Tomato firmware or DD-WRT on the router and it should help you with that.

There are a lot of ways to get this working with DHCP. ;) It all came down to where I wanted to spend my time. Since I had to write all the SD cards once anyway to put the initial image on there, it didn’t seem to be a big deal to apply a static IP and hostname while I was at it (I made a script to do this).

I’d recommend using dnsmasq on the controller to provide dhcp and tftp pxe boot support.
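As a rough sketch of the MAC-based approach discussed above, the snippet below generates one dnsmasq `dhcp-host=` line per node. The MAC addresses, the `rpi` hostname prefix and the `192.168.1.x` address range are made up for illustration; the write-up does not state the cluster's real values.

```python
def dnsmasq_host_lines(macs, prefix="rpi", subnet="192.168.1.", first_host=101):
    """Return one dnsmasq `dhcp-host=` line per MAC address, in list order.

    prefix, subnet and first_host are hypothetical naming choices.
    """
    lines = []
    for index, mac in enumerate(macs):
        hostname = f"{prefix}{index:02d}"
        ip = f"{subnet}{first_host + index}"
        # dnsmasq accepts the form: dhcp-host=<mac>,<hostname>,<ip>
        lines.append(f"dhcp-host={mac.lower()},{hostname},{ip}")
    return lines


if __name__ == "__main__":
    # Invented example addresses, not taken from the real cluster.
    example_macs = ["B8:27:EB:00:00:01", "B8:27:EB:00:00:02"]
    print("\n".join(dnsmasq_host_lines(example_macs)))
```

The output could be pasted into the DHCP section of a dnsmasq config on the controller, so every SD card can carry the same image.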

Hmm, if only there were a small, cheap, Linux-based network capable ARM computer that could serve as a DHCP server. Maybe something around $35; that should be cheap enough not to blow the price too much.

Arch Linux will run on ARM. There is also a Debian port for ARM.

Mine’s using a junk router too :(

I think the hardcoded IP addresses are for troubleshooting: to find the missing or malfunctioning Pi.

You can assign the same hardcoded IPs to each node by identifying the MAC address, and then adding them to the DHCP server. If he ever wants to update the image on the SD card, he has to redo all those setup steps again. DHCP + tftp boot means you just replace the image on the tftp host and that’s it. On a small project like this where he’s only setting the software up once, it’s not a big deal. Sometimes taking the time to learn how to do all this takes longer, but over time the knowledge will save you time :)

Obligatory http://xkcd.com/1205/

But then you lose 1 Raspberry Pi to just do DHCP. Better to hard code and easier to track down troublesome nodes. The guy made an acrylic case for God’s sake, he knows about DHCP…

Apart from blinking lights, wouldn’t there be more simulation power in just a single cheap GPU?

Yes, but he’s not testing simulation power. He’s working with distributed computing. Which leads me to wonder why he doesn’t fire up 32 copies of QEMU on a PC with sufficient memory.

because 32 copies of qemu lack awesomeness and blinking leds.

Yeah, it’s kind of feasible. From experience I can say that a single 3GHz x86_64 CPU can emulate an ARM CPU that feels like around 500MHz. The emulated CPU won’t be that fast compared with current SoCs, but I guess it would allow some applications. An N-core CPU can effectively provide you a cluster of N ARM VMs.

That’s only if you tie yourself to emulating ARM on an AMD64. If you run AMD64 VMs on AMD64 hardware, you can launch hundreds on a single PC, as long as you turn on KSM. You’ll be limited to the processing capabilities of your 8-core i7 or whatever, but running 32 x86_64 VMs on top of an i7 may well match the capabilities of 32 raspberry pis.

did you even read TFA and parent’s reply or do you just enjoy going offtopic?

As stated, yeah, you probably can on most tasks, but believe it or not there are some tasks that a several-hundred-core GPU can’t do any faster, if not slower, than a 4-core CPU.

GPU cores are quite limited and require workarounds for many computing tasks

Looks like the case uses more electricity than the hardware inside of it.

Possibly, but it is also better looking than even most Hollywood stuff.

Too bad the YouTube demo is using the cluster to drive the LEDs. ;-)

33 nodes… it’s the binary snobbery like 11 on the volume dial! :)

Actually it is 32 nodes and a master ;)

And I am not the creator FYI, he just happens to share an awesome name

I would make a point that one power supply was enough, but that’s far from the only overkill in this project! … Granted, < $2000 for a 33 core beowulf is about as good as it gets with all new parts!

It’s slower than a $500 PC

That’s not the point … the point is to do as many parallel tasks at once as possible

simply not possible with a $500 dual core (or even quad core) 3.2GHz PC

You my friend fail at logic AND math (must of been hard in shool for you).

Math :

Xeon E3-1230 V2 = 8x 3.7GHz

AMD FX-8350 = 8x 4.2GHz

rasppi is crappy ARMv6 at 700MHz

Now get a calculator and do your magic. I won’t even bother to worry your pretty little head with details like FPU, SIMD, memory throughput, real IPC. I won’t even mention scheduler issues with slow ass ethernet interconnects.

I can also do it from another angle.

Radeon HD 7750 = 512 x 800MHz

Logic:

What is faster, one 100 ton truck doing three courses to deliver rocks in one day, or 300 one ton trucks delivering same rocks in one day?

Not that it matters. As I understand it, the project is intended as a learning exercise.

Which is faster: A Beowulf cluster of 32 AMD FX-8350s and an operator with no clue how to make it work, or a Beowulf cluster of the same kit with an operator who has actually learned how to make it work after having successfully tinkered with a couple score of Raspberry Pis?

Baby steps, FFS. My first useful circuit that I planned myself was a few red LEDs, some perfboard, and a voltage divider. One must start somewhere.

Virtual machine will be cheaper and more flexible. One click and you spawn 30 nodes.

I bet you did pretty well in SHOOL, huh?

Sure, a decent PC will be faster. But this setup lets you optimize your programming for real distributed computing that comes into play when you stop running your software on the “practise” datasets but move to the “real” dataset.

On the other hand, how many PC CPUs will you have to buy for the 0.7 TFlops that this setup manages when properly programmed… (i.e. using the GPUs… :-) )

(The answer: about 100 PCs: http://wiki.answers.com/Q/How_many_FLOPS_does_the_average_computer_run_at )

When you’re done being a condescending arsehole you might want to look at the documentation of the RPI that allows overclocking to 1000MHz within warranty.

@rewolff: about ONE 386DX40, because the RPi _is_ Broadcom = you won’t program ANYTHING GPU related. It’s all closed source behind a wall of NDAs. Unless of course you are Nokia (they are the only company using Broadcom’s GPU to run computation at the moment; they do PureView with it).

Eirinn this is great, now you need to somehow make ARMv6 run more than 2 arithmetic operations per cycle just to get close to x86. Not to mention you can overclock those desktop processors too (both OC to 4.4-5GHz on air cooling).

@rasz

God forbid you stop mathing for five seconds so you can apply some interpersonal skills or, I dunno, read the fucking writeup.

>>On the other hand, how many PC CPUs will you have to buy for the 0.7 TFlops that this setup manages when properly programmed

One four-way 16-core Opteron server can pump out 640 GFLOPS w/o “properly programmed” garbage. Four-way 8-core Xeons can hit 677 GFLOPS, and both figures are based on Linpack. An RPi @ 1GHz gets, wait for it, 0.06 GFLOPS per core, meaning just 1.9 GFLOPS for the machine! Yes, a 10 year old Pentium 4 has more power for less cost and electrical power! For what this guy is doing, the Pi is a complete waste and VMs should have been used instead.
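The arithmetic behind that comparison is easy to check. The sketch below simply multiplies out the figures quoted in the comment (0.06 GFLOPS per Pi, 32 compute nodes, 640 GFLOPS for the Opteron box); none of these numbers are independently measured, and perfect scaling across the network is assumed, which flatters the cluster.

```python
# Figures as quoted in the comment above, not independently verified.
PER_NODE_GFLOPS = 0.06          # one RPi at 1 GHz
COMPUTE_NODES = 32              # the master node is not counted
OPTERON_SERVER_GFLOPS = 640.0   # four-way 16-core Opteron server


def cluster_gflops(per_node, nodes):
    """Best-case aggregate throughput, assuming perfect scaling."""
    return per_node * nodes


if __name__ == "__main__":
    total = cluster_gflops(PER_NODE_GFLOPS, COMPUTE_NODES)
    ratio = OPTERON_SERVER_GFLOPS / total
    print(f"cluster: {total:.2f} GFLOPS")
    print(f"server advantage: about {ratio:.0f}x")
```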

Um, it’s equal… The truck and car both take a day to deliver the rocks.

Please explain how it’s slower; I may be missing something. Sure the processor is 700 MHz, but with processing distributed over 33 nodes, how would a $500 PC be faster?

Interconnect speed on a single processor is faster than over a gigabit ethernet network (it’s why people claiming to build ‘super computers’ in cloud environments and out of random hardware have really just built distributed computing environments; interconnect speed is where the true power of a super computer comes from). Really, as with most computing topics, the usefulness of any system depends on what you are trying to do. This would be great for, say, rebuilding Debian packages for the RasPi, but not for running fluid simulations. Rebuilding packages requires lots of independent cores that rarely communicate. Fluid simulations (the parallel kind) need to communicate between each processing instance all the time. Thus a single 8-core (16-thread) proc would most likely outperform this setup because of the communication bottleneck alone.

Look at one post above yours.

Then you have details like memory running at a speed we had 10 years ago in PCs. L2 cache with TWO wait states and so on.

Clock-for-clock, an 8-core CPU at (4×700=) 2.8GHz would be the same.

Except x86 chips do way more per clock cycle than an ARM. Specially cos each core is really 2 threaded doo-dahs with the 2 integer units and 2 floating points and address generators and speculative execution and whatever else they have. All that power in 1 core. Then with 8 of them on a bus a few millimetres long on the same piece of silicon with big caches all over the place.

Basically a PC even with only 4 cores would decimate it. As people have mentioned, the latency of Ethernet is not really any use for supercomputers; they need massive parallelism so data on one node can act on data on another ASAP.

Some problems are more “local”, and can be run in lots of separate chunks across computers. Seti @home or whatever people do now, would be fine, tho that works across the whole Internet. As long as each CPU spends most of its time working with data in its own local RAM, it’s fine. But then that’s really more of a network than a supercomputer.

This really has no advantages over a network of PCs, and is much worse than getting all the power in 1 PC CPU.

OTOH I don’t suppose he built it to do anything useful. As long as it brings him some pleasure. I can’t really think of a use for it myself.

Even cooler would be to set up a stack of jobs, have the first available Pi execute one from it, relay the output to a central console, then get the next job. Going at it this way, you could have the same image for all cards (with DHCP) except the head, and add RPis as needed to reach the desired throughput.
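The job-stack idea can be sketched on a single machine with a shared queue, where threads stand in for the Pis. This is only an illustration of the pull-based pattern: a real cluster would need a network transport (MPI, a message broker, plain sockets) in place of the in-process queue, and the function name `run_jobs` is invented here.

```python
import queue
import threading


def run_jobs(jobs, num_workers):
    """Run callables pulled from a shared queue; return results in submission order."""
    pending = queue.Queue()
    for index, job in enumerate(jobs):
        pending.put((index, job))
    results = [None] * len(jobs)

    def worker():
        # Each worker keeps taking the next available job until none are left.
        while True:
            try:
                index, job = pending.get_nowait()
            except queue.Empty:
                return
            # Stands in for relaying output to the central console.
            results[index] = job()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    squares = [lambda n=n: n * n for n in range(8)]
    print(run_jobs(squares, num_workers=3))
```

Because workers pull work instead of having it assigned, adding more workers needs no change to the job list, which is the property the comment is after.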

Suggestion: Make the LEDs of each individual RPi fade according to its CPU load.

If they transition smoothly from green to yellow to red, it would look like a heat map!
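A minimal sketch of that colour mapping, assuming the load has already been normalised to a value between 0 and 1. How the RGB values reach the LED (PWM on GPIO pins, for instance) depends on the board wiring and is left out; `load_to_rgb` is a name made up for this example.

```python
def load_to_rgb(load):
    """Map a CPU load in [0, 1] to an (r, g, b) tuple: green, then yellow, then red."""
    load = min(max(load, 0.0), 1.0)  # clamp out-of-range readings
    if load <= 0.5:
        # First half: ramp red up while green stays full (green to yellow).
        red = round(255 * load * 2)
        green = 255
    else:
        # Second half: ramp green down while red stays full (yellow to red).
        red = 255
        green = round(255 * (1.0 - load) * 2)
    return (red, green, 0)


if __name__ == "__main__":
    for sample in (0.0, 0.5, 1.0):
        print(sample, load_to_rgb(sample))
```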

It would make one heck of a robot brain.

I’d sooner have 10,000 Z80s. Just for the coolness.

I want a SOC version of a connection machine, just like Skynet uses.

Does it particularly matter how useful a thing is if it is as cool as this is? A cluster is not about speed, it’s about power and volume. A well made cluster can render an image in seconds that would take a modern multi-core computer hours to complete, at a fraction of the energy cost for instance.

>A well made cluster can render an image in seconds that

>would take a modern multi core-computer hours to complete

>at a fraction of the energy cost for instance.

Do you have anything to back that claim up?

If anything a cluster would be good at distributing work units that can be worked on standalone without needing to interact too much with other nodes.. i.e. rendering a whole film split into scenes and not a single image.

Either way the Pi is a bad choice for a cluster from the start.. Weak processor, weak local storage, only 100Mbit ethernet which is crippled by being connected via USB.

The only thing going for the Pi in my opinion is that its video processor is apparently pretty fast at encoding h264 but I can’t see how that could be useful for a cluster application.

A cluster based on some imx6 quads might be interesting..

another person failing at logic

ARMv6 is SLOW, it’s DOG ASS SLOW.

It doesn’t matter how many of them you have in one box if their combined horsepower is LOWER than one ~$200 CPU.

It doesn’t matter if you run 20 concurrent tasks if they will end later than the same 20 tasks run one by one.

I think YOU are failing at logic.

The engineering principles here can be scaled up to platforms with more raw computing power – but if you want to break new ground in distributed computing – whether in terms of parallelising the problem, or in the sphere of inter-processor communications – this is a great, cost effective way to do so. (I’m qualified to make this statement – I have a PhD in parallel computing).

The only case I could make for experimenting with parallelization techniques on this is to simulate worst-case (i.e. sh***y switched 100bT-over-USB interconnect) IPC latency.

Yeah, it’s always amazing to me how the people most interested in showing off how clever they are don’t bother to read or comprehend the writeup.

So everyone that comments here would rather just bitch and hate about something rather than take a minute to read why the thing actually exists?

“Kiepert started his work with the Raspberry Pi (RPi) this spring in response to a need he encountered while working on his doctoral research having to do with data sharing for wireless sensor networks.”

Oh man! The horror the HORROR!!

I’d advise the builder to make a DHCP server with a netboot environment and just use the flash cards for local storage (swap, cruft, whatever). Just build your binaries once on your master, boot your nodes off it and let them use AUFS to get their own specific config.

If he wants to update 1 thing now he has to update all 32 sdcards… brrrrr

It’s not as bad as that. I can send the same command to all of them at the same time (tmux with synchronize-panes on). For example, sudo pacman -Syyu, to do a system update across the whole thing. :)
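For anyone who would rather script this than type into synchronized tmux panes, here is a hedged sketch that fans one command out over SSH. The `rpi00`, `rpi01`, ... hostnames are invented, key-based SSH login is assumed, and this is not the tool the builder describes using.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def node_names(count, prefix="rpi"):
    """Hypothetical hostnames: rpi00, rpi01, ..."""
    return [f"{prefix}{index:02d}" for index in range(count)]


def ssh_command(host, remote_command):
    """Build the argument list for running one command on one host."""
    return ["ssh", host, remote_command]


def run_everywhere(hosts, remote_command):
    """Run the command on every host in parallel; return (host, exit code) pairs."""
    def run(host):
        done = subprocess.run(
            ssh_command(host, remote_command),
            capture_output=True,
            text=True,
        )
        return host, done.returncode

    with ThreadPoolExecutor(max_workers=max(1, len(hosts))) as pool:
        return list(pool.map(run, hosts))


if __name__ == "__main__":
    # Needs reachable hosts; "uptime" is just a harmless example command.
    for host, code in run_everywhere(node_names(32), "uptime"):
        print(host, "ok" if code == 0 else f"failed ({code})")
```

A system update would additionally need non-interactive sudo on each node, which this sketch does not handle.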

Could this be used to simulate multiple proxies to foul up trackers?

I’d guess not, since they’d all be connected through the same external IP address.

How aboot if they each connect to a different VPN?

You mean like putting TOR on each of them? You’d want a connection to the internet that = 32 X 100 Mbps, or 3200 Mbps. An OC-48 would get you 2.5 Gbps… 6 OC-12’s could handle it.

If an OC-3 is 20k per month… You’d pay about a half a million dollars, a month, to provide the throughput.

I guess if you chose to bottleneck you could bring that cost down…
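The back-of-envelope numbers above hold up. The sketch below uses the standard SONET line rates (OC-3 at 155.52 Mbps, OC-12 at 622.08 Mbps) together with the commenter's guessed price of $20k per OC-3 per month, which is not a quoted tariff.

```python
import math

# Standard SONET line rates in Mbps.
OC3_MBPS = 155.52
OC12_MBPS = 622.08

NODES = 32
PER_NODE_MBPS = 100        # each Pi saturating its 100 Mbps port
OC3_MONTHLY_USD = 20_000   # the commenter's guess, not a real price list


def lines_needed(total_mbps, line_mbps):
    """Smallest whole number of lines whose combined rate covers the demand."""
    return math.ceil(total_mbps / line_mbps)


if __name__ == "__main__":
    demand = NODES * PER_NODE_MBPS
    oc3_count = lines_needed(demand, OC3_MBPS)
    print(f"demand: {demand} Mbps")
    print(f"OC-12 lines needed: {lines_needed(demand, OC12_MBPS)}")
    print(f"OC-3 lines needed: {oc3_count}, about ${oc3_count * OC3_MONTHLY_USD:,} per month")
```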

For the money they spent on these RPis, one can buy a shitload of old Opteron K9/K10 servers and have a real supercomputer. Even an 8-laptop C2D cluster would be faster. But I think it’s more about the concept than the speed

He’s doing research for wireless sensor applications. If he’s using 32 nodes it’s because he wants 32! nodes. No point having 4 of the same sensors in one location :P

It’s pretty clear he isn’t doing this for the processing power. The whole video he talks about the synchronization between each node (as it blinks an LED – not cpu intensive).

So there’s a lot of talk about issues and is this worth it, etc. (FYI I’ve had a good amount of academic and professional experience in building/working with clusters, wireless sensor nodes, and all the fun things he’s playing with here)

Key points to keep in mind: this was a learning experience for the person, and he had a specific type of simulation in mind (and of course a limited budget). He doesn’t need CPU power; he’s simulating sensor nodes which have at best 1/10 of what a Raspberry Pi can do. He doesn’t need a fast, low latency network (he’s only using 100Mbit/s if you read through the write up). For simulating a wireless sensor network, this is a good, low-cost setup. Could one beefy PC using VMs for each node do the same thing or better? Yeah, likely. However, that would have cost at least as much (likely more), and would not have been the same learning experience.

Overall his setup is doing a great job of fulfilling his needs, and in the end that is the final decider of whether a setup is good. While there were some minor things that he could have done better/differently (I’ll get to that in a moment), and he did go a little overboard on the looks and such, he did a great job. Keep in mind that if it wasn’t at least interesting, we wouldn’t be looking at it and talking about it.

The things that could be done differently/better (many have been mentioned)

Hard-coded IPs on each node should be replaced with DHCP, or if there is a good reason to avoid that, it would be easy to have an identical startup script on each node that checked the host’s MAC address against a table and looked up the IP for that node. Having different images on each node is just a pain to manage.

Why not netboot? I don’t know offhand what the RaspPis support. Given their limited RAM, using the SD cards to hold the basic root environment might be a good idea. Netbooting would help with changing boot parameters and the kernel, but that isn’t a common thing for his work, so it makes sense not to bother. If he changed to a setup where he had the same image on all his compute nodes, I don’t see a big need for this. (I would hope he could string together a multiple SD card adapter so that he could write multiple cards at once, and not have to write them one at a time.)

He is using a RaspPi as his host-node. While this keeps with the ‘theme’ of the cluster, I think this is an unnecessary limitation. A basic PC (generally it is easy to get a spare one in a CS department) would handle the host-node functions much better. My only thought as to why he went with this is so that the host-node could have the extra storage and the same full software setup that he has on the compute nodes (with them using NFS to the host-node instead of the extra storage). One big advantage is that a full PC for the host-node could act as the NFS server, the DHCP server, and the router all in one. Also, it could provide an ldap/yp/whatever login server for the nodes. In general you want the software setup on your compute-nodes to be identical and have as little state as possible.

Using QEMU for compiling packages seems like an easy but inefficient way of going about things. I’m glad to see he’s not building the packages for each node separately (you’d be surprised what people do), but an x86/x86_64 machine set up with a cross compiler would build the software much, much faster. This requires working with cross-compilation, which does require some learning (though I’d expect there are a bunch of guides on it for the RaspPi online). Certainly not the setup one would want for the long term; this is likely a ‘fast-enough-for-the-moment’ decision.

Don’t know if this was done, but a wiki (internal/private) documenting all the setup and config he did as he did it is always helpful for any project like this. The final write up is great, but all the little details being stashed away in one place in an organized fashion will serve him well in the future.
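The MAC-table startup script suggested in the first item above could look roughly like this. The table contents are invented, and the script only does the lookup; actually applying the hostname and address is distribution-specific and is left out. Reading `/sys/class/net/eth0/address` assumes a Linux node whose wired interface is named `eth0`.

```python
# Hypothetical table: MAC address -> (hostname, IP). Values are invented.
NODE_TABLE = {
    "b8:27:eb:00:00:01": ("rpi00", "192.168.1.101"),
    "b8:27:eb:00:00:02": ("rpi01", "192.168.1.102"),
}


def normalize_mac(mac):
    """Lower-case the address, trim whitespace, and use ':' as the separator."""
    return mac.strip().lower().replace("-", ":")


def lookup_node(mac, table=NODE_TABLE):
    """Return (hostname, ip) for this MAC, or None if the node is unknown."""
    return table.get(normalize_mac(mac))


def read_mac(interface="eth0"):
    """Read the interface's MAC address from sysfs (Linux only)."""
    with open(f"/sys/class/net/{interface}/address") as handle:
        return handle.read()


if __name__ == "__main__":
    print(lookup_node(read_mac()))
```

With this on every card, the images stay byte-identical and only the shared table changes when a board is swapped.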

Overall, great job! He built a small, relatively slow cluster that is well tailored to his work. The suggestions/ideas above come from a lot of experience with cluster work. Assuming little/no experience beforehand in cluster building, this is a great build. Given the time for extra lights and LEDs, I’m sure I’d have used them when I could. There’s always a way to smooth out and improve setups like this, and at a certain point you have to get back to the work you built it for.

Again, if the builder is reading this, great work. Read all the feedback on here, but remember you did a great bit of work here. Good luck with your dissertation.

The “builder” here…. Thanks for actually reading the write up. ;) I agree that there are a number of things I could have done different, and in hindsight, I might have taken a different path during the build. Overall, my approach has been to go with the path I saw as the most expedient at the time to get this up and going. After all, I’m doing this to facilitate doing actual work on my dissertation!

As for the master node…the RPi usage as a master was a temporary convenience. I recently set up a Chromebook with Arch Linux (Dual-core 1.7GHz Cortex-A15) as the master. The primary reason I wanted to stay with an ARM based master is to avoid having to do cross-compilation for my projects. Not that cross-compiling is a big deal, but there is a convenience factor for being able to compile and run on the same machine. Additionally, as the master is hosting NFS for /usr/local, it is very nice to be able to install applications to /usr/local and have them available for every node in the cluster (including the master).

Awesome project, it gives me several ideas for my Senior Project for Network Security. And with the Raspberry Pi it makes things affordable. Yes to all those critics a PC might be faster, but the learning and the coolness. Thanks for sharing!!

I can certainly understand avoiding cross-compilation. I was on the team that worked on porting IBM’s K42 OS from ppc64->x86_64, and just getting the toolchains for cross-compilation working took months (you ever see a release version of gcc crash while compiling?), and they never worked right.

Well said. I found the dismissive nature of some of the comments quite depressing. If I understand his write up, this project is analogous to the Parallella project – Another “supercomputer” that’s outperformed by commodity PCs. But that really isn’t the point…

Great project, but I can’t help myself to correct the author about his assertion that Raspbian is based on Ubuntu : It’s not. Raspbian is based on Debian, hence the name. Ubuntu is also based on Debian and if there was a Ubuntu based distro for the RPi, it would be called Raspbuntu. There, I said it. Back to reading the pdf now.

You are correct! I’ll try to get that fixed soon in the document.

Here’s something we made at Sandia about 3 years ago: http://farm4.staticflickr.com/3538/5702439502_b6448467b8_o.jpg

The box has 7 Gumstix “Stagecoach” motherboards, each loaded with 7 Gumstix Overo boards for a total of 49 ARM processors in the box. There is an Ethernet switch mounted in the bottom and a power supply at the back. We made 4 of these and stacked them up for a total of 196 CPUs. You can check out some action shots in this video: http://www.youtube.com/watch?v=UPyn9krjIRc&feature=youtu.be&t=50s (that is not me in the video)

Oh, and you know how you make a Beowulf cluster? You install Linux on every node, then “apt-get install mpi”. That’s it. A Beowulf cluster is just a bunch of networked Linux boxes with MPI installed. Oh, and a really spiffy name.

A phrase comes to mind: “A zombie-hunting Beowulf”. Sounds interesting, though unfortunately way over my head.

Would it be feasible to run http://folding.stanford.edu/ on this setup?

This guy Josh manages to find and purchase 30 some odd Raspberry PIs and build a cluster out of them…. and all you guys can do is give him grief for using static IPs?? JFC >.< *facepalm*

Well, I for one think that this is pretty cool.

Sure it’s not useful, but neither is sitting at home criticising.

At least he’s doing something!

Good lord – This is the most arrogant thread ever posted @ Hackaday. The critics here are real assholes.

Considering a single two way server would have been able to do the same in virtual machines (two 16 core opterons so you can assign a bit less than a core per VM) for less cost (in the long term especially), makes you wonder if this guy really needed to do this or just wanted an excuse to waste money.

I love the creative side to this project… Waste money? It would be great to run each through VPNs in a workplace or college.. Bristol UK.. their interactive tech program is using, as a “concept”, Pico projectors set up as multi-touch surfaces “around day to day life”. Something like this node setup would be great for being admin across lots of similar networks. Run a crap load of harddrive-less multi-touch kids’ school computers.. lots of different schools! Live to see this Beowulf done on the beagle xm… That funds xp! V

Or get a few of these around the globe vpn’in!

Yeah, that thing looks really cool for sure. Is it going to have a specific application? If the cards don’t need the fans, why add them?

Is it possible to emulate an Intel or AMD CPU on an ARM cluster? I want to run Ansys Maxwell or JMAG software.

This would make a great media server or a set-top box replacement. It’d have to be scaled down if used as a set-top box. The Pi is optimized for media. The ARM processor has way less overhead too. I could see this being used as a host too, for hosting something like an IRC bot. It would be excellent for that.