A lot of awesome stuff happened up in [Bruce Land]’s lab at Cornell this last semester. Three students, [Pat], [Ed], and [Hanna], put in hours of work to come up with a few algorithms that are able to simulate stereo audio with monophonic sound. It’s enough work for three semesters of [Dr. Land]’s ECE 5030 class, and while it’s impossible to truly appreciate this project with a YouTube video, we’re assuming it’s an awesome piece of work.

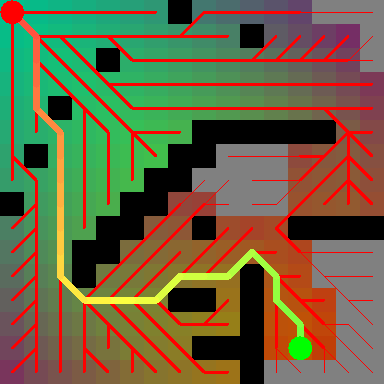

The first part of the team’s project was to gather data about how the human ear hears in 3D space. To do this, they mounted microphones in a team member’s ears, sat them down on a rotating stool, and played a series of clicks. Tons of MATLAB later, the team had an average of how their team members’ heads heard sound. Basically, they created an algorithm that models how binaural recording works.

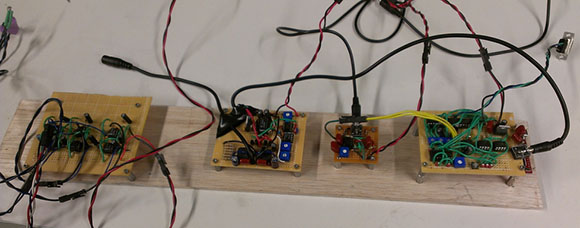

To prove their algorithm worked, the team took a piece of music, squashed it down to mono, and played it through an MSP430 microcontroller. With a good pair of headphones, they’re able to virtually place the music in a stereo space.
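As a rough illustration of what “placing” a mono signal can mean, here is a minimal Python sketch. It is not the team’s MSP430 code. It assumes the simplest possible model: the ear farther from the source hears the same signal a few samples later and a bit quieter. The function name `place_mono` and the delay and gain values are made up for the example.

```python
def place_mono(samples, delay_samples, far_gain, source_on_left=True):
    """Split a mono sample list into (left, right) channels.

    Assumed model: the ear nearer the source gets the signal unchanged;
    the farther ear gets it delayed by `delay_samples` and scaled by
    `far_gain`. Both outputs have the same length as the input.
    """
    if delay_samples < 0:
        raise ValueError("delay_samples must be non-negative")
    near = list(samples)
    # Prepend silence to delay the signal, then trim to the original length.
    delayed = [0.0] * delay_samples + near
    far = [far_gain * s for s in delayed[:len(near)]]
    return (near, far) if source_on_left else (far, near)


# Hypothetical values: a source on the left, 2-sample delay, half amplitude.
left, right = place_mono([1.0, 0.5, 0.25, 0.0], delay_samples=2, far_gain=0.5)
print(left)   # near ear, unchanged
print(right)  # far ear, delayed and attenuated
```

A real implementation would look up the delay and gain for the desired angle from measured data rather than hard-coding them.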

The video below covers the basics of their build, but because of the limitations of [Bruce]’s camera and YouTube, you won’t be able to experience the team’s virtual stereo for yourself. You can, however, put on a pair of headphones and listen to this, a good example of what can be done with this sort of setup.

[youtube=http://www.youtube.com/watch?v=tzuu5gRPN50&w=580]

anyone want to make millions? use this algorithm to decode dolby 5.1 into a stereo binaural sound in realtime. just give me 1 of them :)

Exists. Look up “Head-Related Transfer Function”

Some old sound cards had this downmixing ability in the drivers.

There is a Dolby product that does what you want, something about headphones in the name of its function. It’s in some hardware as a switchable option.

I can’t dis 5.1 enough. Show me my center ear! Holeywood trash. Just make the movie in binaural sound. Nothing to fake, pure reality. Since 1881, it’s about time. I would like to have live concerts in binaural with the option of hearing the fake channeling and pan pot positioning mixup.

The issue with binaural audio is that it doesn’t properly work unless you’re wearing headphones.

In a movie theater or your living room, you use multiple speakers to replicate the entire soundfield. Sure, it *can* be done with only 2, but when you factor in room acoustics and other factors, it’s far simpler to have the sound actually come from the general direction of where it originates in the soundfield. You can get “virtual surround” from 2 speakers, but it’s far too dependent on the room acoustics.

Creating binaural audio for movies, you’d set up a recording head in a studio with a crazy surround setup, and use that to mix down to your binaural stereo. For music it would be the same, or record with a binaural head live.

Live binaural recording brings its own problems, you lose the instrument isolation for fine mixing and EQ, and you’re completely dependent on the recording room’s acoustics. It’s generally the only way binaural music is recorded though, and it’s commonly limited to classical or similar instrumental performance for such reasons.

tl;dr, reality isn’t stereo. Reality is a ton of mono sources, just like your N.1 surround system is.

the purpose of the center-speaker in theatres is only to deliver dialogue center-screen, so that audience on the far left/right seats still hear the voice from where they see the actor (and not from the sidespeakers in front of them, while seeing the picture sideways).

it is usually obsolete in home-applications where the viewer is sitting far behind relative to the L-R width, so that the L and R can create a nice “phantom centre” (basic theory of stereo sound reinforcement).

it still gets sold in 5.1 homesystems because there is a market for it, no other reason.

(also, in real 5.1 systems for theatres, the .1 channel is _not_ a subwoofer, as sold for home-applications. in a 5.1 theatre all the channels are full-range, there’s no need for subwoofers. the .1 channel is called LFE (low frequency emitter) and its purpose is to create low-frequency shaking effects (explosions, gunfire, crashes etc.))

i have to stop now, because the half of me that is an audio-engineer wants to rip this topic apart ,-)

LFE stands for low frequency effects, and it’s technically still a sub-woofer (below woofer frequencies) that creates them.

Technically, in Dolby Digital: LFE is a channel that can have information up to 120Hz, which is well within the realm of any speaker that is capable of being invisibly and properly mated with a real subwoofer. It is therefore not (technically) a “sub-woofer” channel.

(80Hz is the common crossover point for making subs blend in, but I myself have a very big head and find that sound is easily pinpointed down to around 70Hz. Please note that neither of these frequencies are the 120Hz that Dolby recommends, and please remember that it is their spec that we follow, for good or for bad.)

Okay, I’m confused. They have some pretty interesting plots of power vs. frequency for each ear… yet the end result appears to be simply frequency independent power vs. angle and delay vs. angle transforms. Speaking from ignorance and I’m sure I’m somehow oversimplifying… but you’d think that time delay and power transforms could be worked out to a pretty decent approximation simply based on distance from the source to the individual ears. At first I was thinking they actually compensated for frequency (just intuitively you can imagine that sounds forward or behind attenuate the frequency spectrum slightly differently based on if the earlobe is in the way, etc)… but it appears they ignore all the frequency results?
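For what it’s worth, the “work it out from geometry” idea has a textbook form: Woodworth’s spherical-head approximation, where the interaural time difference is roughly (a/c)(θ + sin θ) for a head of radius a, speed of sound c, and source angle θ from straight ahead. The sketch below is only that approximation, not anything taken from the team’s measurements. The 8.75 cm head radius is a commonly quoted average and is an assumption here, as are the function names.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius, not a measured value
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in room-temperature air


def itd_seconds(azimuth_deg):
    """Interaural time difference from Woodworth's spherical-head formula.

    azimuth_deg is the source angle from straight ahead, in -180..180.
    Angles behind the head are mirrored to the front, because a bare
    sphere gives the same delay for front and back positions.
    """
    theta = math.radians(abs(azimuth_deg))
    if theta > math.pi / 2:
        theta = math.pi - theta
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


def itd_samples(azimuth_deg, sample_rate_hz):
    """The same delay, rounded to whole samples at the given sample rate."""
    return round(itd_seconds(azimuth_deg) * sample_rate_hz)


for angle in (0, 30, 60, 90, 150):
    print(angle, "deg:", round(itd_seconds(angle) * 1e6), "microseconds")
```

Note that 30° and 150° come out identical. A delay-only model cannot tell front from back, which is one reason frequency-dependent cues from the outer ear matter.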

“Finally, if the angle to be emulated fell within the region where the head acts as somewhat of a lowpass filter, we applied the simple averaging filter to produce the output sample.”

This was under the Software Design section under System Implementation. An averaging filter is a simple type of low pass filter.

Ah, thanks. I didn’t see that part. An averaging filter is a terrible kind of low pass filter (check out its filter response sometime), but it seems to work well enough and the processing power they have is very limited. In other words… a successful hack. :)
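To put a number on “terrible”, here is a small Python sketch of an N-point averaging filter and its magnitude response. The write-up quoted above doesn’t say how many samples the team averaged, so the length of 4 used here is purely an assumption, and the function names are invented for the example.

```python
import cmath
import math


def moving_average(samples, n):
    """N-point averaging filter; samples before the start count as zero."""
    out = []
    for i in range(len(samples)):
        window = samples[max(0, i - n + 1): i + 1]
        out.append(sum(window) / n)
    return out


def magnitude_response(n, freq_fraction):
    """|H(f)| of the N-point average; freq_fraction is f / sample_rate."""
    w = 2 * math.pi * freq_fraction
    # Sum the filter's N equal taps as complex exponentials, then normalize.
    return abs(sum(cmath.exp(-1j * w * k) for k in range(n))) / n


N = 4  # assumed filter length, for illustration only
for frac in (0.0, 0.125, 0.25, 0.375, 0.5):
    gain = magnitude_response(N, frac)
    db = 20 * math.log10(gain) if gain > 1e-12 else float("-inf")
    print(f"f = {frac:.3f} * fs   gain = {gain:.4f}   ({db:.1f} dB)")
```

With four taps the response has a null at a quarter of the sample rate and then bounces back up to about 0.27 (roughly -11 dB) in the stopband, so high frequencies are only mildly suppressed. On the other hand, it costs a few additions and a shift, which is hard to beat on a small microcontroller.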

I thought the same, the frequency dependent differences were the only reason I could think of to do these measurements rather than just working it out. I guess if you’re trying to factor in the differences between behind and in front of the head due to the tissue of the ear, or things like hair blocking sound (which seems kinda pointless…), maybe.

I was also kinda wondering how we are able to differentiate sounds coming from behind or in front of us, or if we’re able to tell differences in height. It doesn’t seem like we’d be able to, other than with visual cues. I could probably look this up but I’m feeling lazy atm.

There is a test sound file towards the last third of the original publication. Also, I won’t say that they simulate stereo: they DO stereo, as the left and right signals are not identical. On the other hand, they simulate the sound source’s position in 2D space (well, 1D actually because they did not simulate a varying distance).

http://en.wikipedia.org/wiki/Head-related_transfer_function

examining the source of their webpage, we see the following quote at the bottom commented out: *We have attempted to give due credit to all those helped in the construction and design elements of our project, and we are releasing the entirety of the source code for our project under the GNU GPL with the hopes that it might be of use to anyone else attempting to construct a similar system.*

I think we’d all love to see this.

I’m glad I’m not the only one regularly looking at the HTML code of random pages I visit.

Binaural != stereo

Been done before. Of course, I couldn’t do it *again*, and they could, so I am impressed nonetheless. Most of the paper went right over my head.

The gem for me was the class D mosfet headphone amp, implemented using only jellybean op-amps and comparators plus discretes, and no specialty ICs. I enjoy stuff like that, and like the way they clearly showed the functional blocks in the schematic. May actually try building it later.

Oh, nice project. I did similar stuff almost 15 years ago in my masters project. Still interesting stuff.