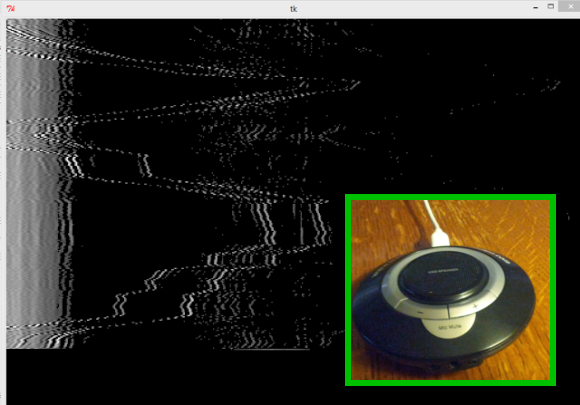

[Jason] just tipped us off about his recent experiment, in which he builds a sonar system using standard audio equipment and a custom Python program. In case some of our readers don’t already know it, sonar is a technique that uses sound propagation to detect objects on or under the surface of the water. It is commonly used on submarines and boats for navigation. [Jason]’s project uses active sonar, which consists of sending short audio bursts (chirps) and listening for echoes: the longer it takes for an echo to return, the farther away the object is. Though his proof of concept is not used underwater, that may change if he continues the project.
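For readers who want numbers: the chirp has to travel to the object and back, so the range is half the round-trip time multiplied by the speed of sound. Here is a minimal Python sketch of that arithmetic, assuming about 343 m/s for sound in room-temperature air and roughly 1500 m/s in sea water; the function name `echo_range` is hypothetical and not taken from [Jason]’s code.

```python
SPEED_OF_SOUND_AIR = 343.0     # m/s, dry air at about 20 C (assumed)
SPEED_OF_SOUND_WATER = 1500.0  # m/s, rough figure for sea water (assumed)

def echo_range(round_trip_s, c=SPEED_OF_SOUND_AIR):
    """Distance to the reflector: the chirp goes out and back, so halve the path."""
    return c * round_trip_s / 2.0

print(echo_range(0.01))                          # ~1.7 m for a 10 ms round trip in air
print(echo_range(0.01, c=SPEED_OF_SOUND_WATER))  # ~7.5 m for the same delay underwater
```

The same delay maps to a range more than four times larger underwater, which is worth keeping in mind if the project ever gets wet.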

[Jason] used the audio editor Audacity to generate a fast frequency sweep (the chirp), and the main Python program relies on the PyAudio library. The exact time of arrival is found by correlating the microphone signal with the transmitted signal. Given that [Jason] uses audible frequencies, we think the final result shown in the video embedded below is quite nice.
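The correlation step can be sketched with NumPy. This is not [Jason]’s program: it skips PyAudio and simulates the microphone signal, and the 2 to 10 kHz linear sweep, 5 ms duration, 44.1 kHz sample rate, and helper names are all assumptions. Correlating the recording against the transmitted chirp (a matched filter) produces a sharp peak at the lag where the echo begins.

```python
import numpy as np

FS = 44100      # sample rate in Hz (assumed: standard sound-card rate)
C_AIR = 343.0   # speed of sound in air, m/s (assumed, ~20 C)

def linear_chirp(f0, f1, duration, fs=FS):
    """Hypothetical helper: sine sweep from f0 to f1 Hz over `duration` seconds."""
    t = np.arange(int(duration * fs)) / fs
    rate = (f1 - f0) / duration                   # sweep rate in Hz per second
    return np.sin(2 * np.pi * (f0 * t + 0.5 * rate * t**2))

def echo_lag(recording, chirp):
    """Hypothetical helper: lag (in samples) where the chirp best matches the recording."""
    # corr[k] = sum over n of recording[k + n] * chirp[n]
    corr = np.correlate(recording, chirp, mode="valid")
    return int(np.argmax(np.abs(corr)))

# Simulated microphone signal: a weak echo starting 500 samples in, buried in noise.
chirp = linear_chirp(2000.0, 10000.0, 0.005)
true_lag = 500
recording = np.zeros(true_lag + len(chirp) + 1000)
recording[true_lag:true_lag + len(chirp)] += 0.2 * chirp
recording += 0.05 * np.random.default_rng(0).standard_normal(len(recording))

lag = echo_lag(recording, chirp)
round_trip_s = lag / FS
print(lag, round_trip_s * C_AIR / 2)   # lag in samples, estimated range in metres
```

A sweep is used rather than a single tone because its autocorrelation has a much narrower peak, which is what makes the arrival time precise. In a real setup the direct speaker-to-microphone pulse and the sound card’s playback latency also appear in the recording and would need to be accounted for.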

Study: echolocation algorithm maps cathedral in 3D to the millimetre:

http://www.wired.co.uk/news/archive/2013-06/18/echolocation-app

Computer scientists have developed an algorithm that uses echolocation to build an accurate 3D replica of any space with just four microphones. … The algorithm was successfully tested in a room where the wall was moved, but more impressively in Lausanne Cathedral’s side portal, which is as intricate as a building comes. The region was accurately mapped in 3D … The idea behind the technology is not to help train us all in echolocation — which researchers believe can be done — but apply it to forensic science, architecture planning and even the consumer space.

Reminds me of using the speaker and mic in a laptop to detect when someone was sitting in front of the machine.

http://www.mccormick.northwestern.edu/news/archives/589

I’ve always wanted to try doing some sound holography. Zuccarelli (http://en.wikipedia.org/wiki/Holophonics) proposed the hypothesis that human hearing is a holographic process. I strongly believe in that idea, except that I disagree that human hearing produces any reference wave. Instead, I believe our ears are shaped to diffract sound waves. The brain can then decode phase differences (and therefore individual wave directions) from the superposition of the diffracted waves.
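The phase-to-direction step in that comment has a standard two-receiver form that does not depend on the holographic hypothesis: for a plane wave arriving at angle theta from broadside, the path difference between receivers spaced d apart is d·sin(theta), so the phase difference is 2πf·d·sin(theta)/c. A minimal Python sketch inverting that relation, assuming a single plane wave, free-field propagation, and d smaller than half a wavelength (otherwise the phase wraps and the answer is ambiguous). The helper name is hypothetical, and the 0.2 m spacing is only a rough stand-in for ear separation.

```python
import math

def arrival_angle(phase_diff_rad, freq_hz, spacing_m, c=343.0):
    """Hypothetical helper: angle from broadside (radians) of a plane wave, given
    the phase difference between two receivers spaced `spacing_m` apart."""
    s = phase_diff_rad * c / (2 * math.pi * freq_hz * spacing_m)   # this is sin(theta)
    if abs(s) > 1.0:
        raise ValueError("phase difference too large for this spacing and frequency")
    return math.asin(s)

# Forward model: a 500 Hz tone arriving 30 degrees off broadside, receivers 0.2 m apart.
f, d, c = 500.0, 0.2, 343.0
dphi = 2 * math.pi * f * d * math.sin(math.radians(30.0)) / c
print(math.degrees(arrival_angle(dphi, f, d, c)))   # recovers ~30 degrees
```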

Dolphins are able to echolocate because their teeth work as a diffraction grating; I believe we perform the same process, but less precisely.

Reminds me of this great hack:

http://hackaday.com/2011/02/17/motion-tracking-prop-from-alien-movie/

Are you trying to imply that sonar only works effectively on or underwater? If so, then most ultrasonic sensor systems (such as in robotic navigation) would beg to differ.

Well, to be pedantic, sonar is only underwater; in the air it’s echolocation, and in space it’s ineffective.

Actually, SONAR (it’s an acronym: SOund Navigation And Ranging) is a process/device built and used by humans, in air or water, to duplicate the echolocation observed in certain animals (again, in either air or water, depending on the species).

In space, no one can hear you ping.

New ultra-high-def soundcard?

“Therefore, 44100 Mhz should be able to perfectly reproduce the signal up to 22050 Mhz, which I figured was the limit of my laptop’s audio hardware.”

Great project!

Nice piece of work. One thing: you must sample at greater than the Nyquist rate. If you look at a plot of sin(t), you will see that sampling at exactly the Nyquist rate gives you samples of constant magnitude that only alternate in sign, and the output will be zero if the sampling and the signal have no phase difference. This is a common error, probably because it is stated incorrectly in so many textbooks. Wikipedia has it right (http://en.wikipedia.org/wiki/Nyquist_rate). You need to band-limit the signal to below half the sample rate and sample at greater than the Nyquist rate, a lot greater unless you want a slowly varying result. Eight times faster is a good place to start.
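That point is easy to check numerically. Sampling sin(2πft + phi) at exactly fs = 2f lands on t = n/(2f), which gives sin(πn + phi) = (-1)^n · sin(phi): a fixed magnitude with alternating sign that depends entirely on the phase, and is zero when phi = 0. A small pure-Python sketch (the helper name is illustrative, not from any library):

```python
import math

def sample_sine(freq_hz, fs_hz, phase_rad, n_samples):
    """Samples of sin(2*pi*f*t + phase) taken at t = n / fs (illustrative helper)."""
    return [math.sin(2 * math.pi * freq_hz * n / fs_hz + phase_rad) for n in range(n_samples)]

f = 1000.0
# Exactly at the Nyquist rate (fs = 2f): each sample equals (-1)**n * sin(phase).
print(sample_sine(f, 2 * f, 0.0, 6))            # all ~0: the tone vanishes entirely
print(sample_sine(f, 2 * f, math.pi / 2, 6))    # +1, -1, +1, ...: amplitude depends on phase
# Well above it (fs = 8f), the shape of the waveform is clearly visible.
print([round(x, 3) for x in sample_sine(f, 8 * f, 0.0, 8)])
```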

Take a look at synthetic aperture methods. You can get resolution that is independent of range – with a lot more computing. If you find an affordable way to chirp in water with reasonable power, I would love to hear about it. I have been trying various things for years.
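To put rough numbers on the range-independence claim: a real aperture of width D has a beamwidth of about wavelength/D, so its cross-range resolution grows with range R as R·wavelength/D, while the textbook stripmap synthetic-aperture figure is about D/2 regardless of range. The sketch below only evaluates those rule-of-thumb formulas; the 40 kHz transducer, 2 cm aperture, and example ranges are assumed values, and a real synthetic-aperture sonar also has to deal with platform motion and element spacing.

```python
def real_aperture_resolution(range_m, wavelength_m, aperture_m):
    """Rule of thumb: beamwidth (~ wavelength / aperture) times range."""
    return range_m * wavelength_m / aperture_m

def synthetic_aperture_resolution(aperture_m):
    """Rule of thumb for stripmap synthetic aperture: about half the physical aperture."""
    return aperture_m / 2.0

wavelength = 343.0 / 40000.0   # 40 kHz ultrasonic transducer in air (assumed)
D = 0.02                       # 2 cm transducer aperture (assumed)
for R in (1.0, 5.0, 20.0):
    # range, real-aperture resolution, synthetic-aperture resolution (all in metres)
    print(R, real_aperture_resolution(R, wavelength, D), synthetic_aperture_resolution(D))
```

With those values the real aperture smears targets over roughly 2 m at 5 m range, while the synthetic-aperture figure stays at 1 cm; the price is the extra computing the comment mentions.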