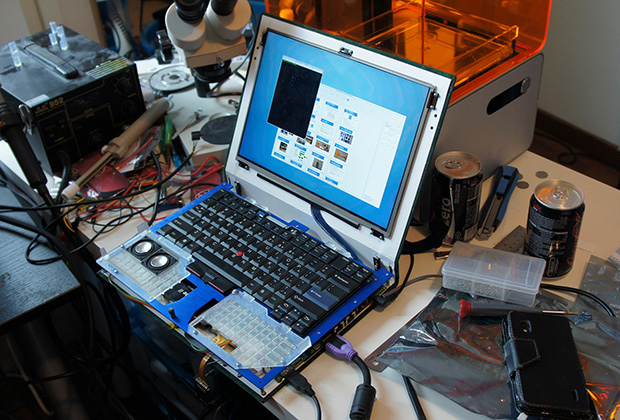

Just over a year ago, [Bunnie Huang] announced he was working on a very ambitious personal project: a completely open source laptop. Now, with help from his hardware hacker compatriot [xobs], this laptop, named Novena, is nearly complete.

Before setting out on this project, [Bunnie] had some must-have requirements for the design. Most importantly, all the components should be free of NDA encumbrances. This isn’t an easy task; an SoC vendor that leaves its documentation freely available on its servers is as rare as hen’s teeth, and Freescale was the only vendor that fit the bill. Second, the entire laptop should be open source. [Bunnie] wasn’t able to find an open source GPU, so using hardware video decoding on his laptop requires a binary blob. Software decoding works just fine, though.

Furthermore, this laptop is designed for both security and hardware hacking. Two Ethernet ports (one 1Gbit and the second 100Mbit), a USB OTG port, and a Spartan 6 FPGA put this laptop in a class all by itself. The main board includes 8x analog inputs, 8x digital I/O ports, 8 PWM pins, and a Raspberry Pi-compatible header for some real hardware hackery.

As for the specs of the laptop, they’re respectable for a high-end tablet. The CPU is a Freescale i.MX6, a quad-core ARM Cortex-A9 running at 1.2 GHz. The RAM is upgradeable to 4GB, an internal SATA-II port will easily accommodate a huge SSD, and an LCD adapter board lets it drive the 13-inch 2560×1700 LED panel [Bunnie] is using. The power system is intended to be modular, with batteries provided by run-of-the-mill RC LiPo packs. For complete specs, check out the wiki.

Despite the high price and relatively low performance (compared to an i7 laptop) of [Bunnie]’s laptop, there has been a lot of interest in spinning a few thousand boards and sending them off to be pick-and-placed. There’s going to be a crowdfunding campaign for Novena sometime in late February or March, based around an “all-in-one PC with a battery” form factor. There’s no exact figure yet on what a Novena will cost, but it goes without saying that a lot will be sold regardless.

If you want the latest updates, the best place to go is the official Novena Twitter account: @novenakosagi

What price will the first run be?

I should have read the damn post before replying, but I saw the pic and instantly needed one.

Is that a ring binder being used as the case? That alone makes it worthy of Hackaday.

Yeah, this is so cool!

I’m having a “Why didn’t I think of that” moment right now.

There is a blog post from July last year that indicates the RPi header and low-speed analog I/O have been replaced with a high-speed header, a 2GBit DDR3 buffer, and a faster FPGA. The wiki is out of date.

“Two Ethernet ports (one 1Gbit and the second 100Mbit), a USB OTG port, and a Spartan 6 FPGA put this laptop in a class all by itself. The main board includes 8x analog inputs, 8x digital I/O ports, 8 PWM pins, and a Raspberry Pi-compatible header for some real hardware hackery.”

I want this…

Not entirely sure it does put it in a class by itself; pretty sure I’ve seen an FPGA strapped to an ARM before, and an OTG port is nothing new.

It’s good to see that he’s got the project this far (didn’t doubt it for a moment); however, I’m not entirely convinced of the overall usefulness of a (mostly) open laptop. It was very interesting to note that he’s hit the same issue as every other board manufacturer out there: the GPU binary blob, which is probably the loudest complaint from people buying dev boards. Personally, I think the fact that the GPUs are all closed source isn’t the issue; the issue is access to up-to-date blobs for modern kernels.

“pretty sure I’ve seen fpga strapped to an arm before, otg port is nothing new”

probably here:

http://www.parallella.org/board/

It also has USB OTG.

Xilinx sells a product called Zynq, which is essentially a pair of ARM Cortex-A9 processors coupled with an FPGA, all in one package.

GPU blobs are a bummer, but by no means a dealbreaker in this case. As bunnie mentioned, the i.MX6 can run the console and a software framebuffer completely open source.
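For a sense of what a pure software framebuffer has to push around, here is a back-of-the-envelope sketch for the 13-inch 2560×1700 panel mentioned in the article. The 32-bit pixel format and the 60 Hz refresh rate are assumptions for illustration, not published Novena figures.

```python
# Rough memory and bandwidth cost of a plain software framebuffer for the
# 2560x1700 panel from the article. Pixel format and refresh rate are
# ASSUMPTIONS for illustration, not Novena specifications.

WIDTH, HEIGHT = 2560, 1700  # panel resolution from the article
BYTES_PER_PIXEL = 4         # assumed 32-bit XRGB8888 pixels
REFRESH_HZ = 60             # assumed refresh rate


def framebuffer_bytes(width: int, height: int, bpp: int) -> int:
    """Size in bytes of one full frame."""
    return width * height * bpp


def scanout_bytes_per_second(frame_bytes: int, refresh_hz: int) -> int:
    """Bytes the display controller must read per second to refresh the panel."""
    return frame_bytes * refresh_hz


frame = framebuffer_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)
scanout = scanout_bytes_per_second(frame, REFRESH_HZ)
print(f"one frame: {frame / 2**20:.1f} MiB")
print(f"scanout at {REFRESH_HZ} Hz: {scanout / 1e9:.2f} GB/s")
```

Under those assumptions one frame is about 16.6 MiB, and scanout alone reads roughly 1.04 GB/s from RAM before any software rendering or decoding touches a pixel, which hints at why hardware acceleration still matters for full-screen video.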

For those that are less militant and like having decent graphics performance, Freescale has some of the best Linux BSP support of the common ARM SoCs out there, and there are blobs for their 3.0.35 and 3.10.9 kernels that generally work well (some caveats with Xorg versions and Xrandr features). Looks like xobs has a stub for compiling in the interfaces for the Novena kernel: https://github.com/xobs/gpu-viv and they’ve tested up to 3.11, which isn’t bad.

Alongside its Vivante GPUs, the i.MX6 has some sweet VPU functionality that can be accessed via GStreamer (which is much pickier about the versions being used). The reality of working with ARM SoCs is that a lot of the time you’re going to be at the mercy of the BSPs, but the i.MX6 is … well, the best of a bad bunch at least.

Bunnie, couldn’t you just buy the patent from ATI or Nvidia and then open the source?

Buying patents from either of those would be mind-numbingly expensive, and clean-room reverse engineering it all would take a lifetime.

In general, forget everything about x86-based processors if you want anything truly open.

I think you mean license a patent. Anyway, patents get you no closer to having an open source driver.

I’m pretty sure I’ve seen a few attempts at open source FPGA-based video cards that were OpenGL compliant. Maybe one of those?

I had a university class where the semester project was a complete OpenGL video card on a Xilinx Virtex-5 FPGA. It ran over an Ethernet port and could play Quake at a few FPS. Not a high performance unit by any means, but neat to see what you can do with programmable logic.

Actually all the code was on Google Code, lemme see if I can dredge up the link.

Hm, very cool! Can’t help wondering why you didn’t at least do PCI, though. You can get free code modules for that. Then again, if it supported ordinary 2D graphics too, you’ve got half a remote workstation, which is kinda clever.

The repository is here: http://code.google.com/p/cpre480x/ … unfortunately much of the documentation was on the (private) class website and I didn’t make a backup copy before I lost access.

I suspect the reason for Ethernet was partly ease of implementation and partly keeping the development boards outside the host machines… not only were they too tall to fit in the case (I think they were Digilent XUPV5 boards, or at least similar), but we were (rarely) required to physically interact with them. As for ease of implementation, the only available interface was PCI Express, which would have required extra complexity and configuration area on the FPGA as well as much more complex drivers on the host.

Those GPUs are rather different from mobile ones (exception: Tegra K1 uses Kepler cores), so not so useful. Besides, if you were to try to buy them, they are valued in the millions or billions of dollars.

“couldn’t you just buy the patent from ATI or Nvidia and then open the source?”

If he had that much money, he could just go out and buy his very own laptop factory.

Holy Frankenstein…

Given the goals of the design, why isn’t it using an SoC FPGA from either Altera or Xilinx? Then one would have two ARM Cortex-A9 processors and space to add open source graphics hardware.

I know he’s a clever guy and he gets a lot of love, but he doesn’t half build some impractical things.

Why would that be impractical? Creating a 2D core is trivial and that is enough for most things.

Speed-wise, Xilinx claims up to 1GHz ARM cores, which should be enough, and if not, I don’t think 1.2GHz would be any better.

” Creating a 2D core is trivial and that is enough for most things.”

If you are playing Nintendo games, maybe.

If you want to play full-screen compressed video, not so much.

I was talking about Bunnie, not you. Yeah your idea’s probably a good one. Although his design has 4 cores, if that matters.

There are many reasons:

– cost: the i.MX6 CPU plus a Spartan-6 LX9 FPGA is cheaper than the large Zynq parts with an integrated CPU (which is only available in the larger parts, as I recall)

– time to market: the i.MX6 comes with many things ready, like GPU, SATA and MIPI, is a powerful quad core, and has Linux firmware mostly ready. It would take quite a lot of time to write that logic yourself for an FPGA and then do the Linux drivers

– power: if you implemented the IO functions/GPU/… in your FPGA, you would need a large FPGA, and this would waste a lot of power. A custom-made chip uses much less power if it does the job you want done.

Overall this is a really nice project! I’d love to see some updated documentation on BOM, cost, battery runtime (does it run on battery for a week as intended?)…

I suppose people would put up with a closed-source graphics chip, as long as it supported OpenGL or whatever standard. It’s not certain that one generation of GPUs will run software from previous ones anyway. Nobody’s supposed to write directly to graphics chips, that’s the whole reason the tech’s been able to advance without being locked in a compatibility trap like x86 CPUs have. You’re not supposed to care about the silicon, just the library. Thank the gods for OpenGL.

So in that case I dunno if people would mind too much having a blob driver for a GPU. Then again who’s he aiming this thing at anyway? Being as ideologically “pure” as he can be isn’t worth much in real cash to me. Proving he can do it for 4x the money isn’t much good either.

I wonder what the point is? But I know there’s a lot of geeks and scientists and engineers and the like who are well-paid and like grown-up toys. Perhaps they’re the market. As opposed to just sticking Linux on a netbook, or one of those Chinese Android $80 laptops.

Actually when I looked at the battery board and it said PANEL METER driver, I admit I moistened a little. All laptops should have panel meters, probably several. Including the edge-on horizontal ones, on the small front surface of the machine. And then several more next to the screen, and a few by the keyboard. For monitoring. And there’s plenty of space on laptop keyboards nowadays, since the screens got so big. Nobody needs a num pad anyway, or at least not alongside the arrow / pgup etc block.

Hell, I’d sacrifice the space bar for a few more panel meters. Just needs a few banana-plug sockets and an electric bell now, to let you know if the Jacob’s Ladder overheats.

Some of the reasons for this build include (IMO):

-building a laptop from freaking scratch (Duh!)

-Owning what is probably the most secure – as in least-likely spied upon – laptop in the world.

-Some people do hold openness as a valuable quality, whether for moral reasons, or a practical realisation that at some point they very well may want to modify some low level piece of firmware. I recently bought [this wireless card](https://www.thinkpenguin.com/gnu-linux/penguin-wireless-n-half-height-mini-pcie-card) because my default wireless card made it a pain in the ass to use certain GNU-Linux distributions I wanted to use. I’m a bit of a distro hopper.

-seeing people’s reactions at a coffee shop when they look up from their pristine milled aluminum i-thing at your box full of computational manliness.

Those nipple mice were one of the worst pointing devices ever invented.

I disagree, I loved mine before I replaced the laptop that had it. It was nice to be able to navigate without moving my wrist around, and for that reason it was also probably less likely to cause carpal tunnel, etc.

You’ve decided to use the mouse as your straw man while neglecting the trackpad.

Those pads are much worse than mice. That’s why laptop owners buy mice, but nobody buys the USB trackpads for PCs. And my IBM ThinkPad’s nipple was a joy, the best pointer-moving device I ever had. Takes a few days maybe to get the knack, but then as Mike says, no wrist movement, just the tiniest movement of the index finger. I wish PC keyboards came with them by default, or at least inexpensively.

Maybe I’ll treat myself to one of the expensive ones, the amount of mousing I do it’s prob worth it.

“Those pads are much worse than mice.”

Well if you put your LAPTOP in your LAP then WHERE is the mouse supposed to go?

On a TABLE I suppose, genius.

You have a table in your lap? Genius

No, but there’s one in my house, and in most buildings. If I didn’t have one, I’d probably just use the horrible pad thing, in the absence of a nipple, which is all I ever used on my old ThinkPad. But there’s usually enough tables about for a mouse; s’why people buy them.

> Then again who’s he aiming this thing at anyway?

He was always aiming it at himself, exclusively. It was only fairly far into the process that, based on feedback and entreaties, Bunnie even started considering making them available to others. And the great news is, if you dislike choices he’s made, you can always fork the project..!

I’m in the minority in that I wish he’d left out the closed GPU entirely.

The joys of open source hardware and the maker revolution: don’t like it, change it or make your own.

The GPU seems to be optionally enabled. There is the ability to do software decoding with full open source code, or hardware decoding that requires the binary blob. Avoid the blob, avoid the GPU.

Fair enough, if people want it. In a situation like that tho I’d want the money first!

“an internal SATA-II port will easily accommodate a huge SSD”

Huh? Why would anyone waste an SSD on a SATA-II port?

Because an SSD on a SATA-II port is easily three or four times as fast as a notebook-grade rotational drive on a SATA-II port, and draws a tenth of the power?
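The arithmetic behind that answer, as a rough sketch: SATA-II signals at 3 Gbit/s and uses 8b/10b line coding, so its payload ceiling works out to 300 MB/s. The 100 MB/s notebook-drive figure below is an assumed typical sequential rate, not a measurement.

```python
# Payload ceiling of a SATA-II link versus an ASSUMED notebook hard drive.
# SATA-II runs at 3 Gbit/s on the wire; 8b/10b coding means only 8 of every
# 10 transmitted bits carry data.

SATA2_LINE_RATE_BPS = 3_000_000_000     # raw line rate, bits per second
ASSUMED_HDD_BYTES_PER_S = 100_000_000   # hypothetical 100 MB/s notebook HDD


def usable_bytes_per_s(line_rate_bps: int) -> int:
    """Payload bytes per second after removing 8b/10b coding overhead."""
    payload_bits = line_rate_bps * 8 // 10  # 8 data bits per 10 line bits
    return payload_bits // 8                # bits -> bytes


sata2 = usable_bytes_per_s(SATA2_LINE_RATE_BPS)
print(f"SATA-II ceiling: {sata2 / 1e6:.0f} MB/s")
print(f"vs. assumed HDD: {sata2 / ASSUMED_HDD_BYTES_PER_S:.1f}x")
```

With the assumed 100 MB/s drive the ratio is 3.0x; a slower 75 MB/s drive would make it 4.0x, which lines up with the "three or four times" estimate even before counting the SSD's lack of seek latency.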

I think vonskippy wants a SATA3 interface.

Why is it a waste to upgrade an older computer?

Been following this via Bunnie’s blog, but I never understood the drive for open source hardware drivers when he’s tying himself to the terrible, completely closed and buggy Xilinx tools. And worse, they’re x86 only (64-bit for Spartan-6 I think) so synthesizing stuff for it locally is just not going to be practical.

I used to carry a small Spartan-3 board in the bag with my old Asus EEE for hacking on. Once I upgraded it to 2GB of RAM, building FPGA images in the field was a practical proposition. Obviously, host bandwidth was limited to the 40MB/s or so you get with USB 2, though.

That’s actually kind of a big deal, now you mention it. A lovely FPGA in a machine that can’t program it! Not completely, at least.

Maybe Xilinx’s next-gen tools could be Java based; they could run it from their website, which I’m sure would provide the same maniacal feeling of control. I suppose a quad-core ARM could emulate a PC well enough to run Windows a little, if you can use ARM tools to do most of the work and just leave the final synthesis to “Windows”. XP’s a lot faster than its successors, if Xilinx tools still support it.

Well, yes. Actually, ISE runs under Linux too, so theoretically QEMU would be viable. I don’t know how well a 64-bit x86 could be emulated by a 32-bit ARM, though. Speed would be an issue, I guess.

Both Xilinx and Altera were talking about P&R as a service about a decade ago, but it turned out no one wanted to trust their IP to a web service. Nothing to stop you setting up your own internet server with the Xilinx tools, but then you have the hassle of synchronisation. And bandwidth issues – FPGA image files can be pretty big.

What’s the point of having two speakers for stereo sound when they’re placed right next to each other?

As for closed and NDA wrapped GPUs, are there any older designs that might be available and portable to an FPGA? Perhaps from defunct companies? Ask Micron if they’d give up the Rendition designs they bought then did absolutely nothing with. That was back in 1998. Rendition’s GPUs had some features superior to what nVidia and ATi and 3Dfx’s chips were doing, and their 3rd generation was looking like it would stomp all over the competition – except for a pesky lack of funds to put it into production. Micron bought Rendition, added a sub-site for it to the Micron site… then nothing more ever happened, not even full OpenGL support for the second generation chip – which Micron had said they’d get right on to finishing.

If the Rendition GPU designs were made open source, that would be a base to start from to build a GPU capable of handling higher resolutions and more polygons etc.

“What’s the point of having two speakers for stereo sound when they’re placed right next to each other?”

The developer can make sure that the stereo functionality actually works before committing the design.

DREAM ON about opening up old proprietary code. This stuff was written under contract by third party consultants who would rather poison their own children than give up their lucrative careers.

You could in principle implement OpenGL functions in an FPGA designed from scratch. The specs are open; it would just need a hell of a lot of effort for the hardware and driver design. It wouldn’t be much compared to the competition, which is full-custom silicon. It depends on need; you might find it’s as easy to get one of the Cortex’s cores to do the rendering, for comparable performance and without the horrendous effort of developing an open-source graphics chip.

Even if you did develop it, it’d be expensive, but worst of all, obsolete in 6 months. You need constant development to keep up. Even using an FPGA you’d need a team comparable to Nvidia’s, I’d guess.

Even as FPGAs get better, graphics chips soar ahead of them; it might never be practical, except for certain specialised functions.

For the other point, I dunno that Micron would happily give away something they paid for, just on principle. Perhaps it’ll find its way into some phone chip of the future. And 1998 isn’t even the Stone Age, graphics-card wise. More like early Big Bang.

Still, a look around the web says that OpenGL ES has been put on an FPGA, meant for in-car use and consumer stuff. Still, if you really want good graphics for games, buy a PlayStation.

“You could in principle implement OpenGL functions in an FPGA designed from scratch. ”

Only if that principle happens to involve human beings with lifespans measured in centuries

No, you’d use several humans in parallel.

Note a few dozen posts above: I had a university class that did exactly that (implement OpenGL on an FPGA). It’s very doable (i.e. has been done), and if I recall correctly it certainly didn’t take more than a year or so. It’s just not very fast, because let’s face it, a GPU is a pretty specialized piece of hardware and it’s hard for run-of-the-mill programmable logic to get anywhere close to it.

How much of it ended up in software, on an FPGA-CPU? Did you use Microblaze or NIOS or whatever? Or a custom thing? And how much implemented direct in hardware? Did you use parallel identical units much for speed? Was the point more of getting it working than fast?

It was full hardware, no emulated CPU included. Have a look at the project repository, I posted the link above. Kind of a cheat though I guess, as it did involve a mod to OpenGL to get it to spit the data out an Ethernet port.

It’s been a few years and I’d have to look back at the design, but I believe there was only one execution unit in the final product. It was an exercise in design of graphics processing hardware, more a proof of concept than anything. But it did work, and it did handle at least the more basic OpenGL features, so conceptually it was a success. The early days of the class did have us experimenting with parallel processing, but at the end of the day even a Virtex5 with a billion transistors doesn’t really have enough guts to approximate a proper GPU.

I think the research team that wrote the backend may have continued to work on it in the years after I took the course. They’re offering it again this semester so I wonder how much better it runs now. At the time they were frantically trying to get all of Quake’s required OpenGL calls to work – there were still some texture and shading issues that were not yet fixed.

This has potential, but as of now I would never use one. First, the screen has no hinges; you would need to prop it up to keep it from falling backwards. Second, the case has no edges, so sand, dust and rain can easily get on the motherboard. Sure, you can take it apart to clean it, but why not just get a laptop with an actual case? Not everything has to look like a L337 h4x0r uses it. And two speakers right next to each other would get annoying when they’re right by your left ear and nowhere near your right ear.

“[Bunnie] wasn’t able to find an open source GPU”

Guess Intel’s were out of the question?

Actually, THERE IS an open source Vivante driver, and it does run Quake:

https://blog.visucore.com/

https://github.com/laanwj/etna_viv

https://www.youtube.com/watch?v=kkpowIf5edU