The Raspberry Pi has a port for a camera connector, allowing it to capture 1080p video and stream it to a network without having to deal with the craziness of webcams and the improbability of capturing 1080p video over USB. The Raspberry Pi compute module is a little more advanced; it breaks out two camera connectors, theoretically giving the Raspberry Pi stereo vision and depth mapping. [David Barker] put a compute module and two cameras together, making this build a reality.

The use of stereo vision for computer vision and robotics research has been around much longer than other methods of depth mapping like a repurposed Kinect, but so far the hardware to do this has been a little hard to come by. You need two cameras, obviously, but the software techniques are well understood in the relevant literature.

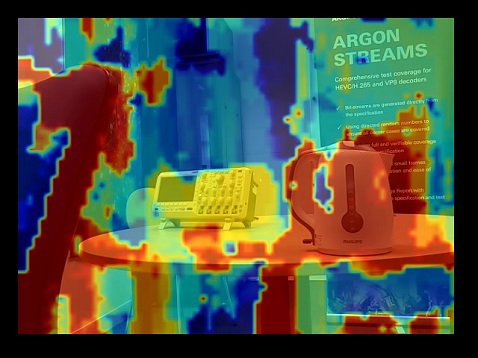

[David] connected two cameras to a Pi compute module and implemented three different versions of the software techniques: one in Python and NumPy, running on a 3GHz x86 box; a version in C, running on x86 and the Pi’s ARM core; and another in assembler for the VideoCore on the Pi. Assembly is the way to go here – on the x86 platform, Python could do the parallax computations in 63 seconds, and C could manage it in 56 milliseconds. On the Pi, C took 1 second, and the VideoCore took 90 milliseconds. This translates to a frame rate of about 12FPS on the Pi, more than enough for some very, very interesting robotics work.
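For readers who haven’t seen one, a parallax (disparity) computation of the kind described above can be sketched in a few lines of NumPy. This is not [David]’s code. It is a minimal block-matching sketch that assumes two rectified grayscale images of the same size, and the function names `box_sum` and `block_match_disparity`, the 5-pixel window, and the 16-pixel search range are our own illustrative choices.

```python
import numpy as np


def box_sum(img, half):
    """Sum each pixel's (2*half+1) x (2*half+1) neighbourhood, replicating edges."""
    h, w = img.shape
    padded = np.pad(img, half, mode="edge")
    total = np.zeros_like(img)
    for dy in range(2 * half + 1):
        for dx in range(2 * half + 1):
            total += padded[dy:dy + h, dx:dx + w]
    return total


def block_match_disparity(left, right, max_disparity=16, block=5):
    """Per-pixel disparity by sum-of-absolute-differences (SAD) block matching.

    Assumes rectified images, so a point at left[y, x] appears at
    right[y, x - d] for some disparity d >= 0 (illustrative sketch only).
    """
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    h, w = left.shape
    half = block // 2
    best_cost = np.full((h, w), np.inf)
    disparity = np.zeros((h, w), dtype=np.int32)

    for d in range(max_disparity + 1):
        # Shift the right image d pixels to the right so that
        # shifted[y, x] == right[y, x - d]; pad the exposed columns.
        shifted = np.empty_like(right)
        shifted[:, d:] = right[:, :w - d]
        if d > 0:
            shifted[:, :d] = right[:, :1]
        # Matching cost: absolute difference summed over the block window.
        cost = box_sum(np.abs(left - shifted), half)
        # Keep the candidate disparity with the lowest cost seen so far.
        better = cost < best_cost
        best_cost[better] = cost[better]
        disparity[better] = d
    return disparity
```

Feeding it a random image as `left` and a copy shifted four pixels to the left as `right` should give a disparity of 4 away from the image edges. Every pixel is compared against every candidate shift, which gives a feel for why this workload rewards a parallel processor like the VideoCore.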

There are some better pictures of what this setup can do over on the Raspi blog. We couldn’t find a link to the software that made this possible, so if anyone has a link, drop it in the comments.

Nice to see they’ve actually got both cameras running. The 2nd cam input was totally useless until now. It’s a nice demo but the time to process the image is really impractical.

haha you sucked, author of this was an intern at a company with a Broadcom NDA, NOT A SINGLE byte of source code will ever see the light of day

^^^^^sucker

He’s unfortunately right. I was originally a critic of the Raspberry Pi before falling in love with mine, however I’ve come to terms with the fact that if I want GPU number crunching I’m going to have to look at a more modern ARM chip because Broadcom is not going to swoop in to save the day wearing a cape. Their policy on this issue has had enough time and public pressure to crystallize into its final state.

I would be thrilled if I was wrong but let’s get serious here: the Raspberry Pi is already obsolete as a field-able product in any arena where GHz/$ is a selling point.

It’s training wheels for people getting started in developing for Cortex A15 or whatever. Beyond that, it’s just reference hardware you use to prove something has made the jump from x86/x64 to ARM. Even for that purpose, I’ve started to move to the Samsung Chromebook Crouton community (which actually has working OpenCL accelerated GPU drivers).

The Raspberry Pi still fills a huge niche in the disposable 32 bit HDMI/RCA Video out game. For everything else: Qualcomm is putting their money where their mouth is with the Freedreno project, and NVIDIA continues to focus on their core product (CUDA support for developers) with the Tegra K1. This is where the action is for people who are serious business and don’t have time or patience for Broadcom’s VideoCore nonsense. You’re better off waiting for the Jetson TK1 to hit $70/board than you are crossing your fingers you’ll ever get serious number crunching done on the Raspberry Pi.

Even as a High Performance Computing playpen, you have to ask what benefit a fleet of Raspberry Pis offers that you can’t get more cost-effectively from a bunch of virtual machines.

The whole point is for education – not the latest and greatest hardware.

It is even more than that. They’re very familiar with the VideoCore architecture as they’re part of the original team that designed it in the first place when they were Alphamosaic.

He only used openly available tools to do this. If you read the comments on the Raspberry Pi blog article, someone from the company offers information on how to do this yourself, and provides links to tools and documentation and his email address to offer assistance.

hahaha yes

and an intern at Intel used only openly available tools to rewrite microcode …

Actually, for this application you should be able to manage purely with the reverse engineered VideoCore docs. It is basically a kernel that is run on the image; how to do this is known (there even is a simple alpha blend hello world thing somewhere).

“The use of stereo vision for computer vision and robotics research has been around much longer than other methods of depth mapping like a repurposed Kinect, but so far the hardware to do this has been a little hard to come by.”

A PC motherboard and two webcams aren’t exactly hard to come by. Haven’t been for at least a decade. A Pi will take up less space and use less power, but that has nothing to do with the availability of capable hardware. Even going back two decades, you had robots doing this with analog cameras and video digitizer cards, the latter being somewhat harder to come by… so your statement is about 20 years out of date. ;)

But comparing results from 20 years ago, and more current examples including the sample depth map posted here, it seems that the accuracy of the algorithms for reconstructing depth from stereo images hasn’t improved much. So I wonder, is this even still a relevant technique? Or has it been supplanted by Kinects, single camera setups with structure-from-motion algorithms, etc?

good luck with this setup, I tried it once…

even if you manage to get both working at the same time (which is quite hard with Windows, if both have the same hardware-ID) the performance was not the yellow from the egg :/

No kidding it hasn’t improved. That depth map sucks and it’s basically useless for any modern robotics application.

The only way I could see it being useful is if it produced depth maps at 12fps; then the composite data over time could give you a pretty good understanding of the area around you. Not sure of the accuracy, but by combining the data you can significantly improve on the accuracy of a single measurement.
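A rough sketch of that idea, assuming a static scene, a stationary camera, and independent zero-mean noise in each depth map (none of which the demo guarantees). `fuse_depth_frames` is a hypothetical helper for illustration, not part of the project, and marking invalid pixels with NaN is likewise an assumption:

```python
import numpy as np


def fuse_depth_frames(frames):
    """Average a sequence of depth maps pixel by pixel.

    Pixels marked invalid with NaN in some frames are ignored for those
    frames (an assumed convention; the real output format is unknown).
    """
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])
    return np.nanmean(stack, axis=0)


def rms_error(estimate, truth):
    """Root-mean-square difference between an estimate and the true depth."""
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))


if __name__ == "__main__":
    # Simulated data only: a flat wall 2 m away with made-up sensor noise.
    rng = np.random.default_rng(1)
    truth = np.full((40, 40), 2.0)
    frames = [truth + rng.normal(0.0, 0.2, truth.shape) for _ in range(12)]

    fused = fuse_depth_frames(frames)
    print("single frame RMS error:", rms_error(frames[0], truth))
    print("12-frame fused RMS error:", rms_error(fused, truth))
```

Under those assumptions the error of the average falls roughly with the square root of the number of frames, so a second’s worth of frames at 12fps would bring the noise down to a bit under a third of a single measurement. A moving camera would first need the frames registered to each other, which this sketch does not attempt.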

Depth processing be damned, can a Pi compute module produce a 3D (stereo) H.264 stream from the two cameras and cram it down a network socket? If so, that would be a Most Excellent telepresence tool. Think of flying a quadcopter with stereo vision and playback on a 3D HDTV, Oculus headset or similar.

Augmented Reality too if the rpi has any time left over for object tracking in realtime, but I think slightly more-grunty hardware would be required.

From what I understand, stereo vision is by far the most processor intensive method of capturing 3D data. I don’t think a RPi would be able to do anything else whilst running this software. However, if you could turn this into an embedded system and create some form of data output much like the Kinect, well, that would be VERY useful for my work.

Some background from the inside. These were some of the motivations for this project:

– to explore stereo depth algorithms and their practical implementation on embedded platforms with limited resources

– to investigate the capabilities and limitations of the new Raspberry Pi Compute Module – in particular, how well can it handle two camera inputs

– to give David, our intern, some experience of the trials and tribulations of real project work

All of this is against a background of some much bigger R&D and customer projects in related areas such as 3D mapping using SLAM techniques, and depth-dependent video stitching – we were not trying to come up with the finished article on this particular project. Some related links:

http://www.argondesign.com/case-studies/2014/oct/21/stereo-depth-perception-raspberry-pi/

http://www.argondesign.com/case-studies/2014/feb/10/3d-image-processing/

http://www.raspberrypi.org/real-time-depth-perception-with-the-compute-module/ (particularly read the comments and links from our CTO)