Some guys build hot rods in their garage. Some guys overclock their PCs to ridiculously high clock frequencies (ahem… we might occasionally be guilty of this). [Nerd Ralph] decided to push an ATtiny13A to over twice its rated frequency.

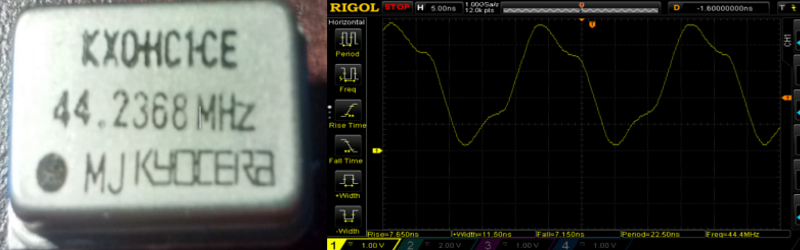

It didn’t seem very difficult. [Ralph] used a 44.2 MHz can oscillator and set the device to use an external clock. He tested with a bit-banged UART and it worked as long as he kept the supply voltage at 5V. He also talks about some other ways to hack out an external oscillator to get higher than stock frequencies.
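For a feel of the timing budget a bit-banged UART gets at this clock, here is a rough sketch. The 115200 baud rate is an assumption for illustration only; the article does not say which rate [Ralph] used.

```python
# Rough timing budget for a bit-banged UART on an externally clocked AVR.
F_CPU_HZ = 44_200_000   # can oscillator frequency from the article
BAUD = 115_200          # ASSUMED baud rate, not stated in the article


def cycles_per_bit(f_cpu_hz: int, baud: int) -> float:
    """CPU cycles available to shift out one UART bit."""
    return f_cpu_hz / baud


def baud_error_percent(f_cpu_hz: int, baud: int) -> float:
    """Baud error when the bit time is rounded to a whole number of cycles."""
    whole_cycles = round(cycles_per_bit(f_cpu_hz, baud))
    actual_baud = f_cpu_hz / whole_cycles
    return (actual_baud - baud) / baud * 100.0


if __name__ == "__main__":
    print(f"cycles per bit: {cycles_per_bit(F_CPU_HZ, BAUD):.2f}")
    print(f"baud error:     {baud_error_percent(F_CPU_HZ, BAUD):+.3f} %")
```

With hundreds of cycles per bit, the UART itself is unlikely to be the part that struggles, so a passing UART test says little about the rest of the chip.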

We wouldn’t suggest depending on an overclock on an important or commercial project. There could be long-term effects or subtle issues. Naturally, you can’t depend on every part working the same at an untested frequency, either. But we’d be really interested in hearing how you would test this overclocked chip for adverse effects.
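One possible approach, sketched here under loud assumptions: have the chip stream a known pseudo-random sequence over its UART and check every byte on the host. The function names and the choice of a 16-bit Galois LFSR are hypothetical and not taken from [Ralph]'s post; the firmware would need to run the same LFSR with the same seed.

```python
from itertools import islice
from typing import Iterator


def lfsr16(seed: int = 0xACE1) -> Iterator[int]:
    """16-bit Galois LFSR (taps 0xB400); yields the low byte of each state."""
    state = seed & 0xFFFF
    if state == 0:
        raise ValueError("seed must be non-zero")
    while True:
        lsb = state & 1
        state >>= 1
        if lsb:
            state ^= 0xB400
        yield state & 0xFF


def count_mismatches(received: bytes, seed: int = 0xACE1) -> int:
    """Compare bytes captured from the chip against the expected sequence."""
    expected = lfsr16(seed)
    return sum(1 for byte in received if byte != next(expected))


if __name__ == "__main__":
    # Stand-in for a serial capture: a clean stream with one flipped bit.
    capture = bytearray(islice(lfsr16(), 1000))
    capture[500] ^= 0x01
    print("mismatches:", count_mismatches(bytes(capture)))
```

This only exercises the CPU core and the transmit path. The EEPROM, timers, and ADC would each need their own checks, ideally repeated across supply voltage and temperature.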

Now, if you are just doing this for sport, a little liquid nitrogen will push your Arduino to 65 MHz (see the video after the break). We’ve covered pushing a 20MHz AVR to 30MHz before, but that’s a smaller ratio than [Ralph] achieved.

Oh, and here is another “overclocking” tip: a 1N4001, rated to 50V of reverse voltage, can work OK at 100V. No kidding, just try it. Such is the magic in a single PN junction; now imagine tens of thousands of them in a single package.

Pushing the MCU out of spec doesn’t mean it will crash completely. Various parts of the MCU and peripherals may stop working (that is the simple, but uncommon scenario) or may work funky (that is the more common scenario), especially away from room temperature. One can find out that the EEPROM data doesn’t have the expected endurance due to wrong write timing (write the data and wait 20 years, or perform accelerated aging tests), timers may miss a tick (say, one per million, something that is hard to catch by looking at a blinking LED), the ADC may be out of spec, or reading the program flash may be erratic.

Though it may look like the ATtiny13A is doing fine at 44MHz, it may skip or mangle a single instruction per ten million (yes, it can happen unnoticed with such a trivial test) and all peripherals may be half-dead, but the author wouldn’t know. Does it still count as “working”?
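To put a number on that, here is a small sketch of how many instructions a test has to execute before a one-in-ten-million fault is likely to show up at all. It assumes faults are independent and that the test actually observes every fault, which a blinking LED does not.

```python
import math


def detection_probability(error_rate: float, instructions: int) -> float:
    """Chance of hitting at least one fault in `instructions` executions."""
    return 1.0 - (1.0 - error_rate) ** instructions


def instructions_needed(error_rate: float, confidence: float) -> int:
    """Executions needed to see at least one fault with the given confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-error_rate))


if __name__ == "__main__":
    rate = 1e-7  # one bad instruction per ten million, as in the comment
    n = instructions_needed(rate, 0.99)
    print(f"instructions for 99% confidence: {n:,}")
    print(f"check: P(detect) = {detection_probability(rate, n):.4f}")
```

The raw count is small for a chip executing millions of instructions per second; the hard part is building a test where a single mangled instruction changes something you actually check.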

Is there a WinPi alternative for AVR? :)

The tiny13a has no SPI, UART, or even USI, so there is little that can fail.

If you read my blog post you’ll see the main point was about external clocking, and the overclocking was an aside.

I like your blog, but this is a Hackaday comment on a Hackaday article, in which Al Williams asked Hackaday readers for their opinions on how overclocked chips fail (pointing at your blog as an example), so I gave mine.

By the way, it is no coincidence that I mentioned the timer, EEPROM, ADC, and program flash: all are available on the tiny13a, and all can fail.

I remember back in the days when I overclocked my mc68882 math coprocessor. The normal calculations came back correctly, but not the corner cases, where it uses different logic/algorithms. I could easily tell by looking at the Mandelbrot set. Those cases may exercise the hardware through a more complex logic path that fails the setup/hold time requirements and comes back with incorrect answers. It is like a box of $1 chocolate and can be full of unpleasant surprises.

While that’s true, some overclocking for a certain task tends to be okay, but one should test the configuration since (as you say) some things may be marginal.

I used overclocked AVR chips for software USB a long time ago; running at 24MHz meant that one needs much less code and can be closer to the USB specs. A chip spec’d for 20MHz with a stable 5V source can run that task without any problem. And for that task any instruction skipping would be obvious ;)
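A quick sketch of why 24MHz is convenient here, assuming low-speed USB at 1.5 Mbit/s (the comment doesn’t state the bus speed, but that is what software USB on an AVR normally targets): the clock divides into a whole number of CPU cycles per bit.

```python
from fractions import Fraction

LOW_SPEED_USB_BPS = 1_500_000  # low-speed USB bit rate


def cycles_per_usb_bit(f_cpu_hz: int) -> Fraction:
    """Exact number of CPU cycles per low-speed USB bit."""
    return Fraction(f_cpu_hz, LOW_SPEED_USB_BPS)


if __name__ == "__main__":
    for mhz in (12, 16, 20, 24):
        cycles = cycles_per_usb_bit(mhz * 1_000_000)
        kind = "whole" if cycles.denominator == 1 else "fractional"
        print(f"{mhz} MHz: {float(cycles):.2f} cycles per bit ({kind})")
```

A whole, generous cycle count per bit means the bit loop needs no padding tricks to stay in step with the bus, which fits the comment about needing less code.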

Well, 24MHz is not that much over the specs, I would be surprised if it wouldn’t work. For hobby use, 20% overclocking, why not.

By the way, are you sure it was 5V? ;-) AFAIK the USB 2.0 specs allow as low as 4.2V at the device end (variations in the host power supply plus voltage drop on the cable). Assuming marginal situations is good practice. Yep, again, one can cover it by using a really good and tested cable, as well as a known USB host.

You are right, going out of spec means you are no longer on solid ground where most of the variables are known and guaranteed; you need to test the particular configuration, and any change in supply voltage, temperature, or even code (look above at what tekkieneet wrote) can ruin the project in an unpredictable way.

Diodes can typically handle far higher reverse voltage (PIV) than advertised. 3 to 5 times higher is normal, 10-15 times is possible.

I guess the guys at Atmel did a bit more testing when they specified 20MHz as the maximum reliable frequency of that chip. I don’t really see the point in overclocking such chips to achieve the performance of a chip that costs a few cents more. Except for fun, then it’s okay.

Can you imagine how many different things you’d need to test to make a uC? Max frequency would be an easy one to design for I would think!

There are PVT (Process, Voltage, Temperature) variations that can affect the speed, so the datasheet has to CYA for the environment: commercial vs industrial temp, voltage range. So no, it is not easy to control. By playing with the latter two, you can get some gain on the speed.

Process is luck of the draw. There are known cases where a certain batch of Intel processors from a certain fab can run faster. In order to get good yields, the vendor has to be a bit conservative. They also play games with what performance they are selling. The high-end processor vendors like to do speed binning.

reference: http://asic-soc.blogspot.ca/2008/03/process-variations-and-static-timing.html

20MHz is the highest speed rating for the tiny & mega AVRs. For a cheap part like the t13a, they are unlikely to make a special test to see if they all pass at higher than 20MHz.

The tinyx5 series are also well known for their overclockability; I even know a commercial product from Adafruit that does/did overclock a t85. The 3.3V Trinket bootloader ran at 16.5MHz, which is outside the rated voltage/frequency range.
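As a rough illustration of how far outside, here is a sketch of the rated frequency limit versus supply voltage. The corner points (10MHz at 2.7V, 20MHz at 4.5V, linear in between) are my recollection of the ATtiny25/45/85 datasheet speed grades and should be checked against the datasheet before relying on them.

```python
def max_rated_mhz(vcc: float) -> float:
    """Rated clock limit vs. supply voltage (ASSUMED datasheet corner points)."""
    if vcc < 2.7:
        raise ValueError("below the voltage range modelled here")
    if vcc >= 4.5:
        return 20.0
    # Linear interpolation between 10 MHz @ 2.7 V and 20 MHz @ 4.5 V.
    return 10.0 + (vcc - 2.7) / (4.5 - 2.7) * 10.0


if __name__ == "__main__":
    limit = max_rated_mhz(3.3)
    over = (16.5 / limit - 1.0) * 100.0
    print(f"rated limit at 3.3 V: {limit:.2f} MHz")
    print(f"16.5 MHz is about {over:.0f}% over that limit")
```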

Some circuits fail at hot, others run into issues at cold.

If you’re at 25degC and not at the low end of the operating voltage range the specs are usually very conservative.

The cost of testing specs adds up (time is a big factor), which means that sometimes the part is tested to a limit that is good enough for designers’ needs rather than to the maximum it can achieve. Also, spec too aggressively and you’re going to reduce yield, raising the cost of devices and possibly affecting availability, assuming 100% of devices are tested.

I’m talking about general chip manufacturing, hardware and overclocking. I don’t really care about Atmel chips and their marketing.

I personally think that when designing the atmega8 they had to put in some effort to achieve 16MHz. Later when they were designing the newer ‘168 family of chips, 20MHz was easily achieved. Nowadays with the ‘p variations, their process is so much better that MARKETING decided that they wouldn’t upgrade the published clock speeds.

Kids, don’t do this at home! Don’t drink liquid nitrogen like he does at 8:39.

Why not?

This is why:

http://www.bbc.co.uk/newsbeat/article/19866191/teenagers-stomach-removed-after-drinking-cocktail

I’ll leave this here. http://www.youtube.com/watch?v=9j9YIKMYgUw

I don’t think he is drinking any liquid nitrogen (which would be dumb to do) but something like coffee ?

It’s quite obvious he’s drinking from a different container (some kind of cup) instead of the thermos of N2. The video also has some kind of artifact shortly after that, which looks like an edit and is probably where he grabs the thermos.

Absolutely everything is scientific using liquid nitrogen!!!

He didn’t drink it. He just peeked to see if his flask was empty. Superconducting Tiny 10, anyone?

Then you should turn up your volume and you will hear him swallow it!

I used an NXP LPC2214 overclocked @ 100MHz (from 60MHz max) in a commercial product once.

No heat sink or anything, that was about 8 years ago, and not a single board has ever come back.

ancient post, but i’m surprised no one here ever mentioned the SwinSID, a physical SID chip replacement for the C64 which runs at 32MHz on the Atmega88. this is a commercial product & is not subject to any major stability issues, at least as far as I am aware. i had always assumed the max. clock listed in the DS was more of a soft-stop – a point at which the chip may still “work”, but would likely behave unpredictably in practice. since it appears that this is not always the case (at least for the 88), overclocking could open doors for improving any AVR-based project doing DSP at audio rates. faster clock == reduced aliasing & simplified output circuitry, especially when using the PWM outputs for D/A conversion. pretty neat!
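To illustrate the point about PWM outputs, here is a sketch of the PWM carrier frequency at different clocks. It assumes an 8-bit fast PWM mode with no prescaler, where the carrier is the CPU clock divided by 256; whether the SwinSID is actually configured this way is not something the comment says.

```python
def fast_pwm_hz(f_cpu_hz: int, resolution_bits: int = 8, prescaler: int = 1) -> float:
    """PWM carrier frequency for a counter that wraps every 2**bits ticks."""
    return f_cpu_hz / (prescaler * (1 << resolution_bits))


if __name__ == "__main__":
    for mhz in (20, 32):
        carrier_khz = fast_pwm_hz(mhz * 1_000_000) / 1000.0
        print(f"{mhz} MHz clock -> {carrier_khz:.3f} kHz PWM carrier")
```

A higher carrier sits further above the audio band, so a gentler output filter can remove it, which is the “simplified output circuitry” the comment mentions.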