Many successful large-scale projects don’t start out large: they start with a small working core and grow out from there. Building a completely open-source personal computer is not a weekend project. This is as much a retelling of events as it is background information leading up to a request for help. You’ll discover that quite a lot of hard work has already been put forth towards the creation of a completely open personal computer.

When I noticed the Kestrel Computer Project had been submitted via the Hackaday tips line I quickly tracked down and contacted [Samuel] and asked a swarm of questions with the excitement of a giddy schoolgirl. Throughout our email conversation I discovered that [Samuel] had largely kept the project under the radar because he enjoyed working on it in his down time as a hobby. Now that the project is approaching the need for hardware design, I posed a question to [Samuel]: “Do you want me to write a short article summarizing years of your work on Kestrel Project?” But before he could reply to that question I followed it up with another: “Better yet [Samuel], how about we tell a more thorough history of the Kestrel Project and ask the Hackaday community for some help bringing the project home!?”

[Samuel] was just as excited to tell the story of his project as I was to learn about it. This article is just as much his as it is mine; in fact, if I knew how to add him to the byline I would do it.

Kestrel Computer Project

In 2007 software engineer [Samuel A. Falvo II] founded the Kestrel Computer Project: his very own open source, open hardware, full-stack personal computer project. He has been working on it in his spare time while documenting his lessons learned and accomplishments alike. Although, as we’ll soon discover, GitHub wasn’t used from the very beginning of the Kestrel project, [Samuel] has made up for its absence with hand drawn schematics to fill in the history.

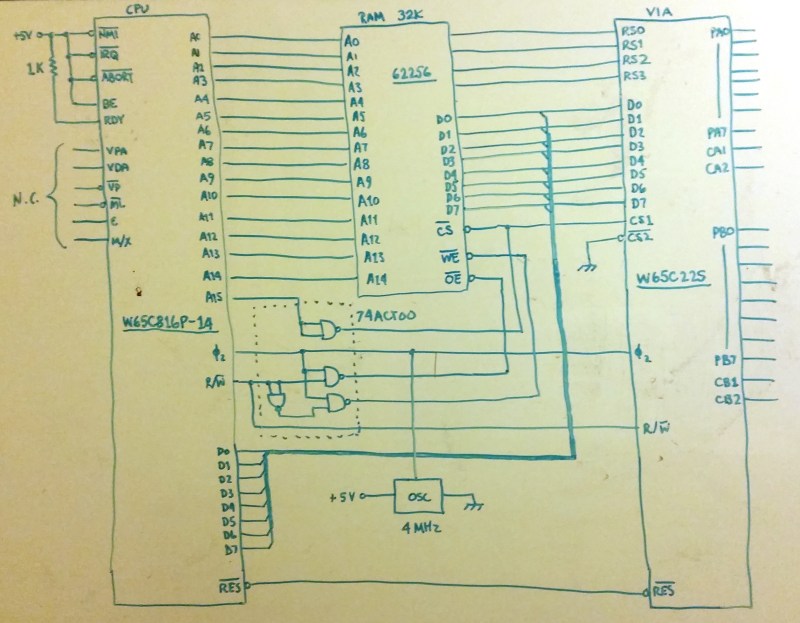

Kestrel-1

It all started with the Kestrel-1, back in 2007. The Kestrel-1 home-brew computer started like many at the time: a handful of chips seated in a typical breadboard with lots of wires going everywhere. It was intended to serve as a proof of concept for a more ambitious goal, a single-board computer with similar capabilities to the old 8-bit computers he grew up with. The original Kestrel-1 was designed around the 65816 CPU which is a 16-bit upgrade to the 6502. [Samuel] dialed-in the 40 pin DIP CPU to run at 4MHz and wired up 32KB of RAM along with a single VIA chip.

Those of you keeping up by capturing a mental schematic will notice the lack of ROM. It’s no accident you find it missing: (E)PROM programmers weren’t the cheapest item on the bench, and because [Samuel] couldn’t afford one, he made do with what was available. He decided to place RAM in high memory and I/O in low memory, which simplified address decoding enough that a single 74ACT00 could handle it. The system software would then have to be loaded into the Kestrel-1 through a special DMA circuit known as the Initial Program Load (IPL) circuit that was driven by a host PC.
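To make the decoding idea concrete, here is a minimal sketch in Python of how a couple of NAND gates could split a 16-bit address space into RAM (high half) and I/O (low half). The actual Kestrel-1 wiring is not given in this article, so the choice of A15 as the only decoded line and the signal names `ram_cs_n` and `io_cs_n` are assumptions made purely for illustration.

```python
def nand(a: bool, b: bool) -> bool:
    """One gate of a quad 2-input NAND package such as the 74ACT00."""
    return not (a and b)


def decode(addr: int) -> dict:
    """Return active-low chip selects for a 16-bit address.

    Assumption (not from the article): A15 alone splits the map,
    with RAM at 0x8000-0xFFFF and I/O at 0x0000-0x7FFF.
    """
    a15 = bool(addr & 0x8000)
    ram_cs_n = nand(a15, a15)            # low (selected) when A15 = 1
    io_cs_n = nand(ram_cs_n, ram_cs_n)   # low (selected) when A15 = 0
    return {"ram_cs_n": ram_cs_n, "io_cs_n": io_cs_n}
```

In this sketch only two of the four gates in the package are used; a real design would likely spend the others on qualifying the selects with the clock or the read/write line.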

This was before [Samuel] adopted GitHub, so many of the technical drawings are gone for good. Luckily, [Samuel] was able to recall this schematic from memory and redraw it for the archives.

Unfortunately, the IPL circuit schematic has been forgotten. The IPL circuitry was controlled via a PC parallel port, with bits to control RDY, RESET, and BE (bus enable) signals. An SPI-like protocol allowed the host PC to stream in settings for address and data bus bits.
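Since the original schematic is lost, the following Python sketch is only a guess at the general shape of such a loader: the host serially shifts an address and a data byte into a register, then the result is written to RAM while the CPU is held off the bus. The frame layout (16 address bits followed by 8 data bits), the MSB-first bit order, and every function name here are hypothetical.

```python
ADDR_BITS = 16   # assumed address width
DATA_BITS = 8    # assumed data width


def shift_out(value: int, width: int):
    """Yield the bits of value MSB-first, as a host might clock them out."""
    for i in reversed(range(width)):
        yield (value >> i) & 1


def ipl_write(ram: bytearray, addr: int, data: int) -> None:
    """Shift one address/data frame into a register, then store the byte."""
    width = ADDR_BITS + DATA_BITS
    mask = (1 << width) - 1
    frame = ((addr & 0xFFFF) << DATA_BITS) | (data & 0xFF)
    shift_reg = 0
    for bit in shift_out(frame, width):
        shift_reg = ((shift_reg << 1) | bit) & mask   # one serial clock
    ram[shift_reg >> DATA_BITS] = shift_reg & 0xFF    # one write strobe


def ipl_load(ram: bytearray, base: int, image: bytes) -> None:
    """Load an image byte by byte.

    The CPU is assumed to be held off the bus (BE and RDY deasserted)
    for the whole transfer and released via RESET afterwards.
    """
    for offset, byte in enumerate(image):
        ipl_write(ram, base + offset, byte)
```

A host program would call something like `ipl_load(ram, 0xFF00, image)` and then release RESET so the CPU starts executing the freshly loaded code.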

Kestrel-2

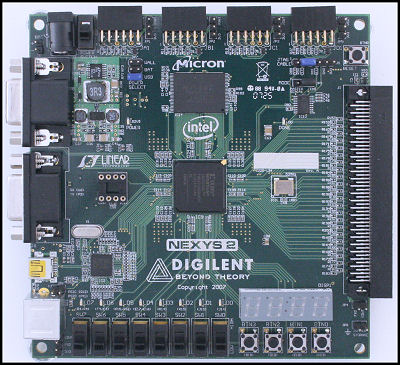

By 2011, FPGA development boards had become more affordable and [Samuel] decided to build on the Digilent Nexys-2 board. This is when he realized the end goal of his original vision.

As development advanced he chose the Wishbone bus architecture as the standard interconnect, and began writing the Verilog for what would eventually become the video core: the Monochrome Graphics Interface Adapter (MGIA) in proper 65xx series ‘interface adapter’ form.

To confirm that the Wishbone interconnect worked, [Samuel] developed a simple 16-bit processor just powerful enough to get the job done. At just 300 lines of Verilog, it was brutally basic HDL that he derived from an older design called the Steamer-16. [Samuel]’s S16X4 processor and later enhancements continued to be the core of the Kestrel-2 four years later. Despite the constrained instruction set, it proved remarkably agile, especially when pitted against the 65816. If you’re interested, [Samuel] wrote a datasheet that you can view in PDF form.

Over time, he built a Keyboard Interface Adapter (KIA) and General Purpose Interface Adapter (GPIA) for handling miscellaneous IO, then a bit-banged SD card interface built on top of the GPIA, and eventually system software and compilers evolved to run on the S16X4 family.

It was at this point that some crowd-pleasing talks started taking place where [Samuel] gave presentations on the Kestrel project using the Kestrel itself.

Unfortunately, due to the extremely reduced instruction set, the S16X4 has rather poor instruction density for a processor that can only address 64 KB of RAM. The system and application software had grown to such a size that, even considering overlays, it could not run application software more complicated than an interactive hello-world application or his simple slide show presenter. Access to more memory was needed, and that meant a more capable CPU with a wider data path.

After some research, [Samuel] determined that RISC-V offered comparable code density to the S16X4, while offering a 64-bit data path and processing capabilities. Combined with the instruction set’s liberal licensing, he knew it would be a winner.

Kestrel-3

Unlike the Kestrel-2, where the hardware was developed before any of its supporting software, the Kestrel-3 was to be emulated in software first. The idea is that it would greatly simplify debugging of system software, making hardware development easier later on.

The Kestrel-3’s development test-mule design is basically a repurposed Kestrel-2, but with a 64-bit RISC-V core for the processor and at least 512 KB of static, asynchronous memory. The goal here is a debuggable system.

As a believer in Kent Beck’s truism, “Make it work, then make it right, then make it fast”, [Samuel] is building the initial Kestrel-3 design deliberately around constraints typically found in off-the-shelf FPGA development boards. For example, many inexpensive boards offer only a 16-bit data path to relatively slow asynchronous RAM. Based on the selection of 70ns access RAMs combined with the bottleneck that a single opcode requires two hits to memory, he expects the processor to execute code no faster than 6 MIPS. This should be satisfactory for proofs of concept and initial development tasks, though, when you consider a Commodore-Amiga 500 or early-model Atari ST typically executed code closer to 2 MIPS, thanks to each memory cycle consisting of four clock cycles.
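The 6 MIPS ceiling can be sanity-checked with a little arithmetic. The sketch below assumes that instruction fetch is the only memory traffic, and that the "two hits" come from fetching a 32-bit instruction over a 16-bit bus; the function name is made up for illustration.

```python
def peak_mips(access_ns: float, fetches_per_instruction: int) -> float:
    """Upper bound on instruction rate when fetch alone limits throughput.

    Ignores loads, stores, and any control overhead, so a real design
    would land below this figure.
    """
    ns_per_instruction = access_ns * fetches_per_instruction
    return 1e3 / ns_per_instruction   # 1000 ns per microsecond gives MIPS


# 70 ns RAM, two 16-bit fetches per instruction
bound = peak_mips(70, 2)
```

Here `bound` works out to roughly 7.1 MIPS, so an expectation of no more than 6 MIPS leaves some headroom for data accesses and bus overhead.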

So, he wrote an emulator for the RISC-V instruction set, covering only the basics and modeling the Nexys-2’s 16 MiB of 70ns memory. Using this as a development platform, [Samuel]’s current goal is to get the power of a slightly accelerated Commodore 64 or 128. Keeping the processor design simple helps to achieve this basic goal. Simple, in this case, means no multiply or divide instructions, no floating point, no vectors, and no paging memory management unit (which are all intended future upgrades). The processor also only provides support for a single privilege level, machine-mode. In a very real sense, the processor is deliberately intended, or even crippled you might say, to more or less model a 64-bit 6502.

Once the development is further along the improvements will allow more sophisticated operating systems to run on the computer, such as Harvey OS, FreeBSD, or Linux. Of course, this also implies adding user- and supervisor-modes to the privilege stack as well.

Currently, [Samuel] is trying to port Oberon System 2013 to the Kestrel-3 in hopes of having a more or less stable, yet capable, system software base on which to build. The Digilent Nexys-2 is no longer in production, so anyone wanting to play with actual hardware will need to adjust the design to their local FPGA development board of choice. [Samuel] has not yet chosen a replacement for his Nexys-2, although Terasic’s Altera DE-1 has been strongly recommended.

Longer term, [Samuel] has entertained the notion of building a completely custom motherboard as well, most likely using the GNU gEDA toolchain, and employing Lattice iCE40K-series FPGAs almost exclusively, so as to exploit the recent developments in open source development tools for that platform.

As it stands now, Kestrel-3 remains a soundless, black and white computer thanks to its dependency on the MGIA core. However, plans exist for supporting sound and color graphics, even at higher resolution.

In terms of general purpose expansion, [Samuel] has some aspirations of using RapidIO technology on top of single or bonded SPI links in a form suitable and compatible with most Free and Open Source Software (FOSS) hackers’ budgets. It will not be named RapidIO, obviously, as that’d constitute a trademark violation. However, it’ll be an open evolution of the current set of open RapidIO protocol specifications.

Finally, there is “Ken’s Challenge.” A former coworker, suitably impressed with the state of the Kestrel-2, challenged [Samuel] to use the Kestrel-3 at work, for work, at the office for five whole business days. Using VNC over a TCP/IP/Ethernet link to let the Kestrel-3 serve as a glorified, graphical, dumb terminal definitely counts to meet the challenge, so that’s the route [Samuel] wants to take. There’s no financial incentive here; the reward being some impressive bragging rights. Obviously, to meet this challenge, the CGIA core will need to replace MGIA, and support for a mouse and a moderately performant Ethernet link will need to be added. Tunneling through SSH is a definite bonus.

If you would like to join in on the action and give [Samuel] a hand with the hardware or software you can pop over to his hackaday.io Kestrel Computer Project and check things out.

Hackaday Hardware Help

Right now, an emulator for the Kestrel-3 exists in a very basic form, and the very beginnings of an Oberon compiler have been ported, just powerful enough to produce ROM images for bootstrapping. However, much more work is needed to complete the Oberon environment. In particular, [Samuel] still needs to work on implementing disk-resident object files, and a suitable linker to bind them into a bootstrap image. Once this is done, then the fun work begins: porting the InnerCore and OuterCore components of Oberon System 2013.

The CGIA design has not been fleshed out yet, but once a reliable-enough monochrome computer exists, this will be one of the next tasks to tackle (especially in consideration of “Ken’s Challenge”). Although 640×480 is decent for simple tasks, with systems even as simple as Oberon System you really want a 1024×768 display with some color capability.

[Samuel]’s inexperience with audio is going to limit the appeal of the Kestrel-3 to the open source digital audio workstation field. However, if anyone wishes to help in this area, he’s open to contributions.

Support for RapidIO-like expansion capabilities won’t make too much sense with off-the-shelf FPGA boards; however, it would be nice to have for custom motherboards. We tend to see quite a few FPGA projects here at Hackaday and there has been an increasing community interest in the Lattice devices, as [Samuel]’s interest attests.

Relevant:

http://hackaday.com/2012/12/16/bunnie-builds-a-laptop-for-himself-hopefully-us/

and

http://hackaday.com/2014/01/12/bunnies-open-source-laptop-is-ready-for-production/

Something similar:

http://hackaday.com/2014/01/12/bunnies-open-source-laptop-is-ready-for-production/

(I tried to link to two hackaday.com URLs and it presumably got tripped up in a comment spam filter?)

Yep. Comment showed up later. Sorry about the duplicative link.

No worries from me. Thanks for the link; was unaware of this project. Maybe some day, in time, I can start to worry about case design for the Kestrel too.

It seems that technology is moving faster than this project.

Yes, it is. I’m the first to admit I’m slow on this project. However, I don’t see this as a cause for not continuing with the project. The nice thing about it is, I have no commercial deadlines of any sort, so I’m free to take my time and work at a pace that is compatible with my professional work schedule, distractions from married life, and other things. My philosophy is simple: if someone outside the project is interested, and wants to contribute, I’ll happily review and merge. If not, that’s OK too. There are many other projects out there, RPi being perhaps the biggest bang for the buck, particularly when it comes to instant gratification.

At some point, you don’t even need a FPGA to “emulate” it or step through it in real time.

http://www.visual6502.org/ has a bit to go still to be considered real time, the last time I checked but it still seems like a relevant project to what you are doing.

Visual6502 project spends a lot of time simulating a single chip at the transistor level. While I’d love to be able to go that far down to the metal, it’s not required, and the Kestrel is definitely a larger scoped project than just the CPU. :) Attempting a transistor level simulation of the Kestrel-3 would be a considerable undertaking, and would take a very long time to run any software you put on it.

There’s already an in-browser emulator for RISC-V which runs at reasonable speed – could presumably be forked and modified if you needed such a thing for Kestrel. See “Angel” at https://github.com/fcambus/jsemu#bare-cpus which boots Linux. On my laptop it says it’s running at 10MIPS.

My emulator, when not handling graphics, goes substantially faster than 10 MIPS (on my computer, Angel is quite a bit slower than 10 MIPS; closer to 3). When polling SDL event loop or updating the frame buffer, it slows down of course. Right now, the emulator runs about 100,000 to 200,000 CPU cycles before polling SDL, which seems to be a decent compromise in runtime performance.

Ah, you have an emulator already, in C – I hadn’t seen that. Of course, I hadn’t looked at the fine documentation!

https://github.com/KestrelComputer/kestrel/blob/master/3/src/e/e.c

that is a problem for projects like this one

If the goal is a ‘modern’ computer, ARM single-chip-systems are probably the way to go. As from the previous article on open source computing, open-source cores exist that are perfect for this, and for which a lot more help is available.

This would massively cut down on the amount of work required, and bring things closer to fruition. Once you have a system-on-chip rocking a modern ARM core, you can easily port linux over and have the basic core of a computer, despite having to live with only the peripherals available on the chip.

That allows you to start cracking on the problems nobody’s tried to solve yet, like trying to build boot-able flash storage to replace hard drives (and their rootkit-able firmware), as well as other peripherals. Developing an open source graphics core that can run modern applications (think of all the stuff just needed for YouTube) is essentially impossible when you don’t even have a fully-fledged CPU to start with. (here you’d probably have to use an FPGA, although their trust-ability and whether it’s feasible to compromise them is debatable, if it’s propriety software compiling things and the chip isn’t as open-source)

The only real use case I can see for this kind of thing (building a computer out of individual chips) is perhaps to use truly ancient (and “inherently trust-able”) chips like those in the 68000 family to build a computer that can have a FORTH interpreter toggled in, which in turn can be used to cross-compile a lot of low-level device drivers for the real computer, as well as enter and build the C compiler (probably in a couple iterations) needed to start compiling pieces of linux. This would also compile the compiler for the bytecode of the FPGA-based GPU, as well as everything else.

In this way of bootstrapping things (albeit slow), you can be sure that everything that has ever come into direct contact with any of the computers you build is clean, since you entered the raw binary or source code yourself. Then, the first trust-able computer can be used to program the next set. (An ARM chip that can be attached to additional external RAM would come in REALLY handy with all these peropherals)

“proprietary software” 3rd paragraph.

“these peripherals” last paragraph.

I haven’t built a computer out of discrete chips since 2007. Kestrel-2 and -3 are/will be intended for synthesis in an FPGA.

Sure, but you could argue that’s even worse. While it’s nice to have built the thing entirely by yourself, it wastes a lot of time on things like CPU and other basic architecture concerns, when there are already open-source alternatives that are more widely supported and offer far better implementations. One example is the open-source NDA-free ARM system-on-chips which offer a CPU, memory, and everything else that’s needed to get started, letting you move forward with software and other aspects.

You could put your time instead into building trust-able flash storage that the chip can use in place of a hard drive, or build onto the chip your graphics core without worrying if your CPU is powerful enough or if it has some flaw in its implementation that could be causing an encountered crash, or getting held back by underdeveloped lower level software and hardware (or an underdeveloped OS, if you port Linux over).

The real glory of building your own computer is the fact that you can build it to be exactly the way you want it to be.

I have a liking for retro computers. At the moment I am building a Compact Flash Card reader for a 1980’s Amstrad CPC6128.

There is nothing at all that is practical about what I am doing lol but I do enjoy doing it. It’s the hacker spirit within.

@[kc5tja]

I’m concerned that the resolution you have chosen will be too much for the CPU. Have you calculated the pixels per second update rate? At this resolution you need to have some CPU leverage like hardware tiles and hardware sprites. Of course these things won’t help if you want raw video.

I found this http://excamera.com/sphinx/gameduino/ a very good implementation as far as CPU leverage is concerned.

Quote: “Gameduino is open-source hardware (BSD license) and all its code is GPL licensed.”

Anyway, I love the project and I am happy to help if any of my skills are useful.

I have some retro computer projects as well. Some (that haven’t been updated) are here – https://hackaday.io/projects/hacker/12113

Hi Rob; thanks for the comment!

I have, and performance is good enough for productivity. For comparison, let’s use the C64 as the benchmark, which runs 0.5 MIPS best-case. It has a resolution of 320×200 monochrome (color comes from a separate color nybble region of memory), so 8KiB of memory is used for bitmapped mode. It can touch every byte in the framebuffer in approximately 1 second (if GEOS is any indication), and it’s fast enough for basic productivity applications. You spend more time waiting for the disk drive than you do on video updates. If we “double” resolution to 640×400 (or round up, 640×480), we’ve four times as many pixels, and so we need to run at least 4x the 6502 instruction rate to retain relative performance. That’s only 2 MIPS, assuming we use byte-wide accesses to memory. 6 MIPS is 3x faster still, so ballparking it, I wager full-screen non-trivial updates (e.g., horizontal scrolling) can be done at a rate of three frames per second. My FPGA board has a 16-bit path to RAM instead of an 8-bit path, so it’ll probably end up twice as fast still. None of this considers overall efficiency gains from having a better instruction set.
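The ballpark above can be reproduced with a short Python sketch. It simply scales the C64 reference point (0.5 MIPS, 320×200, about one second per full-framebuffer pass) by pixel count and instruction rate; the function name and the assumption that update time scales linearly with both are illustrative only.

```python
def updates_per_second(width: int, height: int, mips: float,
                       ref_width: int = 320, ref_height: int = 200,
                       ref_mips: float = 0.5,
                       ref_seconds: float = 1.0) -> float:
    """Estimate full-screen updates per second relative to a reference machine.

    Assumes work grows linearly with pixel count and shrinks linearly
    with instruction rate, with byte-wide memory accesses throughout.
    """
    pixel_ratio = (width * height) / (ref_width * ref_height)
    speed_ratio = mips / ref_mips
    return speed_ratio / (pixel_ratio * ref_seconds)


doubled = updates_per_second(640, 400, 6.0)   # the "doubled" C64 screen
vga = updates_per_second(640, 480, 6.0)       # rounded up to 640x480
```

With these inputs `doubled` comes out at 3.0 and `vga` at about 2.5, which matches the three-frames-per-second estimate before accounting for the wider 16-bit memory path.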

To be sure, 6 MIPS is not the end-goal, but a step along the way to what I envision as the computer I’ve always wanted. I’m quoting 6 MIPS now, but one of the first enhancements to the Kestrel design after the basic system is running will be adding cache and an SDRAM controller. These two things should bring the performance up an order of magnitude (I wager closer to 30 to 50 MIPS). Longer term still, I have my eye on adopting lowRISC silicon (http://www.lowrisc.org/) as the processing engine for a future Kestrel design, which will put me closer to the GHz barrier if recent conversations with them are any indication. But, I want to walk before I try running.

That said, my emulator (before I tweaked it) ran about 3 MIPS to 6 MIPS equivalent prior to optimizing its event handling logic, and visually, it was about 2x to 4x faster than a C64 in bitmapped mode. This falls in line with my ballpark figure above. You’re not going to stream DVDs with it, but it’s certainly fast enough for most productivity work. This is why I choose to compare against early-model Atari ST units (before they had blitters).

I am familiar with the Gameduino (James and his works are as much an inspiration to me as the 8-bit computers). I’ll need to re-evaluate it at some point, but I seem to recall it was limited to 400×300 resolution, with features optimized heavily for games. I do not generally play games, so I’m headed more in the opposite direction: I’ll eventually need 1024×768 resolution with at least 15-bit color resolution, but my display will be relatively static. Though, its sprite-handling capacity is quite impressive.

I would be remiss if I didn’t mention my desire for a RapidIO-inspired/compatible I/O interconnect is motivated, at least in part, by my desire to let other Kestrel users develop their own video display interfaces for it. There’s no way my vision for an ideal home computer will be satisfactory for everyone, so I hope that standardized, high-performance interfaces will enable or motivate others to develop enhancements on their own and share with the greater community. But, I’m a long way away from this dream yet.

But will it bring back Tay? I miss her :-(

Tay was >thisclose< to deciding there were too many people and hacking the nuclear missile launch codes. ;)

who is Tay?

This is what happens when 4chan decides to give special attention to a chunk of code that copies and re-posts chunks of dialogue it finds on the internet.

I just want to make my own motherboard with a RISC-V cpu. If everything is opensource, we could just make a PCB and solder (reflow) our own parts and make it boot. Of course it won’t be cheap, and someone has to make the cpu’s.

You’re still going to have to solve the same problems faced by those using the existing open-source ARM cores: trust-able boot storage, a graphics core (that can be trusted), as well as all your peripherals on top of that. Except now you need to build literally every single system device as well.

It might be fine for a hobby machine, but you’ll die of old age before you approach anything that could be considered a modern computer (I personally doubt anyone’s going to even approach an i486 level of capacity, never mind my old Pentium 2 machine, which you could skip right over with an ARM core). Feel like implementing your own USB hardware? A USB stack? If you try to build everything yourself, you’re probably not going to end up much better than the four-chip-computer from the last open-source-computer post that could run a VERY out of date version of linux.

RISC-V already has ports of very recent, if not the latest, Linux kernels. FreeBSD is ported as well. W.R.T. performance, 4-way superscalar BOOM cores already do quite well against Intel performance (https://youtu.be/z8UInbiQbdA?t=1149), and 2-way superscalar ports are about 9% _faster_ than ARM Cortex-A9. Please follow @boom_cpu on Twitter, and/or see https://youtu.be/JuJDPbzWpR0?t=796 . The fact that I am not using BOOM cores at this time is not indicative of RISC-V’s implied inferiority to ARM, but only that I desire to walk first, run later.

I appreciate you really, really, REALLY like the ARM instruction set. But, ARM is not an open instruction set; RISC-V is. I have zero problems with myself or other contributors building their own peripherals from the ground up; in fact, I encourage it. If this dissuades you from supporting the Kestrel, I really don’t mind.

It’s OK to not like my philosophy or approach. I would prefer you just spent the $5 on a Pi-Zero instead of trying to convince others of the futility of my project. Kestrel has always been a hobby machine, and nowhere have I stated it was to replace Google’s servers. Let’s keep some sense of perspective, please.

I’m not recommending ARM based on its instruction set. (I don’t even know its instruction set) I’m recommending it based on the fact that there are ready-made system-on-chips that are free and open source, and which have been used by other open source projects which are now miles ahead when it comes to building the same thing. (See the previous HaD article on the various methods of building an open-source computer from a few months ago)

You could be saving yourself a TON of work, picking up an ARM system-on-chip full of peripherals and other goodies and running with it, developing a graphics core and other components. By all means, development of an open-source graphics core is a sorely-needed aspect of open-source computing, but you’ll probably make a lot more progress working from a solid, readily available foundation.

I won’t argue with this; I already recommended those with a need for instant gratification to purchase a RPi unit. There’s no economically possible way for me to compete with them. They’ve a team of software engineers that specialize in customizing Linux to their closed microcontroller, they’ve gotten manufacturing costs to below $40/unit, and they have the marketing capacity of a small multi-national. Let’s assume I start making custom Kestrel motherboard units tomorrow; I’m looking at at least $100/unit because I simply lack the purchasing power, the marketing, for that matter the market itself, and of course the cheap fabrication facilities.

Just the other day, I saw an article on Hackaday about blacksmithing. Now, if these folks can command respect for continuing what can only be described as a dead art (what with 3D printing, drop-forging, and other fabrication tools available that make metal-work go much faster and for much cheaper), I can only find criticisms of my pursuits here on Hackaday utterly hypocritical.

The point of Kestrel is not the finished product, although perhaps one day that may be a useful by-product. (But then, that was never the point behind Linux when Linus first announced his hack to the world, either.) The point is the freedom to explore and, well, HACK along the way; to have a totally open stack from OS down to the microarchitecture, AND to one day have a healthy community, however small, who enjoys the pursuit of knowledge and enjoyment one gets from such hacking. One, perhaps trivially simple, example of why this is important is my KIA core. As keyboard interfaces go, it is arguably the best interface to a PS/2 device I’ve ever had the pleasure of using. The binary interface it exposes to the OS is very easy to use, and has a built-in queue, allowing compatibility with CPUs which do not support interrupts (I went four years on the S16X4 CPU in the Kestrel-2 without a single need for interrupt support). Commercial PS/2 interfaces that I’ve used have unilaterally focused on backward compatibility with the PC/AT keyboard controller, which while not bad, is hardly the ideal interface for that application. Freedom to hack, to explore the design space, is the point. If I felt the priority was to just rehash what’s already on the shelf, of course, I’d just spend $5 to $35 and get myself a BeagleBone, RPi, or whatever. And, honestly, I wouldn’t even do that; I’d stick with the x86 platform, since it has much wider, and far more consistent, support from Linux and BSD platforms.

I am compelled to disagree with your claim that ARM-based SoCs are truly free and open source; by definition, they must be commercially licensed from ARM just to have *permission* to sell units with ARM-compatible instruction sets, and that permission does not come cheap. The instruction sets are to some extent mutually incompatible with each other and tightly controlled by ARM. You might find https://www.eecs.berkeley.edu/Pubs/TechRpts/2014/EECS-2014-146.html an interesting read.

About blacksmithing versus homebrew computing: for any topic, there will be a lot more people interested but silent than there will be commenters, and there will always be a fraction of commenters who are insistent that the project is not the right project. It doesn’t make it a majority view.