If you’ve read through the comments on Hackaday, you’ve doubtless felt the fires of one of our classic flame-wars. Any project done with a 32-bit chip could have been done on something smaller and cheaper, if only the developer weren’t so lazy. And any project that squeezes the last cycles of performance out of an 8-bit processor could have been done faster and more appropriately with a 32-bit chip.

Of course, the reality for any given project is between these two comic-book extremes. There’s a range of capabilities in both camps. (And of course, there are 16-bit chips…) The 32-bit chips tend to have richer peripherals and run at higher speeds — anything you can do with an 8-bitter can be done with its fancier cousin. Conversely, comparatively few microcontroller applications outgrow even the cheapest 8-bitters out there. So, which to choose, and when?

Eight Bits are Great Bits

The case that [Mike] makes for an 8-bit microcontroller is that it’s masterable because it’s a limited playground. It’s a lot easier to get through the whole toolchain because it’s a lot shorter. In terms of debugging, there’s (often) a lot less that can go wrong, letting you learn the easy debugging lessons first before moving on to the truly devilish. You can understand the hardware peripherals because they’re limited.

And then there are the datasheets. The datasheet for a chip like the Atmel ATMega168 is not something you’d want to print out, at around 660 pages long. But it’s complete. [Mike] contrasts this with the STM32F405, which has a datasheet that’s only 200 pages long, but that’s just going over the functions in principle. To actually get down to the registers, you need to look at the programming manual, which is 1,731 pages long. (And that doesn’t even cover the various support libraries that you might want to use, which add even more to the documentation burden.) The point is, simpler is simpler. And if you’re getting started, simpler is better.

32 Bit Rules!

Don’t get the wrong opinion of his talk — [Mike] is completely appreciative of many of the advanced features of the 32-bit chips. He mentions how the set/reset register on ARM architectures avoids the read-modify-write cycle that you have to go through on an 8-bitter. He mentions a problem he had with an old phone screen that needed a 9-bit SPI signal, ruling out using the hardware SPI on his 8-bit microprocessor. That STM32 chip has configurable hardware SPI that includes a 9-bit option.
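To make the read-modify-write point concrete, here is a toy model in Python rather than real register code. Everything in it (the `Port` class, the pretend interrupt handler `isr`, the 8-bit width) is a hypothetical stand-in, and it only sketches the hazard: an interrupt that changes the port between the read and the write-back gets overwritten, while a single set/reset-style write leaves other bits alone.

```python
class Port:
    """Toy stand-in for an 8-bit output port (hypothetical, not real hardware)."""

    def __init__(self):
        self.value = 0


def set_reset_write(port, set_mask=0, reset_mask=0):
    """Model a set/reset register: one write touches only the named bits."""
    port.value = ((port.value & ~reset_mask) | set_mask) & 0xFF


def isr(port):
    """Pretend interrupt handler that switches on bit 3."""
    set_reset_write(port, set_mask=1 << 3)


def rmw_set_bit(port, bit, interrupt=None):
    """Set a bit by read-modify-write, with an optional interrupt in the gap."""
    snapshot = port.value                # read
    if interrupt is not None:
        interrupt(port)                  # interrupt lands between read and write
    port.value = snapshot | (1 << bit)   # modify, then write back a stale copy


def single_write_set_bit(port, bit, interrupt=None):
    """Set a bit with one write; the interrupt can only run before or after."""
    if interrupt is not None:
        interrupt(port)
    set_reset_write(port, set_mask=1 << bit)


rmw_port = Port()
rmw_set_bit(rmw_port, 0, interrupt=isr)

sr_port = Port()
single_write_set_bit(sr_port, 0, interrupt=isr)

print(f"read-modify-write: {rmw_port.value:08b}")
print(f"set/reset write:   {sr_port.value:08b}")
```

In this model the read-modify-write path ends up with only bit 0 set, because the handler's change to bit 3 is clobbered by the stale copy, while the single-write path keeps both bits. On an 8-bitter the usual workaround is to disable interrupts around the update.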

There are a lot of problems that you’ll run into with simpler, smaller chips. And for many of these problems, there’s a simpler solution on a fancier chip. But knowing when you’re going to run into these pitfalls is a lesson best learned through experience. You can’t really appreciate how nice it is to have more UARTs than you need until you run out of them. Flexible pin-mapping seems unnecessary until you’ve spent enough time working around difficult layout problems. In short, [Mike] learned to appreciate the bigger chips for what they could do when he needed it.

The (relatively) unified and sophisticated toolchain available for programming ARM chips is a definite plus, once you know how to use it. Adding JTAG and real debugging capabilities into the project makes your life a lot easier. And the ARM core itself, providing a single CPU baseline that all of the chip producers then elaborate with their peripheral choices, reduces vendor lock-in and time spent re-learning if you need to switch. Try moving back and forth between PIC and AVR and you’ll know what he means.

The End of the Holy War?

[Mike] ends the 8-bit vs 32-bit war by saying you need to learn both, because only then will you understand which is the appropriate tool for the job. Sometimes you need a circular saw, and sometimes you need a hand saw. On the surface they both cut, but you can’t really internalize the different applications until you’re familiar with both tools.

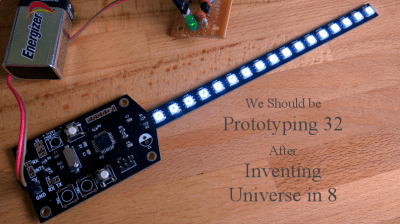

And he ends with a cautionary tale, and one that’s painfully familiar. He designed a POV display board for a class demonstration, and outfitted it with a just-right 8-bit microcontroller. Once the PCB was fabricated and populated and he started programming, he realized that he was going to run out of RAM at the desired display resolution. There are all sorts of fixes and kludges and workarounds possible, but if he had started out with a larger chip, at least for the prototypes, he would have had a lot more leeway to experiment. Instead, he’s got a short run of oddly-shaped, copper-clad coasters.

In the end, [Mike] comes up with his simple rule for making the 8-bit versus 32-bit decision. If you’re learning microcontroller applications, and looking to understand the universe, you should grab an 8-bit chip because it’s easier to fully explore its limited possibilities. But if you’re prototyping a (complicated) project, you don’t want to be limited by the choice of chips. You want to free up your mind to focus on the maximum capabilities of the system as a whole. A little bit more money for a 32-bit microcontroller that has simply too many features is well spent. You can always look into trimming it down once you’ve got everything working.

A 32-bit chip isn’t necessarily going to be more expensive than an 8-bit one. Since the 32-bit one is built with smaller geometries, you’ll find that you get more RAM and flash per dollar than with the older 8-bit parts. There are already ARM chips that are priced around a dollar and change for QTY 1.

The nice part about ARM chips is that if you ever need more memory or speed, there are upgrade paths. Once you get over the initial learning curve, switching to a different series or a different vendor isn’t that difficult.

This exact subject came up a few days ago in a lengthy forum thread. The poster wanted to make a custom USB-to-something interface, presumably at some low volume under 100 units, and of course at minimal cost. He really wanted to use the ancient code I wrote for Teensy 1.0, based entirely on his assumption that it would be the cheapest chip. FWIW, much of that ancient code and info still exists online, but it’s poorly maintained.

Turns out, an Atmel AT90USB162 (or ATMEGA16U2) is now $4.35 (qty 1) at Digikey. The dramatically more capable 32 bit ARM chip we now use on Teensy LC, part number MKL26Z64VTF4, now sells at $2.74 (qty 1) at Digikey. Prices scale similarly up to qty 100. The 32 bit chip, with four times as much flash, 16 times the RAM, efficient DMA-based USB, 3 hardware serial and other peripherals, analog pins (many ADC and 1 true DAC pin), and an easier architecture which doesn’t require PROGMEM hacks…. is actually much less expensive than the old 8 bit AVR part.

Even after you buy or build a programmer to get your final .HEX file onto the chips, and even after you buy one Teensy LC for development (full disclosure – I’m the guy who designed it), it’s pretty easy to recoup those costs at even modest volume. The more powerful 32 bit part simply costs much less, at least in this case. Many other cases exist now as well, with newer low-end 32 bit chips appearing on the market. Long-term, the conventional wisdom that 8 bit chips are less expensive is becoming obsolete.
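The recoup argument is simple arithmetic, sketched below in Python. The two chip prices are the qty-1 Digikey figures quoted above; the fixed cost for a programmer plus one development board is a made-up placeholder (`HYPOTHETICAL_TOOLING`), since the comment gives no number for it.

```python
import math

AVR_PRICE = 4.35   # AT90USB162 / ATMEGA16U2, qty 1, as quoted above
ARM_PRICE = 2.74   # MKL26Z64VTF4, qty 1, as quoted above

# Placeholder only: programmer plus one dev board. Not a figure from the thread.
HYPOTHETICAL_TOOLING = 40.00


def break_even_units(fixed_cost, dear_unit, cheap_unit):
    """Smallest number of units at which the per-unit saving covers fixed_cost."""
    saving = dear_unit - cheap_unit
    if saving <= 0:
        raise ValueError("the 'cheap' part must actually be cheaper")
    return math.ceil(fixed_cost / saving)


saving_per_unit = AVR_PRICE - ARM_PRICE
units = break_even_units(HYPOTHETICAL_TOOLING, AVR_PRICE, ARM_PRICE)

print(f"saving per unit: ${saving_per_unit:.2f}")
print(f"break-even at {units} units for ${HYPOTHETICAL_TOOLING:.2f} of tooling")
```

Swap in your own tooling cost and volume pricing; the point is only that a saving of about $1.61 per chip pays back a modest fixed cost within a few dozen units.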

Yep. I did a board using an ATTiny. In retrospect (and the next version), it should’ve been a Cortex M0 part. About 75% of the cost, more capable, and just as easily programmed in assembler or C.

Cortex M0, assembly? Not a chance. Using C without a HAL is painful enough

Is Teensy still dependent on a strictly proprietary bootloader? If so, you’re hooking your cart to a one trick pony with no independent second source.

and both need a package and die area for connecting pads, with smaller geometries there is a point when the logic for the cpu isn’t what drives the cost

dollar per chip? You can get complete STM32f103 dev board for a dollar INCLUDING SHIPPING

ok, $1.99, but that’s still a dollar :P

The situation is eerily similar to assembler vs. C. Often an assembler program ends up easier to maintain. The limits and cost of work mean that many not-so-important features get rejected and the project stays nice and simple. While when working in C for a 32-bit controller, there isn’t any good reason not to add just one more thing..

You’re the first person I’ve EVER seen saying assembler and easier to maintain in the same sentence. And I’m not sure what you are smoking.

After that you say that it has extra limits and costs more to work on. That’s not easier to maintain, that’s harder to maintain. I hope I never come across you in a professional environment.

There’s an old joke that: “C combines the power of assembly language with the readability and maintainability of assembly language.”

Quite true. C allows bad and lazy programmers to write really unreadable and unmaintainable code. Sometimes they code in a real crazy way to impress other geeks with their ability to write unreadable code.

Now if the programmer is disciplined and documents his code, it’s a different story. I don’t see that much with hackers.

What I’ve been told is that assembler is easier to maintain on code which runs on bare metal, where cycle counting is important, and where the C compiler does weird things because of the CPU architecture (6502, some ARM).

That’s just using the right tool for the job. Any sane person will agree that C is more maintainable for non-trivial projects compared to plain assembler.

I know someone who insists that assembly is easy and everything else is hard. Of course she has known assembly since she was a kid and never attempted to learn anything else… Oh well.. I guess if the only tool you have is a hammer…

huh?

I’ll just ask: isn’t the language called assembly and not assembler?

For the rest, I (‘d like to) think you just wrote something different than what you meant.

This is probably just a bad (or non-) translation by a native German speaker :-) In German it is called “Assembler”.

Your comment is so incoherent and wrong I’m just going to report it instead of writing a rebuttal.

Da fuq are you talking about?

You just got disassembled….but interesting thought for the day even if I don’t agree

I’ll buck the trend and agree with [jpa]. Disregard the entire C vs assembly question for a moment, and look at it this way: would you rather maintain a large, complex program with lots of bells and whistles, or a small, tight program that does just what it must and nothing more? Now, which of those is likely to be written in C and which in assembly? I posit that there is a point where the difficulty inherent to assembly programming balances against the bloat and feature creep much more likely in higher level language work.

Nice to see that at least someone got my point :)

I know that it is not an immediately obvious result, considering how annoying assembly code can be to work with. But take a moment to think back to assembly language programs you have seen, and whether any of them match the worst C-mess you have seen.

One product at our company had been implemented entirely in assembly by someone in the past. We needed to upgrade a feature, and the original author was either gone or didn’t know how to do it, so one software engineer had to convert it all to C (I was skeptical, because this individual writes pretty terse C, as if intentionally hard to read for job security), and it ended up working out. Assembly only works these days for the simplest products where you’re just interfacing something like a motor or other simple protocol. Otherwise it will take longer, I don’t care who you think you are. But I do agree that if you have C built into an assembly project, the C will look pretty gnarly compared to the assembly (you’ll be able to follow assembly better for smaller tasks). But if you don’t have smaller tasks, then it’s harder in every way.

Do you build the project you want to maintain or do you build the project you want to have, own and use? Your thinking is a bit foreign to me. When I build something it’s usually because I want it.

I’m going to try to decipher the logic:

-Assembly is so difficult to work with, that features are left out and updates not performed

-Lack of updates and features means less work invested

Thusly:

-less work = easier?

I think that’s what you’re saying. I think? It’s so much more difficult that it becomes easier?

Got it. Welp, I’m done with the internet for tonight.

Right, that is why we make metal parts using stone hammers instead of a CNC mill, it keeps those pesky programmers in check.

I think Mike hit the nail on the head. I don’t think Mike stressed enough that the number of gotchas in the 32-bit families is generally greater than the number of gotchas in the 8-bit families. And, I mean that across the board. Like it or not, you WILL spend more time chasing down unexpected details (hardware and software) with the 32-bit than you will an 8-bit, especially if you’re willing to just bit bang things.

Whelp, forgot to comment “as me.” Can’t edit it. I should have said [almost]. I will acknowledge that I have my handful of “whoops” moments where I should have used an ARM.

That’s funny. My experience is the opposite.

Whenever I load existing C code into a 32 bit platform, it “just works”. I almost never get that with an 8-bit platform, even with very simple projects.

I’m not saying you’re wrong, only that it’s odd we see the same thing so differently.

16-bit, best of both. ^.^

W00t MSP430 >:]

Can I get some love for the 68hcs12? No? I’ll go back to my corner then.

Of course it is “horses for courses” and 8 bit devices are still a good way to perform simple tasks. Having said that, 32 bit devices such as the Teensy LC are providing 32 bit speed and power for a cost close to 8 bit Atmel devices. The complexity starts when you introduce an OS like Linux but the capabilities also increase. Nowadays I generally use Arduino mini pro boards for simple stuff, ESP8266 when I need wifi and where the libraries I need are available and usually RPi when I need capabilities such as pattern recognition. I really like the CHIP $9 computer but it still has limited availability. I have also played with Edison and Samsung Artik. Although the specifications are good on paper I have yet to find a project where they win out over A.E.R. (Arduino/ESP8266/RPi) but their small size may make them a good choice for some uses. What really matters are the libraries and toolchain support.

Well, in my case, writing code for embedded chips isn’t my day job, just a hobby, and there simply isn’t enough time to learn both. So I’ve chosen an ARM chip that is versatile enough to be used in most situations (NXP LPC) and focus on understanding and learning how to make it behave well enough for my use cases. It certainly is overkill for most applications, but it is powerful and scalable enough to serve most purposes, and it saves me shitloads of brainpower having to learn to understand just one toolchain instead of 2-3.

I climb it because it’s there. I did VGA with an 8-Bit because it’s there…………………..

I dislike people whose only comment on a project is ‘why you use that?’, which is used as a derogatory slight against the author.

+1

Personally, the more ways people try to do things, the more I can learn from them (assuming they document it online). Doesn’t matter if it’s an overkill RPi used to blink an LED or a complex project built with discrete transistors, I will probably gain SOME sort of knowledge from their endeavor, and for that I am grateful

+10

Doing crazy stuff that is not supposed to be possible is the holy grail of hackering.

To do so, you really have to know something deep about the part , and have some creative intuition to realize that by “connecting these bits in unusual ways” you’ll be able to accomplish a task well beyond the part’s abilities.

I also find being able to broadcast radio signals with zero support parts attached to the chip-leg antenna to be impressive and amusing.

It’s one thing I find to be common and painful – a huge number of snide comments by people who haven’t done the project about how it SHOULD have been done differently. There are ways to discuss this productively but too often it’s done derisively and without any real thought as to why engineering decisions were made.

As an old-school programmer who cut his teeth on punchcards and core memory, I find that I actually like working within the confines of a “simple” 8 bit MCU and limited RAM. Really takes me back to the good old days

I have been punching cards too, but I am really happy that we have moved on.

Things like ESP8266 are not possible with 8 bits, and if you are really in need, you can still emulate a 6800.

Don’t get me wrong, i love progress and am very happy with the awesome computing power on (well, under really) my desktop and all the changes i’ve seen in my profession. But as a hobby, 8 bit programming is a lot of fun.

I love assembly language. I like having the illusion of control. I love making direct connections between the code and the response.

C is not too bad, but a lot depends on the design methodology. Errors in larger systems are accumulative. I believe Edward Yourdon pointed out that most things in code development are new because once a problem has been solved in one language it can be ported to another. So I see a lot of programmers just re-implementing the same solutions based on algorithms developed back in the ’60’s and ’70’s by me and my contemporaries. Because the systems are larger and the context is different, they are introducing code that is not suitably bug free, and those bugs accumulate. Add to that the bugs and distortions introduced by compilers, and you have a recipe for disaster. (Think Siemens and SCADA if you need an example.)

We need reliable code in some projects, and so the argument for total understanding of the tool has a lot of support from me. If I ever build a robotic Nursing Assistant, I do not want it to drop a patient because the code was buggy. Ken Orr (of Warnier-Orr diagram fame) wrote in his books that software only does three things, and those three things have analogous constructs in Mathematical Logic. By using Warnier-Orr diagrams, Karnaugh Maps and decision tables as design tools (before coding!) you can create programs with a high degree of reliability and lower your bug count. Another surprise is that the actual time spent on the project is often shorter than the “think-a-little, code-a-little” approach to software development.

I have been using 8 bit AVRs for years, and have frequently been pushing them to the limit. In my current project (a micro sized multicopter) I started with an AVR (which worked), and then decided to move to an ARM (more as an excuse to learn ARM than anything else). I like both chips, and as Mike says, they each have pros and cons. Even though I suspect that I will be playing with more ARMs in the future, I will always have a soft place in my heart for AVRs (especially the 32u4, which is probably my favourite AVR chip).

Something that I found interesting from Mike’s video is where he says most people don’t use a text editor + makefile for ARM… I played around with some IDEs briefly, but couldn’t stand to use them. I then spent a bit of time hacking together a Makefile, and now I have a build environment very similar to my AVR build environment consisting of Kate + make. (Incidentally, I use the same thing, again with a slightly modified makefile, for Teensyduino programming. One approach to rule them all!)

I agree with this.

The first ARM device I got to work with was a BeagleBone Black. I didn’t really want to use BoneScript or anything that was browser-based or too high-level as I didn’t feel I would learn anything about ARM programming and debugging that way. I spent two entire days unsuccessfully trying to read bad tutorials written by people who barely seemed to have a grip on setting up the IDE of their choice, let alone in-depth knowledge of the device itself. Every single one of them used Keil, Eclipse or some other large and feature-rich IDE.

After finally getting eclipse to compile my code on the second day’s try, the next part of a tutorial I was reading covered setup of ssh/sftp to link the IDE with the device. It failed miserably and would not work.

After the growing frustration of doing things the “easy way” through online tutorials, I dug up the name of the toolchain itself.

I downloaded it with apt-get.

I compiled my hello world program and then manually uploaded it through sftp.

All within less than 5 minutes.

Don’t get me wrong, I love using Code::Blocks IDE for some C/C++ coding projects, but after that experience I will always prefer a text editor and a console with minimal build scripts / tools.

Sometimes attempts at simplification end up over-complicating things.

Bonescript is such a train wreck. Yes, give me makefiles and my favorite text editor that I can use instinctively any day.

So far, the best ARM video tutorial:

https://www.youtube.com/playlist?list=PLPW8O6W-1chwyTzI3BHwBLbGQoPFxPAPM

They are both complete overkill. Naturally, anything that someone has used any processor for could much more easily have been done with a 555.

+1!

God bless the 555!

Let me ask this, does everyone use just ONE screwdriver to fix everything…? I doubt it. You pick the right tool for the job. Same goes for micros.

Jokes aside, in my case I pick the tool that can do the job for me most quickly and efficiently, at least in a work situation. This may not be the most elegant one, but might be the one that comes with a handy library that saves days of programming, for instance.

Doctor Who?

You may jest, but Big Clive recently took apart a USB soldering iron (which turned out to be unexpectedly usable, but that’s not the point) which had a PCB with a few passives, a mosfet and the token 8-pin chip which everyone naturally assumed to be the cheapest 8-bit micro the Chinese could find. Well, it was a 555 – all that was needed to trigger the iron for a set time after picking it up (tilt switch) or pushing its button…

Big Clive missed an important conclusion in his teardown of that soldering iron imho:

Does it melt solder: YES

Would any sane person use an underpowered, non-temperature-controlled soldering iron just to save a few $, and then risk spending hours finding that cold solder joint that is bound to fail a few days/weeks/months later: NO

Of course we would! :) My only mains-powered iron is definitely not temperature controlled yet that doesn’t make any noticeable difference. As for the USB one, portability is a nice thing when you need it (I’m partial towards gas-powered ones but those need refuelling) and I don’t remember seeing any cold joints in that video – if you try soldering in a heatsink with it, that’s not the iron’s fault…

555? Amateur! Two transistors and a pair of capacitors gets you an astable multivibrator. What do you need a big ol’ 555 for?

There’s no reason why ARM manufacturers can’t attach a number of 8-bit MCU style peripherals to a Cortex M0 core combining the best of both worlds. Although the Cores themselves are pretty well specified by ARM, the peripherals are bespoke. And in fact 8-bit style peripherals on a 32-bit MCU would be even simpler. For example, a 32-bit simple UART would just contain:

DATA

CTRL

STATUS

And the CTRL port would contain all the required control bits in one go:

[Buffer:1][Protocol:2][TxIntEn][RxIntEn][Start][Stop][Parity:2][Bits:2][Prescaler:4][Baud:12]

(where Buffer=1 for a 16 byte buffer and Protocol is: 00=UART, 01=SPI, 10=I2C, 11=QuadSpi). I would map some of the address space to a QuadSpi interface – just jumping to it would cause it to be used in QuadSpi mode.

Similarly Status would contain the normal status bits as well as interrupt set/clear bits.

Thus setting up a Uart would just involve one write to the control register. I would support 4 Uarts as standard.
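As a rough illustration of that single write, the sketch below packs the proposed CTRL fields into one word in Python. The field names and widths come from the layout above; reading the list as most-significant field first, ending at bit 0, is an assumption, as are the helper name `pack_ctrl` and the example field values (the proposal does not define what a given Bits or Baud value means).

```python
# Field widths from the proposed CTRL layout, assumed to run from the most
# significant field (Buffer) down to bit 0 (the low end of Baud).
FIELDS = [
    ("buffer", 1),
    ("protocol", 2),
    ("tx_int_en", 1),
    ("rx_int_en", 1),
    ("start", 1),
    ("stop", 1),
    ("parity", 2),
    ("bits", 2),
    ("prescaler", 4),
    ("baud", 12),
]

TOTAL_BITS = sum(width for _, width in FIELDS)


def pack_ctrl(**values):
    """Pack named field values into a single control word (hypothetical helper)."""
    known = {name for name, _ in FIELDS}
    unknown = set(values) - known
    if unknown:
        raise ValueError(f"unknown field(s): {sorted(unknown)}")
    word = 0
    for name, width in FIELDS:
        value = values.get(name, 0)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bit(s)")
        word = (word << width) | value
    return word


# Placeholder values: UART protocol, both interrupts on, arbitrary Bits/Baud.
ctrl = pack_ctrl(protocol=0b00, tx_int_en=1, rx_int_en=1, bits=3, baud=104)
print(f"CTRL uses {TOTAL_BITS} of 32 bits, example word = 0x{ctrl:08X}")
```

The fields add up to 27 bits, so the whole configuration fits in one 32-bit register with room to spare, which is what makes the one-write setup plausible.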

An 8-bit peripheralised Cortex M0 of course doesn’t need DMA or an Event mechanism, but probably does need a wake-up register. So, we’d probably want a single GPIO register (with half-word and byte access so you could pretend it was two half-word GPIOs):

PORTA: Bits 31..0. {output port à la AVR}

DDRA: Bits 31..0.

PINA: Bits 31..0.

WAKEA: Bits 31..0 (makes the pin an input, DDRA controls rise/fall, PINA bits for wakeup sources are only latched on wake)

The right way to make alt functionality simple to access and program is to define it as part of the GPIO control, because that’s what you see on a datasheet:

ALT[4]: 4x 32-bit registers, each nybble=1 pin with 16 alt options.
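A quick Python sketch of how that nybble-per-pin scheme could be addressed. It assumes pin 0 lives in the lowest nybble of ALT[0] and pins count upward from there; the proposal does not specify the ordering, and `set_alt` is a hypothetical helper name.

```python
PINS_PER_REG = 8          # 32 bits / 4 bits per pin
NUM_REGS = 4              # ALT[0]..ALT[3] covers 32 pins


def set_alt(alt_regs, pin, function):
    """Select one of 16 alternate functions for a pin, leaving other pins alone."""
    if not 0 <= pin < PINS_PER_REG * NUM_REGS:
        raise ValueError("pin out of range")
    if not 0 <= function < 16:
        raise ValueError("function must fit in one nybble")
    index, slot = divmod(pin, PINS_PER_REG)   # which register, which nybble
    shift = slot * 4
    cleared = alt_regs[index] & ~(0xF << shift)
    alt_regs[index] = (cleared | (function << shift)) & 0xFFFFFFFF


alt = [0] * NUM_REGS
set_alt(alt, 10, 5)       # pin 10 -> ALT[1], nybble 2
set_alt(alt, 3, 0xF)      # pin 3  -> ALT[0], nybble 3
print([f"0x{reg:08X}" for reg in alt])
```

On real hardware the clear-and-set step would be a read-modify-write of one register, so it carries the same interrupt caveat as any other shared register.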

Again, Adcs would have a DATA/CTRL/STATUS organisation.

I prefer simple free-running 32-bit timers with one or two compare registers for PWM features. This gives a 5 register peripheral:

COUNT:

COMP0:

COMP1:

CTRL:

STATUS:

One thing I would ensure: USB is so ubiquitous I would build an avrdude compatible USB bootloader into the firmware and have a simple USB device interface. Maybe something as simple as an early EZ-USB chip supporting perhaps as little as two endpoints.

I would definitely have some LUT-based CCBs on the chip as an introduction to hardware design: the SDK would expect a hdl folder containing Verilog or VHDL.

I would campaign for a hobby-level HDMI protocol. C’mon, people campaign and kickstart for all sorts of things, why not a hobby-level HDMI protocol limited to 1-bit/2-bit/4-bit/8-bit [R:3,G:3,B:2] colour and resolutions up to 320×200 at 25Hz and 30Hz? HHDMI (Hobby HDMI) would use an SPI or QuadSpi interface. 320x200x8-bit@30Hz is perfectly doable in an ARM, it’s a bandwidth of: 64KB x 30 ≈ 2MB/s, just 0.5M words per second, a breeze for an ARM.
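The bandwidth estimate can be checked in a few lines of Python. The resolution, colour depth and frame rate are the ones proposed above; treating a word as 32 bits is an assumption that matches the ARM context.

```python
WIDTH, HEIGHT = 320, 200
BYTES_PER_PIXEL = 1        # 8-bit colour, R:3 G:3 B:2
FRAMES_PER_SECOND = 30
BYTES_PER_WORD = 4         # assuming 32-bit words

frame_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
bytes_per_second = frame_bytes * FRAMES_PER_SECOND
words_per_second = bytes_per_second // BYTES_PER_WORD

print(f"one frame:  {frame_bytes} bytes")
print(f"per second: {bytes_per_second} bytes, {words_per_second} words")
```

That works out to 64,000 bytes per frame and 1.92 million bytes per second, which is where the rounded figures of 2MB/s and half a million words per second come from.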

..there are many other criteria that could be supported whilst giving the microcontroller 8-bit peripheral simplicity.

However, this doesn’t matter much if interfacing and the chips themselves aren’t available in a narrow DIP format. I want to see HDMI sockets, micro-USB B sockets etc all available with veroboard pin spacings. Is this too much to ask to lower the barrier for the next generation of engineers? It’s like – the LPC1114FN28 is great, but why oh why did they give a wide 28-pin package? Especially as it’s possible to actually shave off the edges and create a narrow 28-pin package from it.

[From Julian Skidmore, the designer of the 8-bit hobby computer, FIGnition]

https://sites.google.com/site/libby8dev/fignition

320x200x8-bit@30Hz HDMI not possible. HDMI specifies a 25MHz minimum pixel clock.

And correspondingly, nothing longer than 4ns per bit.

Actually 25MHz corresponds to 40ns, not 4ns.

But, this figure is due to an arbitrary specification, it’s not a natural law. Their specification chose to exclude legacy resolutions, frame rates and thereby exclude hobby development. But there’s no reason why we can’t campaign to change it. If the industry decides to raise the barrier for hobbyists, you don’t have to take it lying down: campaign.
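Both timing figures in this exchange fall out of the same clock, depending on whether you count pixels or serial bits. A short Python check, assuming the 25MHz minimum pixel clock stated above and the 10 serial bits per pixel per channel that TMDS encoding uses:

```python
PIXEL_CLOCK_HZ = 25_000_000    # minimum pixel clock, as stated in the thread
TMDS_BITS_PER_PIXEL = 10       # each 8-bit value goes out as a 10-bit symbol

pixel_period_ns = 1_000_000_000 / PIXEL_CLOCK_HZ
bit_period_ns = pixel_period_ns / TMDS_BITS_PER_PIXEL

# Visible pixel rate of the proposed hobby mode, ignoring blanking intervals.
hobby_pixels_per_second = 320 * 200 * 30

print(f"pixel period: {pixel_period_ns} ns, serial bit period: {bit_period_ns} ns")
print(f"hobby mode needs about {hobby_pixels_per_second / 1e6:.2f} Mpixel/s")
```

So 40ns is the time per pixel and 4ns is the time per serial bit on each data channel, and the proposed mode's pixel rate sits more than ten times below the minimum clock, which is why it cannot be sent as-is.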

320×200 has no use for video (any more), it looks crappy on any decent sized monitor. This resolution is just sufficient for a control LCD which you never connect via HDMI. Normally this is a CMOS parallel or SPI interface, there is no need for fancy LVDS signalling.

If you want something DIP like with an ARM and an easy access to the peripherals you could use a Teensy module. There is an Arduino library for it.

I notice you use FORTH. This is an awesome solution to control and flexibility. Again, it takes more thinking than C, but the results are worth it.

Indeed, forth is a far more powerful language than ‘C’ and because it can be implemented or retargeted by a single person, it’ll never die out :-)

Ohhh FORTH! seems familiar to me

http://www.absolutelyautomation.com/articles/2016/05/02/may-forth-be-opto22-pac-and-linux

Good luck getting impedance controlled layouts for HDMI or USB within specified tolerances on a veroboard and 0.1″ pin spacing and DIP parts…

You use the cheapest MCU that gets the job done, period.

If the “job” is a small production run of 1000 boards, would you spend an extra few weeks of dev time for a chip that costs $1 less? How about risking programming like reusing the same memory for different variables at different times?

How about a “job” that’s likely to start at even smaller volumes and might maybe someday grow to larger quantity? Usually the best path involves getting started selling sooner with a viable plan to later reduce costs. By then, you actually know what the sales history looks like and maybe you can even forecast future sales and growth, so you’re making cost/benefit decisions based on actual information instead of sheer guesswork and wishful thinking.

There are a *lot* of difficult, time-consuming ways to use cheaper parts. I’ve personally done many of them. Almost all take expensive dev time and most add significant risk. Often that cost and risk greatly outweighs the actual savings you’ll reap from spending less on a cheaper part. Sometimes the cheapest parts are penny-wise and pound-foolish.

remember that it’s not cheaper because it’s cutting corners, it’s cheaper because the technology is simply better. it shouldn’t take extra time unless your engineer hasn’t ever worked with 32 bit before.

You are assuming that in the process of doing things the hard way you didn’t learn anything from the experience or gain something you can put on your resume.

For me, I have signed up for the high-risk assignments whenever I can and have benefited greatly from them. The best way to obsolete yourself in the tech sector is to stay complacent and keep on doing the same old thing that you have been doing for the last 10 years.

“How about risking programming like reusing the same memory for different variables at different times?”

Trash collection?

malloc() ?

Did I misunderstand what you’re talking about?

Do you declare all of your variables as static?

I think 32-bit is easier to learn on, if you are a total beginner.

I think 8 bit is good for moving from beginner to intermediate, since you have to learn more of the inner-workings. But ultimately, you go back to 32-bit and master that.

Honestly, the only reason I ever see for using an 8-bit chip is cost. Even then it’s only on a mass-produced scale. For most projects, engineering time is more valuable than the extra cost of a 32-bit chip. Doubly so if you end up redoing it when 8-bit turns out to be inadequate.

You need customers that pay for your precious engineering time?

And C or C++ code doesn’t mind the underlying hardware, only the speed.

And only very few projects are dependent on speed?

I do most of my MCU (musical) projects on AVR 8-bit even though I started my development career on ARM 32-bit.

Why? Because it’s simpler, 5 volt and customer friendly.

The code is the same, just cheaper and more simple.

Why is 5V an advantage? It’s more of a pain to find other 5V devices than it is to find 3.3V devices.

There are 5V ARM Cortex-M 32-bit chips too, if you really need it. And i’m not talking about 5V-tolerant IOs only. Just look at the NXP/Freescale Kinetis KE0x family, to give one example (2.7…5.5V).

What about differences in temperature ranges for reliable operation, and heat production from the MCU itself, is this worth considering?

Seems worth considering to me! Ruggedness and power consumption are pretty important criteria in portable applications. Have the 32-bit chips caught up to the 8-bitters in these regards like they apparently have in price?

Yes, modern 32-bit ARM microcontrollers are at least on par with 8-bit ones for power consumption, and some are far superior. MSP430 is probably still the king of ultra-low-power MCUs, especially when programmed in assembly. But many of the latest Cortex-M0+ parts have excellent low-power modes, and active/idle power similar to 8-bit processors while running code much faster, and you can sometimes leverage the faster performance to spend less time in active mode. Many have far more options to disable parts of the chip, like running code from RAM and completely powering down the flash memory. Some chips even have very innovative low-power features, like asynchronous DMA which can allow peripherals to automatically move data to/from memory while the CPU sleeps. The ability to run (at full speed) with only 1.8 volts is also common on many 32-bit parts, which you can leverage for power savings.

Yes, they have very much caught up. I agree with Paul that most new low-power 32-bit ARM chips are on par with or even better than the “holy grail” MSP430. It all depends on the application though: is it doing any heavy CPU lifting, maybe some AES encryption (think IoT security), what is the sleep/run ratio, what peripherals can save CPU time or reduce the number of wake-ups, how fast can the MCU wake up, etc.

MSP430 is still a nice start though, you can do much worse than that. However, most MSP430 series that can run from a coin cell seem to be stuck at 150-250uA/MHz, which is even higher than the current consumption of a 48+ MHz Cortex-M4 with FPU these days. And with such an ARM chip you get several times the RAM/flash, DMA and a large number of timers/peripherals.

The sleep current on most low-power 32-bit chips is still a bit higher than on 8/16-bit, but I think it will catch up. Some 8/16-bit chips advertise nA deep-sleep currents, but forget that any sane application will probably want an RTCC/WDT wake-up. At that point both series of chips are in the 0.5uA – 1uA range.
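The sleep/run trade-off discussed above comes down to simple arithmetic: average current is the active current weighted by the fraction of time spent awake, plus the sleep current for the rest. A rough sketch in C follows; the function name `average_current_ua` is invented for illustration, and any numbers fed into it should come from the real datasheets rather than from this thread.

```c
#include <assert.h>

/* Average current (in microamps) of a duty-cycled MCU.
 * active_ua : current drawn while the CPU is running
 * sleep_ua  : current drawn while asleep (including RTC/WDT)
 * active_ms : time spent awake in each period
 * period_ms : length of one wake/sleep period
 * Hypothetical helper; it ignores wake-up time and peripheral currents. */
double average_current_ua(double active_ua, double sleep_ua,
                          double active_ms, double period_ms)
{
    assert(period_ms > 0.0 && active_ms >= 0.0 && active_ms <= period_ms);
    double duty = active_ms / period_ms;   /* fraction of time awake */
    return active_ua * duty + sleep_ua * (1.0 - duty);
}
```

With purely made-up figures, a chip drawing 1600 uA for 10 ms out of every second with 0.5 uA sleep current averages about 16.5 uA, while one drawing 4800 uA for only 2 ms with 1 uA sleep current averages about 10.6 uA. That is the "faster chip, less time awake" argument in numbers, and it only holds if the job really does finish that much sooner.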

If he used a single-color (1 bit per pixel) “bitmap”, it would only need 1/24 of the 18 kB.

Then cycle the color* to make full use of the 24-bit RGB LEDs.

*Maybe in a strobe, pulsing, lava, fire or rainbow pattern.

If the hardware is not capable, fake it in software.
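A minimal sketch of that idea in C, assuming the 18 kB means 18 KiB of 3-byte pixels (6144 pixels, so 768 bytes at 1 bit per pixel). The names `rgb_t`, `pixel_color`, `PIXELS` and `MASK_BYTES` are invented for the example, and the MSB-first bit order is an arbitrary choice.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PIXELS     6144u            /* assumption: 18 KiB / 3 bytes per pixel */
#define MASK_BYTES (PIXELS / 8u)    /* 768 bytes at 1 bit per pixel           */

typedef struct { uint8_t r, g, b; } rgb_t;

/* Colour of pixel i: `on` where the mask bit is set, black elsewhere.
 * Bits are stored MSB first within each byte (an arbitrary choice). */
rgb_t pixel_color(const uint8_t *mask, size_t i, rgb_t on)
{
    assert(mask != NULL && i < PIXELS);
    int lit = (mask[i / 8] >> (7 - (i % 8))) & 1;
    return lit ? on : (rgb_t){0, 0, 0};
}
```

The pixels would be computed one at a time while streaming them out to the LEDs, so no full 24-bit frame buffer is ever held in RAM. Changing `on` between frames gives the strobe, pulse or rainbow effect.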

32-bit is the current standard and will probably continue to be the standard for the foreseeable future. Moving to 64-bit doesn’t make much sense in the non-MMU-based, non-cooperative-OS microcontroller world… But I’ve been wrong before.

Managing hardware complexity on a 32-bit micro is usually accomplished via the use of peripheral libraries (middleware) such as STM32Cube HAL, STM32’s SPL or the amazing libopencm3. These require some understanding of the peripheral and its registers, but not a complete understanding. E.g. to configure the baud rate on an STM32F4, simply specify the baud rate value in a struct. The alternative register-based C coding approach would require the user to figure out the fractional baud rate calculations him/herself. Which would be a total pain in the a**.

I get it. Register-based C coding on an 8-bit micro is way easier than on a 32-bit microcontroller. Those who got into microcontrollers with 8-bit micros and used a lot of register-based C code love it and find it hard to get their heads around a microcontroller without it. But register-based C coding is quickly becoming the new ‘assembly language’. Sure, it’s fundamental, and one probably ought to know a thing or two about it, but in most cases, 90% of the time, the average embedded programmer will be working at the higher level of abstraction: the peripheral library instead.

Total noobs to embedded programming or those that want to get things done even faster can move to an even higher level of abstraction with libraries like mbed or Arduino that are usually (but not always) built on top of the peripheral library.
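For the curious, the fractional baud rate calculation mentioned above is less scary than it sounds once written down. The sketch below assumes an STM32F4-style USART in 16x oversampling mode, where the divider is f_ck / (16 × baud) stored as a 12-bit mantissa plus a 4-bit fraction; the function name `usart_brr_over16` is made up, and the reference manual for the actual part remains the authority.

```c
#include <assert.h>
#include <stdint.h>

/* Baud rate register value for 16x oversampling (assumed register layout:
 * bits 15..4 = mantissa, bits 3..0 = fraction in sixteenths).
 * USARTDIV = f_ck / (16 * baud), so mantissa:fraction packed together is
 * simply f_ck / baud rounded to the nearest integer. */
uint32_t usart_brr_over16(uint32_t f_ck_hz, uint32_t baud)
{
    assert(baud > 0);
    return (f_ck_hz + baud / 2u) / baud;
}
```

For an 84 MHz peripheral clock and 115200 baud this gives 0x2D9, i.e. mantissa 45 and fraction 9/16, or a divider of 45.5625. A peripheral library does the same sum behind the struct field.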

8-bit will probably be around for a long time due to the fact that many people are already so familiar with them and would rather use the parts that they know and have invested time and effort in, but not due to any technical virtue.

I guess what I’m saying is that embedded systems development has changed radically in the last 15-20 years. If you are new to microcontrollers, my humble advice is to forget the ‘start first with the easy 8-bit then move on to the 32-bit’ adage. Just move straight to 32-bit.

Using 8-bit is sorta like watching TV in 2016. It is still a thing simply because older generations got used to it.

Start with something like mbed and a 32-bit ST-nucleo board. Once you master these libraries and start noticing their limitations, move on to the peripheral library level. Once you’re comfortable at the peripheral library level, start dabbling at the register-based C, but don’t feel that you absolutely have to master it right away. Chances are you’ll rarely need to do serious coding at that level anyway.

So many people choose controllers based on silly hardware considerations. The best choice for most projects is the controller you used on the last project. You know it, you know the toolchain, you can just bang out the next project. This is true primarily for one-offs, which are what Hackaday is all about. If you are into production, other factors come into play of course.

I hate PIC, I can endure AVR. I am fond of ARM, I am enchanted with the ESP8266. I see much more 32-bit in my future than 8-bit. ARM is so cheap, why use anything else? And this is twice as true with the ESP. But fundamentally, as long as gcc is up and running on it, I don’t care much what is down below.

Well, then tell me what cheaper-than-AVR ARM comes in DIP and can be programmed with four resistors on a parallel port under Linux.

An AVR can be used with just the chip and some connectors out of your junkbox; for an ARM I need an expensive programmer first and can’t roll my own.

Most if not all of the ARMs you can get have built-in bootloaders. All you need to program them is a serial cable like an FTDI one, and many of them can even boot over USB, so all you need is an old USB cable. And good luck finding a PC with a parallel port these days.

Linux or not, I don’t have any PC with a parallel port left. And DIP is similar. PC boards are so cheap these days, think e.g. OSHpark, that I do not want to use perf-board anyway. On the area a controller uses in DIP you can build the whole project, using modern components.

That’s a rather relative concept. Sure, if you throw around 30€ Arduinos by the fistful in your every project you may not care – but if one plans to do something simple with a $2-3 DIP AVR then ~$50 for a modest 5x10cm PCB (actually three, out of which you probably need just the ONE but you have to pay for three anyway) is nothing short of a princely sum and a king’s ransom. What should I get – a new 1TB HDD or a puny credit-card sized PCB…? Choices, choices…

The stm32f030/stm32f042 (with USB) both come in 20-pin ssop packages that can be easily soldered onto a 20-ssop to DIP adapter, or better yet you can build your own board.

https://pbs.twimg.com/media/CUeBfZPUwAAhE1e.jpg:large

The part costs just as much as the ATtiny parts, is 32-bit, runs at up to 48MHz, comes with 32KB flash and 6KB SRAM, and has all the usual suspects, i.e. ADC, USART, I2C, SPI, etc. The f042 also has USB device.

The part comes with a serial on-chip bootloader, and the USB-capable device (f042) also comes with a DFU USB bootloader. You can purchase an stlink debugger/programmer clone for $2-3 from aliexpress. The official one (non-isolated) costs $15. You can also use the STLink V2-1 on any of the nucleo-64 boards. It really doesn’t get any better.

I highly recommend using either the STM32CUBE HAL or libopencm3 with it however. Mbed is too bloated for 32KB of flash. It takes 12KB just to link in the mbed libs and use printf.

Your comment seems to suggest that you don’t know that most ARM chips have a built-in bootloader which allows you to upload either over serial (2 pins + GND) or even through USB using the DFU protocol.

Oh sigh. Yet again, the concept of selecting an embedded processor based upon the peripherals it provides is completely absent.

That’s because it is sorta irrelevant 90% of the time. Sure, there may be a time when I need to use a 5MSPS 12-bit ADC or a CAN-capable UART, but most of the time a generic 12-bit 0.5-1MSPS ADC and a simple UART capable of a 57600 baud rate will do.

Don’t get me wrong. The peripherals are important but it’s also crucial to have a competent CPU.

To become one with the chip, one must have programmed it by bit-banging pure machine code into it…

Oh! I get it! By banging your head against it, it becomes one with your forehead!

B^)

bit-banging? I hope you mean fat-fingering. With real toggle switches!!!

“The (relatively) unified and sophisticated toolchain available for programming ARM chips is a definite plus”

Maybe the ARM toolchain is somewhat unified, but that’s certainly not a plus compared to AVRs. avr-gcc has the -mmcu parameter; its ARM counterpart does not.

Actually I consider the toolchain to be one of the weakest spots of the ARM architecture. Dozens of slightly different peripherals for doing the same tasks (GPIO, UART, SPI, …). ARM, the company, has decided to go with complex, commercial IDEs as well as a webapp-like environment.

ARM puts emphasis on entire boards (distinct library for each board), but what if one wants to create a new board, like every hardware developer does? Then one finds almost no library support and has to start with bare hands.

MBED is very complex. Just toggling a pin using MBED maxes out at some 200 kHz, while the same task done AVR-style can achieve 5 MHz on the same chip (LPC1114 @ 48 MHz).

If one wants to actually take advantage of all these speed improvements over AVRs one has to start very very low level and mostly rewrite avr-libc. One has to go through these 1000+ page manuals page by page. Granted, it can be done, but ARM’s toolchain isn’t exactly helpful, except that it provides a compiler and linker (arm-none-eabi) at all.

Although I mostly agree with Mike, here’s my methodology:

Hackers, hobbyists and fast proof of concepts => 32bit copy/paste/modify code (Arduino)

Developing a tight profit margin commercial product, safety critical system, or learning how things work => Based on the design requirements… 16, 8 or even 4 bit, typically written in assembly.

lots of misunderstandings.

-mmcu for arm would be crazy, there are hundreds if not thousands of ARM ic variants and more coming all the time

gcc can’t maintain all that, and no one would want to wait for the next gcc release to use a new ic variant. It would be like asking gcc to have defines for every dell/hp/acer pc made and every possible configuration of memory and peripherals

you don’t have any interaction with ARM, ARM sells cpu cores to companies that build ICs

libraries follow the manufacturers and the ICs, you can make as many different boards as you like, if it is the same IC it is the same library

complaining that MBED is very complex and slow is like complaining that an arduino sketch is slow

Ah, “misunderstandings”. Are you sure you understood what’s going on yourself?

Asking for ARM -mmcu is actually more like having support for all the CPUs these Dells/HPs/Acers are using and that’s what gcc actually does.

ARM maintains arm-none-eabi and MBED, so one does have interaction with them. ARM does have the means to ask chip manufacturers to have unified interfaces for basic peripherals, they just happen to not do it.

And there is (intentionally!) no arm-libc, so MBED is all we have and could call a “library”.

A piece of cake on AVR, writing a bare-metal “Hello World” for the serial line, took months of learning on ARM. Others following up on similar ARM chips took several weeks, too. That’s why I call the library situation a weak spot. One won’t recognize this if one is into blinking a few LEDs, though.

Learning to program bare metal for serial doesn’t take months. I wrote a VGA terminal program for a new part in a few weeks, including doing 640×480 using DMA SPI, using nothing but the hardware reference manual, and wrote all the code myself (except the fonts). The compiled code is less than 7kB and uses nothing but the standard C library.

My interrupt driven serial code was done in a couple of hours from scratch.

https://hackaday.io/project/9992-low-cost-vga-terminal-module

arm-none-eabi knows about cpus and fpus, it doesn’t know about ICs

cmsis, but apart from the CPU peripherals that are common to all ICs, who would use something with the overhead of having to support all manner of different peripherals from different manufacturers?

why use mbed in the first place? ignore that whatever IC you use contains an ARM core. if you use an IC from say ST, go to ST and download their library; it supports their ICs and peripherals just like Atmel supports AVR

“arm-none-eabi knows about cpus and fpus, it doesn’t know about ICs”

Yes, that’s exactly the problem and the regression compared to AVRs. :-)

Reasons to use MBED include:

– Projects which want to run on many boards of various MCU vendors, not just a single one. MBED at least collects all the CMSIS flavors (except Atmel, IIRC).

– Developers who don’t want to start from scratch on each new board (and different MCU vendor).

– Having some visible/measurable output without weeks of coding. Without being able to at least toggle a pin, hacking an MCU is pretty much stepping in the dark. One doesn’t even know whether it runs at all.

(Off topic: next time, please hit the “Reply” link before answering.)

you are comparing apples to bananas, AVR is a family of mcus with the same instruction set and similar peripherals from a single manufacturer.

ARM is a multitude of different MCUs from a multitude of manufacturers with different sets and implementations of peripherals. They all share an instruction set and a few basic peripherals (basically interrupt controller and systick) but the rest can be totally different from manufacturer to manufacturer

a fairer comparison would be something like ST to Atmel and STM32xxx to the AVR family

and in that case you download ST’s tools (gcc+eclipse) and ST’s library that cover the whole family and you have blinky and “hello world” running in minutes

Good luck explaining this apple-banana thing to hackers! Wherever one reads about ARMs, ARM is ARM :-)

Can ANYONE describe how to pronounce that last name?

Mike “I’d like to buy a vowel” Szczys?

It sort of sounds like stish…