For her Hackaday Prize entry, [ThunderSqueak] is building an artificial intelligence. P.A.L., the Self-Programming AI Robot, builds on the intelligence displayed by Amazon’s Alexa, Apple’s Siri, and whatever the Google thing is called, to create a robot that’s able to learn from its environment, track objects, judge distances, and perform simple tasks.

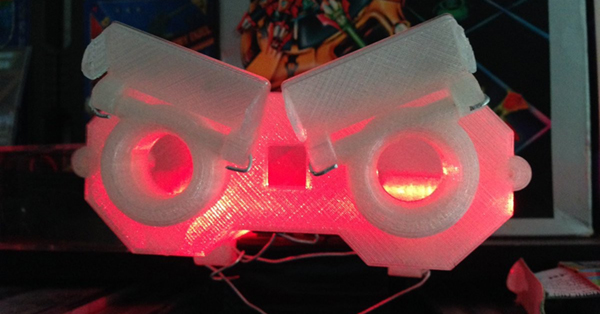

As with any robotic intelligence, the first question that comes to mind is, ‘what does it look like?’ The answer here is, ‘a little bit like Johnny Five.’ [ThunderSqueak] has designed a robotic chassis using treads for locomotion and a head that can emote by moving its eyebrows. Those treads are not a trivial engineering task – the tracks are 3D printed and bolted onto a chain – and building them has been a very, very annoying part of the build.

But no advanced intelligent robot is based on how it moves. The real trick here is the software, and for this [ThunderSqueak] has a few tricks up her sleeve. She’s doing voice recognition through a microcontroller, correlating phonemes to the spectral signature without using much power.
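To give a rough feel for what matching a sound against a spectral signature can mean, here is a minimal sketch in Python. It is not [ThunderSqueak]'s code. It assumes a Goertzel filter (a common low-cost way to measure energy at a few chosen frequencies on small processors) and a cosine-similarity match against stored templates. The function names, the band list, and the two vowel templates with their rough formant frequencies are all hypothetical, chosen only for illustration.

```python
import math

def goertzel_power(samples, sample_rate, freq):
    """Signal power near `freq`, computed with the Goertzel algorithm."""
    n = len(samples)
    k = round(n * freq / sample_rate)          # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev * s_prev + s_prev2 * s_prev2 - coeff * s_prev * s_prev2

def signature(samples, sample_rate, bands):
    """Unit-length vector of band powers: the 'spectral signature'."""
    powers = [goertzel_power(samples, sample_rate, f) for f in bands]
    norm = math.sqrt(sum(p * p for p in powers)) or 1.0
    return [p / norm for p in powers]

def classify(sig, templates):
    """Name of the template with the highest cosine similarity to `sig`."""
    return max(templates,
               key=lambda name: sum(a * b for a, b in zip(sig, templates[name])))

def tone(freqs, sample_rate=8000, n=400):
    """Synthetic test signal: a sum of sine waves (stand-in for real audio)."""
    return [sum(math.sin(2 * math.pi * f * i / sample_rate) for f in freqs)
            for i in range(n)]

# Hypothetical bands and templates; rough vowel formants, illustration only.
BANDS = [300, 700, 1100, 2300]
TEMPLATES = {
    "ee": signature(tone([300, 2300]), 8000, BANDS),
    "ah": signature(tone([700, 1100]), 8000, BANDS),
}

heard = signature(tone([300, 2300]), 8000, BANDS)
print(classify(heard, TEMPLATES))
```

Measuring only a handful of bands instead of running a full FFT is one way to keep the compute cost, and therefore the power draw, low. Whether P.A.L. does it this way is not stated in the project description.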

The purpose of P.A.L. isn’t to have a conversation with a robotic friend in a weird 80s escapade. The purpose of P.A.L. is to build a machine that can learn from its mistakes and learn just a little bit about its environment. This is where the really cool stuff happens in artificial intelligence, and it makes for an excellent entry for the Hackaday Prize.

I want to build an AI robot, let’s focus on how it looks. *facepalm*

It might not be as crazy as it sounds. Plenty of more serious projects use a similar approach, under the assumption that the shape of a body greatly affects how an intelligence associated with it evolves. Tool use probably isn’t that relevant to an AI with no arms or legs, and if it can’t interact beyond talking then surely that would affect what it values.

Sure, but it would be much simpler to build the robot’s body in a VR environment. That way changes are easy and instantaneous. The hard part is building the AI in software; the hardware is secondary (still hard, but it has been done before, whereas building an AI such as the one described hasn’t been fully realized). The body in VR can be modeled in such a way that building the hardware is feasible. For example, this also allows for sensor input which is hard or even unobtainable in real life, but simplifies the process greatly: the software can focus on handling the perfect input and then later be adapted to work with real-life input. Doing these two steps as one makes for a very tough project.

yet that is exactly what is being done on almost all serious university robots, simulation only gets you so far.

you are right that software is by far the most important part, but your original sentiment was that starting with the body wasn’t the best idea. that doesn’t seem to be true; it might even be necessary, be it in a simulation or not.

I remember some of the software we used to learn about machine learning. Most were simplified 2D abstractions but some did function in 3D; some of them were based around a generic blob with code attached and others actually had functioning bodies. Technically I think it was called artificial life, but that might just have been some of the software.

anyway the point is that the simulations with functioning bodies usually showed far more complexity faster and with less error than the same or similar code put into an abstracted entity.

AI can be developed, with a lot of difficulty. Complete VR world: never. It is essential to have a body interacting with the real world for proper AI development. Ask yourself this: how intelligent would you be as a brain sitting in a jar for your whole life?

How do you know you haven’t been spending your life as a brain sitting in a jar?

Very intelligent. Feed me information from the Internet and give me VR simulations, and I can learn quite a lot. The brain doesn’t need the body if fed information in a similar manner. And please, stop using the fucking “brain in a jar” argument. It has no logical basis whatsoever.

Any idea who offers professional Virtual Reality environment simulation software at a price cheap enough for personal/hobby use?

Gotcha!

Let’s not forget this project is funded from @[ThunderSqueak]’s personal financial resources, which are… let’s see, most of the time oscillating around 0 USD, with most of the parts recovered from discarded equipment and all investments exchanged for 3D printer filament.

So no more jokes about her work.

Her original hardware approach is as great as the 3d-printed enclosure.

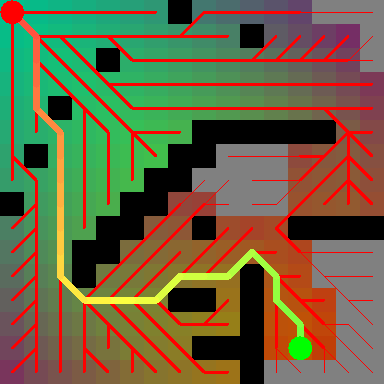

Did anyone bother to follow the schematics and read those project logs? Did anyone understand her AI-related documentation?

Or is it simpler to joke about how the robot looks?

ROS.

The body is a changing thing, and is nothing more than a platform to use. While waiting for parts to come in, I am focusing on the parts that I can create with what I have on hand. That way I always feel like I am making progress on the build. :) I have also been working on the software side of things in the background. Work just has me so busy right now that I have not had time to write up the logs for those tasks. Sure, the form is important, as it will dictate the ways in which PAL can interact with its environment, deciding this sort of thing early on helps me think about the underlying AI code that needs to be implemented. Whatever the case, it is a build I am having a lot of fun with :D

the funny thing is a “smart” body can make dumb A.I. code look and act smart.

body and mind are more connected than just being a platform.

I saw this project page the other day and like the idea. It reminded me of an article which could maybe help with the project:

https://www.damninteresting.com/on-the-origin-of-circuits/

There are a lot of comments on there which might also be helpful to read.

Here’s the Wikipedia article in case it helps:

https://en.wikipedia.org/wiki/Evolvable_hardware

Number 5 is alive!

Indeed Janiel Dackson