It sounds like something out of a sci-fi or horror movie: people suffering from complete locked-in state (CLIS) have lost all motor control, but their brains are otherwise functioning normally. This can result from spinal cord injuries or amyotrophic lateral sclerosis (ALS). Patients who are only partially locked in can often blink to signal yes or no. CLIS patients don’t even have this option. So researchers are trying to literally read their minds.

Neuroelectrical technologies, like the EEG, haven’t been successful so far, so the scientists took another tack: using near-infrared light to detect the oxygenation of blood in the forehead. The results are promising, but we’re not there yet. The system detected answers correctly during training sessions about 70% of the time, where the upper bound for random chance is around 65% — varying from trial to trial. This may not seem overwhelmingly significant, but repeating the question many times can help improve confidence in the answer, and these are people with no means of communicating with the outside world. Anything is better than nothing?
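To get a feel for how repetition helps, here is a minimal sketch of majority voting. It assumes each repetition of a question is an independent trial that is classified correctly with the same probability (0.7, taken from the roughly 70% figure above). The paper does not necessarily combine answers this way, and the function name is made up for illustration.

```python
from math import comb


def majority_correct_probability(p: float, n: int) -> float:
    """Probability that most of n independent answers are classified correctly.

    p is the assumed per-trial accuracy; n must be odd so a tie is impossible.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of repetitions to avoid ties")
    needed = n // 2 + 1  # smallest number of correct trials that forms a majority
    # Sum the binomial probabilities of getting `needed` or more correct trials.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(needed, n + 1)
    )


if __name__ == "__main__":
    for repetitions in (1, 3, 5, 9, 15):
        prob = majority_correct_probability(0.7, repetitions)
        print(f"{repetitions:2d} repetitions: {prob:.3f}")
```

Under those assumptions the chance of a correct majority climbs as the question is repeated. Real trials are unlikely to be fully independent, so treat the numbers as optimistic.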

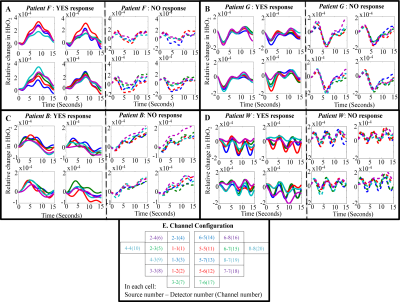

It’s noteworthy that the blood oxygen curves over time vary significantly from patient to patient, but seem roughly consistent within a single patient. Some people simply have patterns that are easier to read. You can see all the data in the paper.

They go into the methodology as well, which is not straightforward either. How would you design a test for a person when you can’t even tell whether they are awake, for instance? They ask complementary questions (“Paris is the capital of France”, “Berlin is the capital of Germany”, “Paris is the capital of Germany”, and “Berlin is the capital of France”) to be absolutely sure they’re getting the classifications right.

It’s interesting science, and for a good cause: improving the quality of life for people who have lost all contact with their bodies. (Most of whom answered “yes” to the statement “I am happy.” Food for thought.)

Via Science-Based Medicine, and thanks to [gippgig] for the unintentional tip! Photo from the Wyss Center, one of the research institutes involved in the study.

Yes, but we all know the real target for this technology will be gaming.

If the monetary reward of the gaming industry makes research like this possible, great!

Sadly it seems that gaming rides the back of other research, and always has. Even now better GPUs are being driven by the monetary returns from applications other than games. If gaming was a primary driver we would have had usable VR before the year 2000, but we still don’t have VR in every home because parts of it still suck.

What’s bigger than gaming for 3D? I would have said simulation back in the early days of hardware accelerated 3D, but not anymore. You have the entire console marketplace plus gaming PCs. For a company like NVidia researching their next xx80 video card, that card’s purpose is gaming. For tablets and smartphones, the 3D chip is for gaming.

We didn’t have useable VR back then because EVERYTHING was too slow. CPU, GPU, sensors for input, RAM. Another big issue was the expense of LCD. It took the smartphone revolution to bring high-res small screens into the market (cheap!), which caused Oculus to take another look at bringing it back. You could say VR was reliant on other tech, but VR is not all of gaming.

> We didn’t have useable VR back then because EVERYTHING was too slow. CPU, GPU, sensors for input, RAM

Gaming PCs were used to drive those HMDs, so they suffered from the same hardware limit. VR added some restrictions (263×230 resolution vs 320×240, x2 performance requirement for stereoscopic rendering, balanced with the lower resolution), but the latency was not that bad. The Forte VFX1 had a dedicated DSP for that for example and software was closer to the metal at those times, providing inherently less latency.

If you compare VR to PC gaming today you’ll see the same gap (1080×1200 vs 1080p or more, x3 performance requirement for stereoscopy/undistortion) and yet it did succeed, even with the DK1 and its much lower resolution (640×800) and higher latency (30-50 ms).

I think the reason why VR failed in the consumer market until now is because the FOV was not immersive (~50° at most when 80° or more is required for immersion) and content was not created for VR, it was just ports of existing games that didn’t add much to the 2D experience.

I don’t know what world you are living in but it sure isn’t the same as mine. BTW there was usable VR aimed at consumers before…

There was terrible, awful, laggy, low-res VR before. It cost thousands of currency units and was pretty terrible. It was before 3D rendering cards were available (well, outside of places like Evans & Sutherland) so was stuck with 486-era PC hardware, just about capable of rendering a few textured triangles at 15fps.

I remember the first coming of VR. It floated on hype alone, really. That and curiosity I suppose.

I did use a primitive VR setup in a branch of Maplin, running Doom with a headset. It was fantastic, amazing at the time, but then there was nothing better to compare it to. But there’s no chance anyone apart from game-obsessed millionaires would’ve shelled out for it. You’d have got bored of it after a few hours.

It was a proof of principle. Hype, curiosity, and bloody-mindedness on the developers’ part. It could never have been a success.

But now mobile phone technology, hi-res displays and motion sensors, as well as super-high-power CPU / GPU combos, mean VR is inevitable. Just a case with lenses to strap your phone to your head will work.

Motion sensors are an interesting market, actually. Mobile phones all have them, but they don’t really use them. There’s no real need for them. I wonder why they include them? AFAIK the Nintendo Wii was what created the first mass market demand for IMUs. Then from there to phones, for some reason.

I don’t get that actually, I can’t think of anyone who’s glad to have motion trackers on their phone. Maybe it’s for some future plan to spy on us. Maybe militaries use lots of them, which makes them cheap. Maybe it’s aliens.

@Greenaum

> There was terrible, awful, laggy, low-res VR before

It’s still very low-res today compared to standard gaming, 1080 pixels over a 90° FOV vs 1920 pixels over a ~40° FOV. That’s 1/4 the resolution per degree, even worse than in the 90s, you can discern the subpixels and see the screen door effect.
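For anyone who wants to check the arithmetic, here is a quick sketch of the pixels-per-degree comparison. It treats pixel density as uniform across the field of view, which ignores lens distortion, and the function name is just illustrative.

```python
def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average horizontal pixel density, assuming pixels are spread evenly over the FOV."""
    return horizontal_pixels / fov_degrees


# Figures quoted in the comment: a headset eye panel versus a 1080p monitor.
headset = pixels_per_degree(1080, 90)
monitor = pixels_per_degree(1920, 40)

print(f"headset: {headset:.0f} px/deg")
print(f"monitor: {monitor:.0f} px/deg")
print(f"ratio:   {headset / monitor:.2f}")
```

With those inputs the headset comes out at 12 pixels per degree against 48 for the monitor, which is the 1/4 ratio mentioned.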

And the performance impact is x3, so graphically it’s way less detailed than gaming on monitors.

> It cost thousands of currency units

Consumer headsets in the 90s were under $1000 ($799 for the Virtual IO i-glasses, $995 for the Forte VFX1) and at the time PCs were comparatively much more expensive than today. You can buy a VR ready PC for $500 today; at the time an entry-level gaming PC was at least $1500, $3000 or more for a good Pentium.

> But there’s no chance anyone apart from game-obsessed millionaires would’ve shelled out for it

You may not have known this period, but at the time you could easily find HMDs on the shelves; consumer headsets were reviewed in all the major magazines dedicated to gaming, and not as luxury devices.

> I can’t think of anyone who’s glad to have motion trackers on their phone.

Magnetometer : used as a compass, used by Google Maps and similar apps

Accelerometer : used to know the orientation between landscape/portrait, also used in cameras, used for gestures (shake to undo on the iPhone for example)

Gyroscope : added precision to the accelerometer, also used for racing, shooting games, etc. and older AR apps (Peak AR, Wikitude) before they became popular again recently (Pokemon Go)

And flying drones into the lounge rooms of Mexico / Middle East, anyone that disagrees.

It is interesting to see that the graphs also show an inversion of the curves between Yes and No responses. This means that physiologically yes and no are also opposites. As an engineer, I always thought the brain signals would still be similar and not opposite, as the brain tries to decipher and is excited, driving the signal. It means some areas of the brain evolve counter signals, which are also negative in the vector vs magnitude sense as well.

Actually, they are not at all sensing the classical brain signals that you’re thinking about.

“Most of whom answered “yes” to the statement “I am happy.” Food for thought”

Well, if I was living in what would be the worst possible hell and suddenly I gained even the smallest relief, I would be overcome with joy at least temporarily.

I also can’t imagine what such a condition would do to your sanity.

Chew some more on that food for thought. Because you didn’t get it.

I guess I need to chew some more as well, because I have the same interpretation as Junk Collector.

Or do you mean that most of us are biased by the mental picture of a person lying in some bed, without stopping to think about what the experience is for the locked-in person? Because I can definitely imagine you have loads of time to re-evaluate all the events in your life, without being micromanaged with the economy’s business therapy (“so you have concrete specific problems? like housing/education/… here is our solution to your problem: forget about your real problems, here is our proposed middle-problem: a lack of money, and now you see you should work to solve our problems in return for money, not that it will solve our real problems…”, just keep suggesting to people to push new problems on their personal problem-stack so that the original true real problems no longer appear on the top of the stack, that is, until they have all the time in the world and suddenly their original problems and goals re-emerge at the top of the stack! “crisis” as some call it, the healthy process of rediscovering your goals and troubles in life)

I can also imagine locked-in state to be quite dreamlike (because just as during dreams, our brains are rather awake, but lack of interactivity decouples your experience from the outside world)… So while it might be fun in the oneironaut sense, it is like reporting that addicts in some druggy squat, right after consuming another hit of heroin, are in fact leading surprisingly happy lives…

The photo shows hair. This just CANNOT work with hair. Where did you get the photo from?

EEG requires wet skin contact. NIR sensors require skin contact.

EEG requires a conductive path to the skin. Most systems I’ve used these days have electrodes that sit above the skin in the cap, but use conductive gel to create a path through the hair to the skin. They work fine. Not sure about the specific system the researchers used, but it’s pretty easy to get fNIRS optodes through the hair to get skin contact, and they are frequently designed specifically to best go through the hair, using a brush type optode like this paper: https://www.ncbi.nlm.nih.gov/pubmed/22567582

“(Most of whom answered “yes” to the statement “I am happy.” Food for thought.)”

After a prolonged period of locked-in state, I predict most patients would agree with “I am happy” when they come to realize people are trying to establish contact in a fashion to which the patient can somehow respond. In the local newspapers here this was reported with an interpretation along the lines of “as amazing as it sounds, locked-in people are having happy lives”… which I find a rather perverse interpretation of “I am happy” in the context of the patient probably realizing people are finally establishing *some* contact after all. But hey, feel-good news sells better I guess, and now relatives and friends can finally continue working, paying taxes and consuming (whatever products were advertised in the newspaper)…

I’m hoping those that reply with “I am happy” do so because the efforts of those around them to communicate have been successful. And not because “If I say no, they might euthanize me”.

I have a friend who was in a cycling accident, he was in a (medically induced) coma for 4 weeks. He said he thought he was in hell and could hear and understand those around him but not respond; even though in his head he was screaming (he did successfully identify those who had visited him and did not mention any that hadn’t).

It does indeed sound like hell.