Every semester at one of [Bruce Land]’s electronics labs at Cornell, students team up and pitch a few ideas on what they’d like to build for the final project. Invariably, the students will pick what they think is cool. The only thing we know about [Ian], [Joval] and [Balazs] is that one of them is a synth head. How do we know this? They built a programmable, sequenced, wavetable synthesizer for their final project in ECE4760.

First things first: what’s a wavetable synthesizer? It’s not adding, subtracting, and modulating sine, triangle, and square waves. That, we assume, is the domain of the analog senior lab. A wavetable synth isn’t FM synthesis either, where one oscillator modulates the frequency of another. Wavetable synthesis is simply playing a single stored waveform, one arbitrary cycle, at different speeds to get different pitches. It was popular in the 80s and 90s, so it makes for a great application of modern microcontrollers.
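For the curious, here is a minimal sketch of that "one waveform, different speeds" idea in Python. It is not the students' code: the table size, sample rate, and the particular waveform are assumptions made for illustration, and the lookup uses the nearest stored sample rather than any interpolation.

```python
import math

TABLE_SIZE = 256      # assumed table length, not from the project
SAMPLE_RATE = 44100   # assumed output sample rate in Hz

# One cycle of an arbitrary waveform: a sine plus a bit of third harmonic.
wavetable = [
    math.sin(2 * math.pi * i / TABLE_SIZE)
    + 0.3 * math.sin(2 * math.pi * 3 * i / TABLE_SIZE)
    for i in range(TABLE_SIZE)
]

def render(freq_hz, num_samples):
    """Read the table at a speed set by freq_hz (truncating lookup)."""
    step = freq_hz * TABLE_SIZE / SAMPLE_RATE  # table entries per output sample
    phase = 0.0
    out = []
    for _ in range(num_samples):
        out.append(wavetable[int(phase) % TABLE_SIZE])
        phase = (phase + step) % TABLE_SIZE    # wrap around at the end of the cycle
    return out
```

Doubling `freq_hz` doubles `step`, so the same table is read twice as fast and the pitch goes up an octave.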

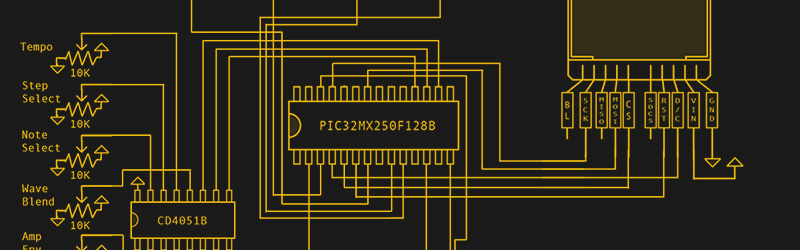

The difficult part of the build was, of course, getting waveforms out of a microcontroller, mixing them, and modulating them. This is a lab course, so a few of the techniques learned earlier in the semester when playing with DTMF tones came in very useful. The microcontroller used in the project is a PIC32, and does all the arithmetic in 32-bit fixed point. Even though the final audio output is at 12-bit resolution, the difference between doing the math at 16-bit and 32-bit was obvious.
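One place where word size commonly matters in a design like this is the phase accumulator that steps through the waveform. The sketch below compares a 16-bit and a 32-bit accumulator and maps a sample to a 12-bit output code. Whether this is the effect the team actually heard is not stated in the write-up; the sample rate, table size, and function names are all assumptions.

```python
SAMPLE_RATE = 44100   # assumed sample rate in Hz
TABLE_BITS = 8        # assumed 256-entry wavetable

def phase_increment(freq_hz, acc_bits):
    """Integer added to the accumulator every sample for a given pitch."""
    return round(freq_hz * (1 << acc_bits) / SAMPLE_RATE)

def actual_freq(freq_hz, acc_bits):
    """Pitch really produced once the increment is rounded to an integer."""
    return phase_increment(freq_hz, acc_bits) * SAMPLE_RATE / (1 << acc_bits)

def table_index(phase, acc_bits):
    """Top TABLE_BITS bits of the wrapped accumulator select the table entry."""
    wrapped = phase & ((1 << acc_bits) - 1)
    return wrapped >> (acc_bits - TABLE_BITS)

def to_dac12(sample):
    """Map a sample in [-1.0, 1.0] to an unsigned 12-bit code (0..4095)."""
    code = int(round((sample + 1.0) * 0.5 * 4095))
    return max(0, min(4095, code))

if __name__ == "__main__":
    for bits in (16, 32):
        print(bits, "bit accumulator, 440 Hz requested:", actual_freq(440.0, bits))
```

With these assumed numbers the 16-bit accumulator can only hit pitches in steps of about 0.67 Hz, while the 32-bit one is fine-grained enough that the rounding error is negligible, even though both end up feeding the same 12-bit output.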

A synthesizer isn’t useful unless it has a user interface of some kind, and for this the guys turned to a small TFT display, a few pots, and a couple of buttons. This is a complete GUI to set all the parameters, waveforms, tempo, and notes played by the sequencer. From the video of the project (below), this thing sounds pretty good for a machine that generates bleeps and bloops.

I’ve got tentative plans to do an FPGA FM synth commanded over MIDI for a class. MIDI is actually pretty reasonable as an interface: serial 31.25kbaud, opto-isolated with a few resistors, small commands made of a handful of bytes, free to ignore unsupported commands.
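To show how small those commands are, here is a rough Python sketch of a receiver that picks Note On and Note Off out of a raw byte stream and skips everything else. The function names are made up for this example, and it only covers the parts of MIDI needed for notes.

```python
def note_to_freq(note):
    """Equal-tempered pitch in Hz for a MIDI note number (69 = A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12)

def parse_notes(stream):
    """Return a list of ('on'|'off', channel, note, velocity) tuples.

    Anything that is not Note On / Note Off is silently skipped.
    """
    events = []
    status = None   # last channel status byte seen (for running status)
    data = []
    for b in stream:
        if b >= 0xF8:
            continue                 # real-time bytes can appear anywhere
        if b & 0x80:
            # New status byte; system messages (0xF0-0xF7) cancel running status.
            status = b if b < 0xF0 else None
            data = []
            continue
        if status is None:
            continue                 # data byte we have no use for (e.g. SysEx)
        data.append(b)
        kind = status & 0xF0
        needed = 1 if kind in (0xC0, 0xD0) else 2
        if len(data) == needed:
            if kind == 0x90 and data[1] > 0:
                events.append(("on", status & 0x0F, data[0], data[1]))
            elif kind in (0x80, 0x90):
                # Note On with velocity 0 is treated as Note Off.
                events.append(("off", status & 0x0F, data[0], data[1]))
            data = []                # keep status so running status works
    return events
```

A Note On is three bytes: a status byte with the channel in the low nibble, then note number and velocity. At 31.25 kbaud with one start and one stop bit per byte, that is just under a millisecond on the wire.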

Actually there were two different “definitions” of the term “wavetable synthesis”. Apart from sample players, which are now commonly called “romplers”, the Waldorf company developed morphing algorithms addressing several different sound-producing methods. That was back in the 90s, and AFAIK the common synthesizer market does not offer those features as specifically as Waldorf did. The concept was kind of this: pick a sound and define a curve for every single parameter it contains. Then, while playing, you can address every possible position on your morphing curve. You could use steps instead of curves and define what we nowadays call “tweening” methods, aka interpolation. It’s indeed a bit like CSS3 animations and transitions, just for sound. The underlying sound-producing engine employed any of the common synthesis principles: additive, subtractive, FM and later on samples. It was not merely a concept for core sound production; it was all about modifying such sounds in the time domain.

The audible result was that of an always changing sound. This might be hard to integrate in a pop song production, which may be one of the reasons this concept never reached a considerable market share.
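As a rough illustration of the tweening idea (and not Waldorf's actual algorithm), here is a Python sketch that follows a piecewise-linear curve over time and uses its value to blend between two stored waveforms. The waveforms, table length, and function names are assumptions for the example.

```python
import math

N = 64  # assumed table length

sine = [math.sin(2 * math.pi * i / N) for i in range(N)]
square = [1.0 if i < N // 2 else -1.0 for i in range(N)]

def lerp(a, b, t):
    """Linear interpolation: a at t=0, b at t=1."""
    return a + (b - a) * t

def morph(wave_a, wave_b, t):
    """Blend two equal-length waveforms sample by sample."""
    return [lerp(a, b, t) for a, b in zip(wave_a, wave_b)]

def curve_value(keyframes, time):
    """Piecewise-linear curve through (time, value) pairs sorted by time."""
    if time <= keyframes[0][0]:
        return keyframes[0][1]
    for (t0, v0), (t1, v1) in zip(keyframes, keyframes[1:]):
        if time <= t1:
            return lerp(v0, v1, (time - t0) / (t1 - t0))
    return keyframes[-1][1]

# Hypothetical morph curve: pure sine at 0 s, fully square by 2 s.
curve = [(0.0, 0.0), (2.0, 1.0)]
halfway = morph(sine, square, curve_value(curve, 1.0))
```

Replacing `lerp` inside `curve_value` with a function that returns `v0` until `time` reaches `t1` would give the stepped variant instead of a smooth curve.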

Yeah, yeah, Grandpa is telling war stories again…

I like how simple the schematic is, would look great as a Manhattan construction design.

Also, GO PATRIOTS!!!