One way to run a compute-intensive neural network on a hack has been to put a decent laptop onboard. But wouldn’t it be great if you could go smaller and cheaper by using a phone instead? If your neural network was written using Google’s TensorFlow framework then you’ve had the option of using TensorFlow Mobile, but it doesn’t use any of the phone’s accelerated hardware, and so it might not have been fast enough.

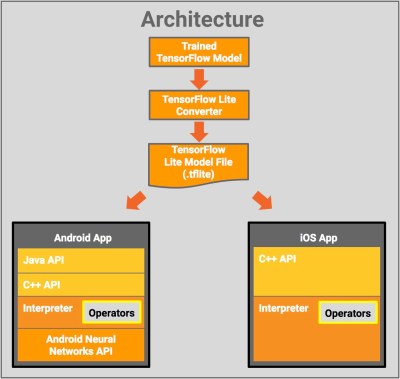

Google has just released a new solution, the developer preview of TensorFlow Lite for iOS and Android, and announced plans to support Raspberry Pi 3. On Android, the bottom layer is the Android Neural Networks API, which makes use of the phone’s DSP, GPU and/or any other specialized hardware to speed up computations. Failing that, it falls back on the CPU.

Currently, fewer operators are supported than with TensorFlow Mobile, but more will be added. (Most of what you do in TensorFlow is done through operators, or ops. See our introduction to TensorFlow article if you need a refresher on how TensorFlow works.) The Lite version is intended to be the successor to Mobile. As with Mobile, you’d only do inference on the device. That means you’d train the neural network elsewhere, perhaps on a GPU-rich desktop or on a GPU farm over the network, and then make use of the trained network on your device.
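To make the train-elsewhere, infer-on-device split concrete, here’s a minimal sketch in plain Python. It does not use TensorFlow Lite or any of its APIs. The JSON layout, the function names and the tiny XOR network with hand-picked weights are all assumptions made up for illustration; in practice the weights would come out of a real training run and be shipped in the framework’s own model format.

```python
import json

# Stand-in for a model file produced by training elsewhere.
# Hypothetical format: the weights are hand-picked so the network computes XOR.
TRAINED_MODEL_JSON = """
{
  "hidden": {"weights": [[1.0, 1.0], [1.0, 1.0]], "biases": [0.0, -1.0]},
  "output": {"weights": [[1.0, -2.0]], "biases": [0.0]}
}
"""


def relu(x):
    """Rectified linear activation."""
    return x if x > 0.0 else 0.0


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each row of weights feeds one neuron."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


def load_model(text):
    """The device only reads the frozen weights; it never updates them."""
    return json.loads(text)


def infer(model, inputs):
    """Forward pass only: no gradients, no training loop."""
    hidden = dense(inputs, model["hidden"]["weights"],
                   model["hidden"]["biases"], relu)
    return dense(hidden, model["output"]["weights"],
                 model["output"]["biases"])


if __name__ == "__main__":
    model = load_model(TRAINED_MODEL_JSON)
    for a in (0.0, 1.0):
        for b in (0.0, 1.0):
            print(a, b, infer(model, [a, b]))
```

The point of the sketch is that the device-side code only needs to load fixed weights and run a forward pass, which is why an inference-only runtime can be much smaller than the full training framework.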

What are we envisioning here? How about replacing the MacBook Pro on the self-driving RC cars we’ve talked about with a much smaller, lighter and less power-hungry Android phone? The phone even has a camera and an IMU built-in, though you’d need a way to talk to the rest of the hardware in lieu of GPIO.

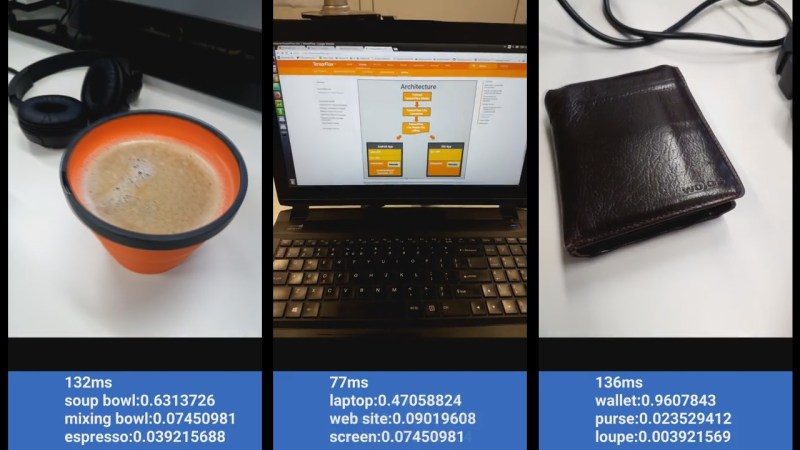

You can try out TensorFlow Lite fairly easily by going to their GitHub and downloading a pre-built binary. We suspect that’s what was done to produce the first of the demonstration videos below.

Assuming the I/O bandwidth is fast enough, there’s always the Google IOIO for… I/O.

Or, if your application can tolerate the latency, you can also use the Wi-Fi/Bluetooth radios integrated in the phone.

Why bother offering this for iOS, though? CoreML imports and runs models generated by TensorFlow and many others, is easy to use, and takes advantage of hardware access in ways third party developers can’t. If efficiency and performance are truly a concern, Google’s offering is a step backward in its attempt to be platform-agnostic. It’s like offering a slightly skinnier Java environment and claiming it makes any sense at all as an “efficient” alternative to native iOS apps. It’s just slightly less inefficient. “Look! Now you only have to light 1.5 barrels of oil on fire instead of 2 to run your flashlight! See? We’re an efficient alternative to a AA battery!”

It’s not much additional work to offer it on iOS, because all the optimized kernels for ARM already exist. That being said, we plan on continuing to improve our support for each platform we support, including any improvements to those APIs. There are several acceleration frameworks for ML on iOS already. Many models map just fine to CPU, and the additional latency to start up an accelerator may not be worth it. There are also the Metal ML primitives for using GPUs, and then there is CoreML.

For GPIO access on Android, there’s Android Things: https://developer.android.com/things/index.html

Good point. I wonder how well TensorFlow Lite runs on those?

This is awesome! Steven, how long might it take for them to release TensorFlow for Raspberry Pi 3? Has Google given any estimate? I am really excited to use it.

David


David, I don’t know about the schedule for TensorFlow for Raspberry Pi 3 that Google’s talking about here but you can already install TensorFlow on the Pi 3. I did it a long time ago for TensorFlow 1.1 using the instructions here https://github.com/samjabrahams/tensorflow-on-raspberry-pi. If you want a more recent version of TensorFlow then you might try compiling the latest from scratch — perhaps these instructions will help with that https://github.com/samjabrahams/tensorflow-on-raspberry-pi/blob/master/GUIDE.md. If you want to see it in action on the Pi then I’ve used it for object recognition here http://hackaday.com/2017/06/14/diy-raspberry-neural-network-sees-all-recognizes-some/.

I don’t think the last sentence is fair to TensorFlow. Torch has been around for ~15 years compared to the 3 of TF. You’d expect TF to catch up in terms of performance in the future.

dixit


This is really amazing for everyone.

This is really good