Some friends of mine are designing a new board around the STM32F103 microcontroller, the commodity ARM chip that you’ll find in numerous projects and on plenty of development boards. When the time came to order the parts for the prototype, they were surprised to find that the usual distributors had none of these chips in stock and, more surprisingly, that even the Chinese pin-compatible clones couldn’t be found. The astute among you may by now have guessed that the culprit behind such a commodity part’s curious lack of availability is the global semiconductor shortage.

A perfect storm of unintended political consequences, climate-related crises throttling Taiwanese chip foundries and shutting down those in the USA, and faulty pandemic recovery planning has left the chipmakers unable to keep up with demand from industries on the rebound from their COVID-induced slump. The automotive industry is mentioned in particular, as it has seen plants close for lack of chips and even models ditch digital dashboards for their analogue predecessors.

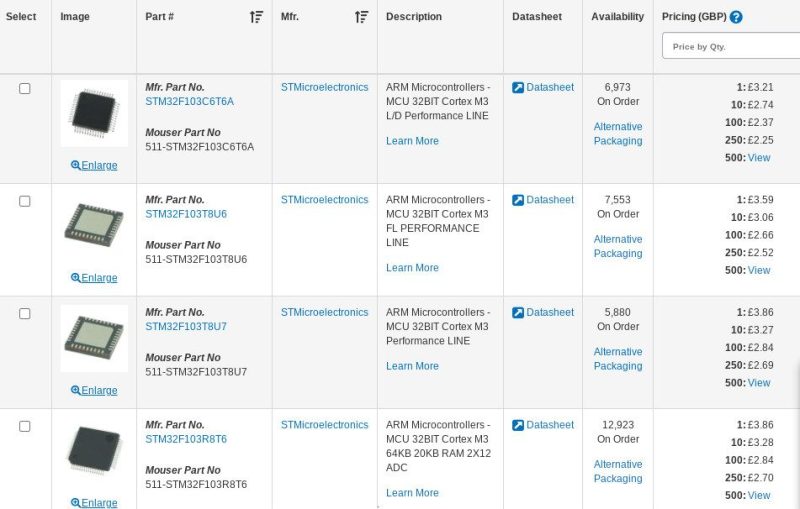

The fall-out from all this drama in the world’s car factories has filtered down through all levels that depend upon semiconductors; as the carmakers bag every scrap of chip fab capacity that they can, so in turn have other chip customers scrambled to keep their own supply lines in place. A quick scan for microcontrollers through distributors like Mouser or Digi-Key finds pages and pages of lines on back-order or out of stock, with those still available being largely for niche applications, in unusual package options, or from extremely outdated product lines. The chances of scoring your chosen chip seem remote, and most designers would probably baulk at trying to redesign around an ancient 8-bit part from the 1990s, so what’s to be done?

Such things typically involve commercially sensitive information so we understand not all readers will be able to respond, but we’d like to ask the question: how has the semiconductor shortage affected you? We’ve heard tales of unusual choices being made to ship a product with any microcontroller that works, of hugely overpowered chips replacing commodity devices, and even of specialist systems-on-chip being drafted in to fill the gap. In a few years maybe we’ll feature a teardown whose author wonders why a Bluetooth SoC is present without using the radio functions and with a 50R resistor replacing the antenna, and we’ll recognise it as a desperate measure from an engineer caught up in 2021’s chip shortage.

So tell us your tales from the coalface in the comments below. Are you that desperate engineer scouring the distributors’ stock lists for any microcontroller you can find, or has your chosen device remained in production? Whatever your experience we’d like to know what the real state of the semiconductor market is, so over to you!