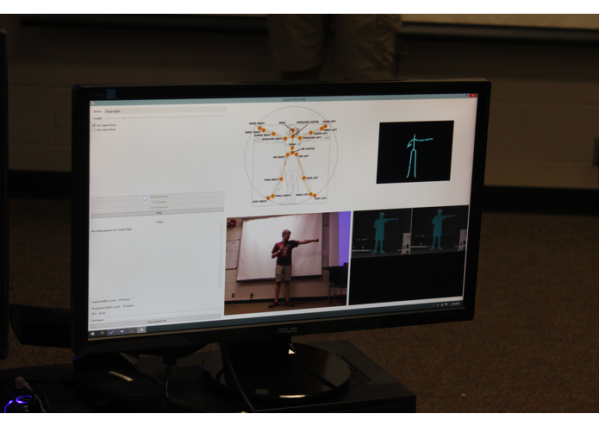

[Matt], [Andrew], [Noah], and [Tim] have a pretty interesting build for their capstone project at Ohio Northern University. They're using a Microsoft Kinect and a Leap Motion to create a natural user interface for controlling humanoid robots.

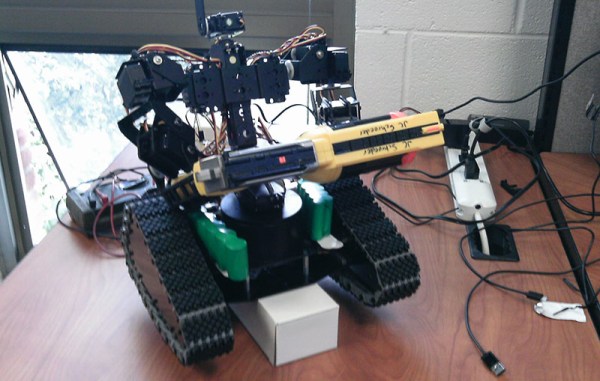

The robot the team is using for this project is a tracked humanoid they've affectionately come to call Johnny Five. Johnny takes commands from a computer, the Kinect, and the Leap Motion to move its chassis, arm, and gripper in a way that's somewhat natural, and surely a lot easier than controlling a humanoid robot with a keyboard.

The team has also released all their software on GitHub under an open source license. You can grab that over on the Gits, or take a look at some of the pics and videos from the Columbus Mini Maker Faire.