Sometimes when you walk into a hackerspace you will see somebody’s project on the table that stands so far above the norm of a run-of-the-mill open night on a damp winter’s evening that you have to know more. If you are a Hackaday scribe, you ask the person behind it whether they have something online to share with the readership.

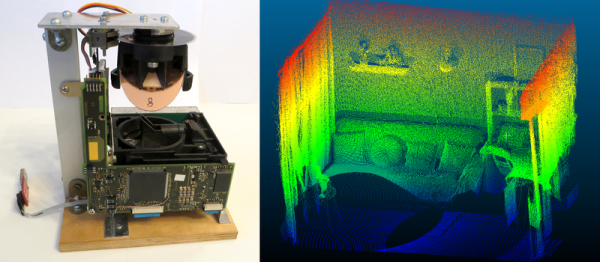

[Jolar] was working on his 3D scanner project on just such an evening in Oxford Hackspace. It’s a neatly self-contained unit in the form of a triangular frame made of aluminium extrusions, into which are placed a stack of Raspberry Pi Zeros with attached cameras, and a very small projector which needed an extra lens from a pair of reading glasses to help it focus at such close range.

The cameras are arranged to have differing views of the object to be scanned, and the projector casts an array of randomly created dots onto it to aid triangulation from the images. A press of a button, and the four images are taken, uploaded to a cloud drive in this case, and then picked up by his laptop for processing.
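The write-up does not say how the dot pattern was generated, so the following is only a minimal sketch of one way to produce such an image for a projector. The function names, image size, dot count, and the PGM output format are all assumptions made for illustration, not details of [Jolar]'s build.

```python
import random


def make_dot_pattern(width, height, dot_count, radius=1, seed=0):
    """Return a height x width grid (list of rows) of 0/255 pixel values.

    All parameters are illustrative; the article gives no actual values.
    """
    rng = random.Random(seed)  # fixed seed so the same pattern can be regenerated
    image = [[0] * width for _ in range(height)]
    for _ in range(dot_count):
        cx = rng.randrange(width)
        cy = rng.randrange(height)
        # Paint a small filled disc, clipped at the image edges.
        for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
                if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                    image[y][x] = 255
    return image


def write_pgm(path, image):
    """Save the grid as a binary PGM (P5) greyscale image."""
    height, width = len(image), len(image[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        for row in image:
            f.write(bytes(row))


# Hypothetical projector resolution and dot density.
pattern = make_dot_pattern(width=640, height=480, dot_count=2000)
```

Calling `write_pgm("dots.pgm", pattern)` would save the result as a file that can be shown full-screen on the projector. The point of the randomness is that every small patch of the surface gets a distinctive texture, which gives the matching step something to lock onto even on plain, featureless objects.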

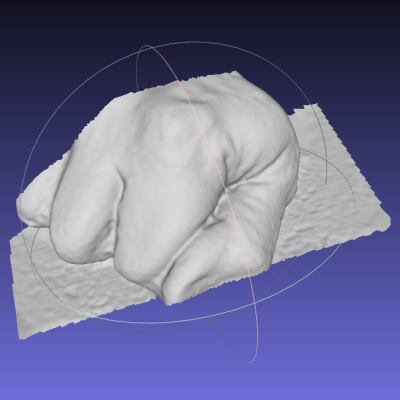

A multi-view stereo (MVS) algorithm does the processing work and creates a 3D model. The software doing the processing is VisualSFM, and the resulting files can then be viewed in MeshLab or imported into a CAD package. Seen in action, the whole process is quick and seamless, and could easily be something you’d see in a commercial product. There is more to come from this project, so it is definitely one to watch.
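For a feel of the geometry behind triangulation, here is a deliberately simplified sketch. It is not what VisualSFM does internally: it assumes just two identical, parallel (rectified) cameras, where the depth of a matched point follows from the standard relation depth = focal length × baseline / disparity. The function name and the numbers are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point matched in two parallel, rectified cameras.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two camera centres, in metres
    disparity_px -- horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers: 1000 px focal length, cameras 0.10 m apart.
for disparity in (50, 100, 200):
    depth = depth_from_disparity(1000, 0.10, disparity)
    print(f"{disparity} px disparity -> {depth:.2f} m")
```

Nearby points shift more between views than distant ones, so a larger disparity means a smaller depth. A full multi-view pipeline also has to estimate the camera positions and combine more than two views, which is the part the software handles.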

Four Pi boards may seem like a lot, but that is nothing compared to this scanner with 39 of them.

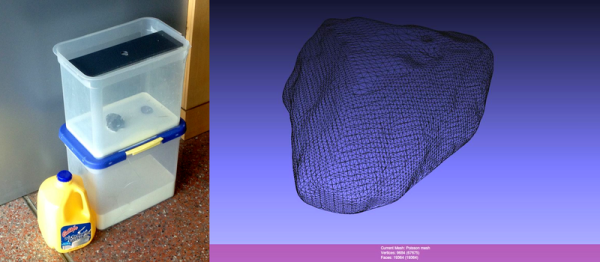

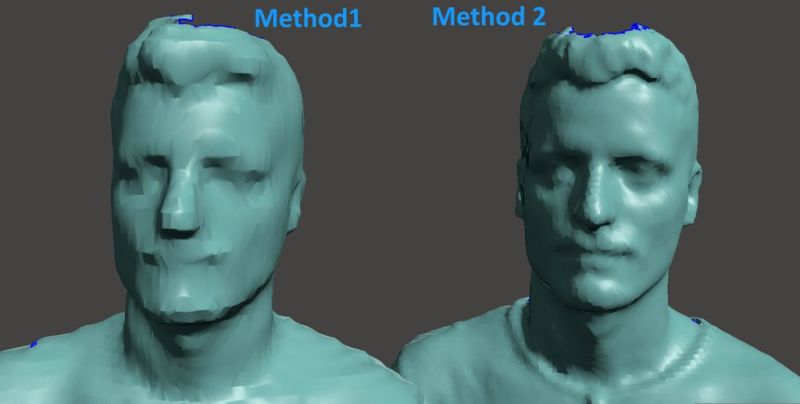

scans of large objects (including people) come out looking noticeably faceted. [Luis] came up with a work-around that results in a much more finely detailed scan. Instead of scanning an entire person in one pass, he does four separate scans. Since each individual scan can support 5,000 faces, the resulting merged model can have up to 20,000 faces. Check out the comparison: the difference between the two scanning methods is quite noticeable.
