The DARPA Subterranean Challenge (SubT) systems competition for the Urban Circuit is currently underway, streaming live on YouTube through Wednesday, February 26th.

The DARPA Grand Challenge of 2004 kicked research and development of autonomous vehicles into high gear. Many components on today’s self-driving vehicles can be traced back to systems developed for that competition. Hoping to spur further development, DARPA has since held several more challenges focused on moving the state of the art in autonomous robotics ahead.

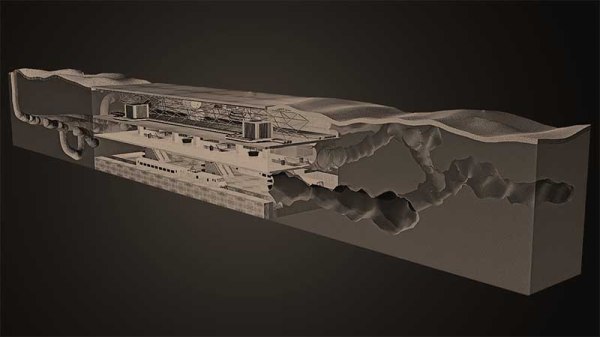

To succeed in this challenge, robots must handle terrain that would confuse today’s self-driving cars. Cluttered environments, uneven surfaces of different materials, and even the occasional flooded section are fair game. These robots also lose access to some previously available tools, such as GPS. The “systems track” denotes teams building physical robots, as opposed to the separate “virtual track” for simulated robots. The “urban circuit” is the second of four phases in this competition; its environments focus on man-made underground structures. (Think subway station.) For more details on this competition, as well as descriptions of the various phases, see our introductory post or the competition site.

Those who would rather not watch robots tentatively exploring unknown territory (and occasionally failing) may prefer to wait for the summaries published after each competition round is complete. The first phase (tunnel circuit), held from August to October 2019, was summarized by IEEE Spectrum here. Alternatively, you can go straight to DARPA for details on the systems track and the virtual track, with overall results posted on the competition site.

Continue reading “DARPA Subterranean Challenge Urban Circuit Now Livestreaming”