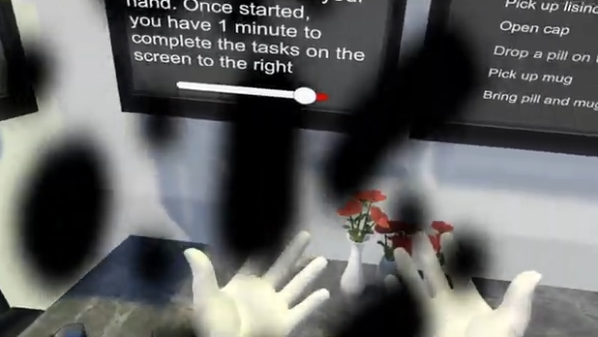

Researchers presented an interesting project at the 2024 IEEE Conference on Virtual Reality and 3D User Interfaces: it uses VR and eye tracking to simulate visual deficits such as macular degeneration, diabetic retinopathy, and other visual diseases and impairments.

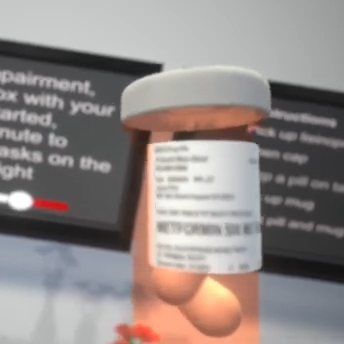

VR offers a unique method of allowing people to experience the impact of living with such conditions, a point driven home particularly well by having the user see for themselves the effect on simple real-world tasks such as choosing a pill bottle, or picking up a mug. Conditions like macular degeneration (which causes loss of central vision) are more accurately simulated by using eye tracking, a technology much more mature nowadays than it was even just a few years ago.

The abstract for the presentation is available here, and if you have some time be sure to check out the main index for all of the VR research demos because there are some neat ones there, including a method of manipulating a user’s perception of the shape of the ground under their feet by electrically-stimulating the tendons of the ankle.

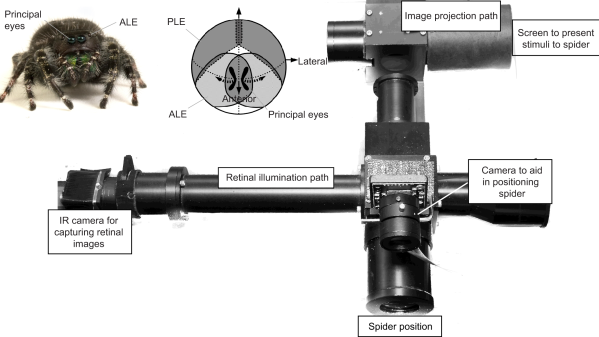

Eye tracking is in a few consumer VR products nowadays, but it’s also perfectly feasible to roll your own in a surprisingly slick way. It’s even been used on jumping spiders to gain insights into the fascinating and surprisingly deep perceptual reality these creatures inhabit.