[Myrijam Stoetzer] and her friend [Paul Foltin], 14 and 15 years old kids from Duisburg, Germany are working on a eye movement controller wheel chair. They were inspired by the Eyewriter Project which we’ve been following for a long time. Eyewriter was built for Tony Quan a.k.a Tempt1 by his friends. In 2003, Tempt1 was diagnosed with the degenerative nerve disorder ALS and is now fully paralyzed except for his eyes, but has been able to use the EyeWriter to continue his art.

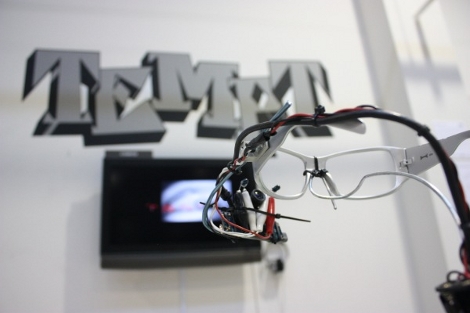

This is their first big leap moving up from Lego Mindstorms. The eye tracker part consists of a safety glass frame, a regular webcam, and IR SMD LEDs. They removed the IR blocking filter from the webcam to make it work in all lighting conditions. The image processing is handled by an Odroid U3 – a compact, low cost ARM Quad Core SBC capable of running Ubuntu, Android, and other Linux OS systems. They initially tried the Raspberry Pi which managed to do just about 3fps, compared to 13~15fps from the Odroid. The code is written in Python and uses OpenCV libraries. They are learning Python on the go. An Arduino is used to control the motor via an H-bridge controller, and also to calibrate the eye tracker. Potentiometers connected to the Arduino’s analog ports allow adjusting the tracker to individual requirements.

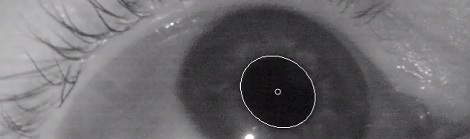

The web cam video stream is filtered to obtain the pupil position, and this is compared to four presets for forward, reverse, left and right. The presets can be adjusted using the potentiometers. An enable switch, manually activated at present is used to ensure the wheel chair moves only when commanded. Their plan is to later replace this switch with tongue activation or maybe cheek muscle twitch detection.

First tests were on a small mockup robotic platform. After winning a local competition, they bought a second-hand wheel chair and started all over again. This time, they tried the Raspberry Pi 2 model B, and it was able to work at about 8~9fps. Not as well as the Odroid, but at half the cost, it seemed like a workable solution since their aim is to make it as cheap as possible. They would appreciate receiving any help to improve the performance – maybe improving their code or utilising all the four cores more efficiently. For the bigger wheelchair, they used recycled car windshield wiper motors and some relays to switch them. They also used a 3D printer to print an enclosure for the camera and wheels to help turn the wheelchair. Further details are also available on [Myrijam]’s blog. They documented their build (German, pdf) and have their sights set on the German National Science Fair. The team is working on English translation of the documentation and will release all design files and source code under a CC by NC license soon.