One of the more interesting cultural phenomena is the ‘bleep’ that replaces certain words in broadcasts, something observed primarily in the US. Although it is ostensibly there to keep susceptible minds from being exposed to the unspeakable horrors of naughty words, the 1 kHz censoring tone is loud and obnoxious enough that its entertainment value falls somewhere between ‘truck backing up’ and ‘loud claxon in busy traffic’. There is thus a good argument for censoring the censoring beep to preserve one’s sanity, which is the goal of [Oona Räisänen]’s Bleep-be-gone project on GitHub.

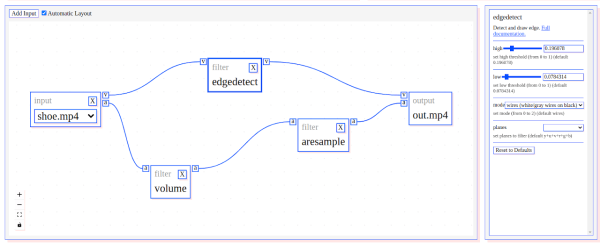

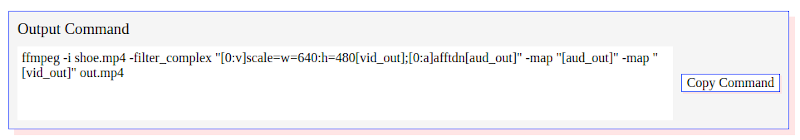

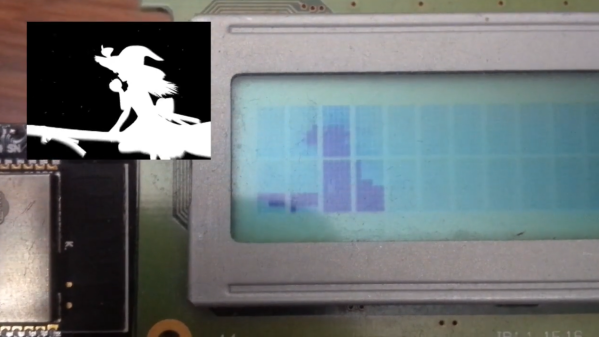

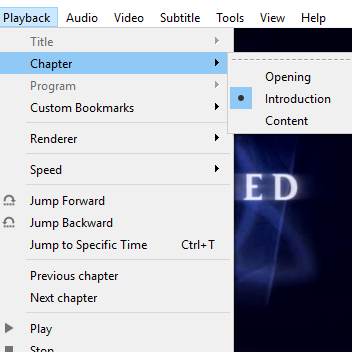

Bleep-be-gone uses a Perl-based wrapper around the versatile ffmpeg framework to filter a video afflicted with bleepitus, outputting a pristine version in which the infernal noise is replaced with blissful silence. Incidentally, silence is becoming a more common way than an ear-piercing beep to censor naughty words, and a tool like Bleep-be-gone can hasten the beep’s demise. Considering that the point of a back-up alarm’s beep is to draw a person’s attention to a piece of heavy equipment moving about, there is clearly no good reason why censoring a naughty word should draw attention in the same way.
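Bleep-be-gone does its filtering through ffmpeg, and the sketch below is not its code. It is a minimal illustration, assuming mono floating-point samples at a known sample rate, of one common way to spot a steady 1 kHz tone: the Goertzel algorithm, which measures the power at a single frequency per block of audio. Blocks where most of the energy sits at that frequency are replaced with silence. The names `goertzel_power`, `mute_bleeps`, `block_size` and `threshold` are hypothetical.

```python
import math


def goertzel_power(block, sample_rate, freq):
    """Squared magnitude of the DTFT of `block` at `freq` (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in block:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def mute_bleeps(samples, sample_rate, freq=1000.0, block_size=400, threshold=0.5):
    """Return a copy of `samples` with tone-dominated blocks set to silence.

    Hypothetical helper: a block is muted when the fraction of its energy
    at `freq` is at least `threshold`. Samples after the last full block
    are left untouched.
    """
    out = list(samples)
    for start in range(0, len(out) - block_size + 1, block_size):
        block = out[start:start + block_size]
        energy = sum(x * x for x in block)
        if energy == 0.0:
            continue  # block is already silent
        # For a real sinusoid at `freq`, the Goertzel power is about
        # (N / 2) * energy, so this maps a pure tone to roughly 1.0
        # and audio with no energy at `freq` to roughly 0.0.
        fraction = goertzel_power(block, sample_rate, freq) / (block_size / 2) / energy
        if fraction >= threshold:
            out[start:start + block_size] = [0.0] * block_size
    return out
```

For example, with `rate = 8000`, feeding `mute_bleeps` half a second of a 300 Hz tone, a quarter second of 1 kHz and another quarter second of 300 Hz zeroes only the 1 kHz stretch. The default block of 400 samples (50 ms at 8 kHz) holds a whole number of 1 kHz cycles; larger blocks separate frequencies more sharply but blur where a bleep starts and stops, so a bleep that only partly fills a block may slip through.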