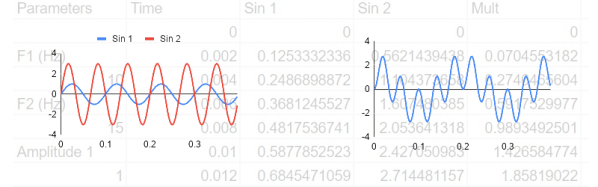

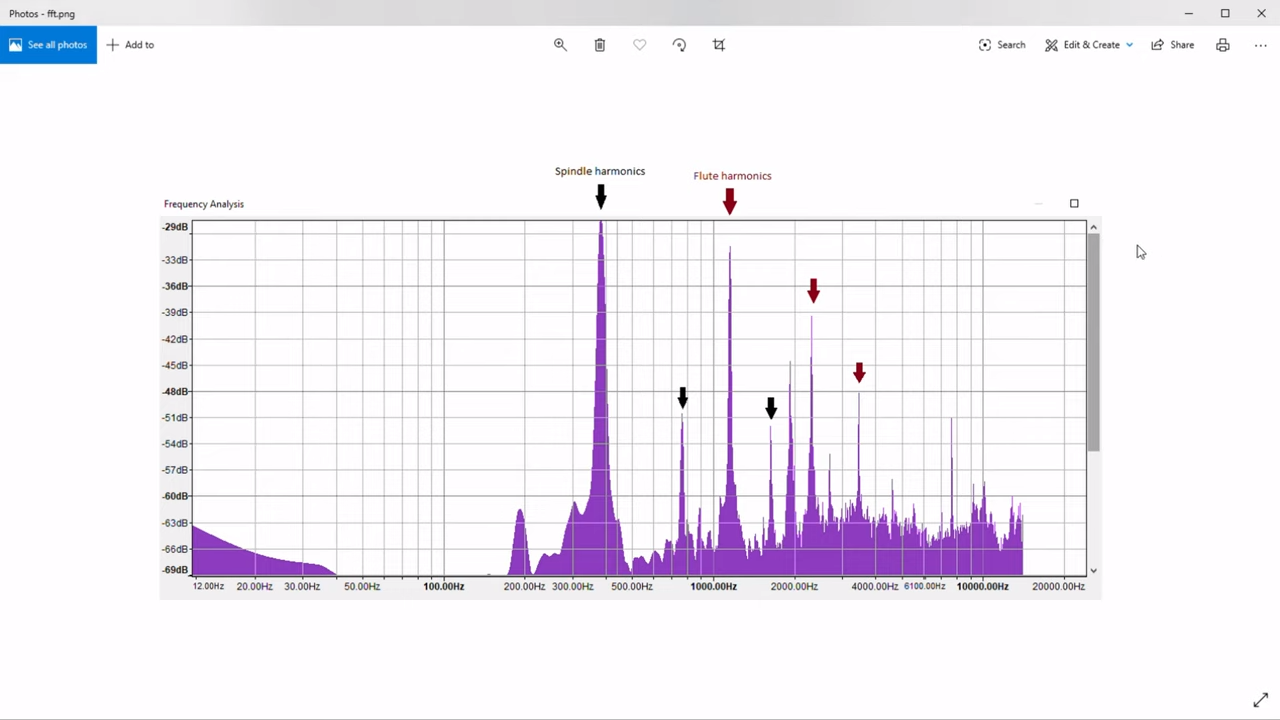

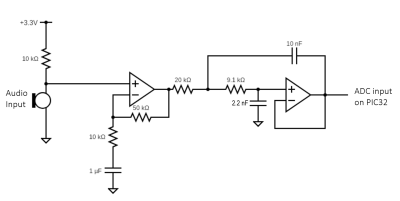

You probably have at least a nodding familiarity with the Fourier transform, a mathematical process for transforming a time-domain signal into a frequency domain signal. In particular, for computers, we don’t really have a nice equation so we use the discrete version of the transform which takes a series of measurements at regular intervals. If you need to understand the entire frequency spectrum of a signal or you want to filter portions of the signal, this is definitely the tool for the job. However, sometimes it is more than you need.

For example, consider tuning a guitar string. You only need to know if one frequency is present or if it isn’t. If you are decoding TouchTones, you only need to know if two of eight frequencies are present. You don’t care about anything else.

A Fourier transform can do either of those jobs. But if you go that route you are going to do a lot of math to compute things you don’t care about just so you can pick out the one or two pieces you do care about. That’s the idea behind the Goertzel. It is essentially a fast Fourier transform algorithm stripped down to compute just one frequency band of interest. The math is much easier and you can usually implement it faster and smaller than a full transform, even on small CPUs.

Continue reading “DSP Spreadsheet: The Goertzel Algorithm Is Fourier’s Simpler Cousin”