It is with great sadness that Hackaday learns of the passing of Steve Evans. He was one of the creators of Eyedrivomatic, the eye-controlled wheelchair project which was awarded the Grand Prize during the 2015 Hackaday Prize.

News of Steve’s passing was shared by his teammate Cody Barnes in a project update on Monday. For more than a decade Steve had been living with Motor Neurone Disease (MND). He slowly lost the function of his body, but his mind remained intact throughout. We are inspired that despite his struggles he chose to spend his time creating a better world. He is pictured here test-driving an Eyedrivomatic prototype, the blue 3D-printed attachment on the arm of his chair.

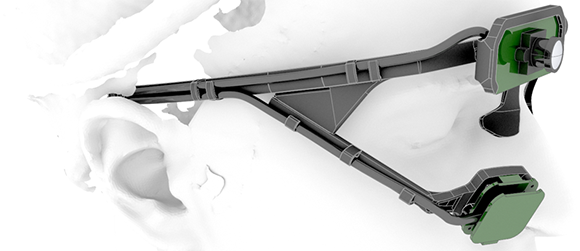

The Eyedrivomatic is a hardware adapter for electric wheelchairs which bridges the physical controls of the chair with the eye-controlled computer used by people living with ALS/MND and in many other situations. The project is Open Hardware and Open Source Software. The team continues to refine the design for ease of fabrication so that Eyedrivomatic can be made more widely available, and has even begun to sell kits so those who cannot build it themselves still have access.

The team will continue with the Eyedrivomatic project. If you are inspired by Steve’s story, now is a great time to look into helping out. Contact Cody Barnes if you would like to contribute to the project. Love and appreciation for Steve and his family may be left as comments on the project log.