There’s a harsh truth underlying all robotics research: compared to evolution, we suck at making things move. Nature has had a couple billion years of practice building things that slide, hop, fly, swim, and run, so why not leverage those platforms? That’s the idea behind this turtle with a navigation robot strapped to its back.

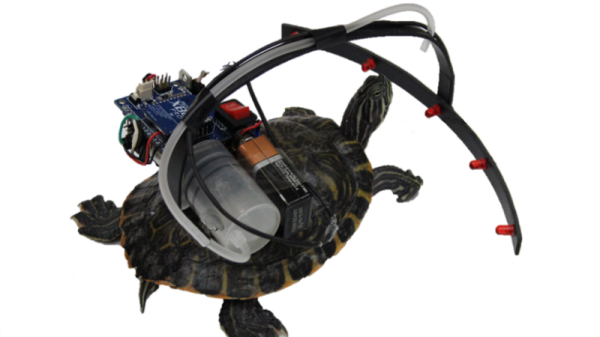

This reminds us somewhat of *The Sky People*, an alternate-universe sci-fi novel by S.M. Stirling. In the story, Venus is teeming with dinosaurs, and Terran colonists use them as beasts of burden, controlling them with brain implants that stimulate their pleasure centers. The team led by [Phill Seung-Lee] at the Korea Advanced Institute of Science and Technology (KAIST) isn’t likely to get as much work out of its red-eared slider turtle as the colonists got from their bionic dinosaurs, but there’s still plenty to learn from a setup like this.

The turtle gets what amounts to a head-up display in the form of a strip of LEDs, along with a food dispenser for positive reinforcement. The bionic terrapin is first trained to associate the flashing LEDs with food, and the LEDs are then used as cues to steer it between waypoints in a tank. Sadly, the full article is behind a paywall, but the video below gives you a taste of the gripping action.

Looking for something between a reptile and a fictional dinosaur to play mind games with? Why not make your best friend bionic? Continue reading “Head-Up Display Augments Bionic Turtle’s Reality”