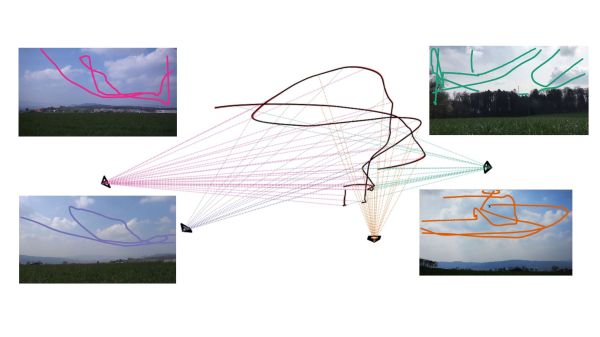

Calculating a three-dimensional position from two-dimensional projections is a literal textbook example in geometry, but those examples are “assume a spherical cow” simplifications, applicable only in an ideal world where the projections are made with mathematically perfect cameras at precisely known locations with infinite resolution. Making things work in the real world is a lot harder. But not only have [Jingtong Li, Jesse Murray et al.] worked through the math of tracking a drone’s 3D flight from 2D video, they’ve released their MultiViewUnsynch software on GitHub so we can all play with it.
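For a sense of what the idealized textbook version looks like, here is a minimal sketch in Python. It assumes two perfect cameras at exactly known positions, each reporting an exact direction toward the target, and takes the midpoint of the closest approach between the two viewing rays. The function name `triangulate_midpoint` and the sample coordinates are made up for illustration; this is not code from MultiViewUnsynch.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two viewing rays.

    Each ray starts at a camera center c and points along direction d.
    Returns the midpoint of the shortest segment joining the two rays.
    Idealized: assumes camera positions and ray directions are known exactly.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w = [p - q for p, q in zip(c1, c2)]  # vector from camera 2 to camera 1
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)

    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")

    # Ray parameters that minimize the distance between the two rays.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom

    p1 = [ci + s * di for ci, di in zip(c1, d1)]  # closest point on ray 1
    p2 = [ci + t * di for ci, di in zip(c2, d2)]  # closest point on ray 2
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two cameras 4 units apart, both looking at a target at (1, 2, 5).
point = triangulate_midpoint([0, 0, 0], [1, 2, 5], [4, 0, 0], [-3, 2, 5])
print(point)
```

With perfect inputs the two rays intersect and the midpoint is the target itself. Any error in camera position, lens model, or timing makes the rays miss each other, which is where the real work begins.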

Instead of laboratory-grade optical instruments, the cameras used in these experiments are available at our local consumer electronics store. A table in their paper Reconstruction of 3D Flight Trajectories from Ad-Hoc Camera Networks (arXiv:2003.04784) lists several Huawei cell phone cameras, a few Sony digital cameras, and a GoPro 3. The cameras don’t need to be placed in any particular arrangement, because their positions are calculated from the video footage itself. Correlating overlapping footage from dissimilar cameras is a challenge in itself, since these cameras record at framerates ranging from 25 to 59.94 frames per second. Furthermore, these cameras all have rolling shutters, which add an extra variable because the scanlines in a frame are captured at slightly different times. This is not an easy problem.
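To see why mixed framerates and rolling shutters complicate matters, consider a rough sketch of when a given pixel row was actually captured. This is a simplified model of our own for illustration, not the parameterization used in the paper: it assumes a constant framerate, a per-camera start offset (`offset_s`), and a sensor that reads rows top to bottom over some fraction of the frame period (`readout_fraction`). All of those names are hypothetical.

```python
def row_capture_time(frame_index, row, fps, image_height,
                     offset_s=0.0, readout_fraction=1.0):
    """Approximate capture time, in seconds, of one scanline.

    Assumptions (illustrative only): constant framerate, rows read out
    top to bottom, and readout spanning `readout_fraction` of the frame period.
    """
    frame_period = 1.0 / fps
    frame_start = offset_s + frame_index * frame_period
    # Rows lower in the image are read later within the same frame.
    row_delay = readout_fraction * frame_period * (row / image_height)
    return frame_start + row_delay


# The same drone seen mid-frame (row 540 of 1080) by two different cameras.
t_slow = row_capture_time(frame_index=25, row=540, fps=25.0, image_height=1080)
t_fast = row_capture_time(frame_index=60, row=540, fps=59.94, image_height=1080,
                          offset_s=0.3)
print(t_slow, t_fast)
```

Even in this toy model, frame numbers from two cameras cannot be compared directly: each detection has to be mapped onto a shared timeline, and the unknown offsets and readout speeds must be estimated along with the camera positions.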

There is a lot of interest in tracking drone flights, especially those flying where they are not welcome. And not everyone has the budget for high-end equipment or the permission to emit electromagnetic signals. MultiViewUnsynch is not quite there yet, as it tracks a single target and processes video files after they have been recorded. The eventual goal is to evolve this capability to track multiple targets on live video, and hopefully help reduce frustrating public embarrassments.

[IROS 2020 Presentation video (duration 14:45) requires free registration, available until at least Nov. 25th 2020.]