With the sweeping wave of complexity that comes with new connected appliances, it’s easy to start grumbling about having to pull out your phone every time you want to turn on the kitchen lights. [Valentin] realized that our new interfaces aren’t making our lives much simpler, and he and the folks at the MIT Media Lab have developed a solution.

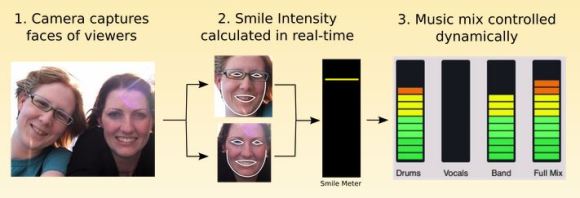

Open Hybrid takes the interface out of the phone app and superimposes it directly onto the items we want to operate in real life. The Open Hybrid interface is viewed through the lens of a tablet or other smart mobile device. On a real-time video stream, an interactive set of knobs and buttons is superimposed on the objects they control. In one example, holding a tablet up to a light brings up a palette for choosing its color. In another, sliders superimposed on a Mindstorms tank-drive toy become the control panel for driving the vehicle around the floor. Object behaviors can even be tied together so that an action applied to one object carries over to others: turn off one light, for instance, and all the other lights go out too.
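To make the idea of tying object behaviors together a little more concrete, here is a minimal TypeScript sketch. It is not Open Hybrid's API: the `ControlPoint` class, its `set` and `linkTo` methods, and the light names are all hypothetical, invented only to illustrate how a change on one object could be forwarded to the objects linked to it.

```typescript
// Hypothetical model of linked objects. This is NOT the Open Hybrid API;
// every name here is made up for illustration.
type Listener = (value: number) => void;

class ControlPoint {
  readonly name: string;
  private listeners: Listener[] = [];
  private current = 0; // 0 = off, 1 = on

  constructor(name: string) {
    this.name = name;
  }

  get value(): number {
    return this.current;
  }

  // Store the new value and forward it to every linked control point.
  set(value: number): void {
    if (value === this.current) return; // no change; also keeps link cycles from looping forever
    this.current = value;
    for (const listener of this.listeners) listener(value);
  }

  // One-way link: changes on this control point propagate to the target.
  linkTo(target: ControlPoint): void {
    this.listeners.push((v) => target.set(v));
  }
}

const kitchen = new ControlPoint("kitchen light");
const hallway = new ControlPoint("hallway light");
const porch = new ControlPoint("porch light");

// Start with every light switched on.
for (const light of [kitchen, hallway, porch]) light.set(1);

// Tie the other lights to the kitchen light.
kitchen.linkTo(hallway);
kitchen.linkTo(porch);

// Switching off the kitchen light now puts the linked lights out as well.
kitchen.set(0);
console.log(hallway.value, porch.value); // prints "0 0"
```

The links in this sketch are one-way, so switching the hallway light back on would leave the kitchen light untouched. Whether Open Hybrid's own links behave that way is not something the sketch claims.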

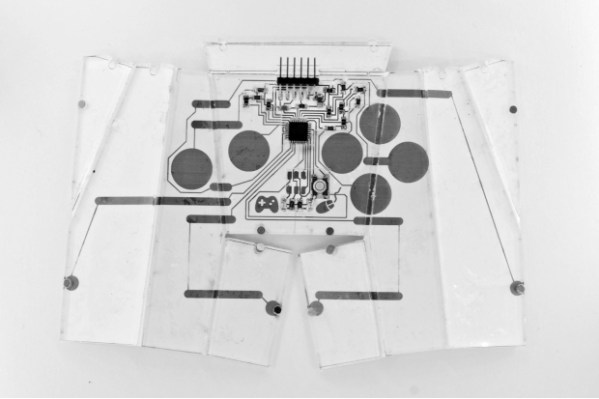

Beneath the surface, Open Hybrid is built on OpenFrameworks, with the hardware interface handled by an Arduino Yún running custom firmware. Creating a new application, though, has been simplified to the point that it can be done with web-friendly languages (HTML, JavaScript, and CSS). The net result is a toolchain that removes the need for extensive graphics knowledge when developing a new control panel.

If you can spare a few minutes, check out [Valentin’s] SolidCon talk on the drive to design new digital interfaces that echo those we’ve already been using for hundreds of years.

Last but not least, Open Hybrid may have been born in the Media Lab, but its evolution is up to the community, as the entire project is both platform-independent and open source.

Sure, it’s not mustaches, but it’s definitely more user-friendly.
