Join us on Wednesday, October 21st at noon Pacific for the Exploring Animal Intelligence Hack Chat with Hans Forsberg!

From our lofty perch atop the food chain it’s easy to make the assumption that we humans are the last word in intelligence. A quick glance at social media or a chat with a random stranger at the store should be enough to convince you that human intelligence isn’t all it’s cracked up to be, or at least that it’s not evenly distributed. But regardless, we are pretty smart, thanks to those big, powerful brains stuffed into our skulls.

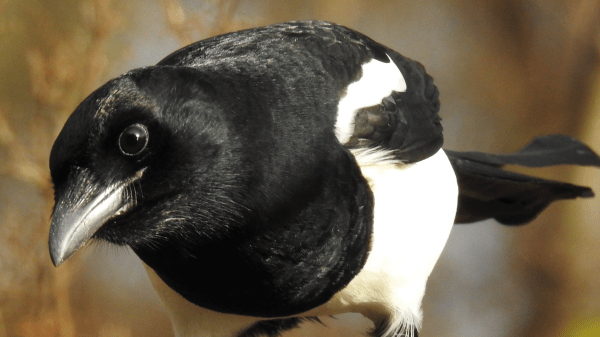

We’re far from the only smart species on the planet, though. Fellow primates and other mammals clearly have intelligence, and we’ve seen amazingly complex behaviors from animals in just about every taxonomic rank. But it’s the birds who probably stuff the most functionality into their limited neural hardware: tool use, including the ability to make new tools, is common, along with long-distance navigation, superb binocular vision, and of course the ability to rapidly maneuver in three dimensions while flying.

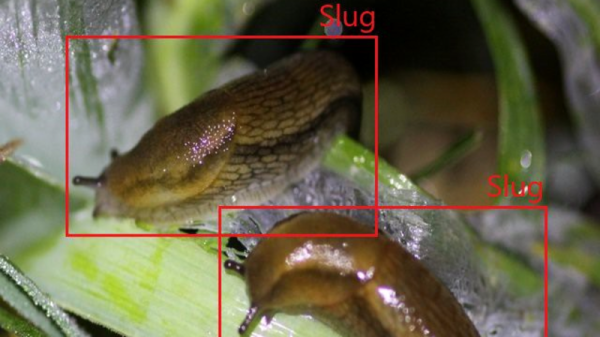

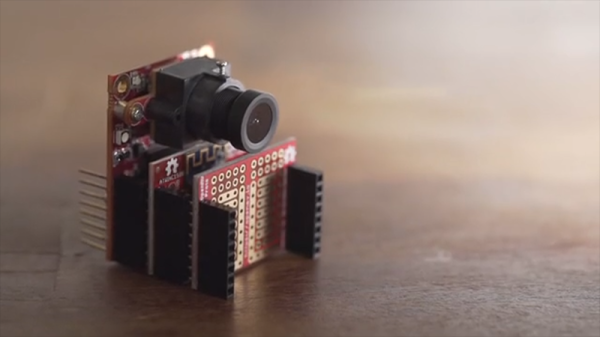

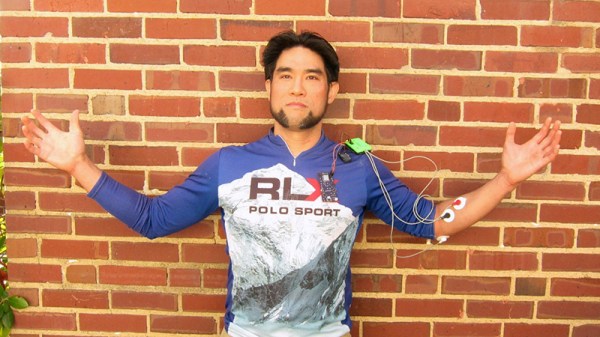

Hans Forsberg has taken an interest in avian intelligence lately, and to explore just what’s possible he devised a fiendishly clever system, which he calls “BirdBox”, to train his local magpie flock to clean up his yard. We recently wrote up his initial training attempts, which honestly bear a strong resemblance to training a machine learning algorithm. That’s probably no coincidence, since his professional background is in neural networks. He has several years of work invested in his birds, and he’ll stop by the Hack Chat to talk about what goes into leveraging animal intelligence, what we can learn about our systems from it, and where BirdBox goes next.

Our Hack Chats are live community events in the Hackaday.io Hack Chat group messaging. This week we’ll be sitting down on Wednesday, October 21 at 12:00 PM Pacific time. If time zones baffle you as much as they do us, we have a handy time zone converter.

Click that speech bubble to the right, and you’ll be taken directly to the Hack Chat group on Hackaday.io. You don’t have to wait until Wednesday; join whenever you want and you can see what the community is talking about.