Join us on Wednesday, January 19 at noon Pacific as we kick off the 2022 Hack Chat season with the Electromyography Hack Chat with hut!

It’s one of the simplest acts most people can perform, but just wiggling your finger is a vastly complex process under the hood. Once you consciously decide to move your digit, a cascade of electrochemical reactions courses from the brain down the spinal cord and along nerves to reach the muscles of the forearm, where still more reactions stimulate the muscle fibers and cause them to contract, setting that finger to wiggling.

The electrical activity going on inside you while you’re moving your muscles is actually strong enough to make it to the skin, and is detectable using electromyography, or EMG. But just because a signal exists doesn’t mean it’s trivial to make use of. Teasing a usable signal from one muscle group amidst the noise from everything else going on in a human body can be a chore, but not an insurmountable one, even for the home gamer.
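How PsyLink itself cleans up the signal isn't covered here. One common textbook approach is to high-pass filter the raw surface EMG, rectify it, and smooth it into an "envelope" that rises and falls with muscle effort. The minimal Python sketch below follows that approach under stated assumptions: the 20 Hz cutoff, the 50 ms window, and the `synthetic_emg` test signal are arbitrary illustrative choices, not values taken from PsyLink.

```python
import math


def emg_envelope(samples, fs, hp_cutoff=20.0, window_ms=50.0):
    """Turn a raw EMG trace into a smooth activation envelope.

    Generic sketch (assumed, not PsyLink's pipeline): first-order high-pass
    filter, full-wave rectification, then a moving-average smoother.
    """
    if not samples:
        return []

    # 1. First-order RC high-pass removes DC offset and slow drift/motion artifacts.
    rc = 1.0 / (2.0 * math.pi * hp_cutoff)
    dt = 1.0 / fs
    alpha = rc / (rc + dt)
    filtered = []
    prev_x, prev_y = samples[0], 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        filtered.append(y)
        prev_x, prev_y = x, y

    # 2. Full-wave rectification: muscle activity shows up as magnitude, not sign.
    rectified = [abs(v) for v in filtered]

    # 3. Moving average over window_ms gives a slowly varying envelope.
    n = max(1, int(fs * window_ms / 1000.0))
    envelope, running = [], 0.0
    for i, v in enumerate(rectified):
        running += v
        if i >= n:
            running -= rectified[i - n]
        envelope.append(running / min(i + 1, n))
    return envelope


def synthetic_emg(fs=1000, seconds=2.0):
    """Hypothetical test signal: DC offset plus a 100 Hz burst from 0.5 s to 1.0 s."""
    out = []
    for i in range(int(fs * seconds)):
        t = i / fs
        burst = 0.5 * math.sin(2 * math.pi * 100 * t) if 0.5 <= t < 1.0 else 0.0
        out.append(1.0 + burst)
    return out


if __name__ == "__main__":
    fs = 1000
    env = emg_envelope(synthetic_emg(fs), fs)
    rest = sum(env[200:400]) / 200
    active = sum(env[700:900]) / 200
    print(f"rest envelope ~{rest:.3f}, active envelope ~{active:.3f}")
```

Comparing the envelope against a calibrated threshold is one simple way to turn it into an on/off control. A real setup would likely also need a notch filter for 50/60 Hz mains hum, which this sketch leaves out.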

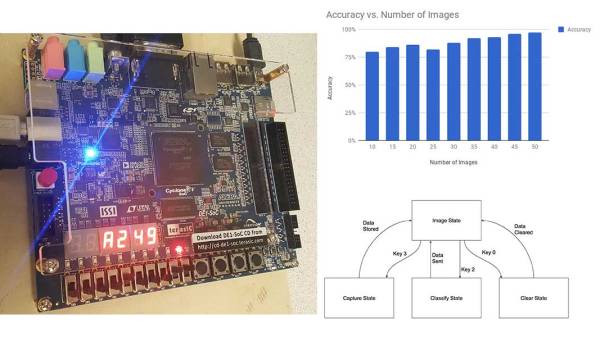

To make EMG a little easier, our host for this Hack Chat, hut, has been hard at work on PsyLink, a line of prototype EMG interfaces that can be used to detect muscle movements and use them to control whatever you want. In this Hack Chat, we’ll dive into EMG in general and PsyLink in particular, and find out how to put our muscles to work for something other than wiggling our fingers.

Our Hack Chats are live community events held in the Hack Chat group messaging on Hackaday.io. This week we’ll be sitting down on Wednesday, January 19 at 12:00 PM Pacific time. If time zones have you tied up, we have a handy time zone converter.