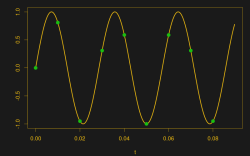

Suppose you take a few measurements of a time-varying signal. Let’s say for concreteness that you have a microcontroller that reads some voltage 100 times per second. Collecting a bunch of data points together, you plot them out — this must surely have come from a sine wave at 35 Hz, you say. Just connect up the dots with a sine wave! It’s as plain as the nose on your face.

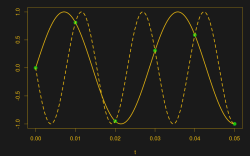

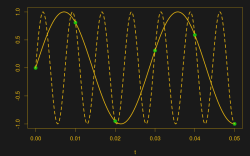

And then some spoil-sport comes along and draws in a version of your sine wave at -65 Hz, and then another at 135 Hz. And then more at -165 Hz and 235 Hz or -265 Hz and 335 Hz. And then an arbitrary number of potential sine waves that fit the very same data, all spaced apart at positive and negative integer multiples of your 100 Hz sampling frequency. Soon, your very pretty picture is looking a bit more complicated than you’d bargained for, and you have no idea which of these frequencies generated your data. It seems hopeless! You go home in tears.

But then you realize that this phenomenon gives you super powers — the power to resolve frequencies that are significantly higher than your sampling frequency. Just as the 235 Hz wave leaves an apparent 35 Hz waveform in the data when sampled at 100 Hz, a 237 Hz signal will look like 37 Hz. You can tell them apart even though they’re well beyond your ability to sample that fast. You’re pulling in information from beyond the Nyquist limit!

This essential ambiguity in sampling — that all frequencies offset by an integer multiple of the sampling frequency produce the same data — is called “aliasing”. And understanding aliasing is the first step toward really understanding sampling, and that’s the first step into the big wide world of digital signal processing.

Whether aliasing corrupts your pristine data or provides you with super powers hinges on your understanding of the effect, and maybe some judicious pre-sampling filtering, so let’s get some knowledge.