Clocks are a popular project around here, and with good reason. There are a ton of options, and there’s always a new take on ways to tell time. Clocks using lasers, words, or even ball bearings are all atypical ways of displaying time, but like a mathematician looking for a general proof of a long-understood idea, this clock from [Julldozer] shows us a way to turn any object into a clock.

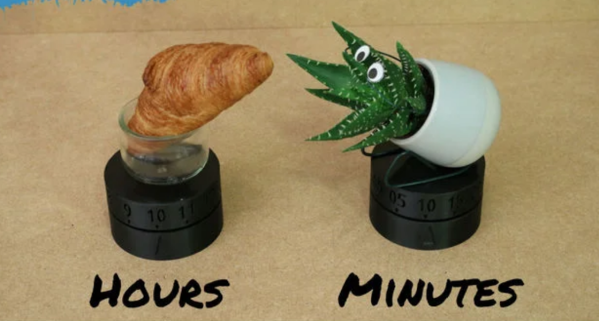

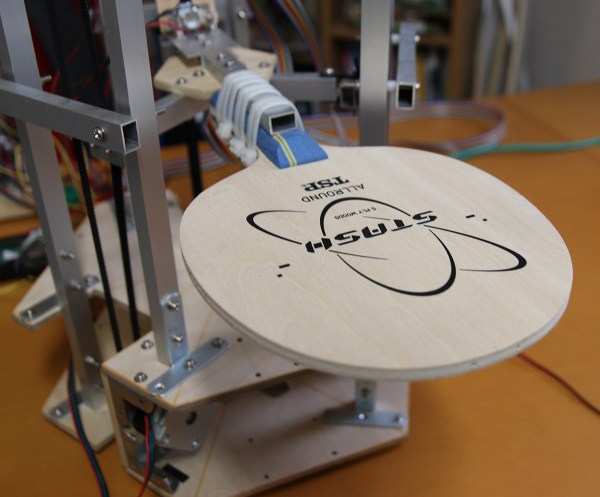

His build uses AA-powered clock movements that you would find in any typical wall clock, rather than reaching for his go-to solution of an Arduino and a stepper motor. The motors that drive the hands in these movements are extremely low-torque and low-power, which is what allows them to last for so long on such a small power source. He uses two of them, one for hours and one for minutes, to which he attaches a custom-built lazy Susan. The turntable needs to be extremely low-friction to avoid a situation where he has to change batteries every day, so after some 3D printing he has two rotating plates which can hold any object in order to tell him the current time.

While he didn’t design a clock from scratch or reinvent any other wheels, the part of this project that shines is the way he was able to use such a low-power motor to turn something so much heavier. This could have uses well outside the realm of timekeeping, and reminds us of this 3D-printed gear set from last year’s Hackaday Prize.