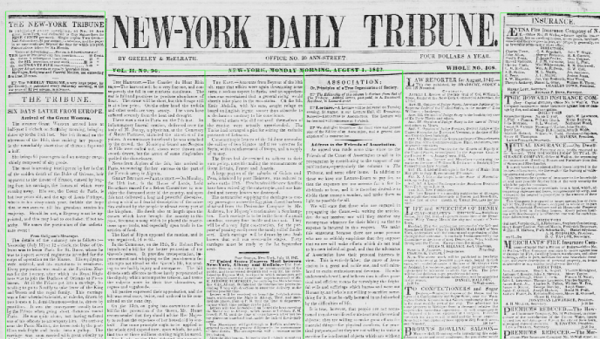

It’s a story that has caused consternation and mirth in equal measure amongst Brits: the owners of a car in Surrey received a fine for driving in a bus lane miles away in Bath, when in fact the camera had been confused by the text on a sweater worn by a pedestrian. It seems the word “knitter” had been interpreted by the reader as “KN19 TER”, which as Brits will tell you follows the standard format for a modern UK licence plate.

It gives us all a chance to have a good old laugh at the expense of the UK traffic authorities, but it raises some worthwhile points about the folly of relying on automatic cameras to dish out fines without human intervention. Except for the very oldest of cars, the British number plate follows an extremely distinctive high-contrast format of large black letters on a reflective white or yellow background, and since 2001 plates have all had to use the same slightly authoritarian-named MANDATORY typeface. They are hardly the most challenging prospect for a number plate recognition system, yet mistakes evidently still happen, and the fact that ambiguous results aren’t subjected to a human check before a fine is sent out seems rather chilling.
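To make the point about a human checking stage concrete, here is a minimal Python sketch of how such a gate might look. Everything in it is an assumption for illustration: the `PlateRead` type, the per-character confidence scores, the 0.9 threshold, and the made-up numbers for the sweater are hypothetical, and the pattern only captures the rough shape of the current format (two letters, two digits, three letters) rather than the full registration rules. It is not how any real enforcement system is known to work.

```python
import re
from dataclasses import dataclass
from typing import List

# Simplified shape of the current plate format: two letters, two digits,
# three letters. The real rules restrict some letters and numbers; ignored here.
PLATE_SHAPE = re.compile(r"^[A-Z]{2}[0-9]{2} ?[A-Z]{3}$")


@dataclass
class PlateRead:
    """Hypothetical output of a plate recogniser."""
    text: str                      # the string the recogniser produced
    char_confidences: List[float]  # assumed per-character scores in [0, 1]


def needs_human_review(read: PlateRead, threshold: float = 0.9) -> bool:
    """Return True unless the read is both well-formed and confidently recognised."""
    if not PLATE_SHAPE.match(read.text.upper()):
        return True   # not shaped like a plate at all
    if not read.char_confidences:
        return True   # no confidence data, so do not trust the read
    return min(read.char_confidences) < threshold


# Invented scores: the "19" that was really "it" is given low confidence.
sweater = PlateRead("KN19 TER", [0.97, 0.95, 0.55, 0.40, 0.93, 0.96, 0.94])
print(needs_human_review(sweater))
```

The sweater read passes the format check, which is exactly why a format check alone is not enough; in this sketch it is only the weakest character score that sends it to a person rather than straight to the printer.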

It also raises the prospect of yet more number-plate-related mischief. Aside from SQL injection jokes and adversarial fashion, we can only imagine the havoc that could be caused were a protest group to launch a denial of service attack with activists sporting fake MANDATORY licence plates.

Header image, based on the work of ZElsb, CC BY-SA 4.0.