We’ve all been there. Pigeons are generally pretty innocuous, but they do leave a mess. If you have a convertible, a bicycle, or even just a clean car, you probably don’t want them hanging around. [Max] was tired of a messy balcony, so, as you might approach any engineering problem, he worked his way through several possible solutions, starting with plastic crows and, naturally, ending with an automated water gun.

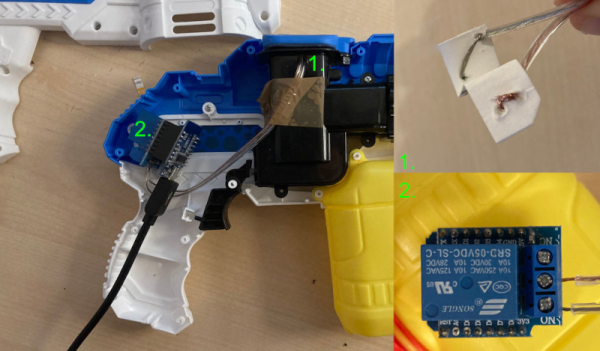

The resulting robotic water gun, which targets pigeons with OpenCV, is a dandy project. While we don’t usually advocate shooting at neighborhood animals, we don’t think a little water will be any worse for the pigeons than the rain. The build started with a cheap electric water pistol, and a Wemos D1 Mini ESP8266 development board provides the brainpower. The water pistol wouldn’t easily take rechargeable batteries, and it is a good idea to separate the logic supply from the pump motor supply anyway, so the D1 gets its power from a USB power bank rather than from the gun’s batteries.

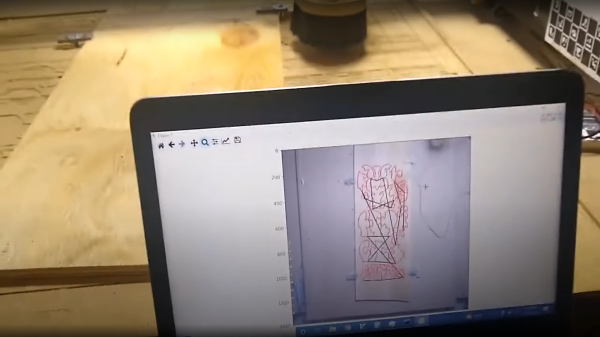

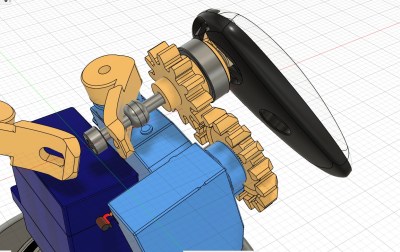

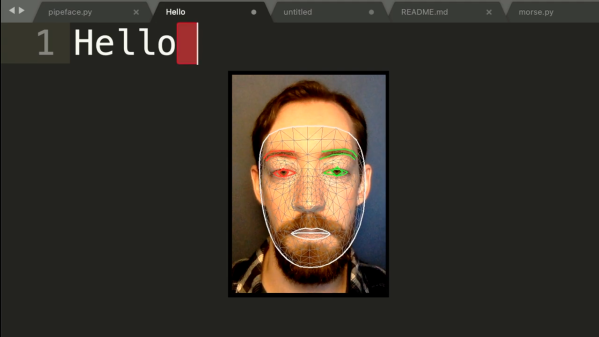

That leaves the camera. An old iPhone 6S in a 3D-printed bracket feeds video to a Python script that uses OpenCV. The script looks for changes in the image to detect that something is moving and then fires the gun. It doesn’t appear to actually track the pigeons, so maybe that’s a thought for version 2.
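The write-up doesn’t spell out the exact detection algorithm, so here is a minimal sketch of one common way to do “look for changes and fire”: frame differencing plus a cooldown. It assumes grayscale frames given as nested lists of 0 to 255 values so it runs without any libraries; in a real OpenCV script, `cv2.absdiff` and `cv2.threshold` would do the per-pixel work. The function names, the thresholds, and the five-second cooldown are all hypothetical, not taken from [Max]’s code.

```python
from typing import List, Optional

Frame = List[List[int]]  # grayscale pixels, 0-255 (assumed format)


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Fraction of pixels whose brightness changed by more than pixel_threshold."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def should_fire(prev: Frame, curr: Frame,
                pixel_threshold: int = 25, area_threshold: float = 0.02) -> bool:
    """Report motion when enough of the image changed between two frames."""
    return changed_fraction(prev, curr, pixel_threshold) >= area_threshold


class Trigger:
    """Hypothetical cooldown so one pigeon doesn't empty the tank in a second."""

    def __init__(self, cooldown_s: float = 5.0):
        self.cooldown_s = cooldown_s
        self.last_fired: Optional[float] = None

    def update(self, motion: bool, now: float) -> bool:
        """Return True if the gun should fire at time `now` (seconds)."""
        if not motion:
            return False
        if self.last_fired is not None and now - self.last_fired < self.cooldown_s:
            return False
        self.last_fired = now
        return True


if __name__ == "__main__":
    still = [[100] * 10 for _ in range(10)]
    moved = [row[:] for row in still]
    for r in range(3):          # a 3x3 "pigeon" appears in the corner
        for c in range(3):
            moved[r][c] = 200
    trigger = Trigger()
    print(trigger.update(should_fire(still, moved), now=0.0))
```

Comparing each frame only to the previous one keeps the logic tiny, but anything that moves (a swaying plant, a passing cloud) looks the same as a pigeon, which is one reason a version 2 might want real tracking.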

Was it successful? Maybe, but it does seem like the pigeons learned to avoid it. We still think adding azimuth and elevation control to the gun would help.
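As a rough idea of what aiming could look like, this sketch converts the pixel where motion was detected into azimuth and elevation angles using a simple pinhole-camera model, then maps them onto a 0 to 180 degree hobby-servo range. The pan/tilt servos, the camera’s field of view, and the function names are all hypothetical; none of this is part of [Max]’s build, and the real FOV would have to be measured for the camera in use.

```python
import math
from typing import Tuple


def pixel_to_angles(x: float, y: float, width: int, height: int,
                    hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Map an image pixel to (azimuth, elevation) in degrees.

    Pinhole model: focal length in pixels is (size / 2) / tan(fov / 2).
    Azimuth is positive to the right, elevation positive upward.
    """
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(vfov_deg) / 2)
    azimuth = math.degrees(math.atan((x - width / 2) / fx))
    elevation = math.degrees(math.atan((height / 2 - y) / fy))
    return azimuth, elevation


def to_servo(angle_deg: float, center: float = 90.0,
             lo: float = 0.0, hi: float = 180.0) -> float:
    """Offset from an assumed 90-degree servo center, clamped to the servo range."""
    return max(lo, min(hi, center + angle_deg))


if __name__ == "__main__":
    # Hypothetical 640x480 frame with an assumed 60 x 45 degree field of view.
    az, el = pixel_to_angles(480, 120, 640, 480, 60.0, 45.0)
    print(round(to_servo(az), 1), round(to_servo(el), 1))
```

Because the camera and the gun sit in different places, a real build would also need a calibration step, but pointing roughly at the motion centroid is a reasonable first pass.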

Most of the time when we see pigeon hacking, it’s to use them for nefarious purposes. [Max] should be glad he doesn’t have to deal with lions.
