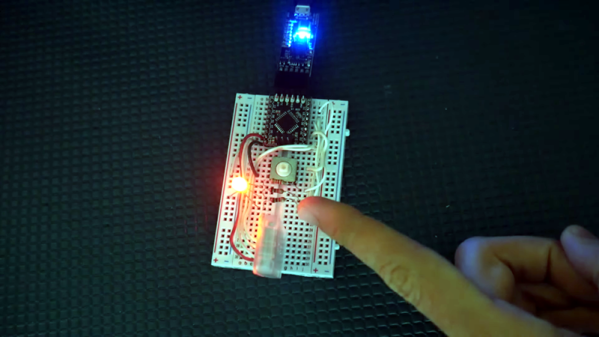

A lot of consumer gadgets use touch sensors now. Touch sensing is a cheap and reliable way to replace a variety of knobs and switches on everything from headphones to automobiles. However, creating a custom touch controller for a one-off project can be daunting. A recent ACM paper shows how just about any capacitive sensor can work as a multitouch sensor with nothing more than an Arduino, although a PC running Processing interprets the data for higher-level functions.

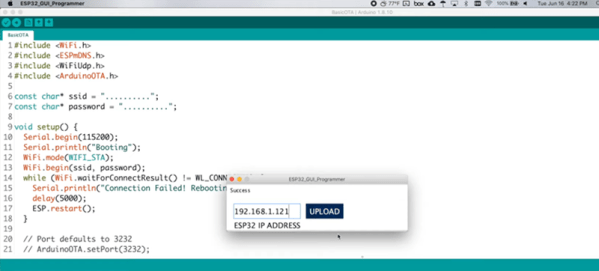

The key is that the Arduino excites the grid using PWM and then examines the signal coming out of the grid. A finger touch changes that response substantially, and the Arduino senses the change with its onboard analog-to-digital converter. You can find the actual software kit online. If you only want to use the kit, the tutorial document is probably more interesting than the ACM paper.
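To make the scanning idea concrete, here is a minimal Python sketch of how exciting one line at a time and sampling the others could turn the grid into a 2D array of readings, with an untouched baseline subtracted. The functions `read_node` and `fake_reader` and all the numbers are hypothetical; the real kit runs on the Arduino and its firmware may be organized quite differently. The sketch also assumes a touch lowers the received amplitude, as is typical for mutual-capacitance grids.

```python
from typing import Callable, List, Set, Tuple

Grid = List[List[float]]

def scan_grid(read_node: Callable[[int, int], float], n_tx: int, n_rx: int) -> Grid:
    """Excite each transmit line in turn and sample every receive line.

    read_node(tx, rx) is a hypothetical stand-in for "drive row tx with the
    PWM signal and return the amplitude the ADC measures on column rx".
    """
    return [[read_node(tx, rx) for rx in range(n_rx)] for tx in range(n_tx)]

def touch_delta(frame: Grid, baseline: Grid) -> Grid:
    """Drop in signal relative to an untouched baseline, clipped at zero.

    Assumes (hypothetically) that a touch lowers the received amplitude.
    """
    return [
        [max(0.0, b - f) for f, b in zip(frame_row, base_row)]
        for frame_row, base_row in zip(frame, baseline)
    ]

def fake_reader(touched: Set[Tuple[int, int]]) -> Callable[[int, int], float]:
    """Hypothetical simulated sensor: 500 counts normally, 120 fewer where touched."""
    def read(tx: int, rx: int) -> float:
        return 500.0 - (120.0 if (tx, rx) in touched else 0.0)
    return read

# Capture a baseline with nothing touching the grid, then a frame with one touch.
baseline = scan_grid(fake_reader(set()), n_tx=3, n_rx=4)
frame = scan_grid(fake_reader({(1, 2)}), n_tx=3, n_rx=4)
delta = touch_delta(frame, baseline)
```

Subtracting a baseline captured with no hands on the sensor is one common way to cancel out differences between individual crossings; the result is a small "image" where only touched nodes stand out.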

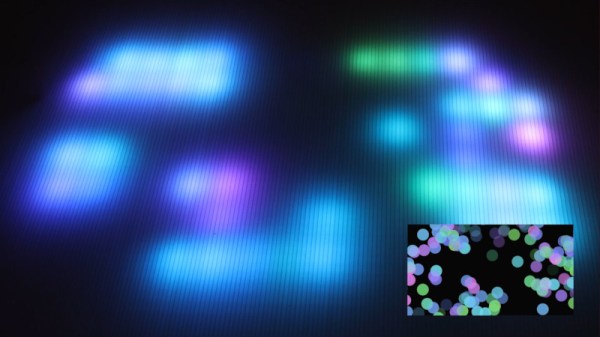

The optimum drive frequency is 10 MHz, and the examples reach it using harmonics of a lower-frequency PWM signal. The analog conversion, of course, isn't that fast, but since a finger touch changes the signal relatively slowly, they treat the signal as an amplitude-modulated input, which is very easy to decode.
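The amplitude-modulation idea can be illustrated with a toy simulation: a carrier whose amplitude drops while a finger is present, recovered by full-wave rectifying and then low-pass filtering with a moving average (classic envelope detection). This is a generic software sketch, not the kit's actual signal chain; the 8-sample carrier period, the sample count, and the 0.6 "touch" factor are arbitrary assumptions, and real hardware may do part of the demodulation in the analog domain.

```python
import math
from typing import List

def envelope(samples: List[float], window: int) -> List[float]:
    """Full-wave rectify, then smooth with a running moving average (crude low-pass)."""
    rectified = [abs(s) for s in samples]
    out = []
    acc = 0.0
    for i, r in enumerate(rectified):
        acc += r
        if i >= window:
            acc -= rectified[i - window]  # drop the sample leaving the window
        out.append(acc / min(i + 1, window))  # shorter window while warming up
    return out

# Toy carrier (hypothetical numbers): amplitude 1.0 untouched, 0.6 while touched.
PERIOD = 8
signal = [
    (1.0 if n < 400 else 0.6) * math.sin(2 * math.pi * n / PERIOD)
    for n in range(800)
]
env = envelope(signal, window=PERIOD)  # averaging over one full carrier period
untouched, touched = env[200], env[600]
print(f"untouched {untouched:.3f}, touched {touched:.3f}")
```

Averaging over exactly one carrier period makes the smoothed value independent of the carrier's phase, so the output tracks only the slowly changing amplitude, which is the part a touch affects.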

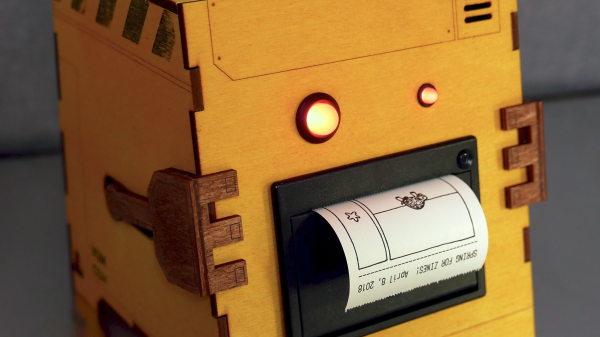

The sensors can be made from conductive ink, conductive thread, or copper strips. There are several example applications, including a 3D-printed bunny you can pet, a control panel on a sleeve, and an interactive greeting card.

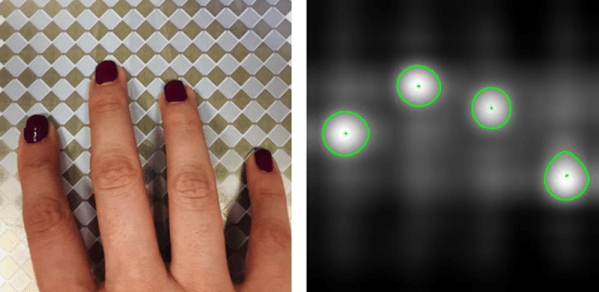

The readings from the grid form an image, and OpenCV detects the actual touch configuration. It appears you can also work with the raw data from the Arduino directly, though that might be a little harder.
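If you did want to work from the raw data yourself, one simple approach is to threshold the grid image and find connected blobs. The sketch below uses a breadth-first flood fill with weighted centroids, a swapped-in technique standing in for OpenCV's blob detection rather than the kit's actual pipeline. The `delta` grid (for instance, baseline-subtracted readings) and the threshold value are hypothetical inputs.

```python
from collections import deque
from typing import List, Tuple

def find_touches(delta: List[List[float]], threshold: float) -> List[Tuple[float, float]]:
    """Return the weighted centroid (row, col) of each 4-connected region >= threshold.

    threshold must be positive so every blob has a nonzero total weight.
    """
    rows, cols = len(delta), (len(delta[0]) if delta else 0)
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or delta[r][c] < threshold:
                continue
            # Flood-fill one blob breadth-first, accumulating weighted coordinates.
            queue = deque([(r, c)])
            seen[r][c] = True
            total = sum_r = sum_c = 0.0
            while queue:
                y, x = queue.popleft()
                w = delta[y][x]
                total += w
                sum_r += w * y
                sum_c += w * x
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and delta[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            touches.append((sum_r / total, sum_c / total))
    return touches

# Hypothetical grid with two touches: one spanning two nodes, one on a single node.
delta = [
    [0, 0, 0, 0, 0],
    [0, 10, 10, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 20],
]
touches = find_touches(delta, threshold=5.0)
```

Here `touches` comes out as `[(1.0, 1.5), (3.0, 4.0)]`: the weighted centroid lets a touch that straddles two crossings land between them, which is part of why treating the readings as an image is convenient.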

We imagine aluminum foil would work with this technique. If you get to the point of laying out a PCB, this might come in handy.