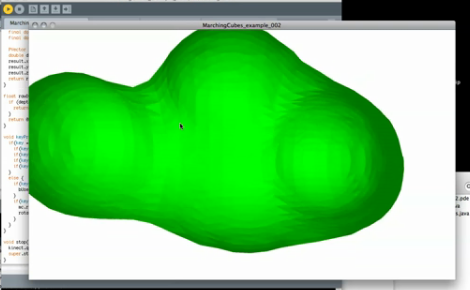

Known as “The Programmers Solid 3D CAD Modeller”, OpenSCAD is used by many people for whom writing code comes more naturally than learning a fiddly user interface. It’s a very capable piece of software, but regular users will tell you that it can be rather slow when it comes to rendering your work. We’re very pleased to see that a fix for this has been produced courtesy of [@ochafik], can now be found as an experimental feature in nightly builds, and will in due course no doubt find its way to official releases.

Despite a modern computer invariably having a multi-core architecture, it might surprise you to find that OpenSCAD wasn’t able to take advantage of this previously. The above-linked thread spans over a decade of experimenting and contains some fascinating discussions if you’re prepared to wade through it, and culminates a few weeks ago in the announcement of the new feature giving access to multiple CPUs. We don’t have it yet, but it’s great to know it’s in the works and we’re looking forward to render time involving considerably less of a wait.

So many OpenSCAD projects have passed through these pages over the years, it’s safe to say that it has a significant user base among Hackaday readers. It’s still something an AI hasn’t mastered yet though.

Thanks [pca006132] for the tip.