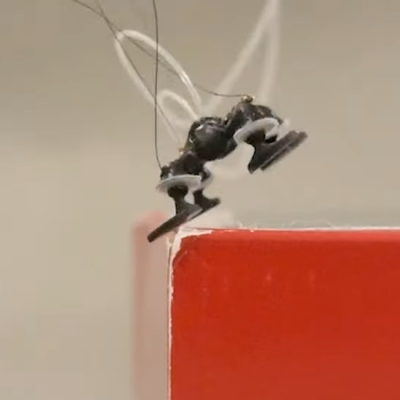

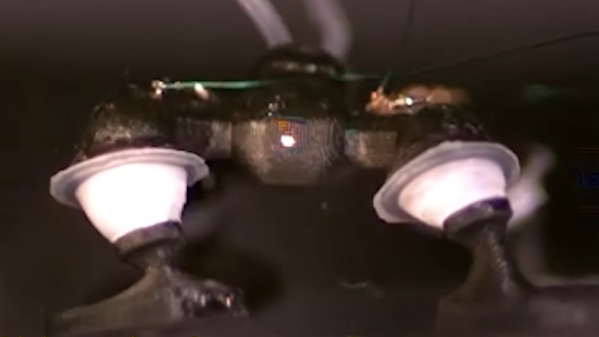

Researchers at Cornell University have developed a tiny, proof-of-concept robot that moves its four limbs by rapidly igniting a mixture of methane and oxygen inside flexible joints.

The device can’t do much more than blow each limb outward with a varying amount of force, but that’s enough to steer and move the little unit. It also has enough power to make some very impressive jumps. The ability to navigate even with such limited actuators is reminiscent of hopped-up bristlebots.

Electronic control of the combustion in the joints allows for up to 100 explosions per second, and each one delivers enough force to do useful work. The prototype is just 29 millimeters long and weighs 1.6 grams, but it can jump up to 56 centimeters and move at almost 17 centimeters per second.
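To put those figures in perspective, it helps to express them in body lengths. The snippet below is just back-of-the-envelope arithmetic on the numbers quoted above; the function name is our own, and since the speed is "almost" 17 centimeters per second, that result should be read as an upper bound.

```python
# Figures quoted in the write-up above.
BODY_LENGTH_MM = 29.0
JUMP_HEIGHT_CM = 56.0
TOP_SPEED_CM_S = 17.0  # quoted as "almost 17", so treat as an upper bound


def in_body_lengths(distance_cm, body_length_mm=BODY_LENGTH_MM):
    """Convert a distance in centimeters to multiples of the robot's length."""
    return distance_cm * 10.0 / body_length_mm


jump = in_body_lengths(JUMP_HEIGHT_CM)
speed = in_body_lengths(TOP_SPEED_CM_S)

print(f"Jump height: about {jump:.1f} body lengths")
print(f"Top speed: just under {speed:.1f} body lengths per second")
```

By that rough measure, the robot jumps about 19 times its own length and covers a little under six body lengths every second.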

The prototype is tethered, so those numbers don’t account for carrying its own power or fuel supply, but as a proof of concept it’s pretty interesting. One reported downside is that the process is rather noisy, which we suppose isn’t surprising.

Want to see it in action? Watch the video (embedded below) to get an idea of what it’s capable of. More details are available from the research paper, as well.

Continue reading “Micro Robot Disregards Gears, Embraces Explosions”

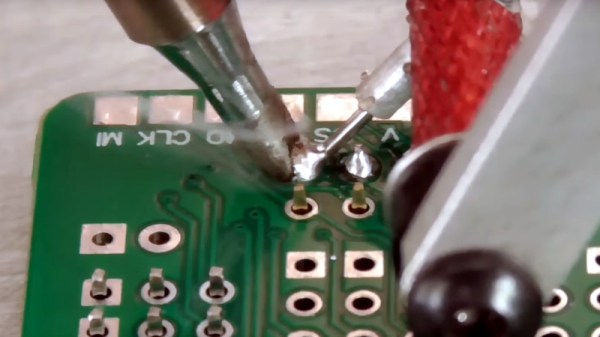

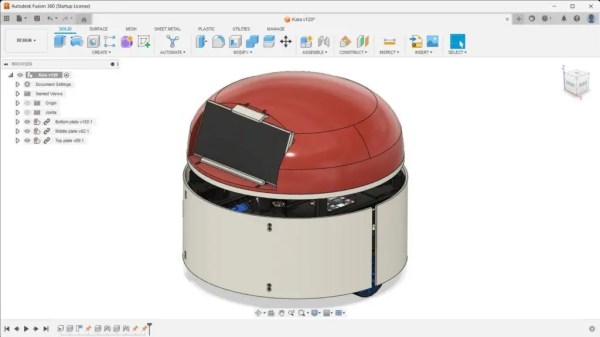

The robot face is introduced to us with a soundtrack befitting Stranger Things, or maybe Luke Million. The build was inspired by The Doorman, a creepy art piece with animatronic eyes. [Jens’] build started with a 3D model of a mask, with the eyes and mouth modified to have rectangular cutouts for LED displays. The displays are run by a Raspberry Pi Pico, which generates a variety of eye and mouth animations. The build uses a camera for face tracking, so the robot’s evil eyes seem to follow viewers as they move around. In good form, the face has a simple switch that flips it from good to evil, happy to angry. Or, as [Jens] designates the modes: “Fren” and “Not Fren.”
