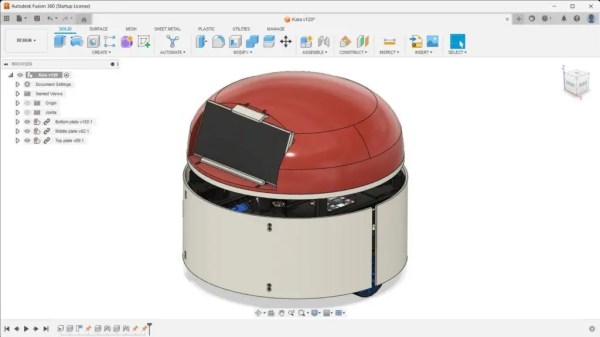

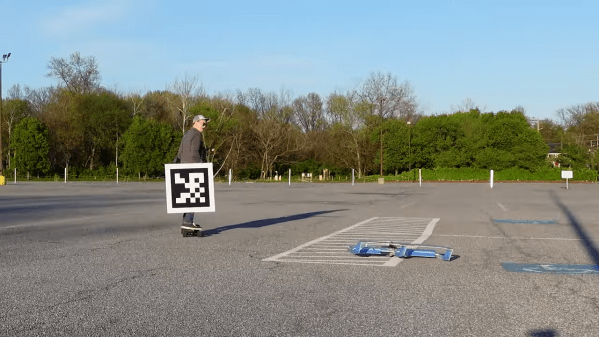

Robots are cool. Robots you build yourself are cooler, especially ones that use stuff you already have lying around. Snoopy is a new open-source robot that uses an Arduino as its brain, along with a 3D-printed body and a short list of parts that can probably be sourced from the junk drawer. It’s still being developed, but it looks like a cool project that is heading in the right direction.

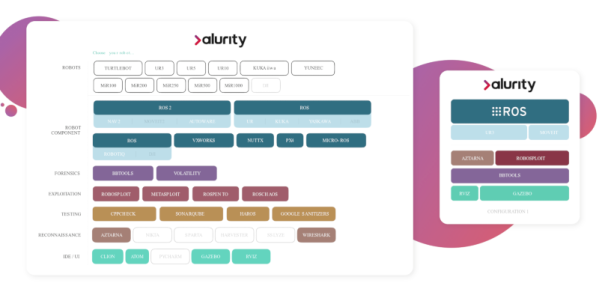

It’s based on a new robot software platform called Kaia.ai, which is built on top of the Robot Operating System 2 (ROS2) but offers a friendlier, more beginner-focused interface. Currently, the Snoopy project includes enough to get up and running: a printed frame and the electronics to install an Arduino running ROS2 to control it. That’s an excellent place to start if you want to get into robotics without diving straight into the technical challenges of working with real-time operating systems.

Interestingly, the creator’s previous project, Kiddo, fell into the complexity trap, where you keep adding features and end up with an overly complex design that is a pain to build. Hopefully the designers have learned from Kiddo and will keep Snoopy simple.

We’ve covered plenty of other robot projects here at Hackaday, from ones that venture into nuclear reactors to ones that write your thank-you notes for you or give you hugs. We’ve even looked at how to give your robots a personality. Combine all those together with Snoopy and you could build a hugging, compassionate robot that has nice handwriting and can repair a nuclear reactor. And if you do, write it up and send it to our tips line!