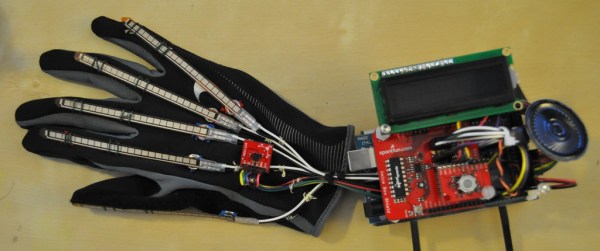

As part of a senior design project for a biomedical engineering class, [Kendall Lowrey] worked with a team to develop a device that translates American Sign Language into spoken English. Wanting to eclipse the glove-based devices that came before, the team set out to move beyond strictly spelling out words by combining signs with common gestures. The project is built around an Arduino Mega and is limited to the alphabet and about ten words because of initial program space constraints. When the readings from the five flex sensors and the three accelerometer values register an at-rest state for two seconds, the device takes a reading and looks up the most likely word or letter in a table. It then sends that result to a voicebox shield, which translates the word or letter into phonetic sounds.
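
The hold-still-then-look-up logic can be sketched roughly as follows. This is a minimal host-side illustration in Python, not the team's Arduino firmware. The sampling rate, jitter tolerance, template values, and the nearest-match (squared distance) lookup are all assumptions, since the write-up only says the device picks the "most likely" entry from a table. The names `Recognizer`, `is_at_rest`, and `closest_entry` are hypothetical.

```python
from collections import deque

REST_SECONDS = 2.0   # from the write-up: hand must be at rest for two seconds
SAMPLE_HZ = 50       # assumed sampling rate
TOLERANCE = 10       # assumed max per-channel jitter, in raw sensor counts

# Hypothetical lookup table: five flex values followed by three accelerometer values.
TEMPLATES = {
    "A": (800, 200, 200, 200, 200, 512, 512, 700),
    "B": (300, 800, 800, 800, 800, 512, 512, 700),
    "hello": (800, 800, 800, 800, 800, 700, 512, 512),
}


def is_at_rest(window, tolerance=TOLERANCE):
    """Return True if every channel varied by at most `tolerance` across the window."""
    return all(max(channel) - min(channel) <= tolerance for channel in zip(*window))


def closest_entry(reading, templates=TEMPLATES):
    """Return the table key whose template has the smallest squared distance to `reading`."""
    def distance(template):
        return sum((a - b) ** 2 for a, b in zip(reading, template))
    return min(templates, key=lambda name: distance(templates[name]))


class Recognizer:
    """Buffers readings and emits a table entry once the hand has been still long enough."""

    def __init__(self, sample_hz=SAMPLE_HZ):
        # The window holds exactly REST_SECONDS worth of samples.
        self.window = deque(maxlen=int(REST_SECONDS * sample_hz))

    def feed(self, reading):
        """Add one 8-value reading; return a word or letter, or None if not yet at rest."""
        self.window.append(reading)
        if len(self.window) == self.window.maxlen and is_at_rest(self.window):
            result = closest_entry(reading)
            self.window.clear()  # require a fresh hold before the next output
            return result
        return None
```

Each call to `feed` takes one sample of the eight sensor values. The returned string is what would be handed to the speech hardware. In this sketch, clearing the window after a match means a sign held continuously would be spoken again after another two seconds, which is a design choice of the example rather than something the write-up specifies.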