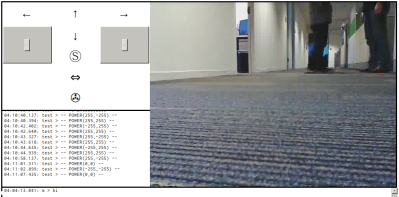

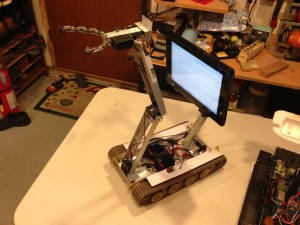

It’s great to see different kinds of hardware and software tossed into a project together, mixing things that don’t normally go together into something new. [Freddy Kilo] did just that with a project he calls his VR Robot Tank. It’s a telepresence device that uses a wireless Xbox controller to drive a tracked platform, which is itself controlled by a Raspberry Pi.

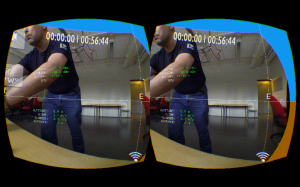

The Pi has two cameras on a pan-tilt mount, and those cameras are both aimed and viewed via a Google Cardboard-like setup. A healthy dose of free software glues it together, allowing things like video streaming (with UV4L) and steering via the wireless controller (with xboxdrv). A bit of fiddling was required for some parts – viewing the stereoscopic cameras, for example, is done by opening and positioning two video windows just right so that each is seen through one of the headset lenses. The setup doesn’t warp the image to account for the lens distortion in the headset, and the wireless range might be limited, but the end result seems to work well enough.

The tank is driven with the wireless controller while a mobile phone mounted in a headset lets the user see through the cameras; motion sensing in the phone moves those cameras whenever you move your head to look around. Remote control hobbyists will recognize the project as doing essentially the same job as FPV setups for model aircraft (for example, drone racing or even snow sleds), but this project uses a completely different hardware and software toolchain. It demonstrates the benefits of having access to open tools to use as virtual “duct tape”, letting people stick different things together to test a concept. It proves almost anything can be made to work if you have a willingness to fiddle!
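For a rough idea of what the head-tracking step involves, here is a minimal Python sketch of turning head yaw and pitch angles into pan-tilt servo pulse widths. It is not taken from [Freddy Kilo]’s code: the function names, the angle ranges, and the 1000–2000 µs pulse range are all assumptions chosen for illustration, and a real build would feed the result to whatever servo driver it uses.

```python
def angle_to_pulse_us(angle_deg, min_angle=-90.0, max_angle=90.0,
                      min_pulse_us=1000.0, max_pulse_us=2000.0):
    """Linearly map an angle in degrees to a servo pulse width in microseconds.

    All ranges here are assumed typical hobby-servo values, not figures
    from the original project.
    """
    # Clamp so that looking past the mount's travel does not over-drive the servo.
    clamped = max(min_angle, min(max_angle, angle_deg))
    # Fraction of the way through the allowed angle range, from 0.0 to 1.0.
    fraction = (clamped - min_angle) / (max_angle - min_angle)
    return min_pulse_us + fraction * (max_pulse_us - min_pulse_us)


def head_to_servo(yaw_deg, pitch_deg):
    """Convert head yaw/pitch (hypothetical phone sensor readings) to (pan, tilt) pulses."""
    pan = angle_to_pulse_us(yaw_deg)
    # Assume the tilt axis has a narrower mechanical range than the pan axis.
    tilt = angle_to_pulse_us(pitch_deg, min_angle=-45.0, max_angle=45.0)
    return pan, tilt


if __name__ == "__main__":
    # Looking straight ahead centers both servos.
    print(head_to_servo(0.0, 0.0))
```

Clamping before scaling keeps a sudden head turn from commanding the mount beyond its travel, which is the sort of detail that tends to surface during the fiddling stage.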


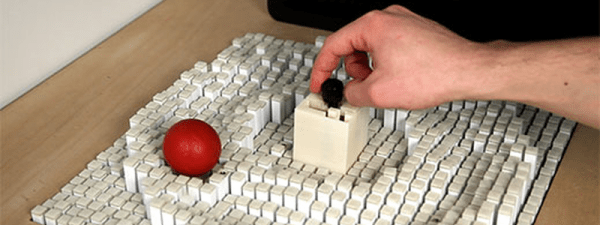

[Chris] works as part of a small team of developers in Cambridge, Massachusetts in the US. [Timo], one of their core members, works remotely from Heidelberg, Germany. In order to make [Timo] feel closer to the rest of the group,
