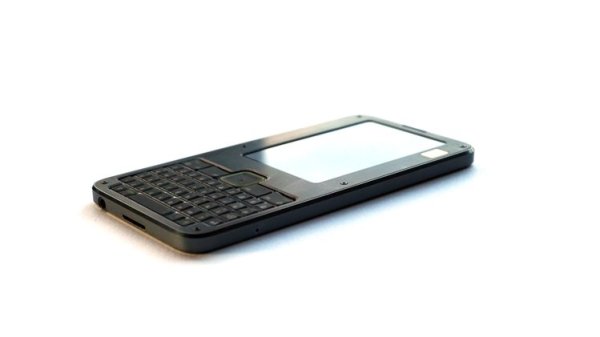

At some point, you will find yourself asking: is my device actually running the code I expect it to? [bunnie] aka [Andrew Huang] is passionate about making devices you can fundamentally, deeply trust, and his latest project is the Precursor communicator.

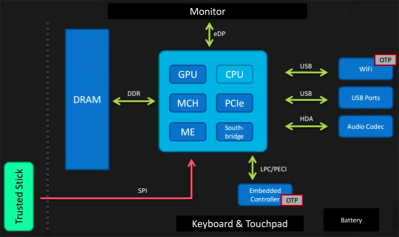

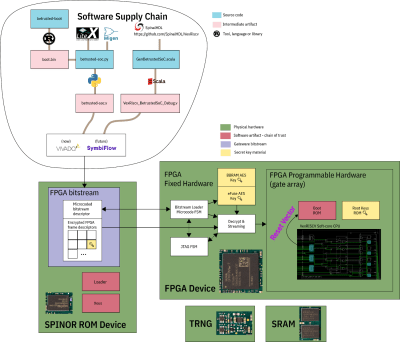

At the heart of it is an FPGA, and Precursor’s CPU is created out of the gates of that FPGA. This and a myriad of other design decisions make the Precursor fundamentally hard to backdoor, and you don’t have to take [bunnie]’s word for it. He’s made an entire video going through the architecture, boot protections and guarantees of the Precursor, teaching us what goes into a secure device that’s also practical to use.

If you can’t understand how your device works, your trust in it might be misplaced. In the hour-long video, [bunnie] explains the entire stack, from the lower levels of hardware to the root keys used to sign and verify the integrity of your OS, along the way demonstrating how you can verify that things haven’t gone wrong.

He makes sure to point out aspects you’d want to be cautious of, from physical security limitations to toolchain nuances. If you’re not up for a video, you can always check out the Precursor wiki, which has a treasure trove of information on the device’s security model.

As you might’ve already learned, it’s not enough for hardware to be open-source in order to be trustworthy. While open-source silicon designs are undoubtedly the future, their security guarantees only go so far.

Whether it’s esoteric hard drive firmware backdoors, weekend projects turning your WiFi card into a keylogger, or rootkits you can get on store-bought Lenovo laptops, there’s never a shortage of parties interested in collecting as much data as possible. Hell, even our latest This Week In Security installment has two fun malware examples.