Most of us have seen employees of Boston Dynamics kicking their robots, and many of us instinctively react with horror. More recently I’ve watched my own robots being petted, applauded for their achievements, and yes, even kicked.

Why do people react the way they do when mechanical creations are treated as if they were people, pets, or worse? There are some very interesting things to learn about ourselves when considering the treatment of robots as subhuman. But it’s equally interesting to consider the ramifications of treating them as human.

The Boston Dynamics Syndrome

Atlas being pushed

Spot being kicked

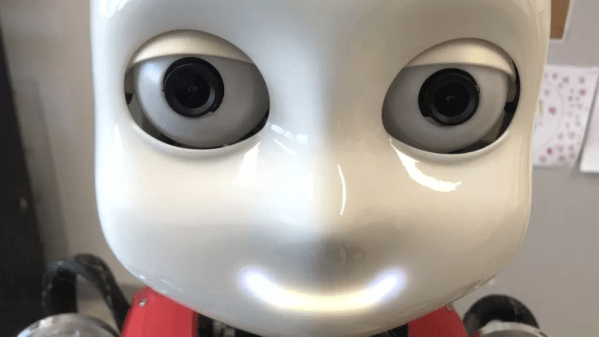

Shown here are two snapshots of Boston Dynamics robots taken from their videos about Spot and Atlas. Why do scenes like this create the empathic reactions they do? Two possible reasons come to mind. One is that we anthropomorphize the human-shaped one, meaning we think of it as human. That’s easy to do since not only is it human-shaped but the video shows it carrying a box using human-like movements. The other is that Spot resembles a dog, so the second snapshot perhaps evokes the strongest reactions in anyone who owns one, though its similarity to any four-legged animal will usually do.

Is it wrong for Boston Dynamics, or anyone else, to treat robots in this way? Being an electronic and mechanical wizard, you might have an emotional reaction and then catch yourself with the reminder that these machines aren’t conscious and don’t feel emotional pain. But it may be wrong for one very good reason.
