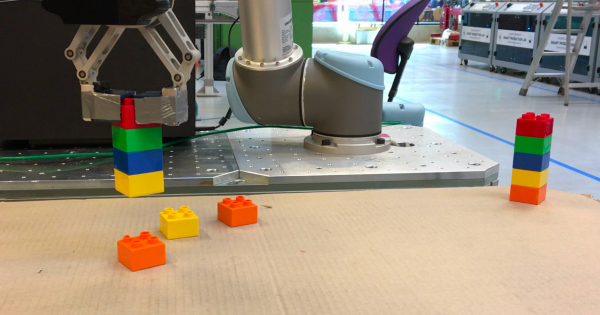

[Thomas Kølbæk Jespersen] and his classmates at Aalborg University’s Robot Vision course used MATLAB code and URscript to program a Universal Robots UR5 to stack Duplo bricks into low-fi Simpsons characters: yellow for Homer’s head, white for his shirt, and blue for his pants, for example.

The bricks are scattered randomly on a nearby table, and a camera mounted above the table images them to help determine each brick’s location, color, and orientation. This involves blob analysis, which helps the computer decide which pixels are part of a brick and which aren’t. A recursive grassfire algorithm with 4-connectivity then gives each brick pixel a number and assigns it to a blob.
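For readers who haven't met the grassfire algorithm, here is a minimal Python sketch of recursive 4-connected labeling. It is not the team's MATLAB code; the function name `grassfire_label` and the list-of-lists binary image are assumptions made for illustration.

```python
def grassfire_label(binary):
    """Label 4-connected blobs in a binary image (list of lists of 0/1).

    Illustrative sketch only; names and data layout are assumptions.
    """
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]  # 0 means "no blob"

    def burn(r, c, blob_id):
        # Stop at the image border, on background, or on already-labeled pixels.
        if not (0 <= r < rows and 0 <= c < cols):
            return
        if binary[r][c] == 0 or labels[r][c] != 0:
            return
        labels[r][c] = blob_id
        # 4-connectivity: spread up, down, left, right (no diagonals).
        burn(r - 1, c, blob_id)
        burn(r + 1, c, blob_id)
        burn(r, c - 1, blob_id)
        burn(r, c + 1, blob_id)

    blob_count = 0
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] == 1 and labels[r][c] == 0:
                blob_count += 1          # found an unlabeled brick pixel
                burn(r, c, blob_count)   # "set fire" to its whole blob
    return labels, blob_count


image = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 0],
]
labels, blob_count = grassfire_label(image)
```

Because diagonal neighbors don't count under 4-connectivity, the two pixels that touch only at a corner end up in separate blobs. On a full camera frame a recursive version like this can exceed Python's default recursion limit, so an explicit stack would be the safer choice there.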

To determine the orientation (the bricks are all assumed to be stud-side up and not overlapping), the blob is divided into quadrants, and within each quadrant the distance between the center of the blob and its farthest pixel is measured. This technique is not likely to work as well with a brick that isn’t square. Each brick’s location in pixels is then translated into Cartesian coordinates, making it a cinch for the robot to pick it up. See [Thomas]’s GitHub for the MATLAB and URscript code.
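The quadrant idea can be sketched in Python as follows. The write-up doesn't spell out how the measured distances become an angle, so the conversion in `square_orientation_deg` is an assumption: for a square blob, the farthest pixel in a quadrant is treated as a corner, and a corner sits 45 degrees away from the brick's edge direction. Both function names are hypothetical, and blobs are assumed to be lists of `(x, y)` pixel coordinates.

```python
import math


def quadrant_farthest(pixels):
    """Return the blob centroid and, per quadrant, the farthest pixel.

    Quadrants are keyed by (dx >= 0, dy >= 0) relative to the centroid.
    Each value is (distance, dx, dy) in pixel units.
    """
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    farthest = {}
    for x, y in pixels:
        dx, dy = x - cx, y - cy
        key = (dx >= 0, dy >= 0)
        dist = math.hypot(dx, dy)
        if key not in farthest or dist > farthest[key][0]:
            farthest[key] = (dist, dx, dy)
    return (cx, cy), farthest


def square_orientation_deg(pixels):
    """Estimate a square blob's rotation in [0, 90) degrees.

    Assumption (not from the original project): the farthest pixel in the
    dx >= 0, dy >= 0 quadrant is a corner of the square.
    """
    _, farthest = quadrant_farthest(pixels)
    _, dx, dy = farthest[(True, True)]
    corner_angle = math.degrees(math.atan2(dy, dx))
    return (corner_angle - 45.0) % 90.0


# An axis-aligned 5x5 square and a diamond (a square turned 45 degrees).
square = [(x, y) for x in range(5) for y in range(5)]
diamond = [(x, y) for x in range(-3, 4) for y in range(-3, 4)
           if abs(x) + abs(y) <= 3]
square_angle = square_orientation_deg(square)
diamond_angle = square_orientation_deg(diamond)
```

Angles here are in image coordinates (y pointing down) and only defined up to 90 degrees, which is all a square brick needs. A rectangular 2x4 brick would break the corner assumption, which matches the caveat above about non-square bricks.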

Looking for more UR5 projects? Check out the Sewbo garment-making robot we published last year.
