[Hasith] sent in this project where he goes through the process of designing a one-instruction CPU in Verilog. It may not win a contest for the coolest build on Hack A Day, but we really do appreciate the “applied nerd” aspect of this build.

With only one instruction, an OISC is a lot simpler than the mess of instructions we have to deal with today. A few instructions are Turing-complete by themselves (subtract and branch if negative, and move, for example). An OISC built around one of these instructions can therefore emulate a Turing machine.
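To make the idea concrete, here is a minimal Python sketch of an interpreter for SUBLEQ (subtract and branch if less than or equal to zero), a close cousin of subtract and branch if negative. It follows common esolang conventions rather than anything from [Hasith]'s Verilog: program and data share one memory, each instruction is three consecutive words `a b c`, and a jump to a negative address halts. The function name and the `max_steps` guard are illustrative.

```python
def subleq(mem, pc=0, max_steps=100_000):
    """Run a SUBLEQ program in place and return the memory list.

    Each instruction is three words a, b, c:
        mem[b] -= mem[a]
        if mem[b] <= 0: jump to c, otherwise fall through to pc + 3
    A negative program counter halts the machine (assumed convention).
    """
    for _ in range(max_steps):
        if pc < 0:
            return mem
        a, b, c = mem[pc], mem[pc + 1], mem[pc + 2]
        mem[b] -= mem[a]
        pc = c if mem[b] <= 0 else pc + 3
    raise RuntimeError("step limit reached without halting")


# Add mem[10] into mem[11] using the scratch cell mem[9], which starts at 0:
#   0: Z -= X      3: Y -= Z (so Y += X)      6: Z -= Z, then jump to -1 (halt)
prog = [10, 9, 3,   9, 11, 6,   9, 9, -1,   0, 5, 7]
subleq(prog)
print(prog[11])  # 12
```

Note that even a plain addition costs three instructions and a scratch cell; that overhead is the price of having only one instruction.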

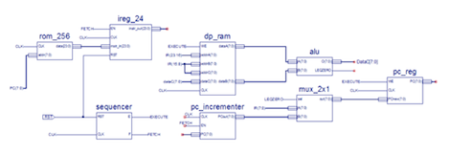

[Hasith]’s build log goes through the entire process of building a fully functional computer – the ALU, program counter, instruction register and RAM. There’s even Verilog code if you want to try this out for yourself.
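One way to picture how those parts cooperate is a cycle-level model. The Python sketch below assumes standard SUBLEQ semantics and a Harvard layout (program in ROM, data in RAM): the program counter indexes ROM, the instruction register latches an `(a, b, c)` triple, RAM is read at `a` and `b` in the same cycle, a single subtractor acts as the ALU, and running off the end of ROM halts. The class and field names are hypothetical and are not taken from [Hasith]'s Verilog.

```python
from dataclasses import dataclass


@dataclass
class OiscCpu:
    rom: list                  # program memory: one (a, b, c) tuple per address
    ram: list                  # data memory
    pc: int = 0                # program counter (indexes ROM, not RAM)
    ir: tuple = (0, 0, 0)      # instruction register
    halted: bool = False

    def step(self):
        # Fetch: latch the current ROM word into the instruction register.
        if not 0 <= self.pc < len(self.rom):
            self.halted = True
            return
        self.ir = self.rom[self.pc]
        a, b, c = self.ir
        # Read both operands in the same cycle (two RAM read ports).
        op_a, op_b = self.ram[a], self.ram[b]
        # ALU: one subtractor plus a "result <= 0" flag for the branch.
        result = op_b - op_a
        branch = result <= 0
        # Write back, then choose the next program counter value.
        self.ram[b] = result
        self.pc = c if branch else self.pc + 1

    def run(self, max_cycles=10_000):
        for _ in range(max_cycles):
            if self.halted:
                return self.ram
            self.step()
        raise RuntimeError("cycle limit reached")


# ram[0] is a zero scratch cell; add ram[1] into ram[2], then fall off the ROM.
cpu = OiscCpu(rom=[(1, 0, 1), (0, 2, 2), (0, 0, 3)], ram=[0, 5, 7])
print(cpu.run())  # [0, 5, 12]
```

Reading two RAM locations per cycle is what makes a dual-ported (or otherwise multiplexed) RAM necessary in this kind of design.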

This isn’t the first time we’ve read about a one-instruction set computer. Years ago, we saw a hardware version of a subtract and branch if negative computer. [Hasith] plans a follow-up post on writing a small compiler for his OISC. [Hasith] seems like a pretty cool guy, so we’re hoping it’s not a Brainfuck compiler; we wouldn’t want him to take up a drinking habit.

Do you know of Transport Triggered Architectures? They only have a move instruction: data is moved to “special” units that do all the work, such as multiplication, addition, and any other functions you need. When a unit has finished, you grab the data from it with another move and put it wherever the next step needs it… Even more complex functions are possible… If you combine that with automated code generation, you get something like this:

http://tce.cs.tut.fi/

Seems pretty impressive to me…
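A rough Python sketch of the transport-triggered idea: the only instruction is a move, and a functional unit fires when a value is written to its trigger port. The unit names, the `"unit.port"` naming scheme, and the `(src, dst)` move format are invented for illustration; real TTA toolchains such as the one linked above define their own.

```python
import operator


class FunctionUnit:
    """One operand port, one trigger port and one result port."""

    def __init__(self, op):
        self.op = op
        self.operand = 0
        self.result = 0

    def trigger(self, value):
        # Writing to the trigger port is what starts the operation.
        self.result = self.op(self.operand, value)


class TtaMachine:
    """Every instruction is a single move from a source port to a destination port."""

    def __init__(self):
        self.units = {"add": FunctionUnit(operator.add),
                      "mul": FunctionUnit(operator.mul)}
        self.regs = {}

    def read(self, port):
        kind, name = port.split(".")
        if kind == "imm":              # immediate value, e.g. "imm.3"
            return int(name)
        if kind == "r":                # general register, e.g. "r.x"
            return self.regs.get(name, 0)
        if name == "result":           # unit output, e.g. "add.result"
            return self.units[kind].result
        raise ValueError(f"cannot read {port}")

    def write(self, port, value):
        kind, name = port.split(".")
        if kind == "r":
            self.regs[name] = value
        elif name == "operand":
            self.units[kind].operand = value
        elif name == "trigger":
            self.units[kind].trigger(value)
        else:
            raise ValueError(f"cannot write {port}")

    def run(self, moves):
        for src, dst in moves:
            self.write(dst, self.read(src))


# (3 + 4) * 5 expressed purely as moves
machine = TtaMachine()
machine.run([
    ("imm.3", "add.operand"),
    ("imm.4", "add.trigger"),       # add fires: result = 7
    ("add.result", "mul.operand"),
    ("imm.5", "mul.trigger"),       # mul fires: result = 35
    ("mul.result", "r.x"),
])
print(machine.regs["x"])  # 35
```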

I’ve attempted to build one of these too, using TTL. My build was so completely useless I didn’t pursue it.

It used the basic subtract and branch if negative instruction, but each instruction held only a source and a destination register. The jump target was stored in a register at the end of memory, which saved an extra word per instruction. When a jump condition occurred, that register was clocked into the program counter.
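Here is one possible reading of that design as a Python sketch; it is an interpretation, not the commenter's actual TTL build. It assumes program and data share one memory, each instruction is two words (source, destination), the destination is overwritten with `dst - src`, a negative result loads the last memory word (the jump register) into the program counter, and any program counter that doesn't point at a full instruction halts. The function name is hypothetical.

```python
def run_sbn2(mem, pc=0, max_steps=100_000):
    """Two-operand subtract and branch if negative with a memory-mapped jump register.

    Each instruction is two words, src and dst:
        mem[dst] -= mem[src]
        if mem[dst] < 0: pc = mem[-1]   (jump register at the end of memory)
        else:            pc += 2
    Assumption: any pc that does not point at a full instruction halts.
    """
    jump_reg = len(mem) - 1
    for _ in range(max_steps):
        if not 0 <= pc <= len(mem) - 2:
            return mem
        src, dst = mem[pc], mem[pc + 1]
        mem[dst] -= mem[src]
        pc = mem[jump_reg] if mem[dst] < 0 else pc + 2
    raise RuntimeError("step limit reached without halting")


# Subtract mem[5] from mem[6] twice; the second result is negative,
# so the jump register (mem[7] = 100) sends the pc out of range and halts.
mem = [5, 6, 5, 6, 0, 3, 5, 100]
run_sbn2(mem)
print(mem[6])  # -1
```

In this model every branch goes to the same place until the program rewrites `mem[-1]`, so the shorter instructions are paid for with extra set-up moves before each jump.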

The work directory for the project is there if anyone’s interested. It contains a short program, translated from C, that calculates the number e (2.718…). I think it should be adaptable to Hasith’s project.

The second diagram (the overall block schematic) on his site is tiny and cannot be enlarged. Otherwise, an inspiring write-up.

No worries about the need to pipeline it either. But a multi-core would be nice :)

@Miroslav: Oops, fixed. Thanks for the heads-up.

@Darkfader: Oh man, multicore OISCs? Now that’s a special version of my own personal hell. But I’ll think about it :)

@Alexis K: I thought about doing a TTA as well, but I figured it would be easier to show the flow of one instruction than to map out the memory space. I will take a look at what you’ve done, though.

I wrote an evolution library in Java. Currently I have it evolving multi-threaded OISC scripts (byte copy -> jump, to be precise). Strangely, no ‘individual’ (distinct genome) with two or more concurrent threads has ever survived a single ‘selection’ process.

I’d imagine there’s probably some very interesting maths (somewhere) relating to why. Either that or it’s just a Bad Idea(TM).

I can imagine two OISC threads operating simultaneously (without locks, flags, or any other thread niceness, over the same data at the same time) would necessitate all sorts of crazy code. Maybe it’s just vastly improbable that any such program could work.

Nice project,

Andy

Interesting way to use such a device. Assuming it is Turing-complete, you should be able to make this work. What are your fitness criteria and your mutation, predation and unlucky ratios? What is your method of combining “fit” genomes?

I have a silly little GA online that I did a few years back. It just evolves RGB color states and only requires a browser with JS support: http://orionrobots.co.uk/orion_apps/evolution/evolving.html. The principles are much the same, though, and I have been playing with more advanced ones. I want to build something like this: http://en.wikipedia.org/wiki/Tierra_(computer_simulation)

@dannystaple,

Hi,

As far as I know it is Turing-complete; the esolang page is here: http://www.esolangs.org/wiki/BitBitJump
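For readers who haven't met BitBitJump: memory is an array of bits, each instruction is three word-sized addresses A, B, C, and executing it copies the bit at address A to address B and then jumps to C. The Python sketch below assumes little-endian words of `w` bits and treats a jump to the current instruction as halt; check the esolang page for the exact conventions before relying on either. The helper names are hypothetical.

```python
def read_word(bits, addr, w):
    """Read a w-bit word starting at bit address addr (little-endian, assumed)."""
    return sum(bits[addr + i] << i for i in range(w))


def write_word(bits, addr, value, w):
    """Store value as a w-bit little-endian word starting at bit address addr."""
    for i in range(w):
        bits[addr + i] = (value >> i) & 1


def run_bitbitjump(bits, w, max_steps=100_000):
    """Execute instructions (A, B, C): copy bit A to bit B, then jump to C."""
    pc = 0
    for _ in range(max_steps):
        a = read_word(bits, pc, w)
        b = read_word(bits, pc + w, w)
        c = read_word(bits, pc + 2 * w, w)
        bits[b] = bits[a]
        if c == pc:          # assumed halting convention: jump to self
            return bits
        pc = c
    raise RuntimeError("step limit reached without halting")


# 6-bit words, 64 bits of memory. Two instructions, then data at bits 36..38:
#   bit 0:  copy bit 36 -> 37, jump to 18
#   bit 18: copy bit 37 -> 38, jump to 18 (halt)
layout = [(0, 36), (6, 37), (12, 18), (18, 37), (24, 38), (30, 18)]
bits = [0] * 64
for addr, value in layout:
    write_word(bits, addr, value, 6)
bits[36] = 1
run_bitbitjump(bits, 6)
print(bits[36:39])  # [1, 1, 1]
```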

Survival and breeding criteria are currently both based on how closely the string output matches the target. I use a “character distance” in which “AAA” is 3 points away from “BBB” and 255 points away from “AA”; the closer the match, the fitter the individual. The two criteria could differ, but for a simple example they don’t need to. Breeding is binary overwriting: half the genome comes from parent one and half from parent two, in random blocks of code. Parents are selected by fitness (best with second best, although I have tried others, such as random).

Mutation is n random bits flipped (I have tried other strategies, but for the extra effort they produced no different behaviour).
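The fitness, breeding and mutation rules described above are compact enough to sketch. The Python below is a hypothetical reconstruction, not Drew's Java library. It assumes equal-length `bytes` genomes; `char_distance` charges the absolute difference of character codes per position plus 255 for each missing or extra character (which reproduces both examples above), `crossover` copies parent one and overwrites a random half of its blocks with parent two's, and `mutate` flips n random bits. `next_generation` leaves out lifetimes and the other strategies mentioned.

```python
import random


def char_distance(output: str, target: str) -> int:
    """Lower is fitter: per-character code difference, 255 per missing/extra char."""
    common = sum(abs(ord(x) - ord(y)) for x, y in zip(output, target))
    return common + 255 * abs(len(output) - len(target))


def crossover(p1: bytes, p2: bytes, block: int, rng: random.Random) -> bytes:
    """Copy p1, then overwrite a random half of its blocks with p2's blocks."""
    if len(p1) != len(p2):
        raise ValueError("sketch assumes equal-length genomes")
    child = bytearray(p1)
    starts = list(range(0, len(p1), block))
    for start in rng.sample(starts, len(starts) // 2):
        child[start:start + block] = p2[start:start + block]
    return bytes(child)


def mutate(genome: bytes, n: int, rng: random.Random) -> bytes:
    """Flip n randomly chosen bits (the same bit may be picked twice)."""
    g = bytearray(genome)
    for _ in range(n):
        bit = rng.randrange(len(g) * 8)
        g[bit // 8] ^= 1 << (bit % 8)
    return bytes(g)


def next_generation(population, fitness, size, block, n_flips, rng):
    """Breed the best with the second best (fitness returns a distance)."""
    ranked = sorted(population, key=fitness)   # lowest distance first
    best, second = ranked[0], ranked[1]
    return [mutate(crossover(best, second, block, rng), n_flips, rng)
            for _ in range(size)]
```

In the real setup, `fitness` would run each genome on the OISC and return `char_distance(output, target)`.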

The real problem has been breeding. I have come to the conclusion that this type of program basically cannot breed at a simple level of adaptation. I can envision a higher-level organism (say, upwards of a few million generations) being able to pass on some form of discrete information to any other member of a small population. But such a highly evolved individual would not be very good at much other than breeding. If I set the individual lifetime to anything reasonable (say, 3 generations), I get nothing at the end of an evolutionary run (the last population is on average no fitter than the first). Basically, heritability is fundamentally Very Unlikely in BitBitJump script evolution.

Currently, if I am lucky, I can run 10,000 generations capped at 100 individuals, starting from a population of null (random) genomes, and get a three-character match (i.e. depressingly inept evolution).

Having said that, I have developed some interesting techniques for getting more evolutionary bang for your buck: if you know a little about how the individuals interact, mutate and evolve, in certain cases you can push the speed and adaptability by startling amounts.

The problem with BitBitJump is that there are no discrete packets of data. Every breeding interaction may as well be a random bit-flip mutation, because the transferred “genes” aren’t being used in the same way; fundamentally, amoebas and aardvarks are genomically closer than any two individuals in my current population. I am now looking at using specially formatted “lambda calculus”, because it is basically a one-instruction machine where everything is a function of something else. This means that every conceivable point in the genome is packaged, reducing to a single value, and is (hopefully) much more heritable.

Drew.

p.s. I have tried more advanced forms of selection, breeding strategy and lifecycle; in each case there was no appreciable positive impact on heritability rates.

Sorry for the double post…

I have heard of Tierra before. It’s an intriguing idea, and I’d love to see a modern implementation using distributed computing.

My focus is on using evolution to form functional programs. My goal is to one day be able to drop an algorithm into the selection criteria (and tell it the balance between solution cycles and criteria matching) and have the evolver evolve its best solution.

Drew.

Really cool project, Hasith! Thanks for sharing.

Awesome! Does that mean several of them can fit inside the FPGA? Has anyone programmed these to do trigonometric operations?

@Roberto: An OISC core capable of trigonometric operations is… very daunting. Remember, to get ‘classical’ ASM instructions (ADD, MOV, BRANCH, etc.), we’re combining several SUBLEQ instructions. In terms of code density, this is very inefficient.
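To put a number on the code-density point, here is a hypothetical Python sketch of how a command expander might lower ADD, MOV and JMP into SUBLEQ triples, using the usual esolang idioms and a scratch cell `Z` that must hold zero. It assumes a layout with program and data kept separate (jump targets are ROM indices, data lives in RAM) and that running off the end of ROM halts; the macro names and `Z = 0` are illustrative choices, not [Hasith]'s actual expander.

```python
Z = 0  # hypothetical RAM address of a scratch cell that must start at 0


def expand(op, *args):
    """Lower one pseudo-instruction to SUBLEQ triples (a, b, target).

    target=None means "fall through to the next instruction".
    """
    if op == "JMP":      # unconditional: Z - Z = 0 always takes the branch
        (target,) = args
        return [(Z, Z, target)]
    if op == "ADD":      # ram[dst] += ram[src]
        src, dst = args
        return [(src, Z, None), (Z, dst, None), (Z, Z, None)]
    if op == "MOV":      # ram[dst] = ram[src]
        src, dst = args
        return [(dst, dst, None), (src, Z, None), (Z, dst, None), (Z, Z, None)]
    raise ValueError(f"unknown op {op}")


def assemble(program):
    """Expand every macro and resolve fall-through targets to pc + 1."""
    rom = []
    for op, *args in program:
        for a, b, target in expand(op, *args):
            rom.append((a, b, len(rom) + 1 if target is None else target))
    return rom


def run(rom, ram, max_steps=10_000):
    """Execute SUBLEQ triples: ram[b] -= ram[a]; branch if the result <= 0."""
    pc = 0
    for _ in range(max_steps):
        if not 0 <= pc < len(rom):   # running off the ROM halts
            return ram
        a, b, target = rom[pc]
        ram[b] -= ram[a]
        pc = target if ram[b] <= 0 else pc + 1
    raise RuntimeError("step limit reached")


rom = assemble([("ADD", 1, 2), ("MOV", 2, 3)])
print(len(rom))                # 7 SUBLEQ instructions for 2 "classical" ones
print(run(rom, [0, 5, 7, 0]))  # [0, 5, 12, 12]
```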

I’m working on another CPU design based on a MIPS layout. This would be closer to a modern CPU rather than the purely academic example that is the OISC. I’m guessing that would be better suited to trigonometric operations.

Calculating trig functions on an OISC is about as difficult as getting division working with some degree of precision (often no small task). You can apply a Taylor series after that works.
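For a sense of what "getting division working" involves, here is binary long division (shift-and-subtract, with the shifts done as doublings) in Python, using only addition, subtraction and comparison: the primitives an OISC macro library would build first. It is a generic textbook sketch for non-negative integers, not code from this project; on a real SUBLEQ machine, each addition and comparison would itself be a macro sequence.

```python
def divide(n, d):
    """Return (quotient, remainder) for n >= 0, d > 0 using only +, - and compares.

    Build a table of d, 2d, 4d, ... by repeated addition, then subtract the
    largest entries that still fit, exactly like binary long division.
    """
    if n < 0 or d <= 0:
        raise ValueError("sketch only handles n >= 0 and d > 0")
    table = []                       # (multiple of d, matching power of two)
    multiple, power = d, 1
    while multiple <= n:
        table.append((multiple, power))
        multiple, power = multiple + multiple, power + power
    quotient = 0
    for multiple, power in reversed(table):
        if n >= multiple:
            n -= multiple
            quotient += power
    return quotient, n


print(divide(100, 7))  # (14, 2)
```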

If you want extra fun, try doing this on a 1-bit OISC, producing n-bit output. Using a memory-mapped ALU is cheating ;)

If you’re trying to compute trig/division/log/exp functions on a computer without hardware support, I’d suggest you look into CORDIC. It’s very fast and can be done entirely with add, subtract, table lookup and a few bit-shift instructions.
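A minimal Python sketch of CORDIC in rotation mode, computing sine and cosine with integer adds, subtracts, arithmetic right shifts and a table of arctangents. The `math` module is used only to build the table and the gain constant up front, the way a real implementation would precompute them into ROM; the 16-bit fixed-point format and 16 iterations are arbitrary choices for illustration.

```python
import math

FRAC_BITS = 16                  # fixed point: value = integer / 2**16
ONE = 1 << FRAC_BITS
ITERATIONS = 16

# Precomputed tables (these would live in ROM on a small machine).
ATAN_TABLE = [round(math.atan(2.0 ** -i) * ONE) for i in range(ITERATIONS)]
GAIN = 1.0
for i in range(ITERATIONS):
    GAIN *= math.cos(math.atan(2.0 ** -i))
K = round(GAIN * ONE)           # starting x absorbs the CORDIC gain


def cordic_sin_cos(angle):
    """Return (sin, cos) in fixed point for a fixed-point angle, |angle| <= pi/2."""
    x, y, z = K, 0, angle
    for i in range(ITERATIONS):
        # Rotate by +/- atan(2**-i), driving the residual angle z towards 0.
        if z >= 0:
            x, y, z = x - (y >> i), y + (x >> i), z - ATAN_TABLE[i]
        else:
            x, y, z = x + (y >> i), y - (x >> i), z + ATAN_TABLE[i]
    return y, x


s, c = cordic_sin_cos(round(math.pi / 6 * ONE))
print(s / ONE, c / ONE)  # roughly 0.5 and 0.866
```

The loop body is nothing but shifts, adds and a table lookup, which is why CORDIC suits machines without a multiplier.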

I mocked up this design (I think) in Logisim this afternoon and had a play with it. While it’s kind of interesting, I am looking forward to your follow-up so I can figure out what to actually do with it. I note that you need to preload data into RAM, because (unless I’m mistaken) the program in ROM cannot actually set a value in RAM except from other values already in RAM. For anyone interested, I’ve popped the (probably laughably bad) Logisim file on http://orionrobots.co.uk/dl41

@DannyStaple: Finally, another Logisim user! That app is really unbelievably useful.

And no, keeping RAM and ROM separate was on purpose. I didn’t want to complicate things by using the RAM to store both instructions and data (a von Neumann architecture, for the technically minded). If I had done that, I *might* have been able to jury-rig a load operation somehow. As it stands, the command-expander module I’m putting together writes the load operations to a .ram file that is read in at runtime.

I agree about logisim, simply great! Looking forward to part II.

Hasith, I was unable to find a good way to model that dual-port RAM, so for now I’ve made a horrible hack. There are two RAM modules, and any write (when WE is asserted) is pushed to both, so the “dual channel” is emulated by reading one port from each module. I set up a multiplexer to switch the C address line in for the B/A ones when write is enabled. Have you got a better way? Since Logisim files are XML, I think I’ll pop this into a GitHub repo: https://github.com/dannystaple/ComputingLogic

I wonder if I can automate Logisim to load a RAM file into both banks when a simulation is started.
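The two-bank workaround can be modelled behaviourally in a few lines of Python: every write goes to both single-port banks, so each bank always holds an identical copy and can serve one read port on its own. The class and method names are hypothetical; this models the idea, not the Logisim circuit itself.

```python
class MirroredDualPortRam:
    """Two read ports built from two single-port banks that always hold the same data."""

    def __init__(self, size):
        self.bank_a = [0] * size   # answers read port A
        self.bank_b = [0] * size   # answers read port B

    def read(self, addr_a, addr_b):
        # Each bank has its own address port, so both reads happen in one cycle.
        return self.bank_a[addr_a], self.bank_b[addr_b]

    def write(self, addr, value):
        # With WE asserted, the same address and data go to both banks,
        # which is what keeps the two copies identical.
        self.bank_a[addr] = value
        self.bank_b[addr] = value

    def load(self, image):
        """Preload one RAM image into both banks, e.g. at simulation start."""
        for addr, value in enumerate(image):
            self.write(addr, value)


ram = MirroredDualPortRam(8)
ram.load([5, 7, 0])
print(ram.read(0, 1))  # (5, 7)
ram.write(2, 12)
print(ram.read(2, 2))  # (12, 12)
```

The cost of this approach is doubled storage, plus the requirement that every path that writes RAM (including any initial load) feeds both banks.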

I’m still working on a few code examples for SUBLEQ. Right now, I’m having trouble working out a few things with Bison grammars.

@DannyStaple: you could run the RAM at double the clock rate and do two sequential fetches from RAM. Instead of doubling CLK, try feeding a divided CLK into the main circuit and CLK to the RAM (use a D flip-flop to do the clock division). Dunno if that’ll actually work, though.
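The double-clock suggestion can also be modelled. A D flip-flop whose inverted output feeds back into D toggles on every rising edge of the fast clock, giving a half-rate clock for the CPU, while the single-port RAM performs one read per fast edge, i.e. two reads per CPU cycle. This Python sketch is a behavioural model under those assumptions (the names are hypothetical); it says nothing about setup and hold timing, which is where a real circuit could misbehave.

```python
class DFlipFlop:
    """Rising-edge D flip-flop. Feeding /Q back into D makes it divide by two."""

    def __init__(self):
        self.q = 0

    def rising_edge(self, d):
        self.q = d


class TimeMultiplexedRam:
    """Single-port RAM on the fast CLK, CPU on CLK/2: two reads per CPU cycle."""

    def __init__(self, contents):
        self.mem = list(contents)
        self.divider = DFlipFlop()     # its Q output is the CPU clock
        self.slow_clock_trace = []     # CPU clock level after each fast edge

    def cpu_cycle(self, addr_a, addr_b):
        """Spend two fast-clock edges fetching both operands for one CPU cycle."""
        operands = []
        for addr in (addr_a, addr_b):
            operands.append(self.mem[addr])               # one RAM access per fast edge
            self.divider.rising_edge(1 - self.divider.q)  # D = /Q toggles the divider
            self.slow_clock_trace.append(self.divider.q)
        return tuple(operands)


ram = TimeMultiplexedRam([10, 20, 30, 40])
print(ram.cpu_cycle(0, 2))   # (10, 30)
print(ram.cpu_cycle(1, 3))   # (20, 40)
print(ram.slow_clock_trace)  # [1, 0, 1, 0]: one CPU clock period per two fast edges
```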

That is not a bad idea. I also think I may have found a neat way in Logisim to keep the RAM contents editable while modularising: create a DPRam adaptor onto which a normal Logisim RAM module can be dropped, so you can view the contents and interact with that module normally, while the adaptor makes it behave (using the double-clock trick) as the dual-ported RAM.

Yay – it works. The result is in that git repo above.

You may want to have a look at this paper: “A Simple Multi-Processor Computer Based on Subleq”.
