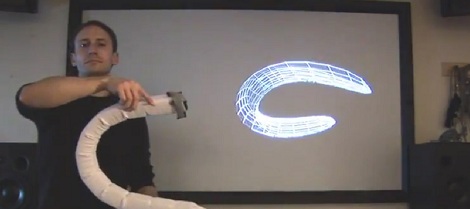

[Joseph Malloch] sent in a really cool video of him modeling a piece of foam twisting and turning in 3D space.

To translate the twists, bends, and turns of his piece of foam, [Joseph] used several inertial measurement units (IMUs) to track the shape of a deformable object. Each IMU combines a 3-axis accelerometer, a 3-axis gyroscope, and a 3-axis magnetometer to track its movement in 3D space. When these IMUs are placed along a deformable object, the data can be downloaded to a computer and the object can be reconstructed in virtual space.
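To give a feel for how orientation data alone can turn into a shape, here is a minimal sketch in Python. It is not [Joseph]'s code, and it rests on several assumptions of ours: each IMU reports its orientation as a unit quaternion `(w, x, y, z)`, there is one IMU at each end of the object, the object has a fixed known length, its local x-axis points along its length, and the orientation varies smoothly between the two ends. The function names are made up for illustration. The idea is to interpolate the orientation along the length and add up short steps in the direction each interpolated orientation points.

```python
import math

def q_normalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)

def q_slerp(q0, q1, t):
    """Spherical interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q are the same rotation; pick the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical: a normalized linear blend is stable
        return q_normalize(tuple(a + t * (b - a) for a, b in zip(q0, q1)))
    theta = math.acos(dot)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))

def q_rotate(q, v):
    """Rotate 3-vector v by unit quaternion q."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q.xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q.xyz, t)
    return (vx + w * tx + (y * tz - z * ty),
            vy + w * ty + (z * tx - x * tz),
            vz + w * tz + (x * ty - y * tx))

def reconstruct_centerline(q_start, q_end, length=1.0, steps=20):
    """Approximate the object's centerline as a list of 3D points.

    Assumption: the local x-axis of each orientation is the tangent
    of the object at that point.
    """
    points = [(0.0, 0.0, 0.0)]
    ds = length / steps
    for i in range(steps):
        # Orientation at the middle of this short segment
        q = q_slerp(q_start, q_end, (i + 0.5) / steps)
        tx, ty, tz = q_rotate(q, (1.0, 0.0, 0.0))
        px, py, pz = points[-1]
        points.append((px + ds * tx, py + ds * ty, pz + ds * tz))
    return points

if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    # Far end yawed 90 degrees about z: the foam bends into a quarter circle
    yaw90 = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
    for p in reconstruct_centerline(identity, yaw90, length=1.0, steps=5):
        print(tuple(round(c, 3) for c in p))
```

With more IMUs along the object, the same integration could be run piecewise between neighbouring sensors, which would relax the smoothness assumption.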

This project comes from the fruitful minds at the Input Devices and Music Interaction Lab at McGill University in Montreal. While we’re not quite sure how modeled deformable objects could be used in a user interface, what use is a newborn baby? If you’ve got an idea of what this could be used for, drop a note in the comments. Maybe the Power Glove needs an update – an IMU-enabled jumpsuit that would put the Kinect to shame.

[youtube=http://www.youtube.com/watch?v=-Dqvf1CXPWg&w=470]

First thing that comes to my mind is a robotic tentacle.

Also iron man.

How about tactile oriented people model things in 3d using their preferred method?

A complex robotic arm like a tentacle would be perfect for this

Cybersextoys.. of course

Teledildonics; Don’t tell me you weren’t thinking it.

The perfect gift for the girl on the go. Works for long distance anniversaries too. :D

If you are thinking about an IMU suit, you should check out the work they did for Skrillex for his Mothership tour. He had an IMU on every major joint as well as gloves, and the data points were rendered into a skeleton, then rendered with different screens and projected onto the stage. It was VERY cool, but there was some very noticeable lag.

http://www.youtube.com/watch?v=e2ISfFmN0UE

Uncanny, I’d feel more comfortable with this thing killing people…

hiding the real guy and playing the music at the same lag would make it ok again

delay kills the effect

have to agree…would have been a bit better if…

1. he wasn’t visible on stage

2. the music had a delay sync’d with the sensor/render lag.

There are a lot of applications in biomechanics. I designed a similar (less visually impressive) radio-based system for my university thesis.

I’m actually developing a robotic snake that happens to produce similar results using just a single IMU. And it can move itself.

I think they should have just stuck to one IMU and then three pull sensors per segment, that would require a lot less data and allow higher time-resolution than IMU chips (even with SPI, a dozen chips wouldn’t be able to do more than 100fps)

There are only two IMUs used in the video – one at each end of the object.

There are a few advantages to using IMUs instead of pull sensors. Pull sensors are mechanically weak and will break after a while, and they depend on the object to be sensed being stiff enough – the IMU approach will work even on very flexible objects. Also, using the IMUs we can easily sense twisting of the object, which is much more complex with pull sensors.
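For anyone curious how twist can fall out of two orientation readings, here is a rough Python sketch of one standard approach, a swing-twist decomposition. It is only an illustration under assumptions, not necessarily the method used in the video: both IMUs are assumed to output unit quaternions `(w, x, y, z)` in the same reference frame, and the object's long axis is assumed to be the local x-axis of each sensor. The function names are hypothetical.

```python
import math

def q_conj(q):
    w, x, y, z = q
    return (w, -x, -y, -z)

def q_mul(a, b):
    """Hamilton product of two quaternions (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def twist_and_bend(q_a, q_b):
    """Return (twist, bend) in radians between the two ends.

    twist: rotation about the object's long axis (assumed local x).
    bend:  the remaining rotation, which tilts the long axis itself.
    """
    # Orientation of end B expressed in the frame of end A
    w, x, y, z = q_mul(q_conj(q_a), q_b)
    if w < 0.0:  # same rotation, shorter representation
        w, x, y, z = -w, -x, -y, -z
    # The twist part keeps only the component about the x-axis
    twist = 2.0 * math.atan2(x, w)
    # What is left over is the swing; clamp to guard against rounding
    bend = 2.0 * math.acos(min(1.0, math.hypot(w, x)))
    return twist, bend

if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    # End B rolled 60 degrees about the long axis relative to end A
    roll60 = (math.cos(math.pi / 6), math.sin(math.pi / 6), 0.0, 0.0)
    twist, bend = twist_and_bend(identity, roll60)
    print(math.degrees(twist), math.degrees(bend))
```

The split is handy because the twist and bend values can be mapped to separate controls, which is harder to get from sensors that only measure stretch along the surface.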

Cool, can anyone see the part number or manufacturer for this all in one imu device?

Brace your wallet….try not to cry…but it is most likely one of these….well two of them actually.

http://www.analog.com/en/mems-sensors/mems-inertial-sensors/products/index.html#iSensor_MEMS_Inertial_Measurement_Units

That is great money is not really an option for my project…

Cheers

Hey – the IMU used in the video is the Mongoose from ckdevices – basically an Arduino mini with on-board accelerometer, gyro, magnetometer and barometer. They are $115 each:

http://store.ckdevices.com/products/Mongoose-9DoF-IMU-with-Barometric-Pressure-Sensor-.html

The sensor-fusion algorithm is performed on the IMU board. I used one at each end of the object, with SPI communications between them and an XBee radio to send the data to a computer.
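If you want to try a similar setup, the two fused orientations have to be squeezed into something small enough to send over a serial radio link. The Python sketch below shows one hypothetical way to do that. The frame layout, marker byte, scaling, and checksum are all invented for illustration; this is not the Mongoose firmware's format or any XBee API frame. It assumes each board reports a unit quaternion `(w, x, y, z)`.

```python
import struct

# Hypothetical frame: 1 marker byte, 8 little-endian int16 values
# (two quaternions), 1 checksum byte = 18 bytes in total.
FRAME = struct.Struct("<B8hB")
MARKER = 0xA5   # arbitrary start-of-frame value chosen for this sketch
SCALE = 32767   # maps the range [-1.0, 1.0] onto int16

def pack_frame(q_a, q_b):
    """Encode two unit quaternions into one fixed-size frame."""
    ints = [int(round(max(-1.0, min(1.0, c)) * SCALE)) for c in (*q_a, *q_b)]
    body = struct.pack("<B8h", MARKER, *ints)
    checksum = sum(body) & 0xFF  # simple additive checksum
    return body + bytes([checksum])

def unpack_frame(frame):
    """Decode a frame back into two quaternions, checking its integrity."""
    if len(frame) != FRAME.size or frame[0] != MARKER:
        raise ValueError("not a valid frame")
    if sum(frame[:-1]) & 0xFF != frame[-1]:
        raise ValueError("bad checksum")
    fields = FRAME.unpack(frame)
    values = [v / SCALE for v in fields[1:9]]
    return tuple(values[:4]), tuple(values[4:])

if __name__ == "__main__":
    q_a = (1.0, 0.0, 0.0, 0.0)
    q_b = (0.7071, 0.0, 0.0, 0.7071)
    frame = pack_frame(q_a, q_b)
    print(len(frame), unpack_frame(frame))
```

Sending fused orientations rather than raw accelerometer, gyro, and magnetometer samples keeps each update small, which matters on a low-bandwidth radio link.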

They are not available yet, but personally I’m looking forward to this:

http://www.invensense.com/mems/gyro/mpu9150.html

essentially the same thing but in a single IC

It might do a hell of a job when used for motion capturing in movie animation (Pixar..). Why program every little movement of a virtual human, when you can just put on the motion capturing suit and simply play the movement?

Or one step further: first 3d-scan some person/animal into your animation studio and then record the motion and deformations with these sensors!

Car crash tests would be another application. Recording the material deformation in realtime would make dozens of high speed cameras obsolete.

I think this could be used for robots with different limbs, as a secondary positioning system. Instead of having to calculate the acceleration felt in the limbs based on rotary encoders and servo data (which might not account for bending and oscillation), you could just measure it.

Also, I think this is the way most people sense where their limbs are. It could lead to robots that walk with more natural gaits than ever before.

i find this too complicated, costly, and resource intensive … how about 1 or 2 cheap webcams and have the freakin snake colorcoded? as with the 3d glove .. using 1 webcam ?

This is a test for objects that will be used by dancers for a project we’re working on at the IDMIL. Webcams would not work for us since we need very nuanced control, the area to be covered is huge, the lighting will be unpredictable, and the dancers’ movements will affect visibility. Also, the visual appearance of the objects is important to the piece! (What you see in the video is only for testing the sensors.)

Of course, this method of sensing is not necessarily the best for every project, but for this one it is working very well. IMUs are quickly becoming much cheaper, so it’s also interesting to try out some new approaches to using them…

I see… I’m not sure about efficiency .. but I’ve got one more idea for you then … though I’m not sure it would work under those conditions; a dancing environment is the worst-case scenario, I guess … how about IR paint instead, and fiducials? And webcams without the IR filter … just an idea

sorry for the double post .. but considering more in detail .. i guess in your setup … the complicated HW seems essential indeed .. pardon me