[Steve] needed an alternative to the Xserve, since Apple stopped making it. His solution was to stick 160 Mac Minis into a rack. That’s 640 real cores, or 1280 if you count HyperThreading.

First, [Steve] had to tackle the shelving. Nobody made a 1U shelf to hold four Minis, so [Steve] worked with a vendor to design his own. One challenge was managing the exhaust air of each Mini. Plastic inserts were designed to ensure that exhaust wasn’t sucked into the intake of an adjacent Mini.

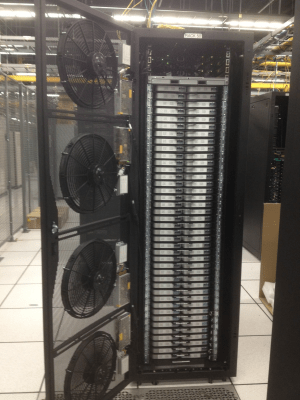

An array of 160 computers is going to throw off a lot of heat. To provide sufficient airflow, [Steve] built a custom cooling door out of four car radiator fans, connected to a 40A DC motor controller. This was all integrated into the door of the rack.

Another challenge was getting power to all of the Minis. Since this design was for a data center, the Minis would have to draw power from a Power Distribution Unit (PDU). Plugging each Mini in separately would have required a lot of PDUs and a lot of cables. The solution: a one-to-four Y cable for the Minis. This allows each shelf of four to plug into a single outlet.
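As a rough sanity check on the shelf and cabling arithmetic, here is a minimal Python sketch. The counts (160 Minis, four per 1U shelf, one outlet per shelf) come from the write-up; the four cores per Mini is simply 640 divided by 160, and the variable names are only illustrative.

```python
# Figures from the write-up: 160 Minis, four per 1U shelf.
MINIS = 160
MINIS_PER_SHELF = 4
CORES_PER_MINI = 4        # inferred: 640 real cores / 160 Minis
THREADS_PER_CORE = 2      # HyperThreading

shelves = MINIS // MINIS_PER_SHELF
real_cores = MINIS * CORES_PER_MINI
logical_cores = real_cores * THREADS_PER_CORE

outlets_without_y_cable = MINIS   # one PDU outlet per Mini
outlets_with_y_cable = shelves    # one PDU outlet per shelf of four

print(f"shelves: {shelves}")
print(f"cores: {real_cores} real, {logical_cores} with HyperThreading")
print(f"PDU outlets: {outlets_without_y_cable} without the Y cable, "
      f"{outlets_with_y_cable} with it")
```

This ignores outlets for switches and the cooling door, so the real PDU count would be somewhat higher.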

The final result is a professional-looking rack that can replace a rack of Xserves, and has capacity to be upgraded in the future.

Looks amazing! Some real professionalism there! However replacing a hard drive is going to be a pain! Wonder if there is a way to modify the mac mini to allow a more accessible hard drive access?

Well if you did it right you wouldn’t really store anything on them or would put it in a distributed, redundant system. That way if one failed another of the Minis would have that data and you could take out the failed mini and replace that. Likely simpler would be a gigabit connection to a fat NAS in another rack. Then the contents load into a cache on each device.

Or have a hot spare or two so that you can take your time during that really long process. At least the new ones dont really require anything beyond screwdrivers and a little spudging. No putty knife required.

Yeah that does make sense, I’m still thinking from the consumer point of view! The beauty of this system is that a set of 4 can slide out and so if redundancy was used it could be manageable if they were to be removed. To be fair if you have 160 of them in a rack, affording some form of network storage is probably a moot issue anyway!

I’m sorry this is off topic but geebles i’ve been trying to get in touch with you about the xbox 360 mod you did with heat sensors. bit-tech declined my forum registration and tumblr is being goofy with me. I’d like to talk to you as i’m attempting the same thing.

Gigabit is a bit underpowered (Infiniband is the gold standard really, cheaper than 10 Gb in fact). Maybe someone will sell him a thunderbolt switch for 100K$.

I doubt it.

You can get 24-port 10Gbe switches starting at $8k http://www.provantage.com/supermicro-sse-x24s~4SUP929Y.htm

Infiniband is way more expensive last time I checked. I would be happy to be proved wrong.

Infiniband != 10Gb Ethernet – it’s an expensive proposition and there is a lot more industry support for 10Gb E than Infiniband. Regardless though what is the application for this rack? I mean you can get 1U servers with dual power supplies and RAID cards that are actually designed for a rack without having to custom build this.

I give a lot of props for the workmanship but I would love to know what this supports

OSX Server. Apple no longer makes the 1RU Xserves.

RAID 6 the HDD’s of them all into one super-drive.

Servers don’t normally hold client data, that is handled by a storage subsystem. The onboard disks will only have the OS and cluster management software.

Given the relative cost between enterprise and consumer hardware (and the power savings), I suspect that replacing hard drives is easy. You just swap out a mini from your stack of 10 spares in the corner and send it back to Apple.

I actually have 15 spares, and since the entire rack is NetBooted, the drives require no changes as delivered from Apple, so it’s plug-in, hold the “N” key and power on, Done!

simbimbo: “so it’s plug-in, hold the “N” key and power on”

Wait, what ‘N’ key? You have to plug a keyboard into these individually to start them up?

Good catch. You do actually have to attach a keyboard when you first add a new machine ;-)

He’s not using hard drives. Are the SSD’s unreliable? I would think the little bitty fans would be the first thing to go.

I’ve been using consumer SSDs in enterprise RAID arrays for years without any major problems. I occasionally have a DOA or failure after a couple months but not often. Intel brand drives have been the most consistent in performance and longevity for me thus far.

Those little bitty fans are a !#T$$#!W!@!#$@ to replace too.

I would assume they’re being NetBoot’ed

But will it blend?

The funny thing is that, if I recall correctly, Apple’s official stance when they discontinued the Xserve was “use mac minis instead.” I never thought anyone actually followed that advice…

I’m curious about what he’s doing that needs that much “Mac Power” :S Surely they can’t all be build servers or anything. Granted I’m not really in the loop when it comes to all things Apple.

The most obvious answer is a Render Farm for After effects, Maya, Final cut pro and the like

Final Cut Pro can’t use them and those others don’t need to be on Mac.

Final Cut users will usually use Compressor for encodes. Compressor can use a qmaster cluster to speed up encodes. It divides the job up between all the nodes on the cluster.

But you’re right, the others don’t need to be on a mac. That’s just expensively unnecessary, unless you already have the hardware. I sometimes turn all our office workstations and xserves into a Cinema4D render farm if we really need the computing power.

Final Cut Pro can definitely use Mac mini both for local use and for distributed rendering

Maybe a bunch of remote desktop hosts? Or just a truly insane Mac-loving admin?

Unfortunately Mac mini suck at remote desktop.

May be cloud based app builder…

http://www.macminicolo.net/

A mac mini colocation service. Get your mac mini set up and mail it in. They install, and boot it up for you. $35/month and up.

I’m more interested in what software they’re using since there’s no Linux, BSD or “shudder” Windows equivalent.

Must be some specialized software with a limited client base.

Also now I’m interested if it’s possible to pull off the same thing with Intel’s NUC:

http://www.intel.com/content/www/us/en/motherboards/desktop-motherboards/next-unit-computing-introduction.html

i was unaware any one used xserve any more … this hurts the efficiency part of my brain @.@

I know, it’s really hurting the logic sensor and efficiency regulator. What a bizarre waste of money and time. In my experience the mac mini runs out of ram due to memory leaks long before it’s proved useful. About the only thing you can do is run Apple remote desktop which locks you into that vendor. SSH is really the only way to go, then all you have is a bunch of overpriced Unix machines there that are still going to underperform anything else at half the cost.

This is a great build, but i fail to see the benefit of using mac minis. It runs off a quad core i7, you could easily get the same density or greater of the same CPU with bare motherboards stacked tightly and running heatpipes between them, eliminating the problems of cooling 160 consumer computers in the space that would normally hold up to 20 of them.

Where would you get the bare motherboards from? Mac Pros? iMacs? This would probably make it cost a lot more!

Did you at any point consider that there are motherboards in PCs?

As he wanted a ‘Macintosh rack’ I assume that he is running OS X. I don’t believe there is a legal way to run OS X on non Apple hardware.

The vast majority of the world legislates that if an install disk is bundled then it is yours to do with as you please. So it is legal, just deliberately difficult. As for running OS X, I don’t see anything that only OS X could do that would warrant anything as ridiculous as this.

“As for running OS X, I don’t see anything that only OS X could do that would warrant anything as ridiculous as this.”

Ever tried building & testing software for OS X without OS X? Mozilla has over 500 racked Mac Minis for just that purpose. http://www.wired.com/wiredenterprise/2012/05/mozillas-new-datacenter/

@a Except that os x mountain lion is not available on physical media. Apple hardware can reinstall it from a recovery partition, or in case of disk replacement, stream the installer from the internet when you hold down the recover key-combo on boot.

That’s how apple got around the “You have the disk, you can install it” legislation. They don’t hand out media anymore.

You buy the software for download, you download it. The installer doesn’t stream. The software can then be loaded onto a thumb-drive as a legal physical ‘back up.’

Sure it is.

Enterprise support sends physical Mountain Lion disks with licensing.

Honestly, it’s a really nice hack. Lovely idea and all but the reason behind it baffles me.

Just Google “mac mini hosting”

Crysis FPS?

No better than on a mac mini in the first place sadly. Crysis doesn’t scale across multiple cores too well; not many games tend to use more than 2 or 3.

Most server applications however will probably be bashing the hell out of those cores there.

Wow six677, and lol to Fred, not quite sure its enough

Can you actually run all of those with 1 OS, being as you put it 640 cores or 1280? If so it must have great performance. Otherwise I am not sure how you could do that.

Distributed Computing Environment; Plan9.

This is destined to become a Mac vs PC thread

Macs are PCs, even more so now that they use the same hardware architecture. Do you mean OSX vs. everything else?

As for me, i would have made the same questions if someone had bought 160 non-apple expensive consumer computers for this purpose.

What is all this used for? Is it a Render Farm? Distributed computing system?

Http servers for host farm

Each customer has his own server instead of a vm on a big server. Well I heard of someone doing just that with mac mini…

I’m also confused as to what purpose these could serve, my best guesses are 1) easy is more important than cheap and 2) it’s iirc against the terms of use to run OSX in a VM, and maybe they rent out machines to people. It’s certainly interesting though.

I’m pretty sure Mountain Lion and Lion have provisions in their licensing to allow for a certain number of virtualized machines per physical machine/license.

you can run osx in a vm, it just needs to have apple hardware under the hypervisor.

I’m pretty sure it is EULA legal to run OS X Server in a VM on PC hardware, just not the client OS.

You need Apple hardware to run 10.5-10.8 in a VM, server or not. There is no OS X Server anymore, it’s the same OS (and EULA) and Server.app goes on top. We’re running ESXi on Mac Minis for this specific reason.

He could have gotten better density going vertical and still doubling up front to back. 208 units with some gaps by my math. Throw a few more in horizontally if you want.

I’m also very curious to know the driver for this.

Now that I think about it, you might even be able to remove the bottom cover from each and squeeze one more in each row. That would be another 16 total so 224, not including 4U available for horizontal mounting. Add another 16 for his horizontal method and you’re up to 240 total for the rack!

Since he opened them up anyways to replace the memory, imagine the density if you tossed the nice cases and individual power supplies.

The bottom cover is integral to the cooling of the machine. The front portion of the bottom cover has a small open area for air intake, but the rest of the cover is gasketed to force the air through the case and out the back.

Maybe cooling is less efficient vertically?

Vertically a Mac mini uses 5U worth of rack space. Considering the switches and the number of outlets/PDUs required, a 48U rack comfortably holds 140 Mac minis. I’m currently building our fourth of such racks – http://www.macminivault.com/media/

Can I have his job!?!? lol

When he opens the door then what happens with the airflow?

I know that Oracle Virtualbox can run OSX with minor tweaks, and while it’s AGAINST the OSX EULA, it’s not downright illegal.

So it’s in some sort of grey area of legal limbo..

Hence why Hackintosh and the communities based around it are still well going.

Next step: fill a rack of iPhones so as to have the long awaited ARM-based server

Or use any of the equally powerful and miles cheaper ARM dev boards available

Allwinner A10 for the, ah, win.

You’re missing the cool factor there though. I’m not an Apple fan, but a rack of phones sounds pretty sweet.

Who does he sell these services to? Pay 10x more for hardware and your webserver can run on a genuine Apple, come make Jobs proud!

Thanks for the great write up Hack A Day. I would like to answer some of the questions posted. @Geebles these machines all run SSD’s and I ordered them with AppleCare, so I hope to never have to change a drive ;-)

As for the reason I built this.. Well, I guess I just like a challenge ;-), but seriously, the company I work for has a need to have large numbers of machines to build and test the software we make.

There were plenty of discussions of Virtual environments and other “Bare Motherboard”/Google Datacenter-type solutions, but the fact is, the Apple EULA requires that Mac OS X run on Apple Hardware, since we are a software company we adhere to these rules without exception. These Mac Machines all run OS X in a NetBooted environment. We require Mac OS X because the products we make support Windows, Linux and Mac so we have data centers with thousands of machines configured with all 3 OS’s running constant build and test operations 24 hours a day 365 days a year.

As for device failure, we treat these machines like pixels in a very large display, if a few fail, it’s ok, the management software disables them until we can switch them out. This approach allows us to continue our operations regardless of machine failures.
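The "pixels in a display" approach can be sketched roughly as follows. This is not the management software actually used here, which is never named in the thread; `NodePool`, the `mini-NNN` node names, and the round-robin assignment are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class NodePool:
    """Hypothetical pool: failed nodes are disabled, work goes to the rest."""
    healthy: set = field(default_factory=set)
    disabled: set = field(default_factory=set)

    def report_failure(self, node):
        # Take the node out of rotation until the hardware is swapped.
        if node in self.healthy:
            self.healthy.remove(node)
            self.disabled.add(node)

    def replace(self, node):
        # A spare has been slotted in under the same name; re-enable it.
        self.disabled.discard(node)
        self.healthy.add(node)

    def assign(self, jobs):
        # Round-robin the jobs over whatever is currently healthy.
        nodes = sorted(self.healthy)
        if not nodes:
            raise RuntimeError("no healthy nodes available")
        return {job: nodes[i % len(nodes)] for i, job in enumerate(jobs)}


pool = NodePool(healthy={f"mini-{n:03d}" for n in range(1, 161)})
pool.report_failure("mini-042")
plan = pool.assign([f"build-{n}" for n in range(200)])
print(len(pool.healthy), "healthy,", len(pool.disabled), "disabled")
```

The point of the design is that a dead machine only shrinks the pool; nothing waits on the repair, and `replace` puts the slot back into rotation whenever the swap happens.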

@bitbass I tried the vertical approach, but manufacturing the required plenum to keep the air clean to the rear machines cost too much for this project, but it’s not off the table for the next rack

@Kris Lee When I open the door I can literally watch the machine temps go up, but I can keep it open for 15-20 minutes before the core temps reach 180F

@Adam Ahhh.. Nope, you can’t have my job ;-)

As a small armchair critique — looks like you’ve got the fans mounted on a screen door that’s otherwise open, so the air that they’re blowing can turn around and go right back out the screen beside them. You’d probably get much better airflow through the system if you blocked off all of the door except for the fans, so the air had to actually go through the rack to escape out the other side.

That is a great suggestion.. I have been trying to source some thin plastic sheeting, but I can’t find a sheet large enough, but I’m still looking

I know it’ll look bad, but why plastic sheeting ? use ducktape ;))) silver :)P will match mac’s :))) (joke). besides that looks great. (im not familiar with this hardware, but from what I’ve seen in comments, each individual Mac has a power supply on board?)

I’d second using the duck tape, at least to try it first. I suspect it’ll make little difference. There used to be a guy in our office doing CFD on generator set cooling for his PhD (think diesel engine with car rad/fan attached to the front of it). If I recall correctly he was trying to figure out a recirculation problem. Basically the air was happy to recirc through the fan; it’s more to do with the fan blade shape than anything else. They gave up when they couldn’t get the CFD to settle to a steady state and decided testing the fan mounting angle might be more effective. It made no difference either.

you just need to create a vortex behind the fan

Plastic is easy. You buy it at your local hardware store (clear plexiglass for making/fixing windows), or your local sign shop or plastics distributor. Yellow Pages, FTW: Let your fingers do the walking, and if the local shop you find can’t help you, they’ll gladly send you somewhere else that can.

Or, if you want something more like a basic distributor that likes dealing with small customers, try US Plastics in Lima, Ohio (there’s others probably far closer, but this one is not far from here and I’m familiar with them).

Indeed, some of these local places have tooling to make a quick job of cutting things to shape and making holes for fans. Just sketch it up and have them do it; it’s ridiculously cheap, in my experience*.

If metal is preferred, any HVAC shop or tin smith can do the same thing out of galvanized steel darn near for free** if it’s easy.

(*Last time I needed special plastic widgets, I needed fourteen 1/8″ thick polycarbonate rectangles of about 2.5″x3″. Found the polycarbonate in the scrap bin at the sign shop and had them cut it; $8 and ten minutes later I was on my way…)

(**Last time I needed specially-cut flat sheet metal (I needed some chunks to cover holes in wood floors), I walked into a local HVAC shop and asked. The guy wrote down what I wanted, and asked me to wait. A few minutes later he shows back up at the counter with precisely the items I’d requested, freshly and cleanly cut on their shear from their stock, and refused to take money for them. I just said “thank you,” took my sheet metal, and left.)

(***I expect a cabinet-sized piece of whatever to cost more than $0, especially with holes cut in it, but I think you’d be surprised at just how inexpensive some of this stuff can be. These folks are used to making whole systems; asking for mere parts isn’t even normally on their radar, though they’ll gleefully oblige.)

Use some plastic wrap (Yes the same you use for food) This way you can test to see if blocking it off really helps with temps. If so, then go for something permanent.

Just my 2 cents.

It’s a very nice build, but from a cost/benefit/management/maintenance perspective it doesn’t make much sense to me. Mac Mini is pretty pricey for something that’s not really enterprise ready from a redundancy or security standpoint… not to mention not being rack mountable. I’m sure he had a reason for going this route, though, and made a real clean job of it.

there is not such thing as enterprise grade macs anymore, but apple’s commercial grade is well above what most people consider typical consumer grade. The mini’s provide the highest bang for your buck, if you must buy mac. And, I think this setup clearly has redundancy covered, and i don’t know what the model has to do with security.

i had the same problem when the xserve disappeared but went a different route. instead stuffed the rack full of mac pros running vmware vsphere5. each had two 6-core xeons and 64GB of memory, and they were connected to FC storage. used the fat racks and got three across and four high, so 12 per rack.

Isn’t that less cores and memory than this???

6cores X 2CPUs X 12systems = 144cores vs 640cores here

64gb X 12systems = 768gb vs 2560gb here

Seems like this guy wins!
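For anyone who wants to check that arithmetic, here is a small Python sketch. The Mac Pro figures are from the parent comment; the 16 GB per Mini is inferred from the 2560 GB total and is an assumption, as are the variable names.

```python
# Mac Pro rack as described in the parent comment.
mac_pro_cores = 6 * 2 * 12     # cores per CPU x CPUs per system x systems
mac_pro_ram_gb = 64 * 12       # GB per system x systems

# Mac mini rack: 160 machines with 4 real cores each.
# 16 GB per machine is inferred from the 2560 GB total, not stated outright.
mini_cores = 4 * 160
mini_ram_gb = 16 * 160

print(f"cores: {mac_pro_cores} vs {mini_cores} "
      f"({mini_cores / mac_pro_cores:.1f}x)")
print(f"RAM:   {mac_pro_ram_gb} GB vs {mini_ram_gb} GB "
      f"({mini_ram_gb / mac_pro_ram_gb:.1f}x)")
```

Raw counts only, of course: this says nothing about per-core speed, ECC, or the Fibre Channel storage on the Mac Pro side.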

What a waste of money. I’d fire this guy.

Forgot to read the comments before posting one, eh?

I read the comments. I also know how much it costs to power a setup like this, how much it costs to build and that there’s better ways of achieving the same performance. I could see if it was one or two 1U racks with four each, this is, in my eyes, a case of overkill.

OK Larry. Ball is in your court: you build native Mac software, and you need a cluster of Macs to compile that software through an entire development lifecycle (development, integration, testing, etc.). What are your “better ways” based on the design constraints outlined?

OK. I’ll bite. You build native Mac software, and you need a cluster of Macs to compile that software through an entire development lifecycle (development, integration, testing, etc.). What are your “better ways” based on the design constraints outlined?

Larry, I would be interested in hearing some of your ideas on how to achieve the requirements I had handed to me.

1. A single datacenter footprint

2. As many machines as possible

3. Apple hardware only. (limited by licensing agreements)

4. 12kw cap on power

5. All NetBooted

It seems like the combo of 1, 2 and 3 made the Mac Mini decision for you. I’m really impressed by the custom engineering that went into the execution of this solution. Are you seeing any EMI or RFI issues with the car radiator fans? Is there a contingency plan for replacing the Xserve boot machine when it eventually fails?

I work with this guy. Read his comment above as to why this build was necessary. My hat is off to him for getting the job done and meeting business requirements in an elegant and professional manner.

Sour grapes much ?

No sour grapes at all. I love the suggestions, I just wish folks took the time to read the blog to understand the requirements before they just spout out how stupid I am for using Mac mini’s.

I’d rather take a normal, maybe slightly old server with server grade hardware instead of this 160000$ rack anytime. Why?

-hardware that lasts

-ECC memory

-RAID

-probably cheaper

-proper cooling

-big amount of shared memory

I mean, what happens if one of those gets bad memory or harddisk. First a lot of fun finding the broken node, then data loss ensured unless there’s a backup. Say I take 20 2U Opteron machines, 4×12 cores each. Cost maybe 20X15000$. I get 960Cores, alot more memory and failsafe hardware for slightly more money.
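Taking the figures in this comment at face value, the comparison works out as in the Python sketch below. Both prices are the commenter's rough guesses rather than quotes, and the variable names are illustrative.

```python
# All prices are the commenter's rough guesses, not quotes.
mini_rack_cost = 160_000
mini_rack_cores = 640

opteron_servers = 20
opteron_cores = opteron_servers * 4 * 12    # 4 sockets x 12 cores per server
opteron_cost = opteron_servers * 15_000

for name, cost, cores in [
    ("Mac mini rack", mini_rack_cost, mini_rack_cores),
    ("Opteron servers", opteron_cost, opteron_cores),
]:
    print(f"{name}: {cores} cores, ${cost:,}, ${cost / cores:.2f} per core")
```

On these numbers the Opteron option costs close to twice as much in total, so "slightly more money" is generous, and none of it addresses the Apple-hardware requirement.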

…then you would be fired for not reading the requirements laid out in simbimbo’s reply above.

What data loss? These are netbooted machines that are used only for their cores and RAM. Storage is completely separate. Also, he has stated several times that licensing requirements determine the need for Apple machines. The Mac mini is the only device that fits all the requirements placed on him by his bosses: Footprint, core/RAM count, power draw limits, OS X/Windows/Linux, redundancy…it’s all there in a very efficient manner.

I think it’s a brilliant build and a great example of coming up with the right tool for the job.

Just curious on the power usage of those things? I’ve been tempted to send a few down to the DC before, how many amps do they use?

11W at idle and 85W at full load per Mac Mini according to Apple. Full load means 4 cores at 100%, HDD spinning up, heavy GPU load, etc.

However this guy replaced the HDD with SSD, and doesn’t seem to use the GPU at all, so I would estimate ~40-50W (0.17-0.21A at 240V) per machine. That is 6.4-8.0kW (26-33A at 240V) for the full rack.
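The estimate above is easy to reproduce. The Python sketch below uses the per-machine wattages from this comment and the 12 kW cap from the requirements list earlier in the thread; the function and variable names are made up for illustration, and switches and the cooling door are not included.

```python
VOLTS = 240
MACHINES = 160
POWER_CAP_W = 12_000    # the 12 kW cap from the requirements list


def rack_load(watts_per_machine):
    """Return (amps per machine, rack watts, rack amps) at VOLTS."""
    rack_watts = watts_per_machine * MACHINES
    return watts_per_machine / VOLTS, rack_watts, rack_watts / VOLTS


cases = [
    ("idle (Apple)", 11),
    ("estimate, low", 40),
    ("estimate, high", 50),
    ("full load (Apple)", 85),
]
for label, watts in cases:
    amps_each, rack_watts, rack_amps = rack_load(watts)
    status = "within" if rack_watts <= POWER_CAP_W else "over"
    print(f"{label:>18}: {amps_each:.2f} A each, "
          f"{rack_watts / 1000:.1f} kW, {rack_amps:.1f} A total "
          f"({status} the cap)")
```

Worth noting: at Apple's 85W full-load figure, 160 machines would exceed the 12 kW cap, so the rack depends on the lower real-world draw estimated here.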

It seems like the objective of all of this is to make a nice looking server with the minimum amount of effort where initial cost is not really a concern.

‘[Steve] needed an alternative to the Xserve, since Apple stopped making it. His solution was to continue his negligent dependence on a mediocre UNIX vendor.’

Fixed that for you.

Great setup, clean job for something not made to be rackable.

I guess these models are Mac Mini Servers, which come with Thunderbolt and OS X Server.

hard to grasp the point here – lots of custom engineering but not, in the end, any exceptional result. a standard 42u rack filled with bog-standard half-U 2-socket servers would give the same core density, but with ECC and IPMI (and probably more reliable). maybe the point was to use cut-rate mac minis. I guess there are licensing advantages if you really must use MacOS for some reason.

how do you turn them on and off?

I bet you it is to manage a pool of ipads. Those fuckers will kill any server I have ever tried to manage them with. Good try though xserve ;_;

how do you turn them on and off remotely?

42° 33′ 01″N, 71° 12′ 55″W

Damn… It’s amazing that just because someone reads HaD, they think that they’re now a datacenter architect. Given the requirements that had to be met, it is a very nice, professionally executed solution. I’m not sure that I would have gone the AppleCare route though. I tend to lean towards having cold / warm spares and order hardware accordingly when tackling a large quantity project like this. When ordering in that quantity, you can make some demands of the distributor such as having them stock “replacement spares” for you (and only you) as well. Depending on how far Apple bent you over for the AppleCare on 160 machines, it might have been more fiscally responsible to just go with the “spares” route than with the AppleCare route. Not a criticism at all though since I don’t know what percentage of the invoice AppleCare represented. Simply pointing out how I tend to do things.

In answer to the individual who said that the open areas of mesh on the door should be closed off to improve airflow, you should note that those fans are pulling from the “cold row”, not pushing into the “hot row”. The volume of “turn around” air is very nominal. In addition to that, in the event of a fan failure, you want as much of the cabinet open as possible to take advantage of convection cooling. If you’re unfamiliar with the “hot row” and “cold row” concept, you shouldn’t be critiquing anyone on their datacenter cooling design.

The solution isn’t nescient; you are.

Half the comments on this thread:

Durh, I dont likes Mac minis. They costs moneys. Why you gotta use those? You should have used arduinos and spent the rest of the moneys on donuts. I’m smarter than you because I read hackaday all the time while you waste all your time getting paid to do stuff that I can read about on the internet for free.

You complain about people being slightly passive aggressive by being incredibly passive aggressive.

You REALLY accomplished something there.

Yeah, but, do you even lift?

Incredibly sexy. I drewl’d :]

These are just overpriced pcs with closed source linux . Been done better with more then 500 cpus on one Amiga computer ,and with that moron no virtual threads dont count as actual cpus. secondly, The Amiga I am describing was and is mixing and matching true 64bit cpus of alpha,ppc,sgi , PArisc,and the now Itanium of True 256bit of 64 core . This has been done in 1990 and 2003 respectively. Plus True real-time multitasking and you need both to do the other. Toaster oven or Toaster Screamer -Yes Amiga based. So whatever Try as apple people might they are so far behind the times.

Please don’t use “OSX” and “Linux” in the same sentence. In the 90’s Apple felt they needed to reinvent the wheel, so they bought Steve Jobs’ old company NeXT and got NEXTSTEP, a BSD/Mach OS upon which OSX is built … It has very little to do with GNU/Linux, at least where it counts, i.e. kernel and licensing.

(cut out the usual Apple vs. Windows vs. Linux rant)

Did anyone read the effing blog? He uses them for software testing — presumably Mac OSX software. Sure you could get most of the code covered on any *nix box, but its gonna be hard to test the whole product on anything other than a Mac.

Building a render farm cluster on a smaller scale very similar to this one. Basically 3 shelves of minis to start and then potentially expanding out. The key was their low power footprint and cost. They ended up costing so much less than dedicated blades for this type of work. Awesome to see a full top to bottom rack buildout though.

People who implemented this and defend their position as it were: “necessary”; sure, it was, and for what you had to do- it’s a really great job, but at the end of the day… this embodies why I hate Apple in the first place. So yeah, I wouldn’t say it’s dumb, or that you’re dumb. But I would say that the company is dumb, and that Apple is dumb. Why would people use a computer marketed, priced, and designed for consumers’ desktops as a server node? Why isn’t there an alternative that’s, you know, actually designed for that? With easy to access parts and no extra money spent on case flair, optimized for the task? Apple wants more cash, that’s why. So you dedicate all this effort to supporting a company that’s so obviously fleecing the shit out of your company and so many consumers- where does that get you years from now? A larger market share of people being deceived about the value of hardware? More people with the misconception that they’re more secure because their OS is obscure? At what point does this flip and go sour as people exploit the now not so minor number of people using devices that developers regularly admit even they don’t like dealing with? Is this what healthy competition should look like? Markups and limitations?

“Why would people use a computer marketed, priced, and designed for consumers’ desktops as a server node?”

Because he’s not using it as a server node, he’s using it for testing OSX products.

Read the thread before putting your foot in your mouth.

Is there another sff computer with integrated Thunderbolt interconnects? 2 x 10g pipes with the ability to plug into fiber fabrics or 10G Ethernet sounds kinda cool.

Think distributed computing version of RAID.

What is the geekbench score of the complete rack?

just a comment on mac mini racks… this company has been making them for 2 years now, and is a source for apple’s own server rack systems.. http://www.mk1manufacturing.com/www.mk1manufacturing.com/Computers.html

I know the owner personally, he is very efficient in his design for heat removal and wire handling.

Do you have a source you can share for the power cables you used for each sled?

A millennium ago I bought Cobalt RaQ2 servers by the 42U rack including a dedicated NetApp storage in the base.

I am a major Apple fanboy, and buy the newest toys for close friends/family every Christmas, and I still prototype new web apps on a 2010 era mac pro.

I continue to put my money into Apple on the consumer side, but I admit to being clueless about why one should use the mac mini for deployment. Any server that doesn’t recoup the hardware cost in a month is poorly budgeted, so the profitability is based upon management software. Where is the Apple advantage there?

Has anyone found a vendor who can do a one-to-four Y cable for the Minis for this purpose?