One of the standout talks at the 33rd Chaos Communication Congress concerned pseudo-random-number generators (PRNGs). [Vladimir Klebanov] (right) and [Felix Dörre] (left) provided a framework for making sure that PRNGs are doing what they should. Along the way, they discovered a flaw in Libgcrypt/GnuPG, which they got fixed. Woot.

Cryptographically secure random numbers actually matter, a lot. If you’re old enough to remember the Debian OpenSSL debacle of 2008, essentially every Internet service was backdoorable due to bad random numbers. So they matter. [Vladimir] makes the case that writing good random number generators is very, very hard. Consequently, it’s very important that their output be tested very, very well.

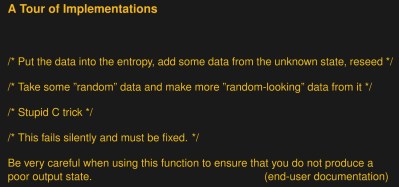

So how can we test them? [Vladimir] warns against our first instinct, running a statistical test suite like DIEHARD. He points out (correctly) that running any algorithm through a good enough hash function will pass statistical tests, but that doesn’t mean it’s good for cryptography.

Instead, there are two ways to test a PRNG. First, if you’re using a standard function and have a set of reference seeds and outputs, check them. At least you’ll verify that your code is doing what it should. Secondly, and more generally, you want to make sure that the algorithm isn’t losing entropy — PRNGs don’t create randomness, so the best they can do is not lose it.
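As a minimal sketch of the first kind of test, here is a known-answer check in Python. The generator is not one from the talk: it is the Park-Miller "minimal standard" generator, chosen only because it has a widely published check value (seeded with 1, the 10,000th output is 1043618065). It is not cryptographically secure, and the function names are made up for this example; the point is only the shape of the test.

```python
# Known-answer test for the Park-Miller "minimal standard" generator.
# Illustrative only: this generator is NOT cryptographically secure.

MODULUS = 2**31 - 1   # 2147483647
MULTIPLIER = 16807    # 7**5


def minstd_next(state: int) -> int:
    """Advance the generator by one step."""
    return (MULTIPLIER * state) % MODULUS


def nth_output(seed: int, n: int) -> int:
    """Return the n-th output after seeding with `seed`."""
    state = seed
    for _ in range(n):
        state = minstd_next(state)
    return state


def passes_known_answer_test() -> bool:
    """Compare against the published check value (seed 1, 10,000 steps)."""
    return nth_output(1, 10_000) == 1043618065
```

A passing check only says the code matches the reference implementation. It says nothing about whether the algorithm itself is any good.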

Here are some things you can check. If a part of the seed doesn’t influence the output, or if two seeds produce the same output, or equivalently if there are fewer possible outputs than seeds, the algorithm is losing entropy. If you can run through an arbitrarily large number of seeds and outputs, you could possibly brute-force this test (hopefully before the universe dies its inevitable heat death).
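To make the brute-force idea concrete, here is a toy sketch in Python with a deliberately tiny 12-bit seed space. Both generators are hypothetical stand-ins invented for this example (neither is one of the implementations from the talk), and SHA-256 is used only as a convenient mixing function. The "leaky" one throws away four seed bits before hashing, which is exactly the kind of bug that statistical tests on the output will not notice.

```python
import hashlib
import math

SEED_BITS = 12  # tiny on purpose, so trying every seed is instant


def good_prng(seed: int) -> bytes:
    """Hypothetical generator in which every seed bit reaches the output."""
    return hashlib.sha256(seed.to_bytes(2, "big")).digest()


def leaky_prng(seed: int) -> bytes:
    """Hypothetical buggy generator: the top 4 seed bits are masked away."""
    return hashlib.sha256((seed & 0xFF).to_bytes(2, "big")).digest()


def distinct_outputs(prng) -> int:
    """Run every possible seed and count how many different outputs appear."""
    return len({prng(seed) for seed in range(2**SEED_BITS)})


def max_output_entropy_bits(prng) -> float:
    """Upper bound on output entropy, in bits, assuming uniform seeds."""
    return math.log2(distinct_outputs(prng))
```

Here `distinct_outputs(good_prng)` comes out at 4096, one output per seed, while `distinct_outputs(leaky_prng)` is only 256, so at most 8 of the 12 seed bits survive. With a realistic seed size this loop would never finish, which is why the analytic approach matters.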

Or you could do it analytically. They checked six PRNG implementations against these requirements using the CBMC bounded model checker and the MiniSat SAT solver. Doing so caught all of the problems that they were expecting, and one that they weren’t. Using their “entroposcope”, they trace the loss of entropy through the flow of the program, find the bug, and save the day.

This isn’t a beginner’s talk on cryptographic PRNGs, but it’s a darn good one. PRNGs that look random, and pass statistical tests, can still lose entropy. As the pair pointed out in the question-and-answer session, the only way to be sure within a reasonable amount of time is to dig through the code and verify that it doesn’t. The implication of this is that the only secure PRNG is an open-source PRNG. But you knew that already, right?

I always wanted to build a white noise generator and feed that into an analog to digital converter.

I believe that is how E.T. actually phoned home…

As opposed to pink noise?

Shouldn’t Trump be generating orange noise?

Shameless self promotion here but the product that I’m just about to release uses a white noise generator to create random MIDI notes to compose music.

Think of it as a Triadex Muse meets an Enigma machine except there’s no repeat. Ever.

http://www.synthimuse.com

Is it pleasant to a human listener? Most of the current generation of “CPU generated music” is lacking in something similar to how sex with a silicone lovedoll would feel like compared to intimacy with a real human. It just falls short of having that special element. Plus, the whole lack of movement or voice or intimacy or sharing desires or potential for pregnancy in opposite sex encounters, minus the expense in a situation where that would be for hire though, etc. In other words, it is not *bad* but it isn’t exactly great or quite right either.

That said, there are some artificial music generating software packages that are actually getting pretty decent. Like Jukedeck or Computoser. DeepBeat is also sort of in that area too.

@[Internet]

It doesn’t sound anything like crappy “computer” music!

Go check it out –

https://synthimuse.wordpress.com/samples/

I like “Drumming”

@[Germanium SynthiMuse]

Love your work man!

I went looking for a schematic to see how you use so many pots with a uC.

I wanted to play with sound in CPLD/FPGA to add the ability to give the sound source a location, vector, arc, velocity and phase. Like an extension to MIDI that has these extra features. Something that treats sound like it is in nature like when a car goes past at high speed and has frequency, amplitude ,phase and tonal changes.

I am hungry for more info on your project!

Thanks for the kind words. I can’t take credit for ‘Drumming’ that was put together by my colleague who also jazzed up the wordpress site.

The word from the few beta testers is that the SynthiMuse doesn’t sound like a computer driven composer. Mainly because it has no algorithms as such. It just takes the settings and pumps out MIDI notes.

If you hear anything you like, you snapshot it and save it into one of its 4 loop memories.

It’s interesting that when you take a random phrase and repeat it, it starts to take on a musical meaning.

The SynthiMuse can take triggers from the built in microphone, input from external midi as well as the built in LFO & noise generator.

I feel I should limit my self-promotion here as the SynthiMuse is a self-financed commercial product that, while created by a hacker, is an attempt to just about try and break even on the expense of development.

I’m glad and grateful for any comments, good or bad.

Hi Rob

I can’t publish the schematics but I’m happy to tell you the most efficient way I know on how to scan lots of pots.

I use analog muxes with their select and enable lines driven by the uC. Nothing special here.

The main trick is that I have a timer interrupt running that:

1. Reads the currently selected pot value in to an array.

2. Selects the next pot input

3. Starts the ADC sampling

4. Exit the interrupt

The key thing is that I don’t *wait* for the ADC to finish. I just go away and do other stuff until the timer interrupts again and I know that the ADC will have a new value to be transferred.

So, it’s not original by any means but it’s a technique that many people miss.
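For anyone who wants to see the control flow, here is a rough simulation of those four steps in Python. It is not the SynthiMuse firmware: `FakeAdc` and `PotScanner` are hypothetical names invented for this sketch, and on a real microcontroller the handler would be a C interrupt routine talking to the ADC and mux registers.

```python
class FakeAdc:
    """Hypothetical stand-in for an ADC sitting behind an analog mux."""

    def __init__(self, pot_positions):
        self.pot_positions = pot_positions  # pretend pot readings, 0..1023
        self.channel = 0
        self.result = 0

    def select(self, channel: int) -> None:
        """Drive the mux select lines."""
        self.channel = channel

    def start_conversion(self) -> None:
        # Real hardware returns at once and converts in the background.
        # The simulation just latches the value for the next tick to read.
        self.result = self.pot_positions[self.channel]


class PotScanner:
    def __init__(self, adc: FakeAdc, num_pots: int):
        self.adc = adc
        self.values = [0] * num_pots
        self.current = 0
        adc.select(0)
        adc.start_conversion()  # prime the first conversion

    def on_timer_interrupt(self) -> None:
        # 1. Read the conversion that was started on the previous tick.
        self.values[self.current] = self.adc.result
        # 2. Select the next pot input.
        self.current = (self.current + 1) % len(self.values)
        self.adc.select(self.current)
        # 3. Start the ADC sampling, then 4. return without waiting.
        self.adc.start_conversion()
```

After as many timer ticks as there are pots, `values` holds one fresh reading per pot, and the handler never blocks on the converter. This only works if the timer period is longer than the ADC conversion time.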

http://www.synthimuse.com

Lavarand was a hardware random number generator designed by Silicon Graphics that worked by taking pictures of the floating material in lava lamps, extracting random data from the pictures, and using the result to seed a pseudorandom number generator. Although the secondary part of the random number generation uses a pseudorandom number generator, the full process essentially qualifies as a “true” random number generator due to the random seed that is used. However, its applicability is limited by its low bandwidth.

the scary thing about the “true random” rabbit hole is that with the right variables, even that lava lamp’s state can be predicted. is it hard? sure. but TRUE random is by definition something that can’t exist within a “system”. sort of like the whole quantum thought experiment things.. they make the brain hurt

The rabbit hole you refer to is the issue of determinism in all its various manifestations. Adequate indeterminism is sufficient and can be had from any practical random number generator that can be linked to an irreducible property of quantum indeterminism.

exactly, we must march towards a greater sufficient. Like the “perfect sphere” or the “exact weight”. my point was just that “true random” is (with current technology) improbable, so the lava lamp example would be mislabeled. Otherwise we would have to assume that it will always be impossible to accurately model the fluid/thermo interactions happening inside that weird glass bubble.

http://demonstrations.wolfram.com/LavaLamp/

@DV82XL: “linked to an irreducible property of quantum indeterminism” is the right way to go. And for that: zener diode noise, transistor avalanche breakdown noise, Johnson noise in resistors if you don’t care about whiteness, etc. It ain’t hard.

I’ve made a few based on transistor breakdown, and for bandwidths in the 100 kHz range — so maybe 10K bits/sec — it’s easy. Use those as a seed for a good PRNG, and you’ve got a good setup. The hard part is if you need to certify that the device is tamper-proof and always working as it should. But that’s the difference between a $2 hobbyist lashup and a $1000 professional unit.

Anyway, any PRNG needs good seed data. Quantum uncertainty is a great source of that seed data. This article is about not messing up the next step.

If you have 10k random bits, you have enough randomness for the rest of your life. The device doesn’t have to be tamper proof either, because nobody knows you had one running for one second at some point in time. The only thing is that you need to keep your 10k random bits securely stored.

Oh yeah. And: http://hackaday.com/2014/12/19/nist-randomness-beacon/

These cats are aiming at a Bell’s paradox quantum RNG, which takes it to the next level — a quantum RNG that’s also provably un-influenceable because of a) distance and b) the speed of light. Dig into that if you want your brain to hurt!

like say, a nice hot cup of tea…

Infinite improbability drives seem to be less fussy about where the initial seeds come from than most pseudorandom number generators.

>TRUE random is by definition something that can’t exist within a “system”

Sure, there’s bounded randomness, such that a fish cannot become a rock but simply exist as a fish, but that doesn’t mean the selection of states within the range of possibility isn’t truly random.

The very opposite is true. If you argue that it isn’t random, then you have to point out what causes it, and there isn’t anything; no “hidden variable” to account for the progression of states from one to another that would make any physical or theoretical sense.

I’m no math expert, but isn’t the concept behind “chaos theory” that some things simply cannot be predicted, such as the vibrations of atoms and as a result the Brownian motion in fluids? Then there’s the whole Heisenberg Uncertainty Principle bit which, to my crude understanding, says that you can’t actually measure every particle’s position and momentum, and without that information you can’t accurately predict their movements.

Adequate Determinism is the kind of determinism we have in the world. It is a statistical determinism, where the statistics are near to certainty for large macroscopic objects. Adequate Determinism also includes indeterminism, an irreducible property of the microscopic quantum world. This should be distinguished from pre-determinism, the idea that the entire past (as well as the future) was determined at the origin of the universe. Pre-determinism implies that all the information in the universe today was implicit in the earliest moments of the universe and that this information is conserved. The Heisenberg Uncertainty Principle establishes that this cannot be the case, but it does not imply that every event is not the inevitable and necessary consequence of an antecedent state, only that they cannot be known perfectly.

Then there’s also Superdeterminism, which is the idea that in reality there are no events, rather the world is playing back as if projected from a spool of film.

Such that in any point of space and time, reality is just what information happens to be there, and if you take a step to the left the world is different entirely, therefore you can’t say anything at all happens – the next moment you’ll contain different information that doesn’t need to have anything to do with what came before.

The most realistic way to get the state of the lava lamp is break into the room where it is stored. But then it will be easier just to grab the random number that is generated.

In particular with lavarand, they also mixed in radio static from a few FM radios.

I know what you’re thinking…

The links to CBMC and Minisat are broken (relative paths…)

Thanks, should be fixed now.

Any improvement in an open-source OS&Userland security is always good.

Though with some standardization and defined long-term road-maps for the mainstream GNU-Linux distros.

Just as an opinionated thought of mine:

Since the sysvinit is creeping into everything, then it should be developed as though it were a long-term integral part of the GNU boot and service subsystem.

Its purpose should be to run 3rd party services (i.e. made by another Open Source project) communicating over a common API-bus as modules/applications and should go no further into the user experience than to get a terminal or blank graphic display (i.e. configured by an init flag).

Just my opinion, Please take it with a pinch of salt (or pepper if you wish)

it would be interesting to see this approach applied to the 8266 hardware random number generator, most of the people so far reviewing it seem to think it is good – and it’s the cheapest easiest to use hardware random number generator out there (that I know of..).

It uses wifi for the randomness source, or so they tell us as it isn’t open source..

So you just have to “trust” something you can’t verify for security, sure seems legit.

I agree with your point, ie though lots of people are testing the 8266 random numbers, one day the chip might wake up and do something different and utterly not random….

Though that – as discussed often on this site – is the problem with anything running on a chip you didn’t make yourself… Or passing the data through anything you didn’t do yourself…

Taking that approach is fine if you only need a small number of random numbers – I’m in the middle of writing a paper for a client and I am telling them to roll dice for something they want demonstrably random… – however if you need millions it’s almost impossible to do that and use them on trusted hardware…

Wouldn’t it be quite trivial in a net connected environment to create seeds based on things like latency and content from pages that have frequently changing content among many other sources of conditions taking place external to the processor?

One of the baseline requirements of any cryptographic RNG is that no attacker should ever be able to influence it in any way. If they can, that makes breaking your crypto massively easier for them. That’s what this whole article is about – they don’t even need to _influence_ your RNG, just knowing that it’s imperfect in a specific way gives them too much of an edge.. And I think it’s obvious how somebody messing around with your internet connection would be in a position to completely screw you over…

General rule: don’t do anything with time unless you have enough resolution and there’s a lot of unpredictable variation in the timing.

If I ping out right now, I get numbers in the 30-45 ms range, so you can brute-force these 15 likely values pretty fast. If I measure the pings in microseconds, maybe the last three digits are random? So that’s 8-10 bits.

You _can_ keep subdividing, but if you want 128 or 256 random bits, you’re in timescales that you can’t measure, and you have to aggregate up a lot of observations. This may expose some structure in the “randomness” that you’d hoped to avoid — averaging is a great way to improve signal/noise ratio, exactly what we don’t want. Computers can easily make actions repeatably on a microsecond timescale.
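A quick back-of-the-envelope version of the ping numbers above, in Python. It assumes the best case, where every value is equally likely and each ping is independent of the last. Real timings are neither, so treat these as upper bounds. The function names are made up for this sketch.

```python
import math


def bits_per_sample(equally_likely_values: int) -> float:
    """Entropy of one sample, in bits, if all values are equally likely."""
    return math.log2(equally_likely_values)


def samples_needed(target_bits: int, equally_likely_values: int) -> int:
    """Samples required to collect target_bits, in the best case."""
    return math.ceil(target_bits / bits_per_sample(equally_likely_values))
```

Fifteen likely millisecond values give `bits_per_sample(15)`, just under 4 bits per ping. Three random decimal digits from a microsecond reading give `bits_per_sample(1000)`, just under 10 bits. Under these generous assumptions, `samples_needed(128, 1000)` is 13 pings and `samples_needed(256, 1000)` is 26.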

So… meh. Multiple (uncorrelated) sources whenever possible, and treat timings derived from computer systems with hard skepticism. (IMO, not expert. Know something about probability.)

$10 hardware random number generator.

https://github.com/pwarren/rtl-entropy

yes, but as I commented above, a < $3 nodemcu board will do the same with the wifi freq..

How often do they need new numbers? I use a Geiger counter and it takes a pretty long interval to have reasonable number of states.

I recall reading something about older Intel CPUs having a circuit deliberately left floating and dumping its input into a register. Anything from EMI to cosmic rays could affect a low-voltage gate with a floating input, so it was theoretically as random as a Geiger counter.

I would have to see the analysis ;-)

Radioactive decay is not affected by temperature, gravity, light, electric or magnetic fields or any shielding. A Geiger Counter with a little bit of radium will be random. Rather than collect counts, which will have a narrow distribution over long intervals, count the time between events.
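One common way to turn time-between-events into bits is to compare two back-to-back intervals and emit 0 or 1 depending on which is longer. The Python sketch below assumes that scheme. It is one possible approach, not necessarily what anyone in this thread built. The decay intervals are simulated with Python's own PRNG purely so the code runs without a Geiger counter, which means the simulated output is of course not truly random.

```python
import random


def bits_from_intervals(intervals):
    """Turn inter-event times into bits by comparing non-overlapping pairs.

    t1 < t2 gives 0, t1 > t2 gives 1, and exact ties are thrown away.
    """
    bits = []
    for t1, t2 in zip(intervals[0::2], intervals[1::2]):
        if t1 != t2:
            bits.append(0 if t1 < t2 else 1)
    return bits


def simulated_decay_intervals(n: int, rate_hz: float = 50.0, rng=None):
    """Fake Geiger data: exponentially distributed gaps between clicks.

    The 50 Hz default count rate is an arbitrary assumption for the demo.
    """
    rng = rng or random.Random()
    return [rng.expovariate(rate_hz) for _ in range(n)]
```

Each bit costs two intervals, so a source clicking 50 times a second yields at most about 25 bits per second. That is the bandwidth problem raised above.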

The video is too big for their dialup modem. But it is also on youtube:

https://www.youtube.com/watch?v=c_CalG1rL0I

No need to point to a bootlegged copy of the video. All the talks are available in the CCC youtube channel – https://youtu.be/C6JKQbOvkgI

This video is no longer available because the YouTube account associated with this video has been terminated.

Sorry about that.

:(

Take a mediocre random number sequence, and encrypt the stream with AES-256 to get a good one.

Congrats, you just came up with the crypto equivalent of over-unity…

Damn, Now the over-unity nutters (unless one day they succeed) will be passing that stream out into coils and stuff claiming to be making over-unity electrickerzity.

“Take a mediocre random number sequence, and encrypt the stream with AES-256 to get a good one.”

Here’s the thought experiment. My mediocre random number sequence is 7, every time. No bits of entropy going in to the AES-256. How many bits come out?

That’s what the “over-unity” comments are getting on about, and that’s the real trojan horse in this idea. Say you put 10 bits of entropy in. You get something that looks random, passes (maybe) some statistical sniff tests, but is brute-forceable in a millisecond. This is what happened to OpenSSL.
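Here is a small Python sketch of that 10-bit case. Python's standard library has no AES, so SHA-256 in counter mode stands in for AES-256 here. That is a substitution made for this example, and the function names are invented too. The argument is the same either way: the output looks like noise, but there are only as many possible streams as there are seeds.

```python
import hashlib


def stream(seed: int, nbytes: int = 32) -> bytes:
    """Expand a seed into output bytes (SHA-256 standing in for AES-256)."""
    out = b""
    counter = 0
    while len(out) < nbytes:
        block = seed.to_bytes(4, "big") + counter.to_bytes(4, "big")
        out += hashlib.sha256(block).digest()
        counter += 1
    return out[:nbytes]


def brute_force(observed: bytes, seed_bits: int):
    """Recover the seed by trying every possibility in the seed space."""
    for guess in range(2**seed_bits):
        if stream(guess, len(observed)) == observed:
            return guess
    return None
```

With a 10-bit seed, `brute_force(stream(679), 10)` walks at most 1024 candidates and returns 679. Making the expansion function stronger does not add a single candidate to that list.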

Obviously you shouldn’t put a stream of 7’s in. But you could encrypt a sequence of 0, 1, 2, 3, 4… etc..

Get 256 bits of entropy and use it as your key. The only way to guess the next random number is to break AES.

I stared at the picture at the top for quite a while trying to figure out what “em rof skrow” is supposed to mean. Sometimes it’s really annoying if your brain automatically mirrors text because the letters are mirrored…

I had a slightly different problem not too long ago. I needed random numbers for my Crazy Clock, but since the controller was running at 32 kHz, the random function in AVR libc was way too slow. I didn’t need something of cryptographic quality, but I didn’t want the clock’s behavior to fall into patterns. I wound up finding a good replacement in a forum post somewhere, but what I’m writing here about was the method I used to test it: I had it generate a million bits of output and turned that into a 1024×1024 PBM file and took a look at the result. If you do that with anything that has a short repeating pattern, it becomes pretty easy to see on the screen. In this case, it looked just like a frame of old analog TV snow.

I don’t claim for a moment that this is rigorous enough for cryptographers, but it’s a good “sniff test” if you’re writing an embedded game or something like that that has to be fast, but you don’t want it to be easy to find patterns.
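For anyone who wants to try the same sniff test, here is a sketch in Python that packs a stream of bits into a binary (P4) PBM image. The function names and the toy "bad" generator are made up for this example, and the original test presumably ran against the clock's own C code rather than anything like this.

```python
def pbm_bytes(next_bit, width: int, height: int) -> bytes:
    """Render width*height bits from next_bit() as a binary (P4) PBM image."""
    header = f"P4\n{width} {height}\n".encode("ascii")
    body = bytearray()
    for _ in range(height):
        byte, nbits = 0, 0
        for _ in range(width):
            byte = (byte << 1) | (next_bit() & 1)  # most significant bit first
            nbits += 1
            if nbits == 8:
                body.append(byte)
                byte, nbits = 0, 0
        if nbits:  # each row is padded out to a whole byte
            body.append(byte << (8 - nbits))
    return header + bytes(body)


def make_lcg_low_bit(seed: int = 1):
    """Toy bad generator: the low bit of a power-of-two-modulus LCG.

    With an odd multiplier and odd increment this bit just alternates.
    """
    state = seed

    def next_bit() -> int:
        nonlocal state
        state = (1103515245 * state + 12345) % 2**31
        return state & 1

    return next_bit
```

Writing `pbm_bytes(make_lcg_low_bit(), 1024, 1024)` to a file opened in binary mode gives an image of regular stripes instead of snow. Swap in the generator you actually care about to see how it compares.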

I’ve seen this test before. Here’s PHP’s built-in PRNG:

https://i.stack.imgur.com/uzSyZ.png

Taken from here: http://stackoverflow.com/questions/8838413/php-rand-is-it-possible-to-guess-the-next-number-in-a-sequence-and-generatin

The same method was used to evaluate JavaScript’s PRNG here on Hackaday: http://hackaday.com/2015/12/28/v8-javascript-fixes-horrible-random-number-generator/