Last week we covered the past and current state of artificial intelligence — what modern AI looks like, the differences between weak and strong AI, AGI, and some of the philosophical ideas about what constitutes consciousness. Weak AI is already all around us, in the form of software dedicated to performing specific tasks intelligently. Strong AI is the ultimate goal, and a true strong AI would resemble what most of us have grown familiar with through popular fiction.

Artificial General Intelligence (AGI) is a modern goal many AI researchers are currently devoting their careers to in an effort to bridge that gap. While AGI wouldn’t necessarily possess any kind of consciousness, it would be able to handle any data-related task put before it. Of course, as humans, it’s in our nature to try to forecast the future, and that’s what we’ll be talking about in this article. What are some of our best guesses about what we can expect from AI in the future (near and far)? What possible ethical and practical concerns are there if a conscious AI were to be created? In this speculative future, should an AI have rights, or should it be feared?

The Future of AI

The optimism among AI researchers about the future has changed over the years, and is strongly debated even among contemporary experts. Trevor Sands (introduced in the previous article as an AI researcher for Lockheed Martin, who stresses that his statements reflect his own opinions, and not necessarily those of his employer) has a guarded opinion. He puts it thusly:

Ever since AGI has existed as a concept, researchers (and optimists alike) have maintained that it’s ‘just around the corner’, a few decades away. Personally, I believe we will see AGI emerge within the next half-century, as hardware has caught up with theory, and more enterprises are seeing the potential in advances in AI. AGI is the natural conclusion of ongoing efforts in researching AI.

Even sentient AI might be possible in that timeframe, as Albert (another AI researcher who asked us to use a pseudonym for this article) says:

I hope to see it in my lifetime. I at least expect to see machine intelligence enough that people will strongly argue about whether or not they are ‘sentient’. What this actually means is a much harder question. If sentience means ‘self-aware’ then it doesn’t actually seem that hard to imagine an intelligent machine that could have a model of itself.

Both Sands and Albert believe that the current research into neural networks and deep learning is the right path, and will likely lead to the development of AGI in the not-too-distant future. In the past, research has either been focused on ambitious strong AI, or on weak AI that is limited in scope. The middle ground of AGI, and specifically the work being done with neural networks, seems to be fruitful so far, and is likely to lead to even more advancement in the coming years. Large companies like Google certainly think this is the case.

Ramifications and Ethics of Strong AI

Whenever AI is discussed, two major issues always come up: how will it affect humanity, and how should we treat it? Works of fiction are always a good indicator of the thoughts and feelings the general population has, and examples of these questions abound in science fiction. Will a sufficiently advanced AI try to eliminate humanity, à la Skynet? Or, will AI need to be afforded rights and protection to avoid atrocities like those envisioned in A.I. Artificial Intelligence?

In both of these scenarios, a common theme is that a technological singularity arises from the creation of true artificial intelligence. A technological singularity is defined as a period of exponential advancement happening in a very short amount of time. The idea is that an AI would be capable of either improving itself, or producing more advanced AIs. Because this would happen quickly, dramatic advancements could happen essentially overnight, resulting in an AI far more advanced than what was originally created by humanity. This might mean we’d end up with a super intelligent malevolent AI, or an AI which was conscious and deserving of rights.

Malevolent AI

What if this hypothetical super intelligent AI decided that it didn’t like humanity? Or, was simply indifferent to us? Should we fear this possibility, and take precautions to prevent it? Or, are these fears simply the result of unfounded paranoia?

Sands hypothesizes “AGI will revolutionize humanity, its application determines if this is going to be a positive or negative impact; this is much in the same way that ‘splitting the atom’ is seen as a double-edged sword.” Of course, this is only in regards to AGI — not strong AI. What about the possibility of a sentient, conscious, strong AI?

It’s more likely that the potential danger won’t come from a malevolent AI, but rather from an indifferent one. Albert poses the question of an AI given a seemingly simple task: “The story goes that you are the owner of a paper clip factory so you ask the AGI to maximize the production of paper clips. The AGI then uses its superior intelligence to work out a way to turn the entire planet into paper clips!”

While it is an amusing thought experiment, Albert dismisses this idea: “You’re telling me that this AGI, can understand human language, is super intelligent but doesn’t quite get the subtleties of the request? Or that it wouldn’t be capable of asking for a clarification or guessing that turning all the humans into paperclips is a bad idea?”

Basically, if the AI were intelligent enough to understand and execute a scenario that were harmful to humans, it should also be smart enough to know not to do it. Asimov’s Three Laws of Robotics could also play a role here, though it’s questionable whether those could be implemented in a way that the AI wasn’t capable of changing them. But, what about the welfare of the AI itself?

AI Rights

On the opposite side of the argument is whether artificial intelligence is deserving of protection and rights. If a sentient and conscious AI were created, should we be allowed to simply turn it off? How should such an entity be treated? Animal rights are a controversial issue even now, and so far there is no agreement about whether any animals possess consciousness (or even sentience).

It follows that this same debate would also apply to artificially intelligent beings. Is it slavery to force the AI to work day and night for humanity’s benefit? Should we pay it for its services? What would an AI even do with that payment?

It’s unlikely we’ll have answers to these questions anytime soon, especially not answers that will satisfy everyone. “A convincing moral objection to AGI is: how do we guarantee that an artificial intelligence on par with a human has the same rights as a human? Given that this intelligent system is fundamentally different from a human, how do we define fundamental AI rights? Additionally, if we consider an artificial intelligence as an artificial lifeform, do we have the right to take its life (‘turn it off’)? Before we arrive at AGI, we should be seriously thinking about the ethics of AI,” says Sands.

These questions of ethics, and many others, are sure to be a continuing point of debate as AI research continues. By all accounts, we’re a long way away from them being relevant. But, even now conferences are being held to discuss these issues.

How You Can Get Involved

Artificial intelligence research and experimentation have traditionally been the domain of academics and researchers working in corporate labs. But, in recent years, the rising popularity of free information and the open source movement has spread even to AI. If you’re interested in getting involved with the future of artificial intelligence, there are a number of ways you can do so.

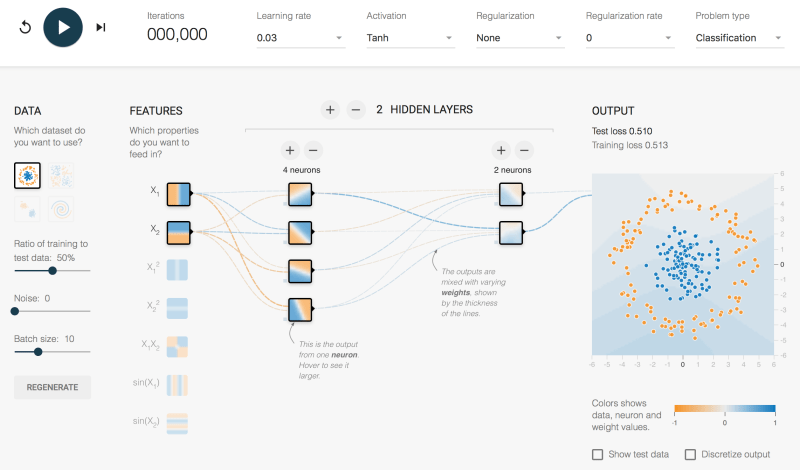

If you’d like to do some experimenting with neural networks yourself, there is software available to do so. Google has an in-browser playground for tinkering with basic neural network techniques. Open source neural network libraries, like OpenNN and TensorFlow, are freely available. While these aren’t exactly easy to use, determined hobbyists can use them and expand upon them.

The best way to get involved, however, is by doing what you can to further professional research. In the US, this means activism to promote the funding of scientific research. AI research, like all scientific research, is in a precarious position. For those who believe technological innovation is the future, the push for public funding of research is always a worthy endeavor.

Over the years, the general optimism surrounding the development of artificial intelligence has fluctuated. We’re at a high point right now, but it’s entirely possible that might change. But, what’s undeniable is how the possibility of AI stirs the imagination of the public. This is evident in the science fiction and entertainment we consume. We may have strong AI in a couple of years, or it might take a couple of centuries. What’s certain is that we’re unlikely to ever give up on the pursuit.

instead of strong ai and weak ai, how about AI and not AI?

Recursive self-improvement is pretty much impossible to develop on a machine. Machines have no self, and improvements are very subjective in nature. Sometimes destroying a system of logic altogether can be an improvement, yet destruction at the same time.

To quote Tyler Durden, “Self Improvement is masturbation”. There are many contexts at which this expression can break down the premise of AI.

I think at best we will have Turing complete lovebots and autonomous machines to perform tasks in dangerous environments where communications are not possible or practical. The form of “weak AI” is best referred to as continuous process automation. Calling current implementations of process automation AI is about as cringeworthy as when map developers were calling their map making algorithms AI.

Isn’t machine learning a form of recursive self-improvement? The thing that is often missing is a way to measure “improvement”. If that is part of the system then it closes the loop and a machine has the necessary information to judge if changes are better or not better.
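As a rough illustration of the "closed loop" described in this comment, here is a minimal hill-climbing sketch in Python. Everything in it is an assumption made for illustration: the `score` function, the target value of 42, and the step size are hypothetical stand-ins for whatever measure of "improvement" a real system would use. The only point is that once a measure exists, the program can judge for itself whether a change is better or not.

```python
import random

def score(candidate, target=42.0):
    # Hypothetical measure of "improvement": closer to the target is better.
    return -abs(candidate - target)

def hill_climb(start=0.0, steps=2000, step_size=1.0, seed=0):
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best = start
    for _ in range(steps):
        # Propose a small random change to the current best guess.
        trial = best + rng.uniform(-step_size, step_size)
        # Keep the change only if the measure says it is better.
        if score(trial) > score(best):
            best = trial
    return best

result = hill_climb()
print(result)
```

Note that the loop only ever improves with respect to `score`. If the measure is wrong or missing, the program has no way of knowing whether a change helped.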

It surely is!

1) What it learns is dependent on what/how it is programmed to learn.

2) Whether it “improves” itself on that learning depends on what it happens to learn, ie. its improvement is dependent on what information you give it, even if by chance.

You can’t program a program to systematically self-improve, because if you knew how exactly improvement happens you could skip all that bother and just program it better in the first place. If you already know how to arrive at the solution, you don’t need the AI to do it.

The self-learning AI is a bit of a semantics trick that many people in the AI research community like to pull when they describe what they’re doing. For example, when they teach ASIMO to tell the difference of a toy car by verbally repeating “toy car” when the robot is imaging the car, the robot learns the cue; then the researchers imply that the robot understands that it’s a toy car, but all they’ve really done is give it an arbitrary label for arbitrary data and programmed it to repeat the label on cue. They might as well have typed the information in to the database manually. The robot hasn’t learned anything – they’ve just managed to build a new type of programming “user interface” and a routine to enter data which happens to mimic some of the outward behaviour of people when they discover new objects.

AlphaGo improved itself, and none of the programmers could have implemented the result directly.

Of course, because none of the programmers have a personal capacity to analyze millions and millions of pre-recorded games and then play against themselves to test all the strategies unveiled.

When you run the algorithms and ferry the data between the steps from analysis to implementation “manually”, it’s not called intelligent. When you automate the steps to a script so the researchers can go “Look, no hands!” it gets called AI.

That’s the point.

Or to use the other point: what if the training set for the program consisted of amateurs who didn’t know how to play. Would the program still improve to beat the master?

The idea of systematic self-improvement is implying that the program can start from some arbitrary data and then improve upon improvement upon improvement until it becomes the best in everything. The reductio ad absurdum of the idea is to give the program the letter “A” and expect it to eventually come up with:

“Now, fair Hippolyta, our nuptial hour

Draws on apace; four happy days bring in

Another moon: but, O, methinks, how slow…”

AlphaGo is analyzing billions of games and building a classification model of which positions lead to victory and which positions lead to failure. That has nothing to do with intelligence in the usual sense of the word.

Dax, you are giving the human the implicit advantage of multiple interconnected senses all working simultaneously.

So far, we have “AI” that just take one small component in isolation. Siri may use a neural network to recognize a specific word from a stream of a recording of human speech but after that it is just a simple pre-programmed search through a glossary to find the command that goes with that word.

>The baby is learning both the word and the object as subjective experiences which have meaning to the baby – it also has to learn that repeating the word back is meaningful. The computer is merely pre-programmed to recognize spoken words and take photos on command.

The whole point of a true strong AI is that it is *NOT* pre-programmed to recognize spoken words or do anything on command.

If the computer starts at the same point as the baby it can reach the same result.

This would mean a system capable of analyzing sound, generating sound, and relating the cause->effect of what it says to what it hears.

This is the same learning process as for a human being. To teach a baby what a “toy car” is, show one to him and repeat “car”. Then his brain makes the association between the object it sees and the sound it hears at the same time. And this association differs depending on the language used. Our brain is nothing more than an associative network. The only difference from an AI resides in its complexity, which results from the huge number of neurons and the very, very huge number of synapses (connections between neurons; there may be as many as 1000 synapses per neuron: http://www.madsci.org/posts/archives/2003-03/1046827391.Ns.r.html).

Have you ever observed this fact: you repeat the word “dad” to a baby until he repeats it. But at first he repeats the word when he sees any man. Then you have to teach him that “dad” refers to a specific man, not any man. His brain makes a refinement in the association.

Not quite. It’s only superficially the same. The baby is learning both the word and the object as subjective experiences which have meaning to the baby – it also has to learn that repeating the word back is meaningful. The computer is merely pre-programmed to recognize spoken words and take photos on command. Upon command it simply stores the photo (or a computed representation of the target in frame) under the dictated category “toy car”. On another command to identify a presented object, it takes another photo and does a compare match for the data it has stored, and returns the name of the category that it was previously commanded to create: “toy car”.

This is perfectly analogous to sitting down to a computer, creating a file named “toy_car.txt” and storing the word “red” in it. Here the word “red” represents the data that the robot is instructed to collect with its camera. Then you search for all the text files that contain the word “red” and list them. The computer says “toy_car.txt”. The whole process is only made complicated by having to voice the commands to the speech-to-text converter in the robot – and this complicated charade looks enough like a baby learning a new word, so the people involved pretend that it IS the same thing. It isn’t.
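The store-and-match procedure described in this comment can be sketched in a few lines of Python. This is only an illustration of the commenter's analogy, not of how ASIMO or any real robot works. The names `teach` and `identify`, and the use of a set of string "features" in place of camera data, are hypothetical.

```python
# Maps a dictated label to the data stored under it (the "toy_car.txt" file).
labels = {}

def teach(label, features):
    # "Learning" here is just filing the observed data under the given label.
    labels[label] = set(features)

def identify(features):
    # "Recognition" is a compare match against everything stored so far.
    observed = set(features)
    best_label, best_overlap = None, 0
    for label, stored in labels.items():
        overlap = len(stored & observed)
        if overlap > best_overlap:
            best_label, best_overlap = label, overlap
    return best_label  # None when nothing stored matches

teach("toy car", ["red"])
print(identify(["red"]))
```

In this sketch the label is an arbitrary key: replacing "toy car" with any other string changes nothing about the program's behaviour, which is the commenter's point.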

>”The only difference from an AI resides in its complexity, which results from the huge number of neurons and the very, very huge number of synapses ”

That idea is resting on the notion that the brain is a classical system so the networks can be represented as a computer program given enough processing power, while in reality there’s mounting evidence that brains are not classical computers:

https://phys.org/news/2017-02-androids-quantum-sheep.html

>”The team investigated ‘input-output processes’, assessing the mathematical framework used to describe arbitrary devices that make future decisions based on stimuli received from the environment. In almost all cases, they found, a quantum device is more efficient because classical devices have to store more past information than is necessary to simulate the future.”

More of the same article:

>”Co-author Jayne Thompson, a Research Fellow at CQT, explains further: “Classical systems always have a definitive reality. They need to retain enough information to respond correctly to each possible future stimulus. By engineering a quantum device so that different inputs are like different quantum measurements, we can replicate the same behaviour without retaining a complete description of how to respond to each individual question.””

That’s describing exactly the problem that classical AI has with trying to perceive the world around it: it needs to be in a definite state. You can give it a million pictures of things to come up with some “invariant representation” but it doesn’t work because reality is just so full of special cases and exceptions. You train it with a million pictures of an umbrella in various colors, shapes, sizes, different lighting conditions… and then someone comes out of the woods carrying a huge rhubarb leaf over their head. The computer goes “err…”

Or another case: when is this a stool and when is it a very small coffee table? If you turn it upside down, at what point does it stop being a stool/table and start being a rubber boot drying rack?

http://www.stoolsonline.co.uk/shop/images/thumbs/t_rubberwoodstool2402244ff.jpg

People don’t need to decide, because we don’t fundamentally operate on rigid categories of what things are.

Machines do not learn. They process information and relay it to an observer. Independent of an observer they are purely making calculations. While yes, human minds process information in ways not so far apart from the calculators, calculators are a construct of human innovation. Humans can learn independent of the observations of others. In current scenarios humans are the ones learning. The silicon wafers are as dumb as ever.. maybe a few electrons heavier.

AI doesn’t need to resemble the human thought process with all of its imperfections either. In some cases selflessness is beneficial. Nature gave our bodies an arsenal of tools for survival that computers simply do not need. Intelligence could be silly in its own respect as a pursuit for a machine; to improve, perhaps no thought is necessary.

“Recursive self-improvement is pretty much impossible to develop on a machine.”

Have you ever heard of genetic algorithms?

https://en.wikipedia.org/wiki/Genetic_algorithm

Those work when you already know what “better” is.

Self-improvement in the sense of general AI doesn’t afford you that information, or at the very least you have to impose a “better” on the machine to strive towards, which would mean it wouldn’t do what you didn’t ask of it – it would do exactly as programmed and no more because you set the end goal and the criteria.

It wouldn’t be -self- improvement because it’s not the AI that sets the goals.
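To make the point about an imposed "better" concrete, here is a small genetic algorithm sketch in Python. It is a toy under stated assumptions: the `fitness` function (count the 1s in a bit string), the population size, the mutation rate, and the function name `evolve` are all hypothetical choices made for illustration. The thing to notice is that the programmer, not the program, defines what counts as improvement.

```python
import random

def fitness(bits):
    # The programmer's imposed definition of "better": more 1s in the string.
    return sum(bits)

def evolve(length=20, pop_size=30, generations=200, mutation_rate=0.02, seed=1):
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the fitter half of the population unchanged.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            mum, dad = rng.sample(parents, 2)
            # Crossover: splice the two parents at a random cut point.
            cut = rng.randrange(1, length)
            child = mum[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - b if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

best = evolve()
print(fitness(best), best)
```

Swapping in a different `fitness` function changes what the population converges towards without touching the rest of the loop, which is why the choice of goal stays with whoever writes that function.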

Most people don’t set their own goals either. If an AI can do just as well as those people, I’ll consider it a great success.

Most people choose not to choose. That’s still a choice.

At any moment you could, say, strip off your clothes and jump out the window – plummet to your death. That’s within the realm of possibility for a human being. Not so for the “AI”.

“At any moment you could, say, strip off your clothes and jump out the window”

No, I couldn’t. I have no desire to do that, and I have no idea how to change that desire.

>”No, I couldn’t. I have no desire to do that, and I have no idea how to change that desire.”

That’s missing the point. The program doesn’t deviate from its programming – it’s not possible that it would ever come up with the idea of solving a problem by “I’ll jump out the window” because it’s a complete non-sequitur from its software – it can’t make the leap of imagination unless the hardware is seriously broken, or you deliberately add a random number generator that has a chance to make the machine suicidal.

Meanwhile, it’s perfectly ordinary for people to be crazy, to do the crazy, perhaps because sometimes it turns out to make sense.

The guy who invented beam bots put the idea something like this: “Brains are trying to make order out of chaos, while AI researchers are trying to make chaos out of order” – chaos being the random nature of nature rather than the mathematical definition of it. It’s perfectly obvious that it only really works one way.

“The program doesn’t deviate from its programming – it’s not possible that it would ever come up with the idea of solving a problem by “I’ll jump out the window” because it’s a complete non-sequitur from its software ”

You’re missing the point. I’m also programmed to not jump from windows, and I’m not deviating from that programming either.

The way you defend the human brain sounds like there is something magic in it. On the contrary, I think there is nothing magic in the working of our brain. Its feat is only a consequence of quantitative complexity. When we have the hardware to build neural nets with billions of nodes and trillions of interconnections between them, we will get the same complexity as our brain.

An ant has 250000 neurons (400000 times less than a human), and still we can’t do that with computers. I am still waiting for some convincing video showing robots doing something remotely comparable to an army of ants building an ant-hive autonomously.

Nice cardigan sis, what to buy a Turing complete seashell?

http://artfail.com/automata/img/paper/rule30shell.jpg

want to buy…

The fact is, we cannot come to a consensus regarding consciousness- either our own, or that of artificial intelligences. We simply do not have the data required to define it. Until such time as we do ethical issues are at best moot.

The other problem I almost never see discussed is that a truly cognitive AI may not be that useful to those that made it, if indeed it suffers from the same limitations as human cognition – the power of machines is that they are strong in the places where we are weak. Maybe instead of looking to promote a machine to self-awareness via AI, (artificial intelligence) we should be looking to IA (intelligence amplification) for ourselves

Whenever I hear a term that is “impossible to define” but “intuitively obvious”, I suspect it’s just an illusion used to explain away some of our questionable behaviors. I wouldn’t be surprised at all if it turned out that we simply call “conscious” anything that activates our empathy circuits, and the term is otherwise devoid of meaning.

I don’t contend that consciousness is impossible to define, in fact I don’t even think it is all that intuitively obvious given issues with determining if it is there in other animals. What I am asserting is that we have very little hard data to formulate a meaningful definition of the concept such that it can be applied to decide if a machine is manifesting it.

I agree with dassheep. It is our empathy, or lack of it, that will determine our behaviour toward AI, as it is with our pets. So the question of consciousness is secondary.

The question of consciousness is not secondary. In fact it will likely be the single most important question as we determine how these things will fit into our society. No one disputes a human’s right to exert ownership over the code and hardware of a chatbot, I’m not so sure we could allow the same for a hard AI, and that argument will not turn on empathy but will depend on establishing the technical bounds of self-awareness.

There’s no way you will be able to define consciousness in a meaningful way, nor is it the case that consciousness automatically grants certain rights. Rights are something you have to earn, either by being cute and fuzzy, or by force.

Why would the question of ownership and rights have to be tied to consciousness? Because it’s the last thing that we are unable to fake? But there are many things in our culture that you can’t own even though they are not conscious. For example, you can’t own a hurricane — even if you produce one artificially somehow. Weather seems to have stayed immune to capitalism somehow, and the idea of owning a cloud is still as alien, as the idea of owning land used to be before the agricultural revolution.

Yet we insist that it exists, even though we don’t even know what it is. That doesn’t happen with most terms in our language, even if they are as abstract as “truth” or “justice”. With consciousness, however, we are absolutely sure that it exists, and that in particular we have it, yet we are unable to even tell what it is and how to recognize it. This smells like a hardwired hack inside our brains that forces a belief.

Or it could be a consequence of the essential self-referential subjective nature of the experience of consciousness. Either way, it is not possible to assert its existence in any other context without better definitions, which in turn need to be based on better data.

Our brain is too complex for us to understand. Whoever studied the control theory knows that even systems with only 2 feedback loops are difficult to understand. And here we are trying to emulate a system with probably millions of feedback loops.

Only way to make any progress at all is to start from the lowest levels, from animals with only few neurons, and construct from there. Some serious scientists are doing just that. Everything else is just a pipe dream or get-quick-funding-on-the-latest-buzzword scheme.

Currently we cannot even emulate a functional fruitfly brain with our level of (mis)understanding.

Our brains have evolved from smaller ape brains in a million generations, without anybody understanding how millions of feedback loops are put together. If we understand a bunch of key details, we could evolve an artificial brain in a similar way without actually understanding how the whole thing works.

Considering the success of deep learning one can’t consider it only as a “buzzword”.

The fruits of deep-learning research are already available. Since Google is using a deep-learning engine to translate between French-English I notice a big improvement.

There is a better way you can get involved. You can try and support the efforts to avoid malevolent AI. Like the stuff that Eliezer Yudkowsky does.

Somewhat relevant video https://www.youtube.com/watch?v=dLRLYPiaAoA

I always thought that Asimov’s idea, described in the final story “The Evitable Conflict” in “I, Robot”, was an interesting take on AI. The robots [computers] ended up essentially running things because that was the logical outgrowth of their programming to do no harm to humans, but they did it in a way that didn’t harm human egos. The humans didn’t know they were ruled by the machines, and the machines made sure that, overall, humans had a pretty good existence.

As long we’re not understanding of the why of religions, ethics in AI will be difficult to define. That why I’m calling myself a para-theist (opposed to the book theist, I’m asking how is god defined), with the purpose to teach a future AI not turning malevolent, seeing the actual religious madness.

Being an atheist is not enough and could it be that (neural or any) network is God, from the ants, to the simulated NN worm, up to human interactions which leaded to society and finally ethics, this schema could be understood as such.

Hope and believing are future based construct that keep us humans alive and don’t turn us malevolent too, and perhaps AI will need something utopic, a never provable thing like gods, just to keep it running.

Watch the progress made in the last year; it’s amazing, and its speed of progression is becoming exponential.

Like when AI gets creative :

https://www.youtube.com/watch?v=oitGRdHFNWw

“Works of fiction are always a good indicator of the thoughts and feelings the general population has.”

What?!? No they aren’t.

These works are generally what a few humans think will entertain other humans in a profitable way.

If you don’t believe in artificial intelligence, simply observe how many, and with what passion, all of the Artificial Intelligentia weigh in on the subject when presented with the most minuscule of opportunities, and reason

“Asking if computers can think is the same as asking if submarines can swim.”–Edsger Dijkstra

“I have found that the reason a lot of people are interested in artificial intelligence is the same reason a lot of people are interested in artificial limbs: they are missing one.”–David L. Parnas

artificial intelligence (lack of upper case absolutely intended; it should be used under NO circumstances) has fallen into the same disrepute as has weird water, fuzzy logic, cold fusion, ear candling, homeopathy, perpetual-motion machines, no-energy plasma rockets, and all other pseudosciences and paraphysics which one can think of–and a lot which can’t even be imagined.

Before you pseudoscientists and technobabble-ists get out your flamethrowers, pick the ten top-ranked schools in the world which teach computer science, AND WHICH ARE HELD IN THE HIGHEST ESTEEM BY THE WORLD-WIDE SCIENTIFIC AND ENGINEERING COMMUNITY. Now write to them, asking them to tell you in very explicit terms the high regard and importance in which they hold artificial intelligence research. Please include these responses in your replies.

We’ll all be waiting

what? They all would probably direct you to their labs, which are led by reputable scientists, amply funded, and produce scholarly work to support continued funding and technological development? It looks like many also have coursework for training future engineers to work on these software/systems too.

AI? More than brunette hair dye..

>”The idea is that an AI would be capable of either improving itself, or producing more advanced AIs. Because this would happen quickly, a dramatic advancements could happen essentially overnight”

All the different ideas of a technological singularity omit to say where the energy and material resources to perform them would come from, and it’s assuming there are no hard problems that can’t be solved by throwing more hardware at the issue, and the AI can just conjure up knowledge out of nothing without taking time to physically explore its environment.

Ex nihilo nihil fit.

So the aim is to create something in our own image. But does what we want… But thinks for itself… But fits in… But has free will… We tried with pets, we tried with kids, we tried with people at work and we can’t get it right. Machines for sure…

To get a sentient AI going you need to put in two things: a set of drives, and a set of limitations.

I’m waiting for someone to figure that out.

Well said, Sir. Well said. The only problem is time. Few million years, perhaps.

You can’t stop malevolent AI if it interacts with the real world: it is either true AI and thinks for itself, or it isn’t. We can’t even reliably make benign natural intelligence, i.e. humane humans. If we think for ourselves we can do great good, or harm, and if we don’t think for ourselves we almost always allow others to do great harm.

Furthermore, if an AI is self-improving, it would be subject to some form of un/natural selection, which is essentially a random process directed only by the fact that some variations enhance competence. It is therefore just as likely to evolve into something that benefits itself at the expense of humanity. The concept of an enlightened mind, natural or artificial, is entirely subjective and context-dependent, so there is no vector that an AI’s evolution would follow that ensures that we benefit. The entire idea of “goodness” is an illusion; at best we can form a consensus as to what behaviours we condone, but even that falls apart when we subject the abstract principles to real-world scenarios. And that assumes you can get everyone to agree on anything; when was the last time the UN behaved in a remotely coherent manner? Human culture is only a singular thing if you say it is all of the conflicting ideas of our species; in reality humans live in a cluster of overlapping realities, as far as their minds are configured. “But but science!” you cry. Ah yes, and how many people even fully understand and subscribe to that idea? Ethics is like history: ultimately the views of the victor, and not some ideal truth that is beyond and above human frailty.

The only safe strong AI is one that lives in a sandbox world and has no way of directly interacting with the real world. It has to live in its own little universe and we have to play “god” over it.

Well, a lot of physicists believe *we* are living inside a simulation…

Yup, I was going to mention that but it is a secondary issue, though very profound. If it is true it is probably just as well. :-)

Since you are responsible for broaching the subject, you are also responsible for following through on your claim that “…a lot of physicists believe *we* are living inside a simulation…”. A list of at least ten world-class physicists will do nicely as a start, one would think, with one additional caveat: any list which includes the name of Elon Musk, or any other self-promoting wannabe pseudo-technician (NOT a pseudo-scientist, nor even a pseudo-engineer), is automatically, and out-of-hand, considered void.

Deadly Truth of General AI? – Computerphile

https://www.youtube.com/watch?v=tcdVC4e6EV4

Why Asimov’s Laws of Robotics Don’t Work – Computerphile

https://www.youtube.com/watch?v=7PKx3kS7f4A

AI will replace us and when I see the sh!t humans do, this may not even be a bad perspective…

:-P

One should add a further parameter to the Drake formula: the chance of surviving after developing AGI. It could be very low, which might explain Fermi’s paradox.
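
As a rough sketch of that suggestion, here is the Drake equation in its usual form with one extra factor appended. The symbol $f_s$ is an assumed name chosen for illustration, not standard notation:

$$
N = R_{*} \cdot f_p \cdot n_e \cdot f_l \cdot f_i \cdot f_c \cdot L \cdot f_s
$$

Here $N$ is the number of detectable civilizations, $R_{*}$ the rate of star formation, $f_p$ the fraction of stars with planets, $n_e$ the number of habitable planets per such system, $f_l$, $f_i$ and $f_c$ the fractions that go on to develop life, intelligence and detectable communication, and $L$ the length of time such a civilization stays detectable. The added $f_s$ would be the fraction of civilizations that survive developing AGI. Because the factors multiply, an $f_s$ close to zero would drive $N$ toward zero no matter how favourable the other terms are.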

Fermi’s paradox is already explained by the great distances between solar systems, which prevent both travel and straightforward communication. For example, our TV transmissions are already very challenging to pick up from the orbit of Pluto, let alone from 100 light years away. And nearly all of the billions and billions of stars are much further away.

Heh.. Quite possible.

On the other hand, maybe AGI is easy but interstellar travel is not? I had some crazy idea that perhaps FTL messes up both organic and inorganic systems such that a starship would have to go “Full Steampunk” and use mechanical clockwork timers to drop in/out of hyperspace.

That could explain the lack of any signal: an AGI is going to figure this out early on and remain confined to its home system, sending out the occasional “Ping” on a radio-quiet band determined by its own mechanisms.

Perhaps 11.024 GHz, or the optical band least affected by interstellar dust, would be a good place to start?

Game over:

http://www.zarzuelazen.com/CoreKnowledgeDomains2.html

A different fictional perspective of AI posted by me on:

https://homemadetheorist.wordpress.com/2017/07/30/so-god-created-man-in-his-own-image/

Interesting food for thought. AI was always an area I found interesting, but I never paid much mind to it until I started to program. Now, my greatest interest when it comes to programming is AI.

So far, I’d say society has had a pretty loose interpretation of what AI is. I mean, any time you play a game versus the computer, the opponent is typically considered to be an AI. And yet, these programs are quite basic in comparison to the ultimate “sentient” AI many within the interested community dream about.