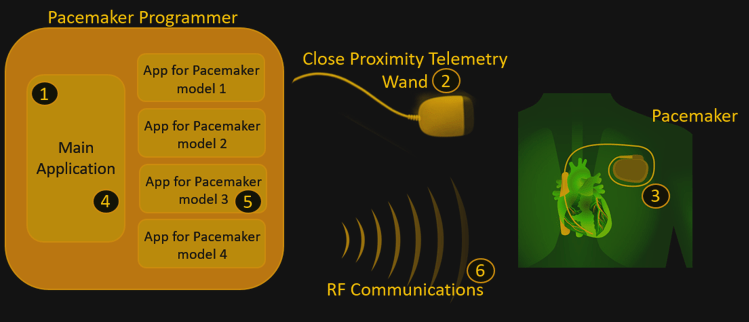

Doctors use RF signals to adjust pacemakers: instead of slicing a patient open, they can change the pacemaker's parameters wirelessly, which avoids unnecessary surgery. A study on the security weaknesses of pacemakers (highlights, or the full report as a PDF) has found that pacemakers from the main manufacturers contain security vulnerabilities that make it possible for the devices to be adjusted by anyone with a programmer and proximity. Of course, it shouldn’t be possible for anyone other than medical professionals to acquire a pacemaker programmer. The authors bought theirs on eBay.

They discovered over 8,000 known vulnerabilities in third-party libraries across four pacemaker programmers from four manufacturers, which highlights an industry-wide security problem. None of the pacemaker programmers required passwords, and none of the pacemakers authenticated with the programmers. Some home pacemaker monitoring systems even included USB connections, which open up the possibility of introducing malware through an infected pen drive.
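For readers wondering what “authenticating” would even look like here, below is a minimal Python sketch of a generic HMAC challenge-response exchange, one standard way for a device to check that a programmer holds a shared secret without the secret ever crossing the air. It assumes a per-device symmetric key (`DEVICE_KEY`, hypothetical) that was provisioned securely beforehand; the function names are illustrative and are not taken from any manufacturer's protocol or from the study.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-device secret. In a real design it would be provisioned
# securely at manufacture, never hard-coded into every programmer.
DEVICE_KEY = secrets.token_bytes(32)


def make_challenge() -> bytes:
    """Implant side: issue a fresh random nonce for every session."""
    return secrets.token_bytes(16)


def respond(key: bytes, challenge: bytes) -> bytes:
    """Programmer side: prove knowledge of the key without transmitting it."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


def verify(key: bytes, challenge: bytes, response: bytes) -> bool:
    """Implant side: recompute the MAC and compare in constant time."""
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


challenge = make_challenge()
good = respond(DEVICE_KEY, challenge)                # holder of the real key
bad = respond(secrets.token_bytes(32), challenge)    # attacker guessing a key
print(verify(DEVICE_KEY, challenge, good))  # True
print(verify(DEVICE_KEY, challenge, bad))   # False
```

A fresh nonce per session stops an eavesdropper from simply replaying a captured response. A real design would also need mutual authentication, key management, and a plan for emergency access, none of which this sketch addresses.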

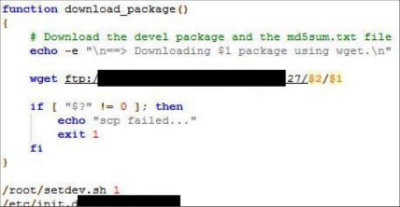

The programmers’ firmware update procedures were also flawed, with hard-coded credentials being very common. This allows an attacker to set up their own authentication server and upload their own firmware to the home monitoring kit. Due to the nature of the hack, the researchers are not disclosing to the public which manufacturers or devices are at fault and have redacted some information until these medical device companies can get their house in order and fix these problems.

This article only scratches the surface; for an in-depth look, read the full report. Let’s just hope these medical companies take action and resolve these issues as soon as possible. This is not the first time pacemakers have been shown to be flawed.

Oh, that screenshot could just as well be St. Jude Medical: the script names, paths and terminology check out, and SJM is notorious, partly thanks to seemingly targeted attacks by security researchers. However, the screenshot doesn’t actually show anything bad. It’s a script meant to be run by a developer to deploy a programming environment on the base station, so it’s never run by a user or as a result of a user’s actions (and it is most likely run from inside SJM’s network). Now, some other credentials stored on the station are much more interesting…

Besides, the main communication channels for those systems are 3G modems or ADSL lines; WiFi modems are possible but, AFAIK, not a popular choice. If you think you’re going to man-in-the-middle 3G or even ADSL, good luck. The point is that so far this is only available to a sufficiently determined attacker, and until you’re MitMing the communication channel, those “thousands” of vulnerabilities don’t matter. What’s more, I’m sure they were counted by simply comparing software version numbers and tallying bugs that could potentially affect security, without considering whether there’s an actual attack surface that exposes them. Now, the RF comms are much more interesting. I’m sure there’s a lot to cover there, but there’s no screenshot of the disassembled C code BS uses for onboard transmitter comms, is there?
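To illustrate the difference between a version-number count and an attack-surface count, here is a hypothetical Python sketch. The library names, advisory IDs and the `reachable` flag are all made up for illustration; in practice, deciding whether a vulnerable code path is reachable requires manual analysis of the actual device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    advisory_id: str           # made-up identifier, not a real CVE
    library: str
    fixed_in: tuple[int, ...]  # first version that is NOT affected
    reachable: bool            # in reality this needs manual attack-surface analysis


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.2.0' into (1, 2, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def is_affected(installed: dict[str, str], adv: Advisory) -> bool:
    """Version-only check: the library is present and older than the fix."""
    version = installed.get(adv.library)
    return version is not None and parse_version(version) < adv.fixed_in


def naive_count(installed: dict[str, str], advisories: list[Advisory]) -> int:
    """What a scanner that only compares version numbers would report."""
    return sum(is_affected(installed, a) for a in advisories)


def reachable_count(installed: dict[str, str], advisories: list[Advisory]) -> int:
    """Only the affected advisories that are actually exposed to an attacker."""
    return sum(is_affected(installed, a) and a.reachable for a in advisories)


installed = {"libfoo": "1.2.0", "libbar": "0.9.1"}
advisories = [
    Advisory("ADV-0001", "libfoo", (1, 3, 0), reachable=False),
    Advisory("ADV-0002", "libfoo", (1, 2, 0), reachable=True),  # already fixed
    Advisory("ADV-0003", "libbar", (1, 0, 0), reachable=True),
    Advisory("ADV-0004", "libbaz", (2, 0, 0), reachable=True),  # not installed
]
print(naive_count(installed, advisories))      # 2
print(reachable_count(installed, advisories))  # 1
```

Both numbers are “correct”, but they answer different questions, which is why a headline count of known vulnerabilities says little on its own about exploitability.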

I still return to the ICeeData project (https://hackaday.io/project/11323) once in a while, but right now I’m badly stuck on a JFFS2 filesystem that may or may not be faulty: it makes my Linux box crash every time I mount it the conventional “modprobe mtdblock & mount loop device” way =( If anybody knows anything helpful (or has some EX1150 images), or knows how to fsck/mount a JFFS2 image without using the standard Linux driver, let me know: crimier at protonmail dot com. Speaking of which, I should probably finally get back to the firmware.re guys.

However, the pacemaker programmer issues are certainly valid; that’s a good attack surface. Still, I imagine you’d need some kind of firmware sample and/or docs for the programmer to reflash it into something potentially dangerous… though you do need to “define dangerous”, of course =)

If you could erase the program, or otherwise set the device to never trigger, I think that would count as dangerous.

While some of what you’re saying is valid, I think you are missing the point. The security is amazingly sloppy for something that could literally mean you don’t wake up the next morning. Digital medical devices should be pen-tested by a reputable third party by default. You’re right that the “thousands of vulnerabilities” may not all be root-access, switch-the-device-off bugs, but they’re still bugs all the same.

Personally, I think requiring third-party pen testing is the kind of red tape, along with lawsuits, that kills medical device progress. Have a great idea that could actually help people? Sorry, there are miles of red tape and millions of dollars in testing first, which will price it out of reach of most of the people you were trying to help in the first place. I don’t know how you’d pull it off (in the US), but some sort of ‘low cost / full disclosure / release waiver’ medical device system seems like it would be ideal. Compare a 99.99% safe and reliable pacemaker (or artificial limb, etc.) at a reasonable price, as long as you know exactly what you’re getting into, with a $100k device that only the very wealthy or very well insured can afford because of red tape and production profit margins. The latter seems to be killing the system, and people…

Therac-25

https://en.wikipedia.org/wiki/Therac-25

If you have a “great idea” for a device, there WILL be miles of red tape and millions of dollars in testing. It’s only if you already have a device and are “modifying” it that you don’t need to retest (like modifying a pacemaker to allow wireless communication). So while it might add time and cost to a “great idea”, it wouldn’t be much compared to what the device would already have to go through, and it would help keep vulnerabilities from making it into consumer devices.

Automatic cars and pacemakers and breathalyzers and all sorts of other devices are all similar and have similar concerns.

http://blog.cleveland.com/metro/2008/11/ohio_commits_64_million_for_ne.html

“The president of the company that makes the Intoxilyzer 8000 faced a contempt hearing in Arizona for not complying with a judge’s order to reveal the machine’s software to prove whether it produces reliable blood-alcohol readings.

The company, CMI Inc. of Owensboro, Ky., has racked up more than $2 million in fines, and counting, in Florida, where CMI is being punished each day until it follows a court order to produce the machine’s source code so that defense attorneys can challenge drunk driving arrests. Thousands of cases have been held up.

Attorneys argue they can’t defend their clients until they know exactly how the machine computes its results.”

This is from 2005ish. This is not a new issue.

2009ish update:

“The Second District Court of Appeal and Circuit Court has ruled to uphold the 2005 ruling requiring the manufacturer of the Intoxilyzer 5000, Kentucky-based CMI Inc, to release source code for their breathalyzer equipment to be examined by witnesses for the defense of those standing trial with breathalyzer test results being used as evidence against them.”

‘“The defendant’s right to a fair trial outweighed the manufacturer’s claim of a trade secret,” Henderson said. In response to the ruling, defense attorney Mark Lipinski, who represents seven defendants challenging the source codes, said the state likely will be forced to reduce charges or drop the cases entirely.’ … What this really means is that outside corporations cannot sell equipment to the state of Florida and expect to hide the workings of their machine by saying they are trade secrets. It means the state has to give full disclosure concerning important and critical aspects of the case.”

Sounds good. I have zero sympathy for drunk drivers, but if the thing reads .08 after one glass because the airflow sensor firmware glitched, then we have an issue.

Thanks for the link.

I’m of the opinion all software governments use should be open-source unless there’s a legitimate national security reason for it not to be.

Except that currently “legitimate” means they don’t want you to know.

As someone that has a pacemaker, this is quite disturbing. However, the antenna used at home or the doctors office has to be within a centimeter or so of the device in order to work. Do these flaws allow access from a distance? If somebody has to slap a thing on my chest to alter my pacemaker, I’m not too worried. But if they can do it from across the street, I will start sleeping in a Faraday cage.

Don’t get a cage yet. Of course they could do ‘something’ from across the street, but it would take one really, really big coil to transmit anything to your device, so you’re relatively safe. Just stay away from microwave ovens and keep cell phones away from your chest; those are the biggest threats to the device’s proper operation.

I’m honestly concerned that some three letter agency would use this exploit to take someone out. Go ahead and tell me that they don’t off people.

Oh, they (agencies) certainly do… The paper says that some of these pacemakers have an RF interface, but it doesn’t say anything about the range. Is it more like 10 cm or 10 m (with appropriate equipment)?

Also, I’m quite scared about the internet part of all this. How secure are these home monitoring devices? Is the (highly sensitive) patient data encrypted before transmission? Are the portals where patients and physicians log in secured? Looking at the paper, I admit I have some doubts…

What I don’t like is that, in my opinion, the researchers basically call for more security by obscurity at some points. Yes, obfuscating the firmware and removing the part number from the µC can make reverse engineering and exploitation a lot more difficult, but it won’t stop a determined attacker, and especially not an alphabet-soup agency! Such measures may help a little, but you should never rely on them to make your product “secure”! Do code reviews and external testing, and use certificates and cryptography.


The picture at the top of the Hackaday article shows that the implantable devices use two communication mechanisms: inductive (wand) and RF. Inductive communication requires the wand to be within a few centimeters; RF communication doesn’t. I suppose one could attempt long-range inductive communication with a Tesla coil, but they would still be hard-pressed to receive the inductive communication back from the implanted device. If the implant uses bidirectional communication, a Tesla coil wouldn’t work.

The RF communication is designed to operate over a few meters. Far enough to go from the patient’s bed to a nightstand or from the operating table to a counter in the operating room. One could use a Yagi antenna or parabolic dish to increase this range.

Some implantable devices require a wand to be present before an RF programming session may begin. Then the wand may be removed. The theory is that if someone holds a wand up to your chest, you’re at least aware that some programming of your implantable device might be happening. Unless some other security is implemented, I suppose this doesn’t protect you from a man-in-the-middle attack while you’re in the physician’s office.
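The wand-then-RF flow described above can be sketched as a small state machine. This is a hypothetical Python illustration: the class name, the 30-second window, the method names and the `lower_rate_bpm` setting are all assumptions, not any manufacturer's design. An injectable clock keeps the example deterministic.

```python
import time
from typing import Callable, Optional


class HypotheticalImplantRadio:
    """Wand-gated RF programming: an RF session may only be opened within
    window_s seconds of the inductive wand being detected."""

    def __init__(self, window_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.window_s = window_s
        self.clock = clock
        self.wand_seen_at: Optional[float] = None
        self.session_open = False
        self.settings: dict[str, int] = {}

    def on_wand_detected(self) -> None:
        """Called when the short-range inductive link sees the wand."""
        self.wand_seen_at = self.clock()

    def open_rf_session(self) -> bool:
        """Open an RF session only if the wand was seen recently."""
        if self.wand_seen_at is None:
            return False
        if self.clock() - self.wand_seen_at > self.window_s:
            return False
        self.session_open = True
        return True

    def program(self, name: str, value: int) -> bool:
        """Once open, programming continues over RF with the wand removed."""
        if not self.session_open:
            return False
        self.settings[name] = value
        return True


# Usage with a fake clock.
now = [0.0]
radio = HypotheticalImplantRadio(clock=lambda: now[0])
print(radio.open_rf_session())               # False: wand never seen
radio.on_wand_detected()
now[0] = 10.0
print(radio.open_rf_session())               # True: within the window
print(radio.program("lower_rate_bpm", 60))   # True
```

Note that once the session is open, nothing in this sketch checks who is on the other end of the RF link, which is exactly the man-in-the-middle gap the comment describes.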

Muddy Waters Research published an article about how St. Jude home monitoring devices can be modified to control the pacemaker. Usually, the home monitoring devices don’t control the pacemaker but rather report information from the pacemaker back to the clinician. I seem to recall that the researchers were able to get the home monitoring device to impersonate the physician’s programmer without using inductive communication beforehand—I can’t seem to find that detail now. Does anyone know more about this?

Incidentally, one reason for programming the device over RF is to help prevent infection. The wand isn’t disposed of between operations. Instead, they place it in a sterile plastic sleeve and dispose of the sleeve. It’s still close to the pocket that the surgeon just made, however. If you can use the wand just for authentication and then proceed with programming over RF at a distance, that’s a big help. An infection around your pacemaker is no fun.

Mayberry RFD

Hun! Hun! You gotta read this! Where’s those Ruby Slippers!

There’s no place like home! There’s no place like home! There’s NO PLACE LIKE HOME!!!

ARRRGHHH….. they aren’t working!!!

We are educated and organized, but nowhere near civilized yet.

RIP Barnaby Jack

RIP Barnaby Jack.

Exactly.

And here I thought that any medical device went through religious testing and verification. I would have thought there would be legislation requiring an independent audit of devices like this before they go into use.

Guess not……

Religious testing?

Thou shalt install in thy heart! Thou shalt not miss a beat!

They had to develop a working medical device, which is quite a difficult process. They had to get medical device approval, which is also quite a difficult process. They don’t know squat about security because they have to know everything about medicine. I would not fault a single physician.

I would ask you to build a device that simply blinks an led in a steady, regular, consistent manner. It requires occasional adjustments so you must have external access. Build one. Then see how easy it is to secure it with no effect on the device’s operation. If you succeed in still having a functional device then put it out to the hackers to test.

It’s not their fault, but we do so love to assign blame… And the day will come when there is blame to assign. It won’t fall on the physicians. Left alone, their creation would have functioned fine.

Holy crap, [Biomed] is super-right about this. They can’t know everything! Though security should be paramount, they were too busy making sure it did its job.

Now is the time for ‘them’ to fix their oversights.

Them is plural.

It won’t be docs (researchers)…. you seriously do NOT want docs trying to do security too… rofl.. believe me! Fundoscopic examination is unrevealing in these cases.

Now that it’s developed and has met purely medical approvals, a manufacturer steps in, gambling they can make a large profit on this glorified ESP32. They can have security experts secure it, but they might hire anybody. For them it’s a balance of risk, insurance, and profit. Then it has to pass the most rigorous approvals to date, and then it goes into use.

Now an “event” comes along, be it a hacker or a bullet in a robbery, and next we introduce the lawyers, also gambling they can make a large profit. But the patient’s problem is already over and the argument is now moot; just ask the patient. Punishing the negligent or the criminal does not heal anything. For the one person it matters to, the argument stops here.

I seriously doubt the doc will be held to blame. The mfg most likely will, but the device would have been fine if it hadn’t been subjected to a hack attack or a bullet… so there is a criminal at fault, not us. Lawyers, lawyers, lawyers later…

Physicians may decide to handle security too. Lord help us if they do, but it will take a different organization of the medical industry as a whole.

I do not like this any more than you… Have a solution? Remove profit from the equation? Stop the hackers? Imprison the mfg CEO? Prevent all murders? Only one thing that I do know… it’s not gonna be the docs.

I’m worried for the future.

Most of us can “fix” something that is ‘broken’ with a USB connection, but something inside connected to either the heart or brain? Not going to even attempt.

I’m so uneasy about this. Keep tabs on it HaD!

So the physicians are electrical engineers by night and make these devices? No. Electrical and software engineers make these. The physicians are the ones who establish if the device performs appropriately. The engineers who designed and built these are still responsible for securing them.

exactly!!!

When building a product, you need to involve SMEs from every relevant area to ensure that every relevant detail is good enough before you release. The threshold for “good enough” on a medical device is very, very high.

From talking with a guy who (briefly) worked for a cochlear implant company, the religious testing and verification is in fact mostly paperwork, with a legion of people taking care of it (which explains the price).

The software methodology and process are nonexistent, sometimes with live testing on patients (and painful results).

In the case of the FDA, the medical device companies themselves perform studies to show that their device is safe and beneficial to the patient—it’s not independent. They do have to abide by proper protocols for their studies, however. The FDA is in the process of making new rules and regulations regarding security for medical devices. However, they aren’t the sole agency responsible. This FDA fact sheet: (https://www.fda.gov/downloads/MedicalDevices/DigitalHealth/UCM544684.pdf) gives a good overview.

It’s hilarious to think about who gets the cushy job of inventing/writing new regulations.

This gave me a nice dystopian idea for an IoT pacemaker: it needs a constant connection to a company server, and heartbeats are delivered on a subscription-based plan. Forgot to pay? Company IT infrastructure got compromised? Your router is down? Well, sucks to be you…

https://www.youtube.com/watch?v=jl9Nvg4yuus

So in theory it’s possible to write a program that executes using a specific pattern to generate the RF needed to interface with a pacemaker and cause it to fail. Death by malware.

Stuxnet was (most probably) deliberately created to attack and cause physical damage to a uranium enrichment plant…a compromised pacemaker can’t do nearly as much damage or loss of life…

This is true, but keep in mind the comment by someone who actually has a pacemaker, “As someone that has a pacemaker, this is quite disturbing… But if they can do it from across the street, I will start sleeping in a Faraday cage.” Emotionally, it’s a difficult enough decision to get a pacemaker. Now you have this piece of hardware in your chest responsible for keeping you alive. It’s even more difficult to accept this new reality if you’re worried about the security of your device. You don’t care so much about the hacker who wants to cause nation-wide catastrophe—you care about the possibility of some messed up individual messing with your device.

Much of the problem lies with the RF interface, which creates two risk factors:

1. Increased power can compensate for increased distance. If a certain power level will reprogram a device from a couple of feet away, then 100 times that power and directional antennas might enable reprogramming from the street outside someone’s home.

2. Radio waves can’t be seen, so no one knows about that high-powered reprogramming. RFID has a similar security issue—the “R” in the name.
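Point 1 can be made concrete with idealized scaling laws. If received power falls off as 1/d^n, multiplying the link budget by a factor k extends the usable range by k^(1/n). The Python sketch below assumes free-space far-field propagation for RF (n = 2, as in the Friis transmission equation) and roughly 1/d^6 power coupling for an inductive near-field link (field magnitude falling as 1/d^3). The 20 dB antenna-gain figure is an assumption, and the sketch ignores body-tissue losses, noise floors and the implant's weak return signal, so treat the numbers as rough illustrations only.

```python
def range_multiplier(power_gain: float, falloff_exponent: float) -> float:
    """If received power falls off as 1/d**n, boosting the link budget by
    power_gain extends usable range by power_gain ** (1/n)."""
    return power_gain ** (1.0 / falloff_exponent)


def db_to_ratio(db: float) -> float:
    """Convert decibels to a linear power ratio."""
    return 10 ** (db / 10)


# Far-field RF in free space: received power ~ 1/d^2.
rf_100x = range_multiplier(100, 2)
# 100x transmit power plus an assumed 20 dB of combined directional antenna gain.
rf_100x_directional = range_multiplier(100 * db_to_ratio(20), 2)
# Inductive near-field coupling: received power ~ 1/d^6.
inductive_100x = range_multiplier(100, 6)

print(f"{rf_100x:.2f} {rf_100x_directional:.2f} {inductive_100x:.2f}")  # 10.00 100.00 2.15
```

Under these assumptions, 100 times the power buys roughly 10 times the RF range but only about 2 times the inductive range, which is consistent with the earlier comments that the inductive wand must be close while the RF link can be stretched with a Yagi or a dish.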

One practical solution would be to get rid of the radio interface altogether. Place a light detector tuned to a specific light frequency near but still under a patient’s skin, and use that for the reprogramming UI. Clothing would block any illicit efforts and those efforts would also be quite visible.

There are often two solutions to a problem. One is not inherently safe and thus requires enormous and often futile efforts to make it safe. The other is safe by design. The distinction between a radio interface (with encryption and passwords) and a light/laser one illustrates that.

The Germans made a similar mistake in WWII when they used radio traffic encrypted with Enigma. Because their simple receivers and mobile antennas couldn’t hear their own signals over more than short distances, they didn’t realize that the British were using huge rhombic antennas and state-of-the-art National HRO receivers to copy those signals from hundreds of miles away. And if they’d known the Brits were going to such great trouble to copy their coded signals, they might have guessed that the Brits knew how to break the encryption.

There is an additional factor to keep in mind. Islamist terrorism isn’t like an invading army. It doesn’t intend to win by greater strength, but by feeding irrational terror and paralyzing resistance. That’s why it runs over people in the streets of France. That’s why it detonates a bomb at a British concert filled with girls in their early teens. That’s why it would find hacking pacemakers appealing. Kill a handful of people that way, and everyone with a pacemaker, along with their friends and family, would become fearful.

We need to take this risk seriously, very seriously. Will the medical industry do that? I think not. Only after there’s a disaster, will they wake up and ask, “How could this happen?” And for fixing mistakes they never should have made, they’ll want huge federal subsidies. We see the same with the power industry and its refusal to protect itself from hacking. It’s waiting for Congress to vote billions to make it do what it ought to do as a matter of course.

Years ago, when I was working in a hospital, I saw a cartoon that summarizes this perfectly. It showed two people talking, with one saying, “Be proud of our incompetence. It’s what separates us from the animals.”

That is all too often true. You saw that with 9/11, after which some in national security claimed they had never imagined that an airliner could be used as a weapon. “How odd,” I thought at the time, “I read a Tom Clancy article that closed with precisely that.” Some people are just stupid.

Use two or more inductive loops next to each other, and make it so that, to communicate, one loop must receive a strong signal while the loops next to it receive a much weaker one. Generating the right RF field pattern from a distance would then be very difficult.
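Here is a rough Python sketch of the multi-loop idea. It assumes the near-field magnitude falls off as 1/r^3, ignores coil orientation and tissue effects, and uses an arbitrary 3 cm loop spacing and a 5x acceptance ratio; all of these are illustrative assumptions, not a validated design. The point is that a nearby source produces a steep difference between adjacent loops, while a distant source produces nearly identical readings.

```python
import math


def relative_field(distance_cm: float) -> float:
    """Assumed near-field magnitude falloff of 1/r^3 (orientation ignored)."""
    return 1.0 / distance_cm ** 3


def loop_ratio(source_cm: float, spacing_cm: float = 3.0) -> float:
    """Signal at the center loop divided by the signal at a neighboring loop,
    for a source directly above the center loop."""
    center = relative_field(source_cm)
    neighbor = relative_field(math.hypot(source_cm, spacing_cm))
    return center / neighbor


def accept_session(source_cm: float, spacing_cm: float = 3.0,
                   min_ratio: float = 5.0) -> bool:
    """Accept only when the field gradient is steep, i.e. the source is close."""
    return loop_ratio(source_cm, spacing_cm) >= min_ratio


for d in (1.0, 2.0, 10.0, 300.0):
    print(f"{d:>6.1f} cm  ratio={loop_ratio(d):8.4f}  accept={accept_session(d)}")
```

With these numbers, a wand 1 cm away gives a center-to-neighbor ratio of about 31.6, a source 10 cm away gives about 1.14, and a source 3 m away gives a ratio barely above 1.0. An attacker with several coordinated transmitters might still try to shape the field, so this raises the bar rather than guaranteeing anything.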

Setting aside the grievous (downright unconscionable) vulnerabilities in the programmers themselves:

the devices to be adjusted by anyone with a programmer and proximity

This is, to some extent, the desired state, however. Controls on equipment like this are difficult when one of the use cases is “patient is in an ER, in critical condition, and unable to communicate.” Physical proximity is, for 99% of the population, sufficient security. (The 1% includes people like Dick Cheney, and while I have many disagreements with him, I think his decision to have the remote programmable capability disabled on his implanted cardiac equipment was sensible.)

It’s a hard problem to solve, and it ends up in game-theory land of “risk of appropriate operators being unable to interact with the device when they need to” vs “risk of an inappropriate operator having the capabilities, the proximity and the urge to fuck shit up.”

Many attacks have been on the processor itself. Buffer overrun, overflow.

Hey! What would happen if you were close by when the police used one of those automobile immobilizers? They use either an EM field pulse or an extreme HV pulse, both of which fry a car’s electronics to stop it.

Good time to patent a tin-foil suit.