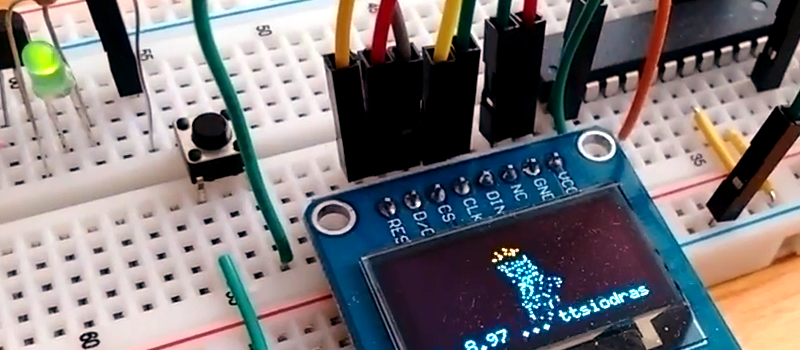

Small OLED displays are inexpensive these days, cheap enough that pairing them with an 8-bit micro is economically feasible. But what can you do with a tiny display and a not-entirely-powerful processor? If you are [ttsiodras], you can do real-time 3D rendering. You can see the results in the video below. Not bad for an 8-bit, 8 MHz processor.

The code is a “points-only” renderer. The design drives the OLED over the SPI pins and also outputs frames-per-second information via the serial port.
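The core of a points-only renderer is perspective projection: each 3D point is divided by its depth and dropped onto a single pixel. Below is a minimal Python sketch of that step using integer math only, plus a helper that sets a pixel in a 1-bit framebuffer. The 128x64 resolution, the page-organised buffer layout (each byte a vertical strip of 8 pixels, as on SSD1306-style controllers), and the `FOCAL` and `CAM_DIST` constants are assumptions for illustration, not details taken from [ttsiodras]’s code.

```python
# Hypothetical parameters: a 128x64 monochrome OLED and made-up camera constants.
SCREEN_W, SCREEN_H = 128, 64
FOCAL = 64       # focal length in pixels (assumed)
CAM_DIST = 100   # distance from the camera to the model's origin (assumed)


def project(x, y, z):
    """Perspective-project an integer 3D point; return (sx, sy) or None if not visible."""
    depth = z + CAM_DIST            # camera sits at z = -CAM_DIST, looking down +z
    if depth <= 0:
        return None                 # behind (or at) the camera
    # Perspective divide with integers only (// floors, which is fine for plotting).
    sx = SCREEN_W // 2 + (FOCAL * x) // depth
    sy = SCREEN_H // 2 - (FOCAL * y) // depth   # screen y grows downward
    if 0 <= sx < SCREEN_W and 0 <= sy < SCREEN_H:
        return sx, sy
    return None                     # off screen


def plot(buf, sx, sy):
    """Set one pixel in an assumed page-organised 1-bit buffer (8 vertical pixels per byte)."""
    buf[(sy // 8) * SCREEN_W + sx] |= 1 << (sy % 8)


# Usage: draw the eight corners of a cube, 40 units on a side, centred on the origin.
framebuffer = bytearray(SCREEN_W * SCREEN_H // 8)
cube = [(x, y, z) for x in (-20, 20) for y in (-20, 20) for z in (-20, 20)]
for corner in cube:
    pixel = project(*corner)
    if pixel is not None:
        plot(framebuffer, *pixel)
print(sum(bin(b).count("1") for b in framebuffer), "pixels lit")
```

A real renderer would rotate the points each frame before projecting them, then push the whole buffer to the display over SPI.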

As you might expect, 3D output takes a good bit of math, and the chip in question has no floating-point hardware, so handling real numbers in software is slow. [Ttsiodras] handles this using an old technique: fixed point arithmetic. The idea is simple. Normally, we think of a 16-bit word as holding unsigned values from 0 to 65535. However, if you choose, you can also use it to represent numbers from 0 to 65.535 in steps of 0.001, for example. Mentally, you scale everything by 1,000 and then reverse the operation when you want to output. Addition and subtraction are straightforward, but multiplication and division require some extra work: the product of two scaled numbers carries the scale factor twice, so you have to divide one factor back out, and division needs the opposite correction.
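To make the scale-by-1,000 idea concrete, here is a minimal Python sketch of decimal fixed point in an unsigned 16-bit word. It illustrates the scheme as described above and is not [ttsiodras]’s implementation; the function names are hypothetical. Python integers never overflow, so `_fit` enforces the 16-bit limit explicitly. On the ATmega itself, the intermediate products would need a 32-bit variable.

```python
SCALE = 1000        # one stored unit represents 0.001
U16_MAX = 0xFFFF    # largest value an unsigned 16-bit word can hold


def _fit(raw):
    # Mimic a 16-bit unsigned register: reject anything outside 0..65535.
    if not 0 <= raw <= U16_MAX:
        raise OverflowError(f"{raw} does not fit in 16 bits")
    return raw


def to_fixed(value):
    return _fit(round(value * SCALE))   # e.g. 1.5 is stored as 1500


def to_real(raw):
    return raw / SCALE                  # only needed when you output the number


def fx_add(a, b):
    return _fit(a + b)                  # both operands share one scale, so add directly


def fx_mul(a, b):
    # a * b carries SCALE twice; divide once to get back to a single SCALE.
    return _fit((a * b) // SCALE)


def fx_div(a, b):
    # a / b would cancel SCALE entirely; pre-multiplying the dividend keeps one factor.
    return _fit((a * SCALE) // b)


x, y = to_fixed(1.5), to_fixed(2.25)
print(to_real(fx_add(x, y)), to_real(fx_mul(x, y)), to_real(fx_div(fx_mul(x, y), x)))
```

Scaling by a power of two instead of 1,000 lets a shift replace the division, which is what the 8.8 and 16.16 formats mentioned in the comments do.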

If you want to read more about fixed point math, you are in the right place, and we’ve also covered a great external tutorial. But if you think this is the first time we’ve covered a 3D graphics engine for the ATmega parts, you’re wrong.

How about using the same chip to do Wolf3D, with texture mapping, on a 5110 monochrome display: http://gamebuino.com/forum/viewtopic.php?f=13&t=3312

Been there, done that:

http://www.youtube.com/watch?v=9ryBeJAs2I0

http://www.youtube.com/watch?v=-Xer8gVS5Ww

But will it run Crysis?

I’m not sure if I understand your meaning, but Wolf3D is not 3D, it is 2D raycasting. Very nice project though.

This is also raycasting on the ATmega328.

Doing point or wireframe 3D is easier than raycasting on the CPU.

Technically it was the hidden-surface removal that was 2D (which the demo above, while still good, doesn’t do at all). The projections were still 3D, and the texture mapping of both walls and sprites was still 3D (albeit constrained to be affine, for performance reasons).

I thought “wow, 3D real time rendering, no way!”… and indeed, no way. This is not 3D rendering.

From Wikipedia: “3D rendering is the 3D computer graphics process of automatically converting 3D wire frame models into 2D images with 3D photorealistic effects or non-photorealistic rendering on a computer.”

Even the NES had more impressive 3D titles than that, e.g. Cosmic Epsilon, and that’s running on a sub-2 MHz CPU.

Oddly, that definition would rule out POVRAY and other programs that can do renderings of spheres, quadratic and quartic equations, and other things that aren’t wireframe-based. Wikipedia wrote that definition a little narrowly.

I get what you mean, but I guess rendering a 3D point cloud is also 3D rendering in a way.

No, I’d call it “3D plotting” if anything. Or, more specifically, plotting of some 2D-projected 3D point data.

I thought autism was automatic. Kind of like the reply of an autistic type’s expressionless desire for a literal definition: 3D point rendering vis-à-vis 3D line/spline, etc., etc., the list goes on. Point rendering is just that, without the line math. Vertices….

Look up the “Point cloud” article at Wikipedia. As samples it currently shows a torus (non photoreal) and a landscape (photoreal). Check the links at the bottom for more examples. 3D is full of tricks: meshes, math surfaces, point clouds… anything goes if it works.

Firstly, Wikipedia is not an authoritative reference. b) Even Wikipedia has that article marked as problematic (not citing sources). 3) That article doesn’t claim to give a definition of what 3D rendering is or is not; it just describes it. And IV) Even if a formal definition exists for a phrase, it’s very common for people not specializing in that field to use it to mean something very different without being wrong.

Please see answer to Megol below.

Or maybe Collins Dictionary isn’t “an authoritative reference” either?

That definition is bullshit. You thinking Wikipedia is always right is one thing; you behaving like an arrogant (censored) based on faulty information is another.

Point clouds, lines (with or without hidden lines removal) or curves are all examples of things that can be done as a 2D projection from a 3D world representation.

Here is a much better definition: The process for converting a 3D representation into a 2D image using 2D projection.

This one also includes the case of a vector monitor, though there the actual 2D rendering is done in the monitor itself rather than in the (software) process.

Alright, another one then:

https://www.collinsdictionary.com/dictionary/english/render

“8. computing

to use colour and shading to make a digital image look three-dimensional and solid”

Oh, but Collins Dictionary is bullshit anyway with faulty information written by a bunch of arrogant (censored).

But hey, you’re right, the definition you pulled out of your own (censored) is bound to be the correct one since you came up with it.

And 8.8 or 16.16 fixed point math is what you use when you need speed over floating point math.

Or when you don’t have an FPU available.
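In the 8.8 and 16.16 formats the scale is a power of two (256 or 65536), so rescaling after a multiply is a right shift instead of a division, which matters on chips without a hardware divider. Below is a minimal Python sketch with hypothetical helper names, parameterised by the number of fractional bits. Note that Python’s `//` floors while C’s `/` truncates toward zero, so negative quotients can differ by one step from a C port.

```python
def to_q(value, frac_bits):
    """Encode a real number with frac_bits fractional bits (8 for 8.8, 16 for 16.16)."""
    return round(value * (1 << frac_bits))


def from_q(raw, frac_bits):
    return raw / (1 << frac_bits)


def q_mul(a, b, frac_bits):
    # The raw product has 2 * frac_bits fractional bits; shifting right drops the extra ones.
    # Adding half an LSB first rounds to nearest instead of always rounding down.
    # On an MCU the raw product needs double the word width (32 bits for 8.8, 64 for 16.16).
    return (a * b + (1 << (frac_bits - 1))) >> frac_bits


def q_div(a, b, frac_bits):
    # Shift the dividend up first so the quotient keeps frac_bits fractional bits.
    return (a << frac_bits) // b


for bits in (8, 16):   # 8.8 format, then 16.16
    a, b = to_q(1.5, bits), to_q(-2.25, bits)
    product = q_mul(a, b, bits)
    print(bits, from_q(product, bits), from_q(q_div(product, a, bits), bits))
```

The trade-off is resolution versus range: 8.8 steps by 1/256 and fits in 16 bits, while 16.16 steps by 1/65536 but needs 32-bit values.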

This reminded me of 3D rendering on a Canon point-and-shoot camera using custom CHDK:

https://www.youtube.com/watch?v=9qCjseHoXcw

It’s just a simple rasterization algorithm with some basic shading calculations.
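For readers who haven’t written one, here is a minimal Python sketch of a generic edge-function triangle rasterizer with flat Lambert shading. It is not the CHDK demo’s code, which isn’t shown here; the function names are hypothetical. Each pixel centre is tested against the triangle’s three edges, and the brightness is the clamped dot product of a unit surface normal with a unit light direction.

```python
def edge(ax, ay, bx, by, px, py):
    # Twice the signed area of triangle (a, b, p); its sign says which side of a->b p is on.
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tri, width, height):
    """Return the pixels whose centres lie inside (or on the edge of) a 2D triangle."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return set()                      # degenerate triangle covers nothing
    covered = set()
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5     # sample at the pixel centre
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside when all three edge values share the sign of the triangle's area,
            # which makes the test work for either winding order.
            if (area > 0 and min(w0, w1, w2) >= 0) or (area < 0 and max(w0, w1, w2) <= 0):
                covered.add((x, y))
    return covered


def lambert(normal, light):
    """Flat shading: brightness = max(0, n . l) for unit vectors n and l."""
    return max(0.0, sum(n * l for n, l in zip(normal, light)))


pixels = rasterize([(0, 0), (8, 0), (0, 8)], 8, 8)
shade = lambert((0.0, 0.0, 1.0), (0.0, 0.6, 0.8))   # fraction of full brightness
for y in range(8):
    print("".join("#" if (x, y) in pixels else "." for x in range(8)))
print("shade:", shade)
```

Testing every pixel on screen is the simplest form; practical versions only scan the triangle’s bounding box and update the edge values incrementally.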

Hmm. GraFORTH’s 3-D graphics ran pretty snappily on a 1 MHz 6502 (Apple ][), and in very little memory. I wonder how much trouble it would be to port or rewrite it for a modern microcontroller?

Shame no one wrote about this awesome dude.

https://www.youtube.com/watch?v=sNCqrylNY-0

Agreed. I hope that’s not because he didn’t use Arduino or Adafruit or other trendy parts, but only because it’s an old project. I’m still astonished by the overall efficiency. Is this guy out there?

I gotta admit I’m not without a degree of that unjustified aversion toward -inos and the whole “microcontroller DIY must be as simple as sandwiching yourself a couple of modules” concept, but lft’s project is somewhat more impressive IMO and people should hear more about it. AND IT ALSO HAS SOUND, which is nice. However, its implementation is on a different level (e.g. assembly magic here and there) and is probably not suitable as a basis for a 3D library or as a generic graphics backend for an 8-bit MCU platform.

I’ve seen this OLED module on the “SanDisk Sansa Clip+” MP3 player. It’s very hackable and you can install Rockbox on it. Perfect for playing some nice old chiptunes, or running any code you want on it, really.

Meh. Real-time 3D rendering was seen on the BBC, Spectrum, C64… (Elite and others). Hell, you even had Vu3D on the Speccy…

I think fixed point math is far better than floating point. Floating point loses its precision as you move away from zero. It gets bad very quickly, even if you’re using doubles. Floating point is the real hack here. ;)
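The effect described here can be checked directly: the gap between adjacent doubles grows with magnitude (relative precision stays roughly constant), whereas a fixed-point format has the same absolute step everywhere in its range. Below is a minimal Python sketch using `math.ulp` (available since Python 3.9), which returns the gap from a value to the next larger representable double.

```python
import math

# math.ulp(x) is the distance from x to the next larger representable double.
for x in (1.0, 1e3, 1e6, 1e12, 2.0**53):
    print(f"{x:>20.1f}  gap to next double: {math.ulp(x)}")

# At 2**53 the gap is 2.0, so adding 1.0 is rounded away entirely.
big = 2.0**53
print(big + 1.0 == big)

# A 16.16 fixed-point value, by contrast, always steps by 1/65536 across its range.
print(1 / 65536)
```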

BTW, another nice little trick is to use an unsigned 16-bit value for angles and define one degree as 182. That way, when the value wraps around, so does the angle, correctly and with minimal error.
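A minimal Python sketch of that angle trick, often called binary angle measurement: a full turn is 65536 units, so one degree is 65536 / 360 ≈ 182.04 units, which rounds to the 182 above. The `& 0xFFFF` masks mimic a `uint16_t` wrapping around. The 256-entry sine table indexed by the angle’s top byte and scaled by 16384 is an assumed design for illustration, and the function names are hypothetical.

```python
import math

FULL_TURN = 1 << 16                   # one revolution = 65536 units
UNITS_PER_DEGREE = FULL_TURN / 360    # about 182.04


def degrees_to_angle(deg):
    return round(deg * UNITS_PER_DEGREE) & 0xFFFF   # & 0xFFFF mimics uint16_t wraparound


def angle_add(a, b):
    return (a + b) & 0xFFFF           # passing 360 degrees wraps back to 0 automatically


# Hypothetical lookup table: 256 sine samples per turn, scaled by 2**14 so they fit in int16.
SINE_TABLE = [round(math.sin(2 * math.pi * i / 256) * (1 << 14)) for i in range(256)]


def angle_sin(angle):
    return SINE_TABLE[angle >> 8]     # the top 8 bits of the angle pick the table entry


heading = angle_add(degrees_to_angle(350), degrees_to_angle(20))
print(heading, heading / UNITS_PER_DEGREE)   # wrapped to roughly 10 degrees
print(angle_sin(degrees_to_angle(90)))       # full-scale positive sine
```

Because the wraparound is free, no code ever has to check for angles above 360 degrees, and the table lookup needs only a shift.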

Why use an Atmega? A 555 could do this.

Really?

I thought this was one of the things a 555 won’t do?

Sure. A 555 can do that.

You just need a Beowulf cluster of them…